This paper focuses on LiDAR-camera fusion for 3D object detection. If you find this project useful, please cite:

@article{liang2022bevfusion,

title={{BEVFusion: A Simple and Robust LiDAR-Camera Fusion Framework}},

author={Tingting Liang, Hongwei Xie, Kaicheng Yu, Zhongyu Xia, Zhiwei Lin, Yongtao Wang, Tao Tang, Bing Wang and Zhi Tang},

journal={arxiv},

year={2022}

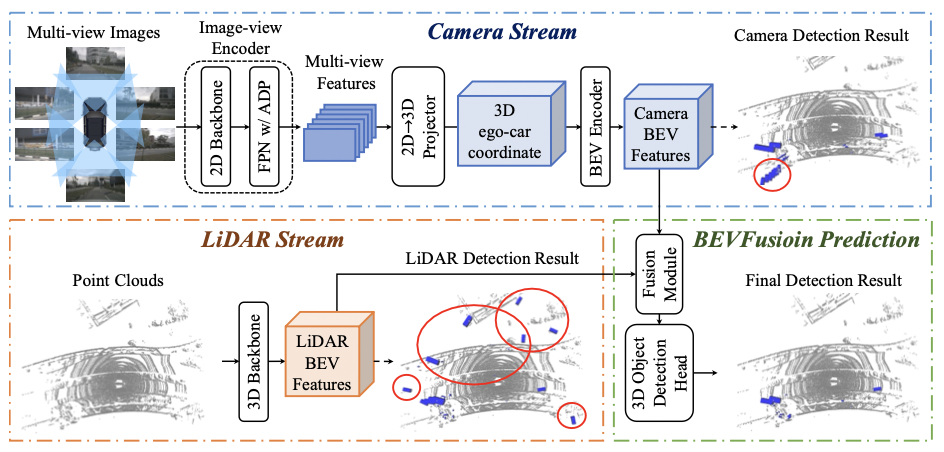

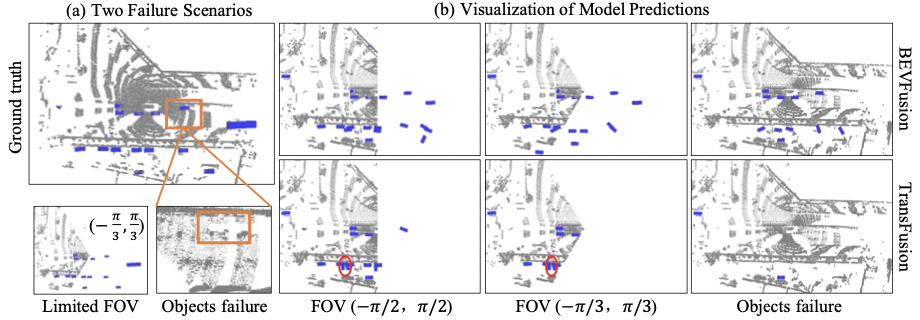

}Fusing the camera and LiDAR information has become a de-facto standard for 3D object detection tasks. Current methods rely on point clouds from the LiDAR sensor as queries to leverage the feature from the image space. However, people discover that this underlying assumption makes the current fusion framework infeasible to produce any prediction when there is a LiDAR malfunction, regardless of minor or major. This fundamentally limits the deployment capability to realistic autonomous driving scenarios. In contrast, we propose a surprisingly simple yet novel fusion framework, dubbed BEVFusion, whose camera stream does not depend on the input of LiDAR data, thus addressing the downside of previous methods. We empirically show that our framework surpasses the state-of-the-art methods under the normal training settings. Under the robustness training settings that simulate various LiDAR malfunctions, our framework significantly surpasses the state-of-the-art methods by 15.7% to 28.9% mAP. To the best of our knowledge, we are the first to handle realistic LiDAR malfunction and can be deployed to realistic scenarios without any post-processing procedure.

| Model | Head | 3D Backbone | 2D Backbone | mAP | NDS | Link |
|---|---|---|---|---|---|---|
| BEVFusion | TransFusion-L | VoxelNet | Dual-Swin-T | 69.2 | 71.8 | Detection |

| Model | Head | 3D Backbone | 2D Backbone | mAP | NDS | Model |
|---|---|---|---|---|---|---|
| BEVFusion | PointPillars | - | Dual-Swin-T | 22.9 | 31.1 | Model |
| BEVFusion | PointPillars | PointPillars | - | 35.1 | 49.8 | Model |
| BEVFusion | PointPillars | PointPillars | Dual-Swin-T | 53.5 | 60.4 | Model |
| BEVFusion | CenterPoint | - | Dual-Swin-T | 27.1 | 32.1 | - |
| BEVFusion | CenterPoint | VoxelNet | - | 57.1 | 65.4 | - |
| BEVFusion | CenterPoint | VoxelNet | Dual-Swin-T | 64.2 | 68.0 | - |
| BEVFusion | TransFusion-L | - | Dual-Swin-T | 22.7 | 26.1 | - |
| BEVFusion | TransFusion-L | VoxelNet | - | 64.9 | 69.9 | - |
| BEVFusion | TransFusion-L | VoxelNet | Dual-Swin-T | 67.9 | 71.0 | - |

The random box-dropping decisions for the validation set are stored in drop_foreground.json, which maps each LiDAR file name to whether box dropping is applied (True) or not (False).
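As a rough illustration of how such a file could be consumed, the sketch below parses the mapping and lists the frames marked for dropping. It assumes drop_foreground.json is a flat JSON object of the form `{"<LiDAR file name>": true/false}`, as the description suggests; the helper names and the sample file names are hypothetical and not part of the repository.

```python
import json
from typing import Dict, List


def parse_drop_flags(text: str) -> Dict[str, bool]:
    """Parse JSON text into a {LiDAR file name: drop flag} dictionary.

    Assumption: the JSON is a flat object whose values are booleans.
    """
    raw = json.loads(text)
    return {name: bool(flag) for name, flag in raw.items()}


def load_drop_flags(path: str) -> Dict[str, bool]:
    """Read the mapping from a file such as drop_foreground.json."""
    with open(path, "r") as f:
        return parse_drop_flags(f.read())


def dropped_files(flags: Dict[str, bool]) -> List[str]:
    """Return the sorted file names whose flag is True."""
    return sorted(name for name, flag in flags.items() if flag)


def drop_ratio(flags: Dict[str, bool]) -> float:
    """Fraction of frames marked for dropping (0.0 for an empty mapping)."""
    return sum(flags.values()) / len(flags) if flags else 0.0


if __name__ == "__main__":
    # Hypothetical sample content; real file names will differ.
    sample = '{"frame_a.pcd.bin": true, "frame_b.pcd.bin": false}'
    flags = parse_drop_flags(sample)
    print(dropped_files(flags), drop_ratio(flags))
```

On the real file, `load_drop_flags("drop_foreground.json")` would replace the in-memory sample, provided the layout assumption holds.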

| Model | Limited FOV | Objects failure | Head | 3D Backbone | 2D Backbone | mAP | NDS | Model |
|---|---|---|---|---|---|---|---|---|
| BEVFusion | (-π/3,π/3) | False | PointPillars | PointPillars | Dual-Swin-T | 33.5 | 42.1 | Model |
| BEVFusion | (-π/2,π/2) | False | PointPillars | PointPillars | Dual-Swin-T | 36.8 | 45.8 | Model |
| BEVFusion | - | True | PointPillars | PointPillars | Dual-Swin-T | 41.6 | 51.9 | Model |
| BEVFusion | (-π/3,π/3) | False | CenterPoint | VoxelNet | Dual-Swin-T | 40.9 | 49.9 | - |
| BEVFusion | (-π/2,π/2) | False | CenterPoint | VoxelNet | Dual-Swin-T | 45.5 | 54.9 | - |
| BEVFusion | - | True | CenterPoint | VoxelNet | Dual-Swin-T | 54.0 | 61.6 | - |
| BEVFusion | (-π/3,π/3) | False | TransFusion-L | VoxelNet | Dual-Swin-T | 41.5 | 50.8 | - |
| BEVFusion | (-π/2,π/2) | False | TransFusion-L | VoxelNet | Dual-Swin-T | 46.4 | 55.8 | - |
| BEVFusion | - | True | TransFusion-L | VoxelNet | Dual-Swin-T | 50.3 | 57.6 | - |
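The Limited FOV settings in the table restrict the LiDAR input to an azimuth range such as (-π/3, π/3). The sketch below shows one way such a restriction could be simulated. It is an illustration under stated assumptions, not the repository's implementation: points are taken to be tuples starting with (x, y, z), the x axis is assumed to point forward, azimuth is computed as atan2(y, x), and the function name is hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def filter_points_by_fov(
    points: Sequence[Sequence[float]],
    fov: Tuple[float, float],
) -> List[Sequence[float]]:
    """Keep only points whose azimuth lies strictly inside the open range fov.

    Assumptions (not taken from the repository): each point starts with
    (x, y, ...), x points forward, and azimuth = atan2(y, x) in radians.
    """
    lo, hi = fov
    kept = []
    for p in points:
        azimuth = math.atan2(p[1], p[0])
        if lo < azimuth < hi:
            kept.append(p)
    return kept


if __name__ == "__main__":
    cloud = [
        (1.0, 0.0, 0.0),   # straight ahead, azimuth 0
        (1.0, 1.0, 0.0),   # azimuth pi/4
        (1.0, 2.0, 0.0),   # azimuth about 1.107 rad, between pi/3 and pi/2
        (-1.0, 0.0, 0.0),  # directly behind, azimuth pi
    ]
    narrow = filter_points_by_fov(cloud, (-math.pi / 3, math.pi / 3))
    wide = filter_points_by_fov(cloud, (-math.pi / 2, math.pi / 2))
    print(len(narrow), len(wide))
```

A real point cloud would normally be a NumPy array, where the same test can be vectorised with `numpy.arctan2` and a boolean mask; plain Python is used here only to keep the sketch dependency-free.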

| Model | 2D Backbone | bbox_mAP | segm_mAP | Model |
|---|---|---|---|---|
| Mask R-CNN | Dual-Swin-T | 56.0 | 46.1 | Model |

Installation

Please refer to getting_started.md for installation of mmdet3d.

Recommended environments:

python==3.8.3

mmdet==2.11.0 (please install mmdet in mmdetection-2.11.0)

mmcv==1.4.0

mmdet3d==0.11.0

numpy==1.19.2

torch==1.7.0

torchvision==0.8.0

Benchmark Evaluation and Training

Please refer to data_preparation.md to prepare the data, then follow the instructions there to train our model. All detection configurations are included in configs.
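Before launching a training run, it can help to confirm that the installed packages match the recommended versions listed under Installation. The following is a minimal sketch using the standard-library `importlib.metadata` module (Python 3.8+). It assumes each package is installed under a distribution name identical to the name in that list, which may not hold in every setup (for example, mmcv is often distributed as `mmcv-full`); the helper names are hypothetical.

```python
from importlib import metadata
from typing import Callable, Dict, Optional

# Versions copied from the recommended environment list.
RECOMMENDED = {
    "mmdet": "2.11.0",
    "mmcv": "1.4.0",
    "mmdet3d": "0.11.0",
    "numpy": "1.19.2",
    "torch": "1.7.0",
    "torchvision": "0.8.0",
}


def installed_version(package: str) -> Optional[str]:
    """Return the installed version string, or None if the package is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def find_mismatches(
    recommended: Dict[str, str],
    lookup: Callable[[str], Optional[str]] = installed_version,
) -> Dict[str, Optional[str]]:
    """Map each package that is missing or mismatched to the version found."""
    mismatches = {}
    for package, wanted in recommended.items():
        found = lookup(package)
        # Ignore local build tags, so "1.7.0+cu110" still matches "1.7.0".
        if found is None or found.split("+")[0] != wanted:
            mismatches[package] = found
    return mismatches


if __name__ == "__main__":
    for package, found in find_mismatches(RECOMMENDED).items():
        print(f"{package}: recommended {RECOMMENDED[package]}, found {found}")
```

An empty result means every listed package matches; anything printed is worth checking before training.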

# training example for bevfusion-pointpillar

# train nuimage for camera stream backbone and neck.

./tools/dist_train.sh configs/bevfusion/cam_stream/mask_rcnn_dbswin-t_fpn_3x_nuim_cocopre.py 8

# first train camera stream

./tools/dist_train.sh configs/bevfusion/cam_stream/bevf_pp_4x8_2x_nusc_cam.py 8

# then train LiDAR stream

./tools/dist_train.sh configs/bevfusion/lidar_stream/hv_pointpillars_secfpn_sbn-all_4x8_2x_nus-3d.py 8

# then train BEVFusion

./tools/dist_train.sh configs/bevfusion/bevf_pp_2x8_1x_nusc.py 8

### evaluation example for bevfusion-pointpillar

./tools/dist_test.sh configs/bevfusion/bevf_pp_2x8_1x_nusc.py ./work_dirs/bevfusion_pp.pth 8 --eval bbox

We sincerely thank the authors of mmdetection3d, TransFusion, LSS, CenterPoint for open sourcing their methods.