When training classifiers based on BoW or TF/IDF, we operated on high-dimensional bag-of-words vectors of length `vocab_size`, explicitly converting low-dimensional positional representation vectors into a sparse one-hot representation. This one-hot representation, however, is not memory-efficient. In addition, each word is treated independently of the others, i.e. one-hot encoded vectors do not express any semantic similarity between words.

The idea of embedding is to represent words by lower-dimensional dense vectors that reflect the semantic meaning of a word. We will later discuss how to build meaningful word embeddings, but for now let's just think of embeddings as a way to lower the dimensionality of a word vector.

The embedding layer takes a word as input and produces an output vector of the specified `embedding_size`. In a sense, it is very similar to a Linear layer, but instead of taking a one-hot encoded vector, it takes a word number (the word's index in the vocabulary) as input, which lets us avoid creating large one-hot encoded vectors.
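To make the comparison with a Linear layer concrete, here is a minimal framework-free sketch. The table values and the helper names (`one_hot`, `linear_on_one_hot`, `embedding_lookup`) are made up for illustration; in a real network the table holds learned weights and the framework's own embedding layer does the lookup.

```python
# Toy embedding table: one row per word index (vocab_size = 4, embedding_size = 3).
# The numbers are arbitrary placeholders, not trained weights.
embedding_table = [
    [0.1, 0.2, 0.3],
    [0.4, 0.5, 0.6],
    [0.7, 0.8, 0.9],
    [1.0, 1.1, 1.2],
]


def one_hot(index, vocab_size):
    """Return a one-hot list with 1.0 at position `index`."""
    return [1.0 if i == index else 0.0 for i in range(vocab_size)]


def linear_on_one_hot(index, table):
    """Multiply a one-hot vector by the weight matrix, like a bias-free Linear layer."""
    x = one_hot(index, len(table))
    dim = len(table[0])
    return [
        sum(x[row] * table[row][col] for row in range(len(table)))
        for col in range(dim)
    ]


def embedding_lookup(index, table):
    """Pick the row directly, without ever building the one-hot vector."""
    return list(table[index])


word_index = 2
# Both calls select the same row of the table.
print(linear_on_one_hot(word_index, embedding_table))
print(embedding_lookup(word_index, embedding_table))
```

The lookup version does the same job with a single row access, which is why an embedding layer scales to large vocabularies where a one-hot input would not.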

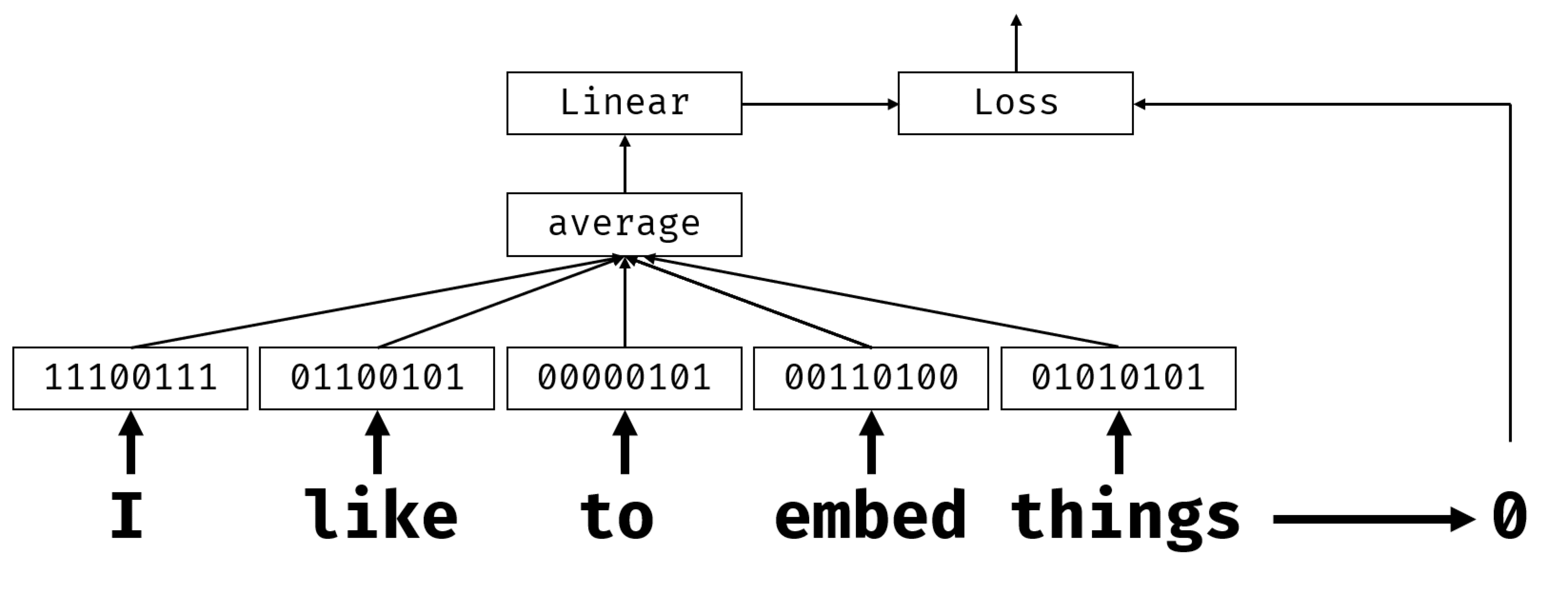

By using an embedding layer as the first layer in our classifier network, we can switch from a bag-of-words model to an embedding bag model, where we first convert each word in our text into its corresponding embedding, and then compute some aggregate function over all those embeddings, such as sum, average or max.

Image by the author
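The embedding bag idea can be sketched in a few lines of plain Python. The function name `embedding_bag`, its `mode` argument and the toy table are assumptions chosen for illustration, not the API of any particular framework.

```python
def embedding_bag(word_indices, table, mode="mean"):
    """Aggregate the embeddings of all words in a text into one fixed-size vector."""
    if not word_indices:
        raise ValueError("need at least one word index")
    vectors = [table[i] for i in word_indices]
    # Regroup the values so that each tuple holds one embedding dimension.
    columns = list(zip(*vectors))
    if mode == "sum":
        return [sum(col) for col in columns]
    if mode == "mean":
        return [sum(col) / len(col) for col in columns]
    if mode == "max":
        return [max(col) for col in columns]
    raise ValueError(f"unknown mode: {mode}")


# Toy table with vocab_size = 3 and embedding_size = 2 (arbitrary values).
table = [
    [1.0, 0.0],
    [0.0, 2.0],
    [3.0, 1.0],
]

text = [0, 2, 2]  # a "sentence" given as word indices
for mode in ("sum", "mean", "max"):
    print(mode, embedding_bag(text, table, mode))
```

Whatever the length of the input text, the result always has `embedding_size` elements, so it can be fed straight into a classifier layer.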

Continue your learning in the following notebooks:

The embedding layer learned to map words to vector representations, but these representations did not necessarily carry much semantic meaning. It would be nice to learn a vector representation such that similar words or synonyms correspond to vectors that are close to each other in terms of some vector distance (e.g. Euclidean distance).
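As a rough illustration of what "close to each other" means, the sketch below computes Euclidean distances between hand-picked toy vectors. The vectors and the helper `nearest` are invented for this example and do not come from a trained model.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Hand-picked toy vectors: "good" and "great" are deliberately placed close together.
toy_vectors = {
    "good": [0.9, 0.8, 0.1],
    "great": [1.0, 0.9, 0.2],
    "table": [0.1, 0.2, 0.9],
}


def nearest(word, vectors):
    """Return the other word whose vector is closest to `word`."""
    return min(
        (w for w in vectors if w != word),
        key=lambda w: euclidean_distance(vectors[word], vectors[w]),
    )


print(nearest("good", toy_vectors))
```

With semantic embeddings, the same nearest-neighbour query over a real vocabulary would be expected to return synonyms or related words.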

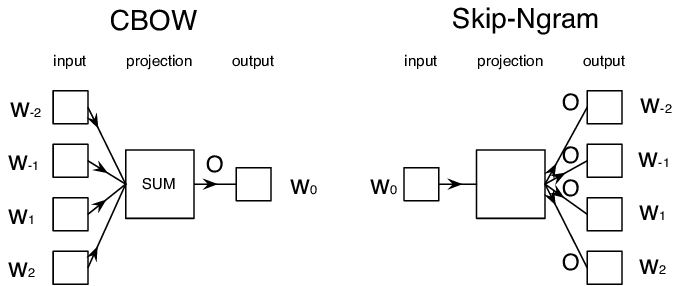

To do that, we need to pre-train our embedding model on a large collection of text in a specific way. One way to train semantic embeddings is called Word2Vec. It is based on two main architectures that are used to produce a distributed representation of words:

- Continuous bag-of-words (CBoW): in this architecture, we train the model to predict a word from its surrounding context. Given the n-gram $(W_{-2},W_{-1},W_0,W_1,W_2)$, the goal of the model is to predict $W_0$ from $(W_{-2},W_{-1},W_1,W_2)$.

- Continuous skip-gram is the opposite of CBoW: the model uses the current word $W_0$ to predict the surrounding window of context words.

CBoW is faster, while skip-gram is slower, but does a better job of representing infrequent words.

Image from this paper
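The difference between the two architectures shows up in how training examples are built from a sentence. The sketch below only generates the (input, target) examples with a window of 2 on each side; it does not train a model, and the function names are hypothetical.

```python
def cbow_examples(tokens, window=2):
    """Build (context_words, target_word) examples: the context predicts the centre word."""
    examples = []
    for i, target in enumerate(tokens):
        left = tokens[max(0, i - window):i]
        right = tokens[i + 1:i + 1 + window]
        context = left + right
        if context:
            examples.append((context, target))
    return examples


def skipgram_examples(tokens, window=2):
    """Build (centre_word, context_word) pairs: the centre word predicts each neighbour."""
    pairs = []
    for context, centre in cbow_examples(tokens, window):
        pairs.extend((centre, neighbour) for neighbour in context)
    return pairs


tokens = "i like to play football".split()

# CBoW: one example per position, with several input words and one target.
print(cbow_examples(tokens)[2])

# Skip-gram: the same position yields one pair per context word.
print([pair for pair in skipgram_examples(tokens) if pair[0] == "to"])
```

Skip-gram produces several training pairs per position where CBoW produces one, which is consistent with skip-gram being the slower of the two to train.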

Word2Vec pre-trained embeddings (as well as other similar models, such as GloVe) can also be used in place of the embedding layer in neural networks. However, we need to deal with vocabularies, because the vocabulary used to pre-train Word2Vec/GloVe is likely to differ from the vocabulary in our text corpus. Have a look at the above notebooks to see how this problem can be resolved.
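The notebooks remain the reference for how this lesson handles the mismatch. As a sketch of one common approach, assumed here rather than taken from them, we can build the embedding matrix row by row: copy the pre-trained vector when the word is known, and fall back to small random values otherwise. The function `build_embedding_matrix` and the toy `pretrained` dictionary are hypothetical.

```python
import random


def build_embedding_matrix(vocab, pretrained, dim, seed=0):
    """Return (matrix, missing): one row per word of `vocab`, in the same order.

    Words found in `pretrained` reuse their vector; the rest get small random
    values and are reported in `missing` so they can be inspected or fine-tuned.
    """
    rng = random.Random(seed)
    matrix, missing = [], []
    for word in vocab:
        if word in pretrained:
            matrix.append(list(pretrained[word]))
        else:
            missing.append(word)
            matrix.append([rng.uniform(-0.1, 0.1) for _ in range(dim)])
    return matrix, missing


# Toy stand-in for vectors loaded from a pre-trained model (values are made up).
pretrained = {
    "play": [0.2, 0.7, 0.1],
    "theatre": [0.3, 0.6, 0.2],
}
our_vocab = ["play", "kaggle", "theatre"]

matrix, missing = build_embedding_matrix(our_vocab, pretrained, dim=3)
print(missing)      # words that had no pre-trained vector
print(len(matrix))  # one row per word in our vocabulary
```

The resulting matrix follows the word indices of our own vocabulary, so it can be used as the initial weights of the embedding layer.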

One key limitation of traditional pretrained embedding representations such as Word2Vec is the problem of word sense disambiguation. While pretrained embeddings can capture some of the meaning of words in context, every possible meaning of a word is encoded into the same embedding. This can cause problems in downstream models, since many words such as the word 'play' have different meanings depending on the context they are used in.

For example, the word 'play' has quite different meanings in these two sentences:

- I went to a play at the theatre.

- John wants to play with his friends.

The pretrained embeddings above represent both of these meanings of the word 'play' in the same embedding. To overcome this limitation, we need to build embeddings based on a language model, which is trained on a large corpus of text and knows how words can be put together in different contexts. Discussing contextual embeddings is out of scope for this tutorial, but we will come back to them when talking about language models later in the course.

In this lesson, you discovered how to build and use embedding layers in TensorFlow and PyTorch to better reflect the semantic meanings of words.

Word2Vec has been used for some interesting applications, including generating song lyrics and poetry. Take a look at this article which walks through how the author used Word2Vec to generate poetry. Watch this video by Daniel Shiffman as well to discover a different explanation of this technique. Then try to apply these techniques to your own text corpus, perhaps sourced from Kaggle.

Read through this paper on Word2Vec: Efficient Estimation of Word Representations in Vector Space