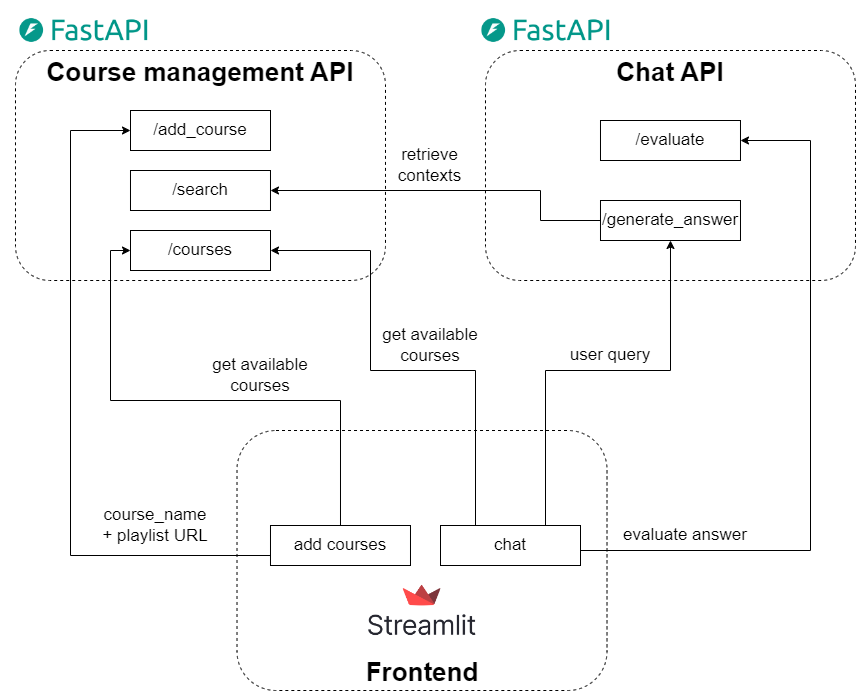

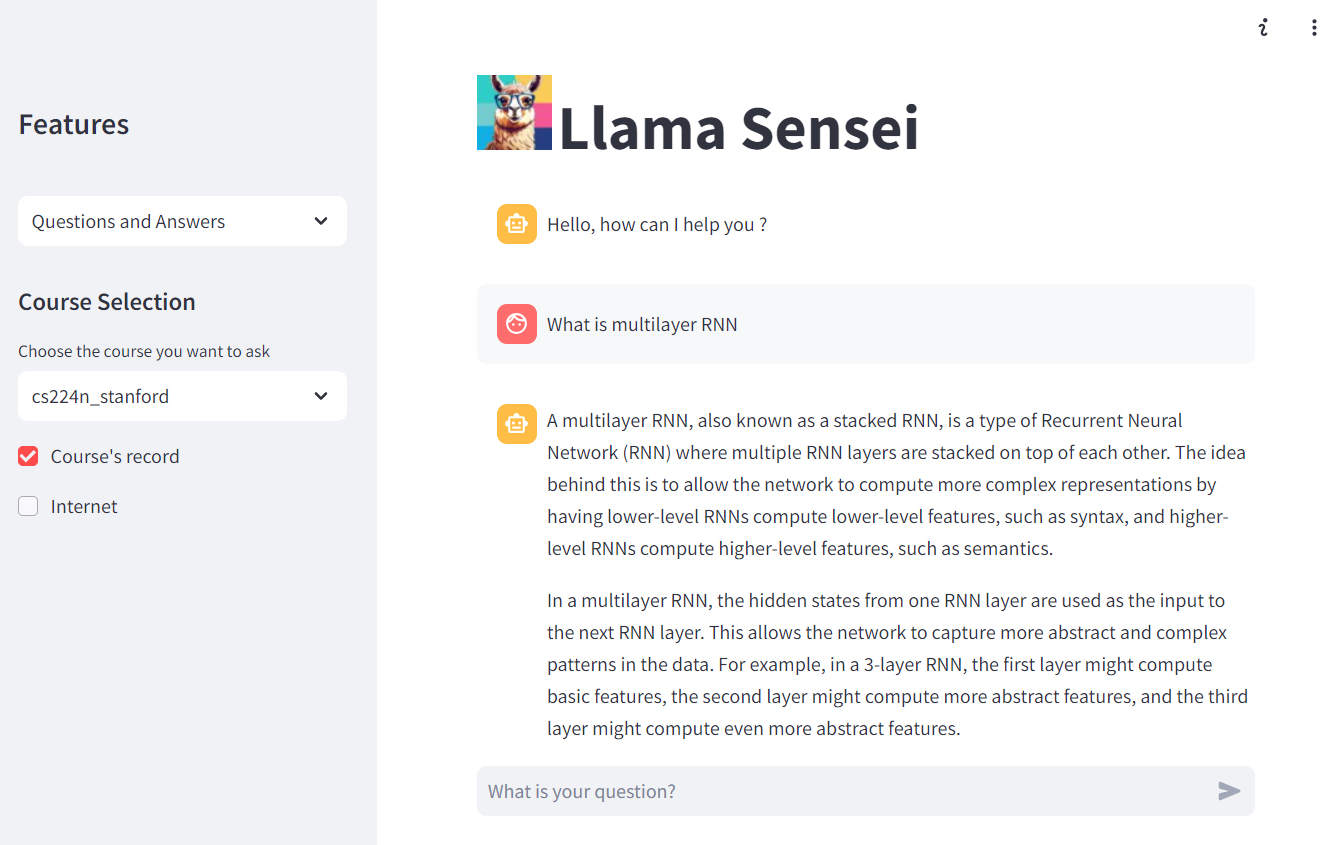

Llama Sensei is a chatbot application designed to enhance the learning experience by giving instant answers to users' questions about lecture content on online learning platforms (e.g. YouTube). It uses Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) to deliver accurate and relevant information. It also provides precise links to the reference sources that RAG uses, which makes the answers more transparent and easier to trust.


Llama Sensei offers two main features: Chat and Add New Course.

- Context Retrieval: Retrieve context from the selected course, the internet, or both, to provide relevant answers.

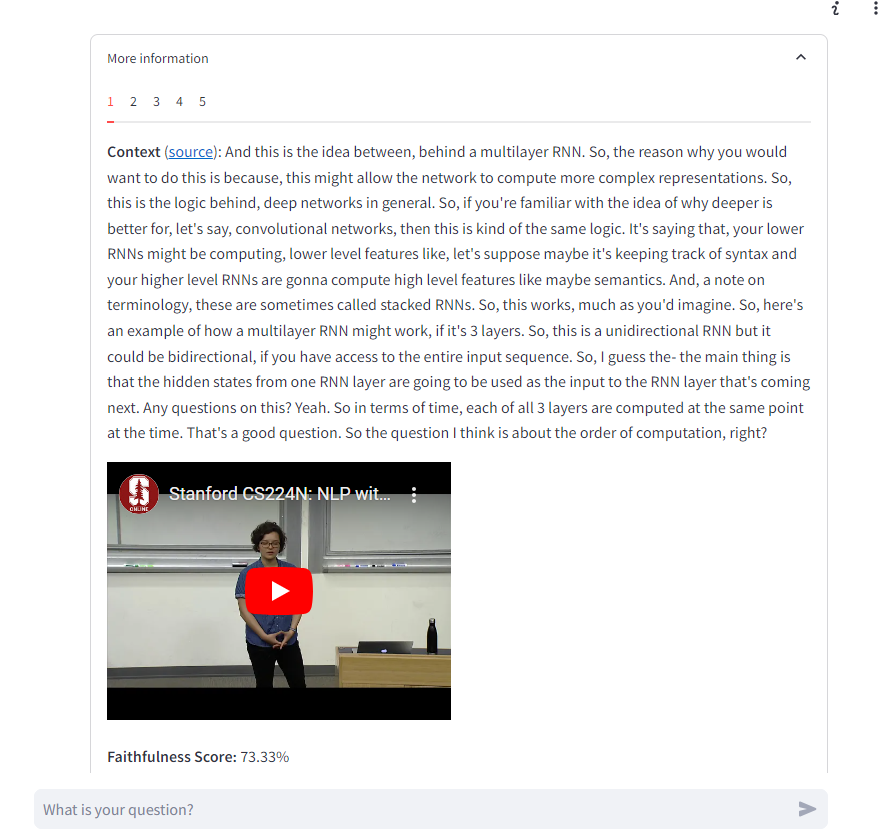

- Reference Linking: Display reference links retrieved from the knowledge base and web search results, including precise timestamps for video content (e.g., specific segments in YouTube videos).

- Automated Answer Grading: Answers are automatically graded by our application to assess their relevance and reliability.

- Create New Course: Create a new course within the application by providing the course's URL (currently only YouTube playlist URLs are supported).

- Add Videos: Add new videos to an existing course, allowing for continuous updates and expansion of course content.

- YouTube lecture video crawling: download YouTube playlists that contain lecture videos using yt-dlp.

- Speech-to-Text: transcribe lecture videos into timestamped transcripts using the Deepgram API.

- Text Processing: preprocess and handle text data using NLTK.

- Text Embedding: embed text data using sentence_transformers.

- Vector Database: store and retrieve encoded lecture transcripts for RAG using ChromaDB.

- Internet context search: retrieve external context from the internet for RAG using DuckDuckGo.

- LLM API: use Groq for fast LLM inference with various models (Llama, Mistral, etc.) and real-time answer streaming.

- Scoring and Evaluation: use the Ragas framework to evaluate the RAG pipeline and LLM answers.

- User Interface: implement interactive front-end using Streamlit.

- Backend APIs: implement back-end APIs using FastAPI.

- Containerization: use Docker for easy deployment.
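To make the retrieval and reference-linking idea concrete, here is a minimal sketch in plain Python. It is not the project's implementation: the real pipeline embeds text with sentence_transformers and stores it in ChromaDB, whereas this example uses hand-written toy vectors and a brute-force cosine search. The `Chunk` schema, the function names, and the video IDs are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Chunk:
    """A transcript segment with its source video and start time (hypothetical schema)."""
    video_id: str
    start_seconds: float
    text: str
    embedding: list[float]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def timestamped_url(chunk: Chunk) -> str:
    """Build a YouTube link that starts playback at the chunk's start time."""
    # The `t` query parameter takes a start offset in whole seconds.
    return f"https://www.youtube.com/watch?v={chunk.video_id}&t={int(chunk.start_seconds)}s"


def retrieve(query_embedding: list[float], chunks: list[Chunk], top_k: int = 2):
    """Return the top_k (score, chunk) pairs, most similar first."""
    scored = [(cosine_similarity(query_embedding, c.embedding), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


# Toy data: in practice the embeddings would come from an embedding model.
chunks = [
    Chunk("VIDEO_A", 0.0, "Course logistics", [1.0, 0.0, 0.0]),
    Chunk("VIDEO_A", 754.2, "Gradient descent update rule", [0.0, 1.0, 0.0]),
    Chunk("VIDEO_B", 93.8, "Learning rate and convergence", [0.0, 0.8, 0.6]),
]
query_embedding = [0.0, 1.0, 0.1]

results = retrieve(query_embedding, chunks)
for score, chunk in results:
    print(f"{score:.2f}  {chunk.text}  {timestamped_url(chunk)}")
```

Keeping the start time alongside each stored chunk is what allows an answer to link to the exact moment in a lecture rather than to the whole video.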

This project supports deployment with Docker Compose. To run the application locally, follow these steps:

- Clone the repository and move into it: `git clone https://github.com/Croissant-Team-Cinnamon-Bootcamp-2024/FinalProject.git llama-sensei`, then `cd llama-sensei`.

- Get the API keys, fill in the environment variables in the `.env.example` file, and rename the file to `.env`:

  - `DG_API_KEY`: get from https://deepgram.com/

  - `GROQ_API_KEY`: get from https://groq.com/

- Start the application by running `docker compose up -d`.

- While the `course_management_api` service is building, yt-dlp will ask you for authorization with this notification: `[youtube+oauth2] To give yt-dlp access to your account, go to https://www.google.com/device and enter code XXX-YYY-ZZZ`. Follow the provided link and enter the code; this allows yt-dlp to download YouTube lecture videos.

- Wait until all the services are running, then go to `localhost:8081` and enjoy!

- (Optional, for demo purposes) Download the processed course transcript (Stanford CS229) here and add the course to the database by running `python scripts/create_collection.py`. Change the path to the transcript inside `scripts/create_collection.py` first.
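Before starting the containers, it can help to confirm that the API keys are actually filled in. The following is a small, hypothetical helper (it is not part of the repository) that parses `.env`-style text and reports which required keys are missing or empty. It checks only the two keys named in the steps above; the real `.env.example` may define more.

```python
REQUIRED_KEYS = ("DG_API_KEY", "GROQ_API_KEY")  # keys named in the setup steps


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Strip surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip("'\"")
    return values


def missing_keys(text: str, required=REQUIRED_KEYS) -> list[str]:
    """Return the required keys that are absent or have an empty value."""
    values = parse_env(text)
    return [key for key in required if not values.get(key)]


# Example with inline text; GROQ_API_KEY is present but empty.
sample = "# API keys\nDG_API_KEY=abc123\nGROQ_API_KEY=\n"
print(missing_keys(sample))  # ['GROQ_API_KEY']
```

To check a real file, pass its contents instead of `sample`, for example `missing_keys(open(".env").read())`.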

- Navigate to the "Add Course" page in the application.

- Add course materials by providing the URL of the resources.

- Check your content before adding it to the database, then press Submit.

- The application will process and store the course content for future queries.

- Go to the "QA" section.

- Select a course from the database.

- Choose whether to retrieve context from the internal database (added lectures), the internet, or both.

- Enter your question and submit.

- View the AI-generated answer along with references from the knowledge base or external resources.
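The source-selection step above can be pictured as follows. This is an illustrative sketch only, not the application's code: `gather_context` and the two stand-in search functions are hypothetical names, and in the real application the searches would be backed by the vector database and a web search rather than by hard-coded strings.

```python
from typing import Callable

# A searcher takes a question and returns a list of text passages.
Searcher = Callable[[str], list[str]]


def gather_context(
    question: str,
    use_internal: bool,
    use_internet: bool,
    search_internal: Searcher,
    search_internet: Searcher,
) -> list[dict]:
    """Collect passages from the selected sources, tagging each with its origin."""
    context = []
    if use_internal:
        context += [{"source": "internal", "text": t} for t in search_internal(question)]
    if use_internet:
        context += [{"source": "internet", "text": t} for t in search_internet(question)]
    return context


# Stand-in searchers so the sketch runs without any external service.
def fake_course_search(question: str) -> list[str]:
    return [f"Lecture transcript passage about {question}"]


def fake_web_search(question: str) -> list[str]:
    return [f"Web page snippet about {question}"]


both = gather_context("gradient descent", True, True, fake_course_search, fake_web_search)
for item in both:
    print(item["source"], "->", item["text"])
```

Tagging each passage with its source is one way to let the interface show whether a reference came from the course or from the web.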

The project structure is organized as follows:

    📦 llama-sensei
    ├─ .github/                  # GitHub Actions configuration
    │  └─ ...
    ├─ app/
    │  └─ llama_sensei/
    │     ├─ backend/
    │     │  ├─ add_courses/
    │     │  └─ qa/
    │     └─ frontend/
    │        ├─ pages/
    │        └─ main.py
    ├─ assets/
    │  └─ ...
    ├─ scripts/
    │  └─ ...
    ├─ tests/
    │  └─ unit/
    ├─ .env.example              # example environment variables
    ├─ .pre-commit-config.yaml
    ├─ README.md
    ├─ docker-compose.yaml
    ├─ requirements-dev.txt
    └─ setup.py

If you find any issues or have suggestions for improvements, please feel free to open an issue.

- Technical:

  - Optimize the efficiency and latency of the models (which we have not formally measured)

- More features:

  - Implement a better user interface (e.g. using React)

  - Save answer history

  - Get user feedback: like/dislike buttons for app evaluation

We maintain high code quality standards through:

- Continuous Integration (CI)

- Coding conventions

- Docstrings

- Pre-commit hooks

- Unit testing

This project was developed by @Croissant team.