These are experiments created while participating in Jeremy Howard's absolutely terrific "Practical Deep Learning for Coders: Part 2" course.

Here's a listing of what each notebook contains:

- article_summarizer: Using the T5 model to summarize PDF articles from arXiv.

- diff_edit: An attempt to implement the DiffEdit paper. WIP.

- diffusers_music: Explores using the Hugging Face diffusers library (specifically DanceDiffusionPipeline) to generate music.

-

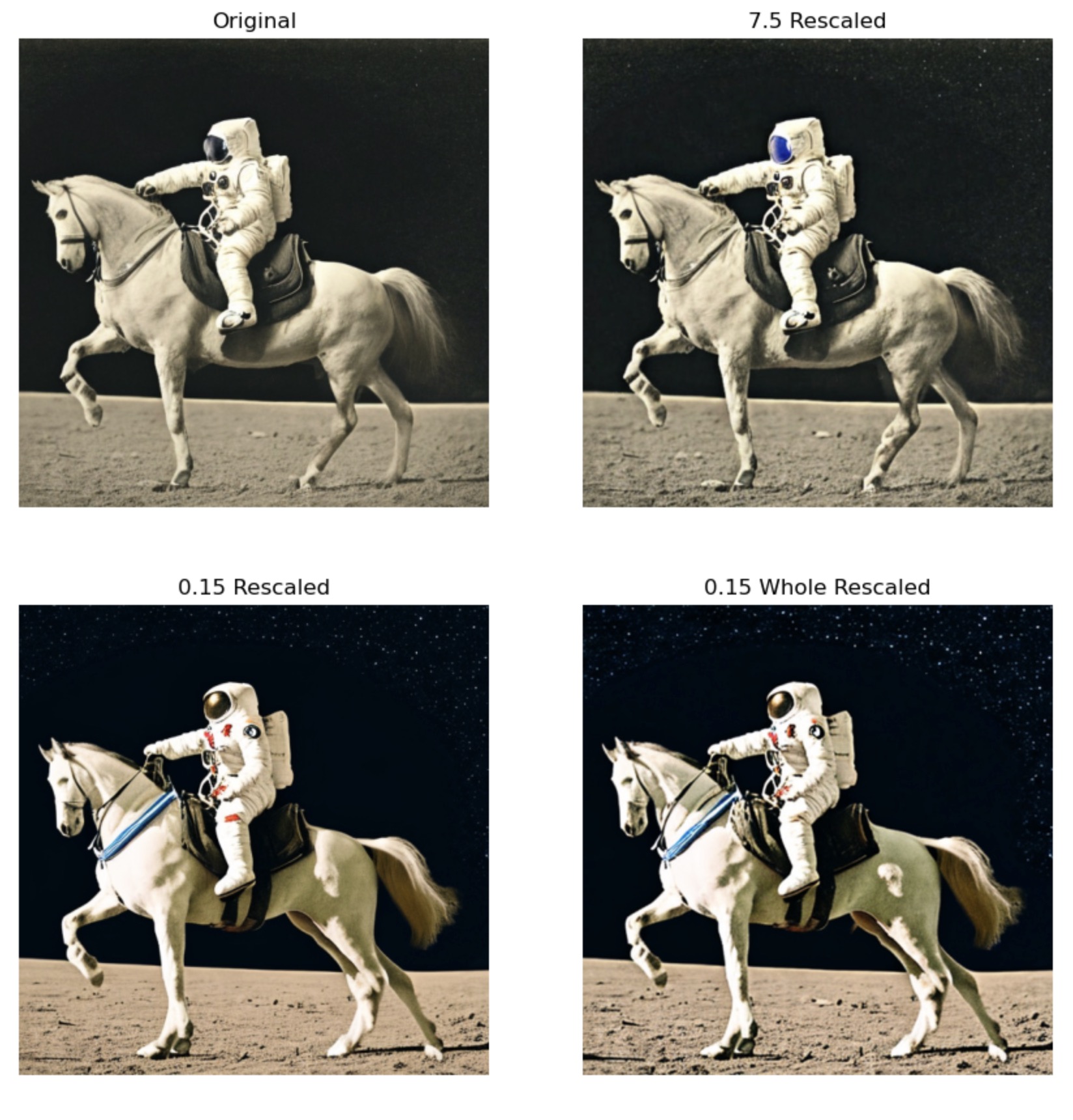

guidance_variations: Trying out various guidance value techniques discussed on the FastAI forums to compare and contrast each method to see which one works best (for a given value of "best").

- mubert_music: Generates music using the Mubert API and a text prompt. Also explores converting an image to a text description using BLIP/CLIP and then passing that description as the prompt for music generation, which essentially gives you an image-to-music pipeline.

- prompt_editing: Experiments in editing elements of an image created with Stable Diffusion by passing in the seed used for the original image, the original prompt, and a new edited prompt containing the changes you want. This is still a WIP: the concept worked on the first try, but I'm not sure whether that was a fluke. Needs more testing.
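The seed-plus-two-prompts idea can be sketched roughly as follows. This is a minimal illustration, not the notebook's actual code: `generate_pair` and `stable_diffusion_generate` are hypothetical helper names, and the wrapper assumes a diffusers `StableDiffusionPipeline` has already been loaded elsewhere. The notebook may well do more than simply re-run generation with the same seed.

```python
from typing import Any, Callable, Tuple


def generate_pair(
    generate: Callable[[str, int], Any],
    seed: int,
    original_prompt: str,
    edited_prompt: str,
) -> Tuple[Any, Any]:
    """Call generate(prompt, seed) twice with the same seed, changing only the prompt."""
    original = generate(original_prompt, seed)
    edited = generate(edited_prompt, seed)
    return original, edited


def stable_diffusion_generate(pipe: Any) -> Callable[[str, int], Any]:
    """Wrap an already-loaded Stable Diffusion pipeline as generate(prompt, seed).

    Assumption: `pipe` behaves like diffusers' StableDiffusionPipeline.
    """
    import torch  # imported lazily so generate_pair itself has no heavy dependencies

    def generate(prompt: str, seed: int) -> Any:
        # A fresh, identically seeded generator gives both calls the same starting noise.
        generator = torch.Generator(device="cpu").manual_seed(seed)
        return pipe(prompt, generator=generator).images[0]

    return generate
```

With a loaded pipeline `pipe`, a call might look like `generate_pair(stable_diffusion_generate(pipe), 1234, "a photo of a cat", "a photo of a cat wearing a hat")`, where the seed and prompts are made-up examples.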

Here's a bunch of tests where the same prompt (whose output is the first image in the grid) is edited in various ways to get secondary images that are fairly similar to the first:

- prompt_edit_image: A variation on the prompt_editing notebook above that takes an input image and edits it based on an input prompt. Sample output below: