Aggregating and indexing all on-chain event, ticket, and protocol data.

The GET Protocol subgraph acts as the complete data interface to all on-chain data. Its purpose is to properly aggregate and index all static and time-series data passing through the protocol.

- Cross-sectional data is compiled to its latest update and will always return the latest state.

- Aggregate data is aggregated across all time. Like cross-sectional data, there should be one record per entity.

- Time-series data consists of individual, granular events of raw data, stored either as individual records or within a time bucket.

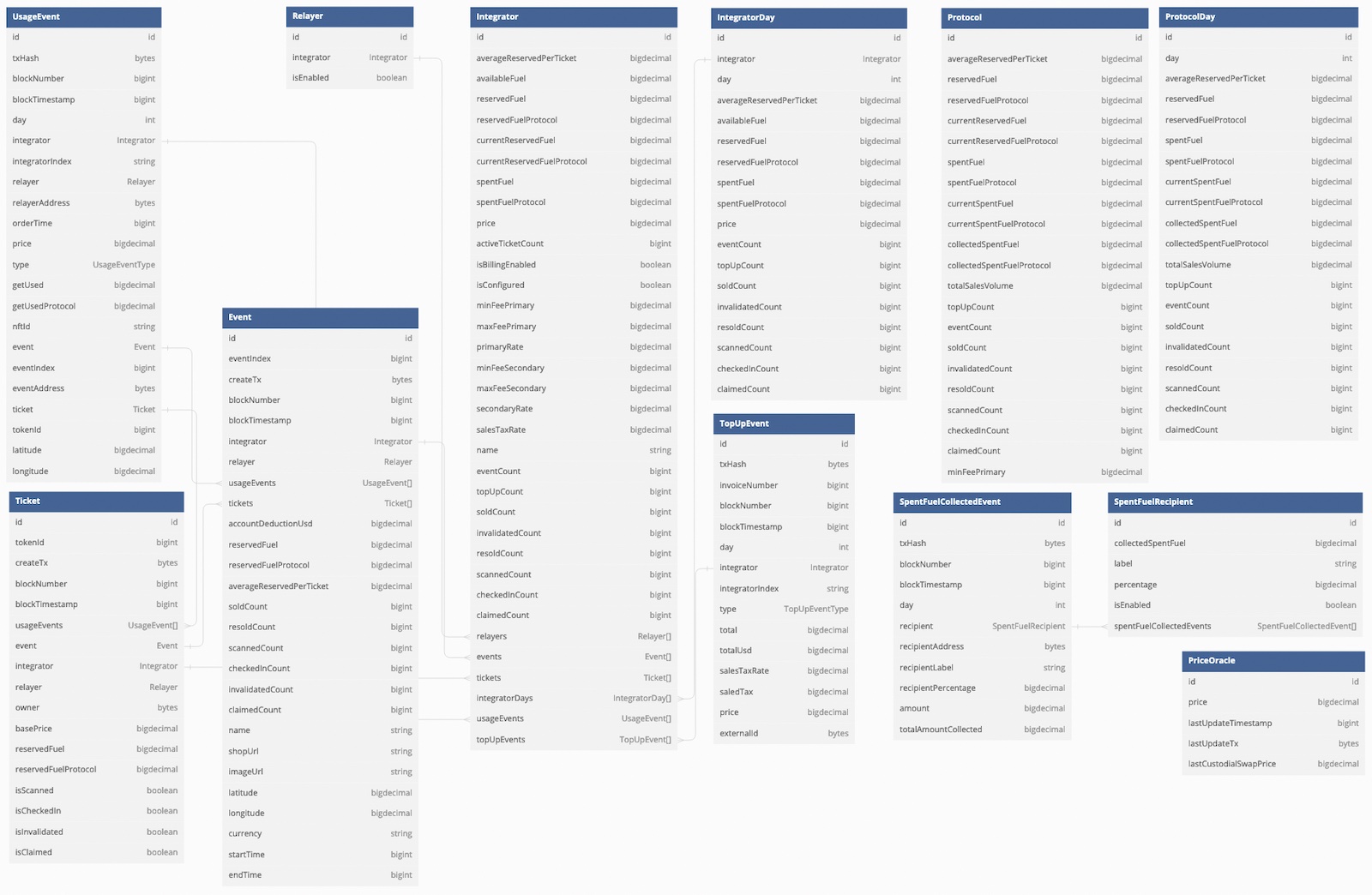

There are a number of key entities to be aware of:

Indexes all metadata for an Event by its address, as each Event has its own independent address on-chain. Updates to an Event overwrite the data within this entity, so it always represents the latest state. All actions are visible through the associated usageEvents field.

Holds individual records for each Ticket, represented by a unique NFT on-chain and ID'd by its nftIndex. All actions are visible through the associated usageEvents field.

Aggregated all-time data for protocol-wide metrics. This is used to capture all-time usage of the protocol across all integrators. Singleton with ID '1'.

Similar content to Protocol, but aggregated per UTC day. Used to track protocol usage over time. The ID is a dayInteger: the number of whole days since the Unix epoch (floor(unix / 86400)).
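A minimal sketch of the day bucketing, assuming block timestamps in seconds. `toDayInteger` and `dayStart` are hypothetical helper names, not functions from the subgraph itself.

```typescript
// Hypothetical helpers illustrating the ProtocolDay ID scheme described above.
const SECONDS_PER_DAY = 86400;

// Convert a Unix timestamp (seconds) into the day-integer used as the ID.
// Flooring puts every timestamp within the same UTC day into one bucket.
function toDayInteger(unixSeconds: number): number {
  return Math.floor(unixSeconds / SECONDS_PER_DAY);
}

// Convert a day-integer back to the UTC midnight it starts at, e.g. for chart axes.
function dayStart(dayInteger: number): Date {
  return new Date(dayInteger * SECONDS_PER_DAY * 1000);
}

console.log(toDayInteger(1700000000)); // 19675
console.log(dayStart(19675).toISOString()); // 2023-11-14T00:00:00.000Z
```

Entity IDs in The Graph are strings, so a day-integer computed this way would presumably be stringified before being used as an ID.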

Relayers track the address/account used to mint tickets on behalf of an integrator. No aggregate statistics are kept here; relayers are only used as a reference for the addresses submitting transactions for the integrator.

All-time data for each individual integrator, with total usage and counts. ID'd by the integratorIndex. Used for accounting and aggregate NFT statistics.

Usage statistics per-integrator-day. Used to track and compare protocol usage by integrator. ID is a composite key of integratorIndex-dayInteger.
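The composite key can be illustrated with two hypothetical helpers, assuming `integratorIndex` and the day-integer are plain non-negative integers. The subgraph's own ID construction may differ in detail.

```typescript
// Hypothetical check: a finite, non-negative whole number.
function isNonNegativeInt(n: number): boolean {
  return isFinite(n) && n >= 0 && Math.floor(n) === n;
}

// Build the composite "integratorIndex-dayInteger" ID described above.
function integratorDayId(integratorIndex: number, dayInteger: number): string {
  if (!isNonNegativeInt(integratorIndex) || !isNonNegativeInt(dayInteger)) {
    throw new Error("integratorIndex and dayInteger must be non-negative integers");
  }
  return `${integratorIndex}-${dayInteger}`;
}

// Split an ID back into its parts, e.g. when post-processing query results.
function parseIntegratorDayId(id: string): { integratorIndex: number; dayInteger: number } {
  const parts = id.split("-");
  if (parts.length !== 2) {
    throw new Error(`Unexpected IntegratorDay ID: ${id}`);
  }
  return { integratorIndex: Number(parts[0]), dayInteger: Number(parts[1]) };
}
```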

Tracks the configured destination addresses of the spent fuel. Harvesting the spent fuel is a public function and can be executed by any account, so this entity tracks the amount of GET sent to each configured address.

The on-chain price oracle is used to set the price of non-custodial top-ups (integrators that bring their own GET fuel). Here we track the current price of the oracle and the time it was last updated.

Tracks each individual integrator top-up as a separate record, giving a full history of the amount of each top-up and its price in USD. ID is a composite key of txHash-logIndex.
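A sketch of how a top-up's USD value might be derived, assuming `totalUsd` is `total` multiplied by the oracle `price` (taken to be USD per GET) at the time of the top-up. The `TopUpRecord` shape and helper names are hypothetical, and plain numbers stand in for the subgraph's decimal values.

```typescript
// Hypothetical shape of a top-up record (only the fields needed here).
interface TopUpRecord {
  integratorIndex: number;
  total: number; // GET topped up
  price: number; // assumed: USD per GET at the time of the top-up
}

// Assumed relation: USD value = GET amount x price at top-up time.
function topUpUsd(topUp: TopUpRecord): number {
  return topUp.total * topUp.price;
}

// Sum the USD value of every top-up made by one integrator.
function integratorTopUpUsd(topUps: TopUpRecord[], integratorIndex: number): number {
  return topUps
    .filter((t) => t.integratorIndex === integratorIndex)
    .reduce((sum, t) => sum + topUpUsd(t), 0);
}
```

Keeping each top-up as its own record (rather than one running total) is what makes this kind of per-price recomputation possible after the fact.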

Not to be confused with a real-world Event, these are 'events' that describe an individual usage of the protocol, such as EVENT_CREATED, SOLD, or SCANNED. Each record includes the lat/long, the relayer and integrator, the GET used as fuel, the exact timestamp of the block, and the day as an integer. ID is a composite key of txHash-logIndex.
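The `txHash-logIndex` composite key can be sketched as follows. `logEventId` is a hypothetical helper that assumes a `0x`-prefixed, 32-byte hex transaction hash. The log index is what keeps IDs unique when a single transaction emits several events.

```typescript
// Hypothetical helper: composite "txHash-logIndex" ID for per-log records.
function logEventId(txHash: string, logIndex: number): string {
  // Assumed format: 0x followed by 64 hex characters (a 32-byte hash).
  if (!/^0x[0-9a-fA-F]{64}$/.test(txHash)) {
    throw new Error(`Not a 32-byte transaction hash: ${txHash}`);
  }
  if (logIndex < 0 || Math.floor(logIndex) !== logIndex) {
    throw new Error(`Invalid log index: ${logIndex}`);
  }
  return `${txHash}-${logIndex}`;
}
```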

Records each collection of spent fuel within the economics contract per-recipient. When multiple recipient destinations are present, one event will be recorded for each. Tracks where the spent fuel is flowing to.

The GET Protocol Subgraph is available on The Graph Hosted Service, which offers a playground environment for testing queries and exploring entity relationships. A Playground Environment is also available for integrator testing.

Please see the DAO Token Economics Documentation for full details on how GET balances move through the system.

GET passes through a number of steps during its lifecycle, and the subgraph aggregates these for easier charting and analysis. A number of key fields help with this:

- `availableFuel` acts as the available balance-on-account for an integrator, used to pay for actions.
- `reservedFuel` contains the total amount of GET fuel that has been reserved.
- `currentReservedFuel` is the fuel currently held within the system; it is released when a ticket is finalized.
- `spentFuel` is the total aggregate amount of fuel that has moved from reserved to spent status.
- `currentSpentFuel` is the amount of spent fuel ready to be collected and sent to the recipients.
- `collectedSpentFuel` is the amount of fuel that has already been sent to recipient addresses (Protocol-level only).

No GET is created within this system, so we can define some rules:

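- `availableFuel` is equal to the total amount of top-ups minus `reservedFuel`.
- `spentFuel` can never be greater than `reservedFuel`, and is equal to `reservedFuel - currentReservedFuel`, since reserved fuel is either still held or already spent.
- `spentFuel` is also equal to `currentSpentFuel + collectedSpentFuel` (at Protocol level, where `collectedSpentFuel` is tracked).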
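These rules can double as a sanity check when consuming the subgraph. The sketch below assumes a hypothetical `FuelSnapshot` shape whose `totalTopUps` field stands in for the summed top-up amount, and uses plain numbers for readability; real values are decimals, so exact equality would need a decimal library or a tolerance. Reserved fuel is either still held (`currentReservedFuel`) or already spent, which gives `spentFuel = reservedFuel - currentReservedFuel`. `collectedSpentFuel` is optional because it only exists at Protocol level.

```typescript
// Hypothetical snapshot of the fuel fields on a Protocol or Integrator entity.
interface FuelSnapshot {
  totalTopUps: number; // assumed: sum of all top-up amounts
  availableFuel: number;
  reservedFuel: number;
  currentReservedFuel: number;
  spentFuel: number;
  currentSpentFuel: number;
  collectedSpentFuel?: number; // only tracked at Protocol level
}

// Returns the list of violated rules; an empty list means the snapshot is consistent.
function checkFuelInvariants(s: FuelSnapshot): string[] {
  const violations: string[] = [];
  if (s.availableFuel !== s.totalTopUps - s.reservedFuel) {
    violations.push("availableFuel != totalTopUps - reservedFuel");
  }
  if (s.spentFuel > s.reservedFuel) {
    violations.push("spentFuel > reservedFuel");
  }
  if (s.spentFuel !== s.reservedFuel - s.currentReservedFuel) {
    violations.push("spentFuel != reservedFuel - currentReservedFuel");
  }
  // Only checkable where collected fuel is recorded (Protocol level).
  if (s.collectedSpentFuel !== undefined && s.spentFuel !== s.currentSpentFuel + s.collectedSpentFuel) {
    violations.push("spentFuel != currentSpentFuel + collectedSpentFuel");
  }
  return violations;
}
```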

Additionally, averageReservedPerTicket provides the average amount of GET that has been required per ticket. This reserved amount remains in the system until the ticket is either checked in or invalidated, but it gives an accurate measure of the token revenue per NFT ticket minted. For example, ProtocolDay.averageReservedPerTicket shows the average GET per ticket across all integrators, aggregated by day.
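When charting averageReservedPerTicket over several days, taking the mean of the daily values gives every day equal weight regardless of ticket volume. The sketch below assumes the daily figure is `reservedFuel / soldCount` (an assumption; the exact divisor the subgraph uses is not stated here) and recomputes a volume-weighted average from the underlying totals. `DayFuel` and both helpers are hypothetical.

```typescript
// Hypothetical per-day record with the fields needed for the average.
interface DayFuel {
  day: number;
  reservedFuel: number;
  soldCount: number; // assumed divisor: tickets sold (minted) that day
}

// Assumed definition of the daily average; 0 when no tickets were sold.
function averageReservedPerTicket(d: DayFuel): number {
  return d.soldCount === 0 ? 0 : d.reservedFuel / d.soldCount;
}

// Volume-weighted average over a range of days: total fuel / total tickets.
// This differs from the plain mean of the daily averages when volumes differ.
function averageOverDays(days: DayFuel[]): number {
  const fuel = days.reduce((sum, d) => sum + d.reservedFuel, 0);
  const tickets = days.reduce((sum, d) => sum + d.soldCount, 0);
  return tickets === 0 ? 0 : fuel / tickets;
}
```

For example, a day with 10 GET over 10 tickets and a day with 40 GET over 20 tickets have daily averages of 1 and 2, but the weighted average over both days is 50 / 30.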

The fields listed above track the total fuel consumption for each ticket, regardless of its destination after being spent. To allow more accurate tracking of 'protocol revenue' vs. 'complete product revenue', new fields reservedFuelProtocol and spentFuelProtocol have been added. Protocol fuel is considered to be the base cost of the protocol: the minimum charge to mint a ticket NFT, excluding any of the additional event and ticket management software tooling. Protocol fuel will always be a subset of the total fuel consumption.
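The protocol/product split can be sketched as below, assuming `reservedFuel` and `reservedFuelProtocol` are read from the same entity. The `tooling` label for the remainder is a hypothetical name for the fuel attributed to the additional software tooling.

```typescript
// Hypothetical pair of fields read from one Protocol/ProtocolDay/Integrator record.
interface FuelSplit {
  reservedFuel: number;
  reservedFuelProtocol: number;
}

// Protocol fuel is a subset of total fuel; the remainder is attributed to tooling.
function splitRevenue(f: FuelSplit): { protocol: number; tooling: number; protocolShare: number } {
  if (f.reservedFuelProtocol > f.reservedFuel) {
    throw new Error("reservedFuelProtocol cannot exceed reservedFuel");
  }
  return {
    protocol: f.reservedFuelProtocol,
    tooling: f.reservedFuel - f.reservedFuelProtocol,
    protocolShare: f.reservedFuel === 0 ? 0 : f.reservedFuelProtocol / f.reservedFuel,
  };
}
```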

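```graphql
# All-time ticket action counts from the Protocol singleton
{
  protocol(id: "1") {
    soldCount
    resoldCount
    scannedCount
    checkedInCount
    invalidatedCount
    claimedCount
  }
}
```

```graphql
# Protocol-wide fuel metrics for the 7 most recent UTC days
{
  protocolDays(orderBy: day, orderDirection: desc, first: 7) {
    day
    reservedFuel
    reservedFuelProtocol
    spentFuel
    averageReservedPerTicket
  }
}
```

```graphql
# Fuel metrics for the 30 most recent days of integrator "0"
{
  integratorDays(orderBy: day, orderDirection: desc, first: 30, where: { integrator: "0" }) {
    integrator {
      id
    }
    day
    reservedFuel
    spentFuel
    averageReservedPerTicket
  }
}
```

```graphql
# IDs (on-chain addresses) and names of indexed Events
{
  events {
    id
    name
  }
}
```

```graphql
# The 100 most recent protocol usage events and the GET used by each
{
  usageEvents(orderBy: blockTimestamp, orderDirection: desc, first: 100) {
    type
    nftId
    event {
      id
    }
    getUsed
  }
}
```

```graphql
# The 5 most recent integrator top-ups with their USD value and price
{
  topUpEvents(orderBy: blockTimestamp, orderDirection: desc, first: 5) {
    integratorIndex
    total
    totalUsd
    price
  }
}
```

```graphql
# A single ticket, its event and integrator, and its usage history in order
{
  ticket(id: "POLYGON-0-209050") {
    id
    basePrice
    event {
      id
      currency
      shopUrl
      startTime
      integrator {
        name
      }
    }
    usageEvents(orderBy: orderTime, orderDirection: asc) {
      orderTime
      txHash
      type
    }
  }
}
```

```graphql
# Current price-oracle value and when it was last updated
{
  priceOracle(id: "1") {
    price
    lastUpdateTimestamp
  }
}
```

To run the setup described below, you need Docker installed to run the graph-node, IPFS, and Postgres containers.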

Start by setting up a graphprotocol/graph-node. For this you will also need a Polygon RPC endpoint for the graph-node to index from. Infura or Moralis (Speedy Nodes) provide enough capacity on their free tiers. An archive node is recommended.

- Clone graphprotocol/graph-node to a local directory and use this directory as your working directory during this setup.

- Edit the `services.graph-node.environment.ethereum` key within `docker/docker-compose.yml` to read `matic:<RPC_ENDPOINT>`.

- Run `docker compose up` to launch the graph-node instance.

At this point you should have a local graph-node available for development, and you can continue to deploy the get-protocol-subgraph to your local cluster.

- `yarn install` to install dependencies.
- `yarn prepare:production` to prepare the subgraph configuration files (more information in the "Deployment" chapter below).
- `yarn codegen` to generate the necessary code for deployment.
- `yarn create:local` to create the local graph namespace.
- `yarn deploy:local` to deploy the graph to the local instance.

The local GraphiQL explorer can be found at http://localhost:8000/subgraphs/name/get-protocol-subgraph/graphql. Watch the terminal logs from `docker compose up` to follow indexing progress.
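To query the local deployment from code rather than through GraphiQL, a minimal sketch follows. It assumes graph-node's GraphQL HTTP endpoint is the explorer URL above without the `/graphql` suffix, and that BigInt/BigDecimal fields come back as JSON strings. The constants and helper names are hypothetical, and network I/O is left out so the request building and error handling stand on their own.

```typescript
// Assumed HTTP endpoint of the local deployment.
const LOCAL_ENDPOINT = "http://localhost:8000/subgraphs/name/get-protocol-subgraph";

// A variant of the protocolDays example query with the page size as a variable.
const PROTOCOL_DAYS_QUERY = `
  query RecentDays($first: Int!) {
    protocolDays(orderBy: day, orderDirection: desc, first: $first) {
      day
      reservedFuel
      spentFuel
    }
  }
`;

// Build the JSON body of a standard GraphQL-over-HTTP POST request.
function buildRequestBody(query: string, variables: Record<string, unknown>): string {
  return JSON.stringify({ query, variables });
}

interface ProtocolDayRow {
  day: number | string;
  reservedFuel: string; // assumed: decimals are serialized as strings
  spentFuel: string;
}

// Parse a GraphQL response, surfacing errors instead of silently returning nothing.
function parseProtocolDays(responseText: string): ProtocolDayRow[] {
  const parsed = JSON.parse(responseText) as {
    data?: { protocolDays: ProtocolDayRow[] } | null;
    errors?: { message: string }[];
  };
  if (parsed.errors && parsed.errors.length > 0) {
    throw new Error(parsed.errors.map((e) => e.message).join("; "));
  }
  return parsed.data?.protocolDays ?? [];
}
```

With Node 18 or later, the body can be sent with `fetch(LOCAL_ENDPOINT, { method: "POST", headers: { "Content-Type": "application/json" }, body })`, and the response text passed to `parseProtocolDays`.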

Both playground and production deployments are handled within this repository, using Mustache to generate templates. The Graph CLI has no native way of handling this, so the environment you wish to deploy must first be staged as the main deployment:

- `yarn prepare:playground` replaces `subgraph.yaml` and `constants/contracts.ts` with the correct values for the playground environment.
- `yarn deploy:playground` then deploys this to the playground hosted graph.
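The reason a `prepare:*` step must run first is that the deployable files are rendered from templates. The sketch below is a deliberately simplified stand-in for Mustache (plain `{{key}}` substitution only, with no sections or HTML escaping), and the environment keys and values are hypothetical placeholders, not the repository's real configuration.

```typescript
// Simplified stand-in for Mustache: replace {{key}} placeholders with
// per-environment values and fail loudly when a key has no value.
function renderTemplate(template: string, values: Record<string, string>): string {
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, (_match: string, key: string) => {
    if (!Object.prototype.hasOwnProperty.call(values, key)) {
      throw new Error(`No value for template key "${key}"`);
    }
    return values[key];
  });
}

// Hypothetical per-environment values; placeholders only.
const environments: Record<string, Record<string, string>> = {
  playground: { network: "matic", address: "<PLAYGROUND_ADDRESS>" },
  production: { network: "matic", address: "<PRODUCTION_ADDRESS>" },
};
```

Failing on a missing key, rather than rendering an empty string, is one way to avoid silently deploying a half-configured `subgraph.yaml`.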

Before deploying an environment, always make sure you have prepared the correct subgraph.yaml, to avoid deploying to the wrong hosted graph.

This repository comes with a Dockerfile to manage dependencies for build and release. To release this subgraph to The Graph's decentralized service, you must first have a deploy key, and then run:

```shell
docker build --build-arg DEPLOY_KEY=<KEY> -t subgraph .
docker run -it subgraph release:<ENV> -l <VERSION>
```

Here, `ENV` is the environment to release to (see `package.json` for the options), and `VERSION` is the semver tag of the release.

Contributions are welcome; please let us know about any issues or create a PR. By contributing, you agree to release your modifications under the MIT license (see the file LICENSE).

Where possible, we stick to sensible defaults, in particular @typescript-eslint and Prettier. Husky has been configured to automatically lint and format upon commit.