The main topics of the labs are Microsoft Azure, HashiCorp Terraform, and GitHub. The labs proceed in that order, with the end goal of deploying a "landing zone" into Azure, leveraging Terraform for Infrastructure as Code (IaC), and GitHub Actions for the deployment pipeline.

- You should have received an email with a user ID and password for the lab environment. You will use those credentials to log into an Azure tenant where you can complete the labs.

- As per the instructions in the workshop email, you will need a GitHub account. If you have not created your GitHub account yet, please follow the instructions in the email to create one now.

In this lab you will log into the Azure portal and review a sample of the solution deployment. You will also set up some Azure resources required for the subsequent labs.

- In your web browser, navigate to https://portal.azure.com. When prompted to log in, use the credentials you received via email.

- After logging in, you may be prompted for a tour, or a "Getting Started" page. Dismiss these to proceed to the home page.

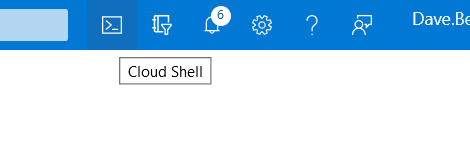

- At the top of the home page you should see the following menu bar:

Below that should be 3 section headings: "Azure services", "Recent resources", and "Navigate".


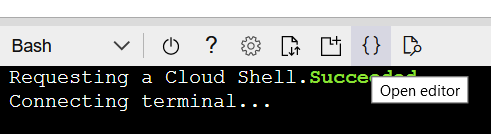

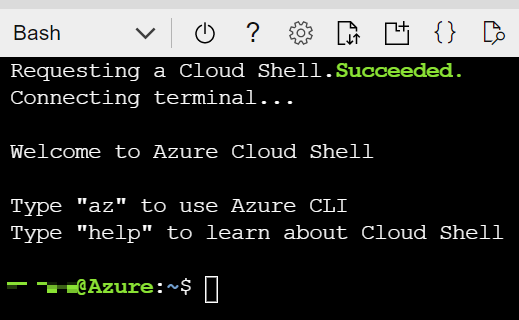

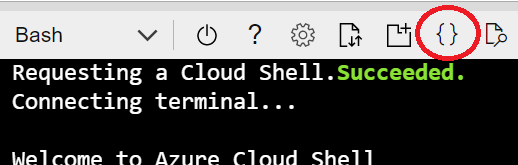

- Azure Cloud Shell is a command-line interface within the Azure portal. It even includes a basic version of Visual Studio Code!

- To setup your own Cloud Shell, either click on the Cloud Shell icon

in the main toolbar at the top of the page

OR for a full page experience, open a new tab and navigate to https://shell.azure.com

in the main toolbar at the top of the page

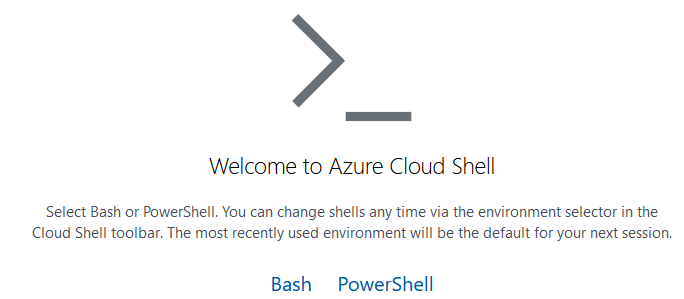

OR for a full page experience, open a new tab and navigate to https://shell.azure.com - The first time you open Cloud Shell the portal will display the following prompt:

- Select Bash or PowerShell, whichever you prefer. You can always switch later on.

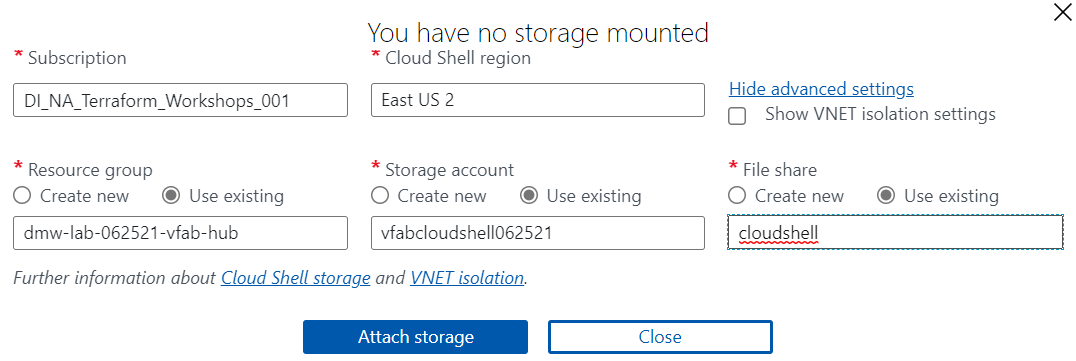

- In the next prompt, click the "Show Advanced Settings" link, which will switch to the following prompt:

- Change the "Cloud Shell region" to "East US 2". This must be selected before you can pick the "Storage account".

- Select the `dmw-lab-[date]-[name-prefix]-hub` Resource Group.

- For the "Storage account", select the "Use existing" option, and then select the `[name-prefix]cloudshell[date]` storage account.

- For the "File share", select the "Use existing" option, and then enter "cloudshell" as the file share.

- Finally, click the "Create storage" button and after the Cloud Shell is initialized you should see a command prompt.

NOTE: Please follow this step precisely. It is critical for subsequent steps to work correctly.

Now that you're (somewhat?) familiar with the Azure Portal, it's also good to be familiar with the command-line options for interacting with Azure. We're going to use the Azure CLI (Command-Line Interface) to create a folder, called a "container", within your storage account. Files, called "blobs", are stored within the container. We'll need that container for the upcoming Terraform labs.

Open Cloud Shell (if not already open) and execute the following command:

`az storage container create --account-name [name-prefix]cloudshell[date] --name terraform-state`

Replace `[name-prefix]` and `[date]` to form your storage account name; the container name is `terraform-state`. For example: `az storage container create --account-name jsmicloudshell062521 --name terraform-state`

If successful, the command will return the following:

`{ "created": true }`

- Either click the "Resource groups" button in the "Navigate" section near the bottom of the page, or type "resource" in the Azure search bar at the top and click "Resource groups" in the drop-down list.

- You should see a list of Resource Groups similar to the following:

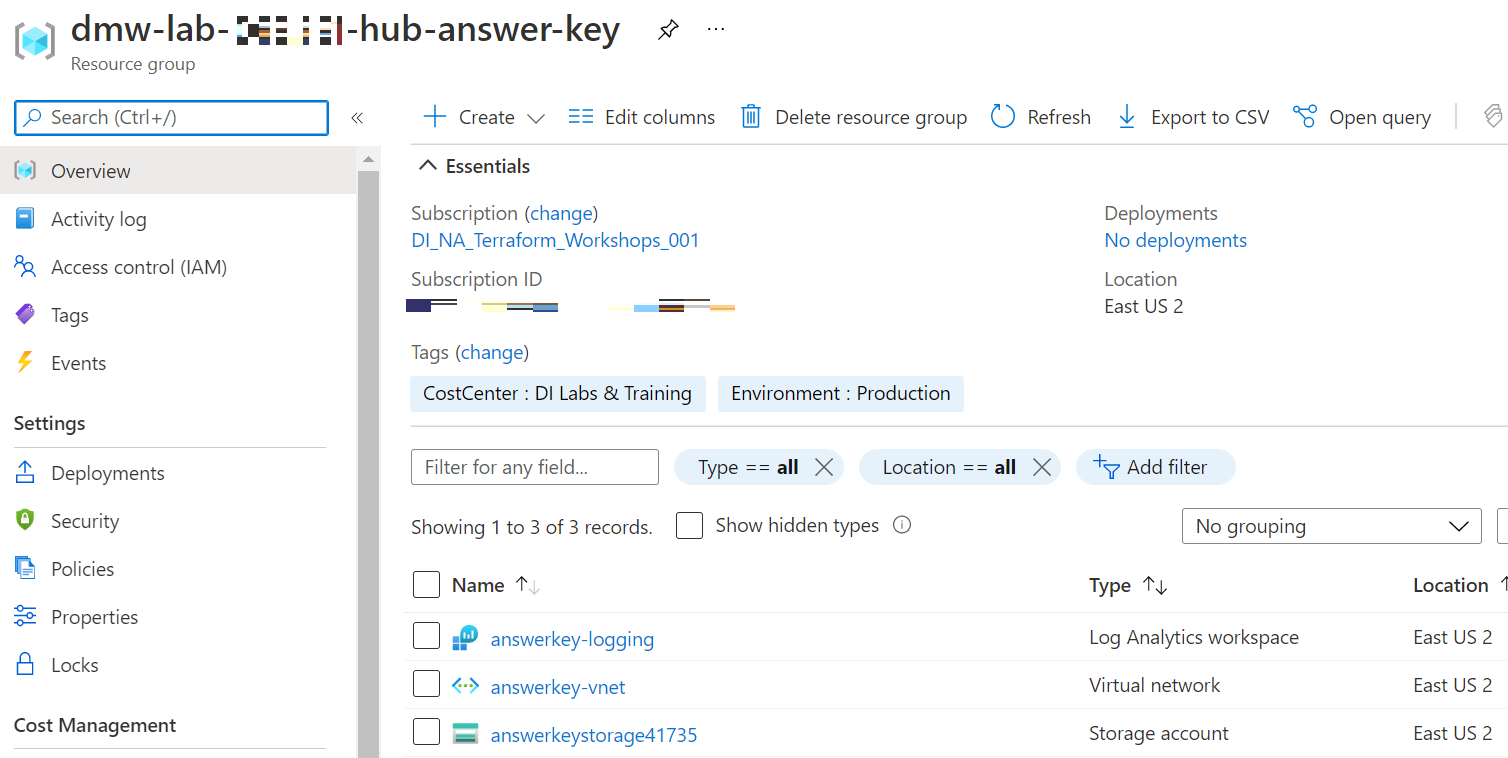

- Click the `dmw-lab-[date]-hub-answer-key` Resource Group.

- You should see the Resource Group's overview tab with these resources:

- Click through the various resources to see their details.

- Navigate back to the list of Resource Groups and click the `dmw-lab-[date]-spoke-answer-key` Resource Group.

- There should only be a VNet to review here.

- In the following labs you will be deploying hub and spoke Resource Groups with the same resources.

- You need to complete Lab 1: Microsoft Azure before starting this lab.

- All lab instructions are to be completed in Azure Cloud Shell.

- Visit https://portal.azure.com. You will be prompted to reset your password.

- Once logged in, open Cloud Shell.

- Clone the terraform-intro repository into `~/terraform-intro` by executing the following command: `git clone https://github.com/InsightDI/terraform-intro.git`

- Change into the `terraform-intro` directory: `cd terraform-intro`

Terraform ships as a binary - a single executable file. This binary is used to do everything from applying a Terraform configuration (more on that later) to interacting with the Terraform state file.

- View the terraform binary: `which terraform`

- Note that in Cloud Shell, the binary is stored in the `/usr/local/bin` directory, which is part of the shell's PATH: `env | grep PATH`

- View the terraform commands: `terraform`

- Make note of the "Main commands" section; we will be using a number of these during the lab.

- Take a quick glance over the "All other commands" section as well.

- Determine the version of Terraform running in Cloud Shell: `terraform version`

- Note that Terraform is versioned with a semantic version number (major.minor.patch).

- Copy the version number output from the command to your clipboard, e.g. `1.0.2`. You do not need to copy the "v" that precedes the version number, as we won't be using it.

- Because Terraform ships as a single binary, it's easy to install any version you need. Simply visit releases.hashicorp.com/terraform to see the available versions.

It's best practice to declare, in the code itself, the version of Terraform your infrastructure-as-code was written for.

- Open the Cloud Shell code editor.

- Expand the `terraform-intro` directory in the FILES tree.

- Open the file named `providers.tf` and find the `terraform {}` block.

- Update `required_version = "~> YOURVERSIONHERE"`, replacing "YOURVERSIONHERE" with `1.0`, to allow Terraform to use the newest release within major version 1.

- Please leave the `~>` in front of your version. This is called the pessimistic constraint operator: it allows only the rightmost component of the version you specify to increase. With `~> 1.0`, any 1.x release (1.0 or newer, but below 2.0) satisfies the constraint.

- Your `providers.tf` file should look something like this, though your version may vary: `terraform { required_version = "~> 1.0" ... }`

- Hit `CTRL + S` to save the file.

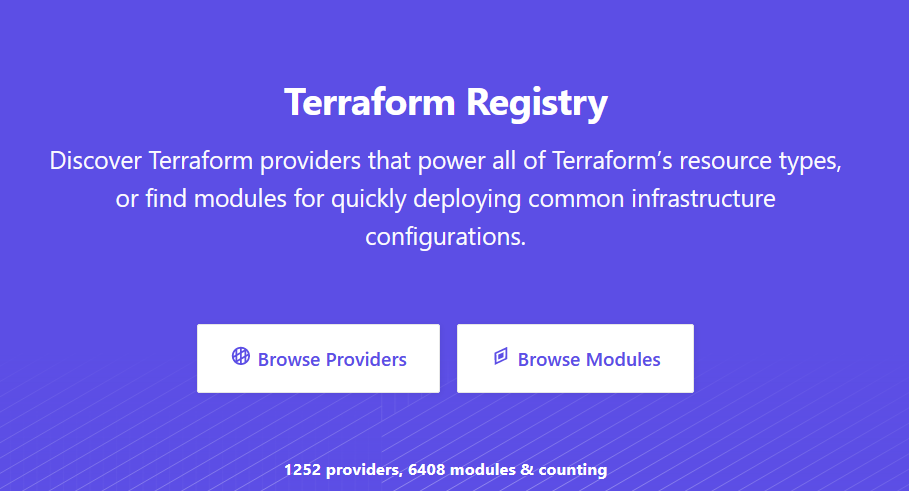
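To make the operator concrete, here is a minimal sketch of a `terraform {}` block with the constraint used in this lab. The comments state which releases each constraint admits; the commented-out alternatives are illustrations only and are not part of the lab's `providers.tf`.

```hcl
terraform {
  # "~> 1.0" accepts any 1.x release: 1.0.0 or newer, but below 2.0.0.
  # Only the rightmost component you wrote (the minor version) may increase.
  required_version = "~> 1.0"

  # Illustrative alternatives (not used in this lab):
  # required_version = "~> 1.0.2"      # 1.0.2 or newer, but below 1.1.0 (patch updates only)
  # required_version = ">= 1.0, < 2.0" # the same range as "~> 1.0", written out explicitly
}
```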

Terraform interacts with one or more APIs (and thus, clouds) via providers. Therefore, you will typically see one or more provider configurations in Terraform code. In this section, we're going to discover the latest provider version for azurerm, which is used to interact with the Azure Resource Manager API.

- Official Terraform providers can be found in the Terraform Registry at registry.terraform.io. Open a web browser and navigate to https://registry.terraform.io now.

- Click "Browse Providers".

- Select "Azure" from the list of providers.

- From the "Overview" tab, copy the azurerm provider version to your clipboard.

- Note the "Documentation" tab. This is where all of the documentation for the provider is located; if you're developing Terraform against Azure, you will use this documentation extensively :)

- Go back to your editor in Cloud Shell and open `providers.tf` if it isn't already open.

- Find the `required_providers` block and, within it, the `azurerm` block.

- Edit `version = "VERSION"`, replacing VERSION with `2.65`.

- Your `providers.tf` file should look something like this, though your azurerm version may vary: `terraform { ... required_providers { azurerm = { version = "2.65" } } ... }`

- Hit `CTRL + S` to save the file.
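The lab's `azurerm` entry only sets `version`. For comparison, a fuller `required_providers` entry might look like the sketch below; the `source` attribute is an assumption about a typical file, not a copy of the lab's `providers.tf`.

```hcl
terraform {
  required_providers {
    azurerm = {
      # Registry address of the provider. When source is omitted,
      # Terraform assumes hashicorp/azurerm.
      source = "hashicorp/azurerm"

      # A version with no operator is an exact-match constraint
      # (the same as "= 2.65"), so only that release is used.
      version = "2.65"
    }
  }
}
```

If you would rather accept newer 2.x provider releases automatically, the pessimistic form `"~> 2.65"` admits any 2.x release from 2.65 up to, but not including, 3.0.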

Any time you change the provider or backend settings in the `terraform {}` block, you will need to initialize Terraform. You also need to initialize the first time you run a Terraform configuration.

- Use the Terraform CLI from Cloud Shell to initialize Terraform: `terraform init`

- Notice how you didn't pass any flags to the command to tell it where to find your Terraform configuration files? That's because the Terraform CLI reads the directory from which it is executed and automatically loads all of the files ending in ".tf". Neat!

-

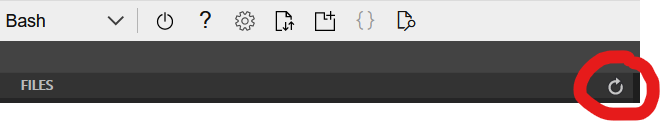

From the code editor, refresh the FILES window.

- You should now see the following additional file and directory: `.terraform/` and `.terraform.lock.hcl`

- Expand the `.terraform/` directory tree as far as it will let you. This directory is where Terraform stores the provider plugins it downloads during `terraform init`.

- This directory should not be checked into source control.

- Open the `.terraform.lock.hcl` file. This file records the version selected for each provider referenced in the Terraform configuration, along with checksums (hashes) of the provider packages, so that subsequent runs use the same provider builds.

- This file should be checked into source control.

We're almost ready to deploy some resources in Azure, but first, you'll need to update your variables to reflect your unique environment.

- Open `variables.tf` in the editor. Variables define values that can be input into Terraform at plan, apply, and destroy time.

- Some variables, such as `location`, have a default value that will be used if no value is supplied.

- Other variables are required input. They can be defined as an environment variable (`TF_VAR_<var name>`), defined in a "tfvars" file, or entered manually at plan/apply/destroy time when prompted by the Terraform CLI.

- Open `terraform.tfvars` in the editor; we'll use this file to define our variable values.

- Set `prefix = "YOURPREFIXNAME"` to your first initial and last name, e.g. `dbenedic`.

- Open the Azure Portal and find the name of your unique "spoke" resource group. For example, my resource group is named `dmw-lab-062521-dben-spoke`.

- Copy the resource group name and paste it into the `terraform.tfvars` file as the value for `resource_group_name`. Your tfvars file should look something like this (your strings will vary):
  - `prefix = "dbenedic"`
  - `resource_group_name = "dmw-lab-062521-dben-spoke"`

- Hit `CTRL + S` to save the file.
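As a rough illustration of the difference between defaulted and required variables, a `variables.tf` for this lab might contain declarations like the following. The variable names come from the steps above; the descriptions, types and the `eastus2` default are assumptions, so check the lab's actual file.

```hcl
# Has a default, so supplying a value is optional.
variable "location" {
  description = "Azure region to deploy into."
  type        = string
  default     = "eastus2" # assumed default value
}

# No default: Terraform requires a value from a tfvars file,
# a TF_VAR_prefix environment variable, or an interactive prompt.
variable "prefix" {
  description = "Your first initial and last name, e.g. dbenedic."
  type        = string
}

# Also required; holds the name of your existing spoke resource group.
variable "resource_group_name" {
  description = "Name of the spoke resource group to deploy into."
  type        = string
}
```

Because `prefix` and `resource_group_name` have no default, Terraform needs a value for each. It reads `terraform.tfvars` from the working directory automatically; alternatively, you could export `TF_VAR_prefix=dbenedic` before running `terraform plan`.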

Terraform is great because it has a workflow that allows you to see the predicted changes before you apply them. This is known as a "plan".

- From Cloud Shell, within the `terraform-intro` directory, run the following to generate a plan: `terraform plan`

- Note that our plan is NOT making any changes yet. It's just communicating with the Azure API and comparing what exists (reality) with what we have in our code (source of truth).

- Your plan should show resource additions marked with a green "+".

- The plan summary should show `2 to add, 0 to change, 0 to destroy`. The summary lets you see at a glance which resources Terraform thinks it's going to add, change, or destroy when it is run.

- "Adds" are typically OK, but still worth looking at closely.

- "Changes" and "Destroys" can disrupt or remove existing resources, so make sure you understand them before applying the plan.

- It is best practice to save the plan to a file and then run the apply against that saved plan, to ensure consistency between the plan and apply phases.

- From Cloud Shell, within the `terraform-intro` directory, run the following to generate a plan and store its output: `terraform plan -out myplan.tfplan`

- Run `ls -la | grep "myplan.tfplan"` from Cloud Shell to confirm that the plan file exists.

- Run `cat myplan.tfplan` from Cloud Shell. Note that the plan is not human-readable! It is a binary file; `terraform show myplan.tfplan` renders it in readable form.

- From Cloud Shell, within the `terraform-intro` directory, run the following to take our plan output and "make it so": `terraform apply myplan.tfplan`

- If you get an error during your apply, your saved plan will no longer be valid (by design). You can either 1) rerun the plan and output a new plan file, or 2) run `terraform apply` and manually approve the plan when prompted.

- Let the apply run; it will tell you when it's finished!

- Click the output labelled `url = https://<some_fqdn>`. This will open the web page you just deployed to Azure... pat yourself on the back.

- Note: when clicking the URL from Cloud Shell, you may see an errant `"` at the end of the URL in the address bar. Be sure to remove it and try the URL again!

- Navigate to the Azure Portal and check out the resources Terraform deployed for you in the spoke resource group.

Terraform uses a state file to keep track of its last-known state of resources it has applied. The statefile is important because it allows future Terraform plans and applies to happen incrementally and keeps your infrastructure idempotent.

- After each Terraform apply, a new version of the statefile is written. If it's the first apply, the statefile itself is also created.

- From Cloud Shell, within the `terraform-intro` directory, run the following to see the statefiles that were created: `ls | grep tfstate`

- Note that you have 2 statefiles: `terraform.tfstate` and `terraform.tfstate.backup`.

- Run the following command to output the statefile to stdout: `cat terraform.tfstate`

- A few things of note about the statefile:

- It's in JSON format

- It has a version number

- It tracks the version of Terraform that was used to generate it

- It has a GUID called "lineage"

- It contains outputs from the Terraform apply

- It contains a record of the resources it provisioned

Part of Terraform's power lies in its statefile. When you start to grow a team that works with Terraform, you need a shared location to store, read, and write statefiles. Enter remote state.

- Open `providers.tf` in the editor again.

- Find the commented-out block labelled `backend "azurerm"`. A backend block defines where Terraform stores its state; in this case, a remote Azure Storage Account. We're going to use a storage account that was pre-provisioned in your Azure lab environment.

- Highlight the entire commented-out block of code and hit `CTRL + /` to uncomment it.

- From the Azure Portal, find the storage account in your lab resource group (the `[name-prefix]cloudshell[date]` account you used in Lab 1). Note the storage account name.

- Still in the Azure Portal, locate the "Containers" section on the storage account's navigation bar under the "Data storage" heading.

- Make note of the container name.

- If you haven't already created a container or don't see one in the portal, create one now.

- Back in the Cloud Shell editor, in the `providers.tf` file, update `storage_account_name` and `container_name` to reflect the values you just looked up in the portal.

- Run the following from Cloud Shell, following the prompts to move your statefile when asked: `terraform init`

- Once you have successfully moved the state into the remote backend, you may remove the local statefiles: `rm terraform.tfstate && rm terraform.tfstate.backup`

- Run another terraform apply to confirm everything is working: `terraform apply -auto-approve`

- IMPORTANT!!! Terraform state contains HIGHLY SENSITIVE information and should be SECURED accordingly! The same goes for source control, so don't check it in, ok?

- Bonus points if you can figure out how we're preventing state from getting checked into the `terraform-intro` repo...
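For reference, an uncommented `azurerm` backend block generally has the shape below. The lab's block may differ: the resource group and storage account values are hypothetical examples built from the naming pattern used in these labs, and the `key` (the name of the blob that holds the state) is an assumed value.

```hcl
terraform {
  # Keep state in a blob in Azure Storage instead of a local terraform.tfstate.
  backend "azurerm" {
    resource_group_name  = "dmw-lab-062521-jsmi-hub" # hypothetical: your lab resource group
    storage_account_name = "jsmicloudshell062521"    # hypothetical: your storage account
    container_name       = "terraform-state"         # the container created in Lab 1
    key                  = "terraform-intro.tfstate" # hypothetical: blob name for this state
  }
}
```

Backend blocks cannot reference Terraform variables, which is why the values are written out literally rather than taken from `terraform.tfvars`.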

Alas, all good things must come to an end. Time to destroy some stuff! After all, rapid elasticity is one of the reasons why we use the cloud, right? BURN IT DOWN!

- From Cloud Shell, within the `terraform-intro` directory, run the following to tear down our deployment: `terraform destroy`

- Review the items that Terraform is planning to destroy (like a plan, but in reverse!) and type "yes" to approve.

- Wait for Terraform to finish destroying stuff.

- You're done! Congrats!

-

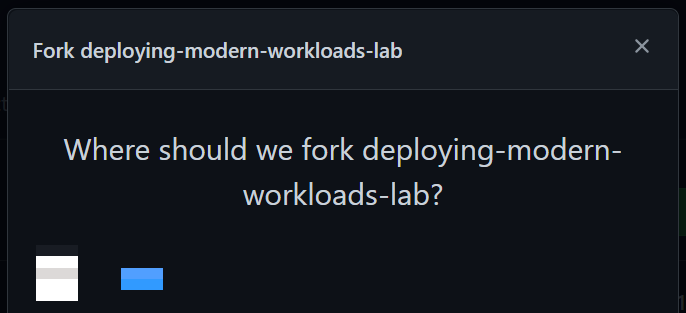

Open a new tab in your browser and navigate to the workshop GitHub repository: https://github.com/InsightDI/deploying-modern-workloads. In the upper right-hand corner click the "Fork" button.

-

Click your personal GitHub organization. GitHub will popup a message stating that it is forking the repo. When complete it will display the forked repo in your org.

-

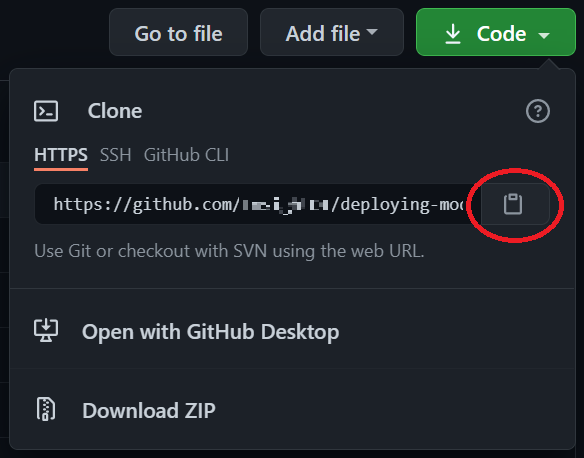

Next, click on the green "Code" button to clone the repo into your Cloud Shell.

-

Return to the Azure portal and open Cloud Shell if it's not already open. At the Cloud Shell command prompt type

git clone <space>and then right-click within Cloud Shell and select "Paste". You should end up withgit clone https://github.com/InsightDI/deploying-modern-workloads.git -

The

git clone...comand will prompt for your GitHub username. If you don't recall your username, just go to GitHub and click on the profile button in the upper right-hand corner. Then it will prompt for your password, for which you should enter your GitHub Personal Access Token, which you created (and stored safely and securely 😁) when following the prerequisites in the workshop email. Once logged in, GitHub will clone the repo to your Cloud Shell session.

Then it will prompt for your password, for which you should enter your GitHub Personal Access Token, which you created (and stored safely and securely 😁) when following the prerequisites in the workshop email. Once logged in, GitHub will clone the repo to your Cloud Shell session. -

When the

git clone...command completes, list the files in the directory (using thelscommand) to verify that you now have a "deploying-modern-workloads" folder with Terraform code in it.

The Terraform code in this lab completes your landing zone deployment. After executing this Terraform code (in the upcoming GitHub labs) your hub and spoke Resource Groups will have the same resources as you reviewed in the "answer key" Resource Groups, although they will be named differently.

-

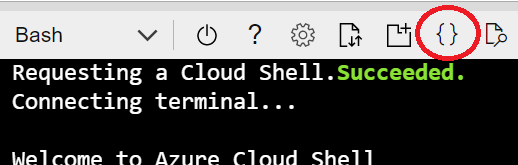

In the toolbar at the top of Cloud Shell click the "Open editor" button:

-

Expand the "deploying-modern-workloads" folder and open the

variables.tffile. See how variables are defined with names, descriptions, types, default values, and even validation. -

Open the

locals.tffile to see how local values can be defined to be used throughout your Terraform code. Change theYOURNAMEHEREvalue and hit Ctrl-S to save. -

Open the

hub.tffile to see how it creates a random number, a virtual network with multiple subnets, and then peers the virtual network with the spoke virtual network. It also creates a Log Analytics workspace for monitoring your resources, and then it creates a storage account and configures some of the storage account's metrics to be routed to the Log Analytics workspace. Finally it creates an Azure Firewall within thefirewallsubnet and provides it a public IP address. -

Next, open the

spoke.tffile. It also creates a random number, a virtual network with a subnet, and then peers the virtual network with the hub virtual network. Then it configures some network routing for the firewall. Finally it creates an Azure Container Instance and configures its networking. -

Open

outputs.tfto see the values that are output when the Terraform deployment completes. -

Finally, open the

providers.tffile to see how the code configures theazurermandrandomTerraform providers. -

Close the Cloud Shell editor by clicking the ellipses in the upper right-hand corner of Cloud Shell and selecting "Close Editor", or hit Ctrl-Q.
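The constructs mentioned above (variable validation, a random number, local values and outputs) can be sketched in a few lines. This is not the lab's code: the validation rule, the `random_integer` range, and the `vnet_name` local and output are hypothetical, chosen only to show how the pieces reference each other. It assumes the `random` provider is available, as the lab's `providers.tf` configures it.

```hcl
variable "prefix" {
  description = "Short name prefix, e.g. jsmi."
  type        = string

  # Hypothetical rule: reject anything but lowercase letters and digits.
  # regex() fails when there is no match, and can() turns that failure into false.
  validation {
    condition     = can(regex("^[a-z0-9]+$", var.prefix))
    error_message = "The prefix must contain only lowercase letters and digits."
  }
}

# A random number, similar in spirit to the one hub.tf creates (range assumed).
resource "random_integer" "suffix" {
  min = 10000
  max = 99999
}

locals {
  # Computed once, then referenced anywhere in the configuration as local.vnet_name.
  vnet_name = "${var.prefix}-hub-vnet-${random_integer.suffix.result}"
}

# Printed when the apply completes, like the values in outputs.tf.
output "vnet_name" {
  value = local.vnet_name
}
```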

In the following steps you will review the first GitHub workflow.

-

Open Cloud Shell, if not open already.

-

Open the editor.

-

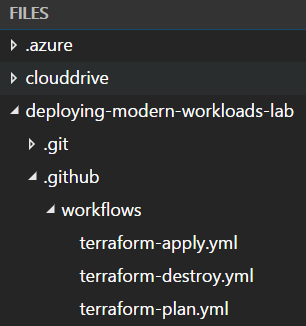

Expand the

deploying-modern-workloadsfolder, and then the.githubandworkflowsfolders under that.

-

Within the

workflowsfolder open theterraform-plan.ymlfile. -

The file is somewhat self-documenting, but see below for some highlights. (Complete GitHub Action syntax can be found here.)

on: For declaring workflow triggers.jobs: The list of jobs that run within the workflow. Each job is composed of multiple steps. This job includes steps that 1) check out the source code, 2) install Terraform, 3) executeterraform initand 4) executeterraform plan.- Note how the

env:property configures environment variables for the subsequent steps. The Azure provider for Terraform uses these environment variables to connect to the Azure subscription. For example, theARM_CLIENT_ID: ${{secrets.DMW_ARM_CLIENT_ID}}code sets an environment variable named "ARM_CLIENT_ID" to the secret value named "DMW_ARM_CLIENT_ID". You will configure these secrets in the next section of the lab.
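On the Terraform side, the provider block does not need to mention these credentials at all. A minimal sketch, assuming the workflow sets `ARM_CLIENT_ID`, `ARM_CLIENT_SECRET`, `ARM_SUBSCRIPTION_ID` and `ARM_TENANT_ID` from the four `DMW_ARM_*` secrets you will create (only `ARM_CLIENT_ID` is named explicitly above):

```hcl
provider "azurerm" {
  # Required by the azurerm 2.x provider, even when empty.
  features {}

  # No credentials appear here: the provider reads ARM_CLIENT_ID,
  # ARM_CLIENT_SECRET, ARM_SUBSCRIPTION_ID and ARM_TENANT_ID from the
  # environment. The equivalent explicit arguments are listed below only to
  # show the mapping; never hard-code a client secret in real code.
  #
  # client_id       = ""  # ARM_CLIENT_ID
  # client_secret   = ""  # ARM_CLIENT_SECRET
  # subscription_id = ""  # ARM_SUBSCRIPTION_ID
  # tenant_id       = ""  # ARM_TENANT_ID
}
```

Keeping credentials in environment variables lets the same code run unchanged in Cloud Shell (where you are already logged in) and in the GitHub workflow (where the secrets supply them).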

-

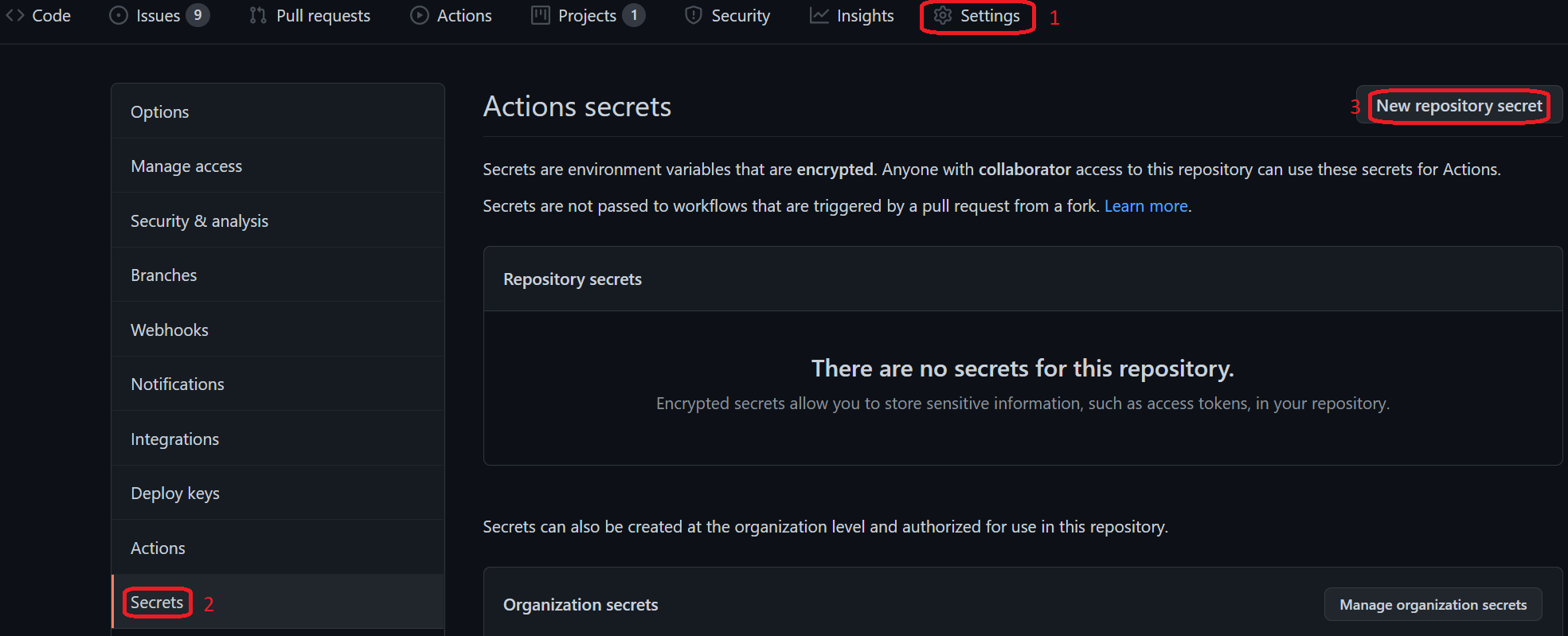

Navigate to GitHub and to the "deploying-modern-workloads-lab" repo. Make sure GitHub is open in a separate tab from the Azure portal as you'll need to navigate between the two tabs.

-

Click on 1) the "Settings" tab, 2) the "Secrets" tab, and 3) the "New repository secret" button.

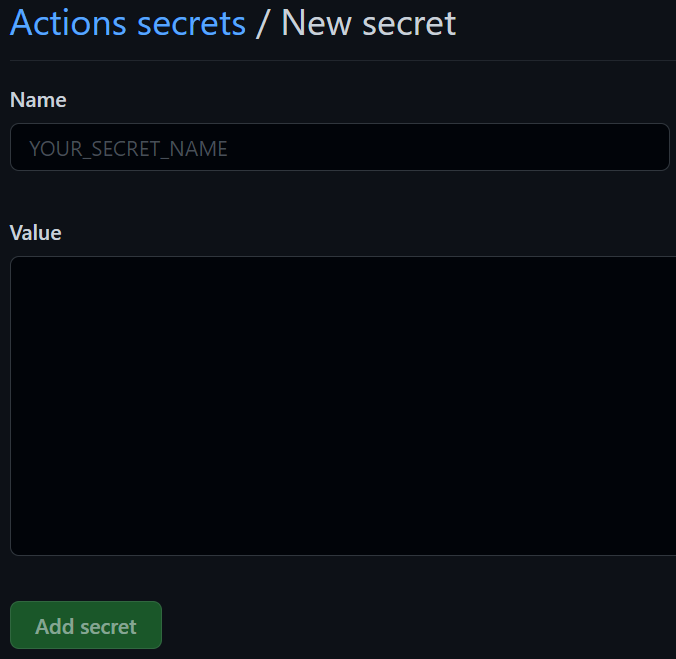

You should see the "New secret" form.

-

Enter "DMW_ARM_CLIENT_ID" as the secret name.

-

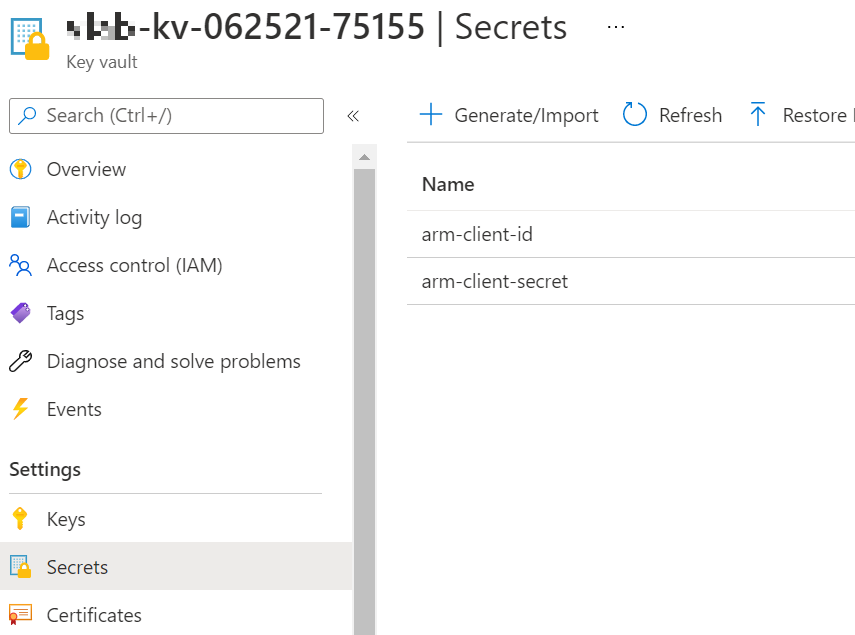

Now switch to the browser tab with the Azure portal. Navigate to the Resource Group named "dmw-lab-[date]-[name-prefix]-hub" (e.g., "dmw-lab-062521-jsmi-hub") and then open the Key Vault named "[name-prefix]-kv-[date]-75155" (e.g., "jsmi-kv-062521-75155").

-

Click on the "Secrets" tab on the left navigation panel to list the current secrets.

-

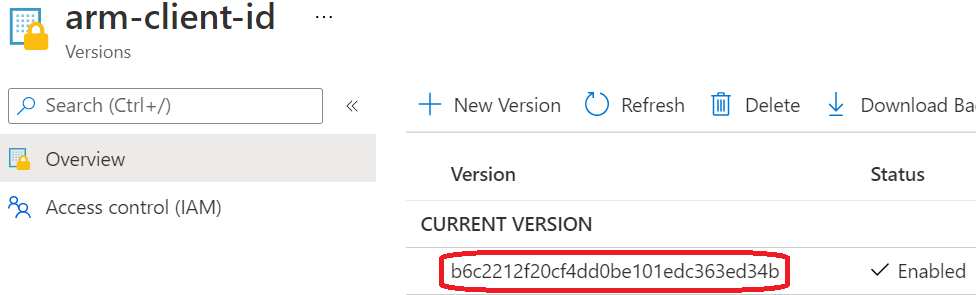

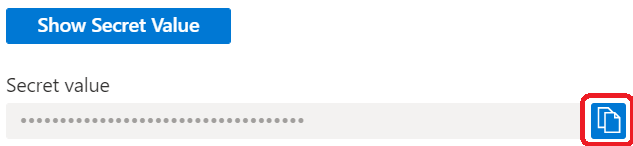

Click on the "arm-client-id" secret, and then click on the current version.

-

Click on the copy button next to the "Secret value".

-

Switch back to the browser tab with GitHub and paste the secret into the secret value. Then click the "Add secret" button.

-

GitHub will return to the list of secrets, which now includes the "DMW_ARM_CLIENT_ID" secret. Click the "New repository secret" again to add the "DMW_ARM_CLIENT_SECRET".

-

Return to the browser tab with the Azure portal to get the "arm-client-secret" secret, then return to GitHub and enter its value for the "DMW_ARM_CLIENT_SECRET" secret, and finally save the GitHub secret.

-

Next, to populate the "DMW_ARM_SUBSCRIPTION_ID" and "DMW_ARM_TENANT_ID" secrets, let's use another common Azure CLI command. To get the subscription ID and tenant ID, return to the Azure portal and open Cloud Shell. Then execute the following command:

az account show. The command will return a JSON object. The "id" property is the subscription ID and the "tenantId" property is the tenant ID. Copy their values to add the two new GitHub secrets. (Do NOT copy the quotes!)

In this portion of the lab we'll first create a .tfvars file with the detailed configuration of our deployment, and then we'll execute the Terraform deployment using a GitHub workflow. (Read here for details on .tfvars files.)

- Open Cloud Shell if it's not already open.

- Open the editor, then expand the `deploying-modern-workloads` folder and open the `variables.tf` file. Note that `hub_resource_group`, `spoke_resource_group` and `prefix` are all required variables because they do not have default values. The `location` variable is not required because it has a default value of `eastus2`.

- We are going to create a variable definitions file (a `.tfvars` file) that contains values for the variables that will shape our deployment.

- At the Cloud Shell command line, switch to the `deploying-modern-workloads` folder using `cd deploying-modern-workloads`. The command prompt should now be "[your-name]@Azure:~/deploying-modern-workloads$".

- At the command line, enter `code lab.tfvars`. This should open the editor with an empty file. The top bar above the editor should display "lab.tfvars".

- Enter the following in the editor, one assignment per line, replacing "[date]" and "[name-prefix]" as needed:
  - `hub_resource_group = "dmw-lab-[date]-[name-prefix]-hub"`
  - `spoke_resource_group = "dmw-lab-[date]-[name-prefix]-spoke"`
  - `prefix = "[name-prefix]"`

  For example:
  - `hub_resource_group = "dmw-lab-062521-jsmi-hub"`
  - `spoke_resource_group = "dmw-lab-062521-jsmi-spoke"`
  - `prefix = "jsmi"`

- Hit Ctrl-S to save your changes and then close the editor (Ctrl-Q).

- Before you can commit your new file to the GitHub repo, you'll need to configure some git settings. At the command line, enter the following 2 commands:
  - `git config --global user.email "[name]@insightworkshop.us"`
  - `git config --global user.name "[Your Name]"`

  For example, `git config --global user.name "John Smith"`, with the email command using your own `@insightworkshop.us` address.

- Stage your changes with the following command: `git add lab.tfvars`

- Commit your changes to your local repository with: `git commit -a -m "Added .tfvars file"`. Note that you are currently working directly on the "main" branch, which is not normally recommended. (But we won't tell anyone.)

- Finally, push your commit to your GitHub repo with: `git push`. If prompted, enter your GitHub username, and use your Personal Access Token as the password.

In this final portion of the lab we will leverage the GitHub workflows you reviewed above to deploy Azure resources to the lab environment.

-

Open another browser tab (if needed) to navigate to your GitHub account where you forked the lab repo, and navigate to the lab repo. The URL will be

https://github.com/[your-account-name]/deploying-modern-workloads. -

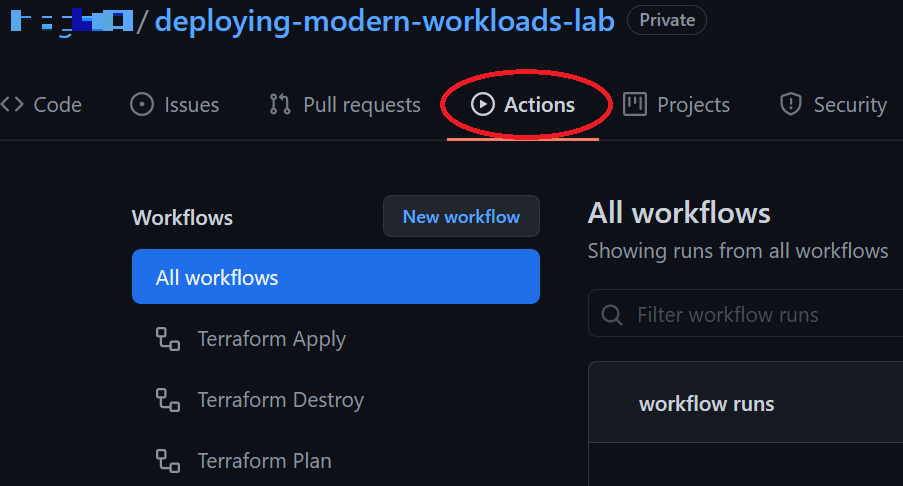

Click on the "Actions" tab.

-

Recall that in this lab's first step you reviewed the code in

terraform-apply.yml,terraform-destroy.ymlandterraform-plan.yml. Now you can see thenameproperty of each workflow listed below the blue "All workflows" button. -

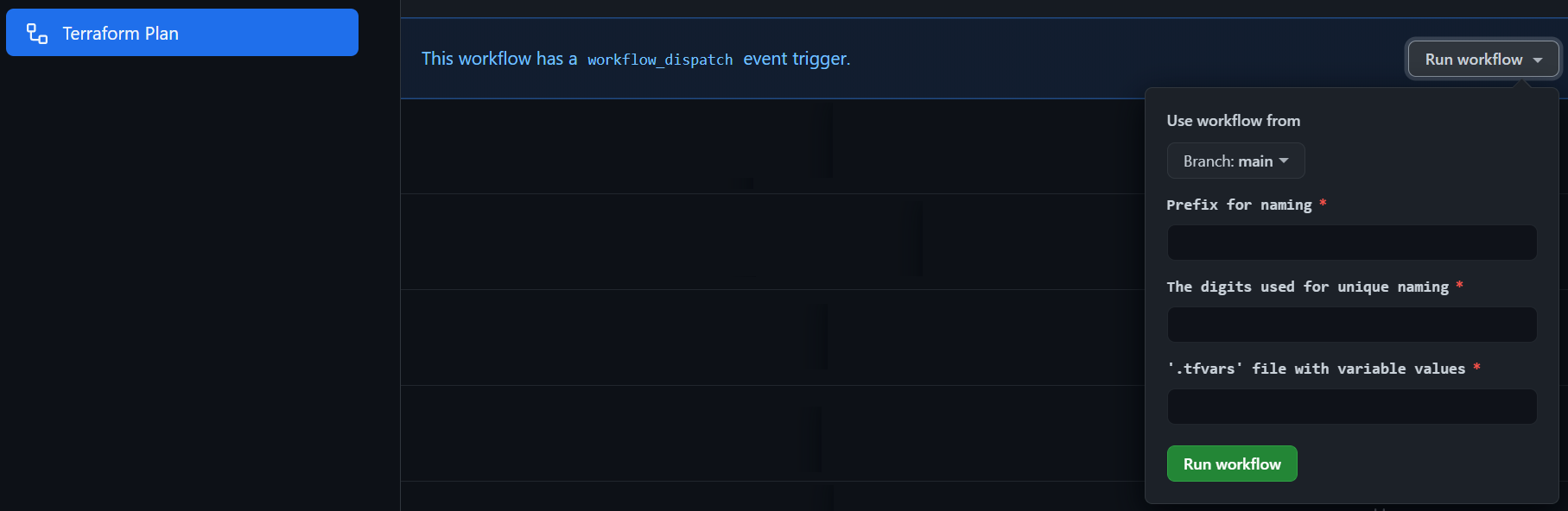

Click on the "Terraform Plan" workflow and then click on the "Run workflow" button.

-

The workflow will prompt for 3 inputs. Enter your name prefix (e.g., "jsmi"), the date digits (e.g., "062521") and the name of your

.tfvarsfile (i.e., "lab.tfvars"). Then click the "Run workflow" button. -

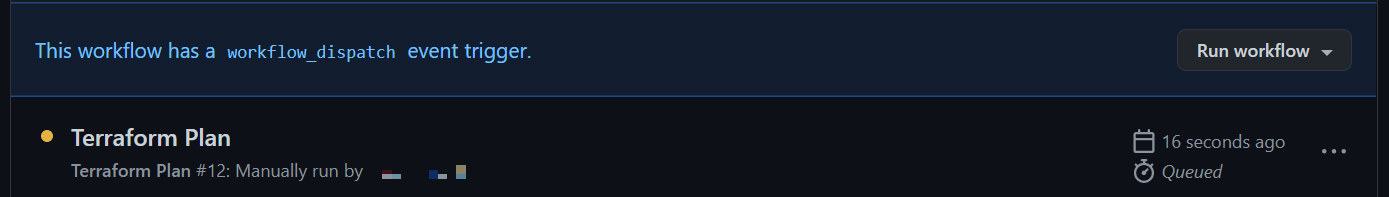

After a few seconds the workflow should display in the list of workflow runs.

-

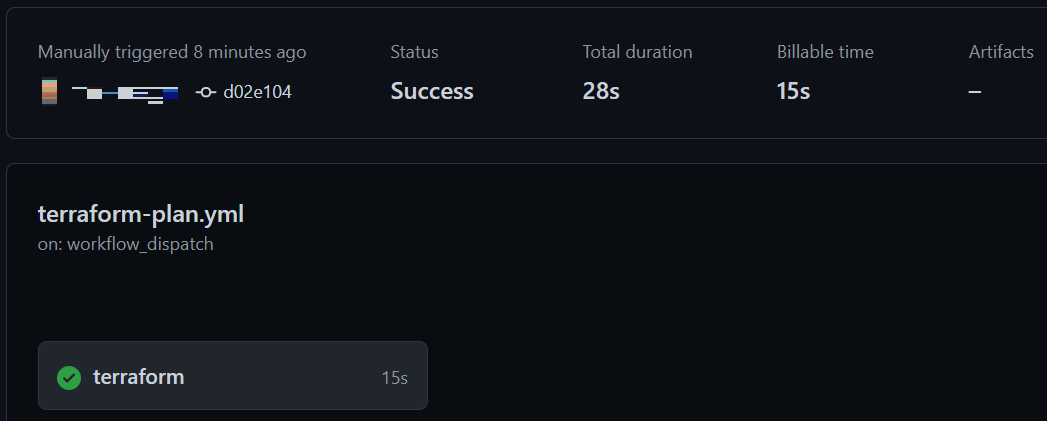

When the workflow run completes it will display either success (

) or fail (

) or fail ( ).

). -

Whether the workflow succeeded or failed, click the workflow name in the list of runs to view the execution summary.

-

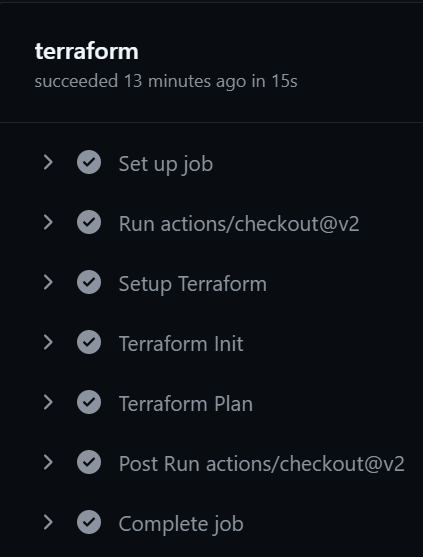

To view the run details click the "terraform" job name in the lower left-hand corner of the summary.

-

You can expand any of the individual steps, but be sure to expand the "Terraform Plan" step to see the plan Terraform generated.

-

Next, click on the "Actions" tab to return to the list of workflows and run the "Terraform Apply" workflow. When the "apply" runs successfully, go to the Azure portal to view the newly added resources in your hub and spoke Resource Groups. Also be sure to view the details of the workflow run in GitHub.

-

After you've verified that your Azure resources were deployed successfully, let's tear down that deployment. To do that, click the "Actions" button again to return to the list of workflows and run the "Terraform Destroy" workflow. Once it completes successfully verify that the resources have been destroyed in Azure. Also view the details of the workflow run in GitHub.

You've done it! You've completed the labs in the "Deploying Modern Workloads" workshop! We sincerely hope you learned a lot and enjoyed the experience.

You have the lab code and all the references to helpful Azure, Terraform and GitHub documentation. We hope that will help kickstart your automation efforts. Please reach out if we can ever help accelerate your automation journey!