@@ -105,18 +103,17 @@ XTuner 是一个高效、灵活、全能的轻量化大模型微调工具库。


@@ -149,6 +146,9 @@ XTuner 是一个高效、灵活、全能的轻量化大模型微调工具库。

QLoRA

LoRA

Full-parameter fine-tuning

+ DPO

+ ORPO

+ Reward Model

@@ -186,7 +186,7 @@ XTuner 是一个高效、灵活、全能的轻量化大模型微调工具库。

pip install -e '.[all]'

```

-### Fine-tune [](https://colab.research.google.com/drive/1QAEZVBfQ7LZURkMUtaq0b-5nEQII9G9Z?usp=sharing)

+### Fine-tune

XTuner supports fine-tuning large language models. For a guide to dataset preprocessing, see the [documentation](./docs/zh_cn/user_guides/dataset_prepare.md).

@@ -209,14 +209,14 @@ XTuner 支持微调大语言模型。数据集预处理指南请查阅[文档](.

xtuner train ${CONFIG_NAME_OR_PATH}

```

- For example, we can use the QLoRA algorithm to fine-tune InternLM2-Chat-7B on the oasst1 dataset:

+ For example, we can use the QLoRA algorithm to fine-tune InternLM2.5-Chat-7B on the oasst1 dataset:

```shell

# Single GPU

- xtuner train internlm2_chat_7b_qlora_oasst1_e3 --deepspeed deepspeed_zero2

+ xtuner train internlm2_5_chat_7b_qlora_oasst1_e3 --deepspeed deepspeed_zero2

# Multiple GPUs

- (DIST) NPROC_PER_NODE=${GPU_NUM} xtuner train internlm2_chat_7b_qlora_oasst1_e3 --deepspeed deepspeed_zero2

- (SLURM) srun ${SRUN_ARGS} xtuner train internlm2_chat_7b_qlora_oasst1_e3 --launcher slurm --deepspeed deepspeed_zero2

+ (DIST) NPROC_PER_NODE=${GPU_NUM} xtuner train internlm2_5_chat_7b_qlora_oasst1_e3 --deepspeed deepspeed_zero2

+ (SLURM) srun ${SRUN_ARGS} xtuner train internlm2_5_chat_7b_qlora_oasst1_e3 --launcher slurm --deepspeed deepspeed_zero2

```

- `--deepspeed` enables [DeepSpeed](https://github.com/microsoft/DeepSpeed) 🚀 to optimize the training process. XTuner has several built-in strategies, including ZeRO-1, ZeRO-2, and ZeRO-3. To disable this feature, simply remove this argument.
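For orientation, the sketch below builds and prints a minimal DeepSpeed config of the kind a ZeRO-2 strategy refers to. The keys (`train_micro_batch_size_per_gpu`, `zero_optimization.stage`, `overlap_comm`, `bf16`) come from DeepSpeed's JSON config schema. Every value is an illustrative assumption, and XTuner's built-in `deepspeed_zero2` strategy may set different fields.

```python
import json

# Illustrative ZeRO stage-2 settings. Keys follow DeepSpeed's config schema;
# the values are assumptions, not a copy of XTuner's built-in deepspeed_zero2.
zero2_config = {
    "train_micro_batch_size_per_gpu": 1,
    "gradient_accumulation_steps": 16,
    "gradient_clipping": 1.0,
    "zero_optimization": {
        "stage": 2,            # partition optimizer states and gradients across GPUs
        "overlap_comm": True,  # overlap gradient communication with computation
    },
    "bf16": {"enabled": True},  # mixed precision; needs bf16-capable GPUs
}

print(json.dumps(zero2_config, indent=2))
```

ZeRO-1 would shard only optimizer states, and ZeRO-3 would additionally shard the parameters themselves. Higher stages trade extra communication for lower per-GPU memory.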

@@ -229,7 +229,7 @@ XTuner 支持微调大语言模型。数据集预处理指南请查阅[文档](.

xtuner convert pth_to_hf ${CONFIG_NAME_OR_PATH} ${PTH} ${SAVE_PATH}

```

-### Chat [](https://colab.research.google.com/drive/144OuTVyT_GvFyDMtlSlTzcxYIfnRsklq?usp=sharing)

+### Chat

XTuner provides tools for chatting with large language models.

@@ -239,16 +239,10 @@ xtuner chat ${NAME_OR_PATH_TO_LLM} --adapter {NAME_OR_PATH_TO_ADAPTER} [optional

For example:

-Chat with InternLM2-Chat-7B using the oasst1 adapter:

-

-```shell

-xtuner chat internlm/internlm2-chat-7b --adapter xtuner/internlm2-chat-7b-qlora-oasst1 --prompt-template internlm2_chat

-```

-

-Chat with LLaVA-InternLM2-7B:

+Chat with InternLM2.5-Chat-7B:

```shell

-xtuner chat internlm/internlm2-chat-7b --visual-encoder openai/clip-vit-large-patch14-336 --llava xtuner/llava-internlm2-7b --prompt-template internlm2_chat --image $IMAGE_PATH

+xtuner chat internlm/internlm2_5-chat-7b --prompt-template internlm2_chat

```

For more examples, see the [documentation](./docs/zh_cn/user_guides/chat.md).

@@ -289,6 +283,7 @@ xtuner chat internlm/internlm2-chat-7b --visual-encoder openai/clip-vit-large-pa

## 🎖️ Acknowledgements

- [Llama 2](https://github.com/facebookresearch/llama)

+- [DeepSpeed](https://github.com/microsoft/DeepSpeed)

- [QLoRA](https://github.com/artidoro/qlora)

- [LMDeploy](https://github.com/InternLM/lmdeploy)

- [LLaVA](https://github.com/haotian-liu/LLaVA)

diff --git a/docs/en/.readthedocs.yaml b/docs/en/.readthedocs.yaml

new file mode 100644

index 000000000..67b9c44e7

--- /dev/null

+++ b/docs/en/.readthedocs.yaml

@@ -0,0 +1,16 @@

+version: 2

+

+build:
+  os: ubuntu-22.04
+  tools:
+    python: "3.8"
+
+formats:
+  - epub
+
+python:
+  install:
+    - requirements: requirements/docs.txt
+
+sphinx:
+  configuration: docs/en/conf.py

diff --git a/docs/en/Makefile b/docs/en/Makefile

new file mode 100644

index 000000000..d4bb2cbb9

--- /dev/null

+++ b/docs/en/Makefile

@@ -0,0 +1,20 @@

+# Minimal makefile for Sphinx documentation

+#

+

+# You can set these variables from the command line, and also

+# from the environment for the first two.

+SPHINXOPTS ?=

+SPHINXBUILD ?= sphinx-build

+SOURCEDIR = .

+BUILDDIR = _build

+

+# Put it first so that "make" without argument is like "make help".

+help:

+ @$(SPHINXBUILD) -M help "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

+

+.PHONY: help Makefile

+

+# Catch-all target: route all unknown targets to Sphinx using the new

+# "make mode" option. $(O) is meant as a shortcut for $(SPHINXOPTS).

+%: Makefile

+ @$(SPHINXBUILD) -M $@ "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

diff --git a/docs/en/_static/css/readthedocs.css b/docs/en/_static/css/readthedocs.css

new file mode 100644

index 000000000..34ed824ba

--- /dev/null

+++ b/docs/en/_static/css/readthedocs.css

@@ -0,0 +1,6 @@

+.header-logo {

+ background-image: url("../image/logo.png");

+ background-size: 177px 40px;

+ height: 40px;

+ width: 177px;

+}

diff --git a/docs/en/_static/image/logo.png b/docs/en/_static/image/logo.png

new file mode 100644

index 000000000..0d6b754c9

Binary files /dev/null and b/docs/en/_static/image/logo.png differ

diff --git a/docs/en/acceleration/benchmark.rst b/docs/en/acceleration/benchmark.rst

new file mode 100644

index 000000000..813fc7d5a

--- /dev/null

+++ b/docs/en/acceleration/benchmark.rst

@@ -0,0 +1,2 @@

+Benchmark

+=========

diff --git a/docs/en/acceleration/deepspeed.rst b/docs/en/acceleration/deepspeed.rst

new file mode 100644

index 000000000..e3dcaccc0

--- /dev/null

+++ b/docs/en/acceleration/deepspeed.rst

@@ -0,0 +1,2 @@

+DeepSpeed

+=========

diff --git a/docs/en/acceleration/flash_attn.rst b/docs/en/acceleration/flash_attn.rst

new file mode 100644

index 000000000..a080373ef

--- /dev/null

+++ b/docs/en/acceleration/flash_attn.rst

@@ -0,0 +1,2 @@

+Flash Attention

+===============

diff --git a/docs/en/acceleration/hyper_parameters.rst b/docs/en/acceleration/hyper_parameters.rst

new file mode 100644

index 000000000..04b82b7e6

--- /dev/null

+++ b/docs/en/acceleration/hyper_parameters.rst

@@ -0,0 +1,2 @@

+HyperParameters

+===============

diff --git a/docs/en/acceleration/length_grouped_sampler.rst b/docs/en/acceleration/length_grouped_sampler.rst

new file mode 100644

index 000000000..2fc723212

--- /dev/null

+++ b/docs/en/acceleration/length_grouped_sampler.rst

@@ -0,0 +1,2 @@

+Length Grouped Sampler

+======================

diff --git a/docs/en/acceleration/pack_to_max_length.rst b/docs/en/acceleration/pack_to_max_length.rst

new file mode 100644

index 000000000..aaddd36aa

--- /dev/null

+++ b/docs/en/acceleration/pack_to_max_length.rst

@@ -0,0 +1,2 @@

+Pack to Max Length

+==================

diff --git a/docs/en/acceleration/train_extreme_long_sequence.rst b/docs/en/acceleration/train_extreme_long_sequence.rst

new file mode 100644

index 000000000..d326bd690

--- /dev/null

+++ b/docs/en/acceleration/train_extreme_long_sequence.rst

@@ -0,0 +1,2 @@

+Train Extreme Long Sequence

+===========================

diff --git a/docs/en/acceleration/train_large_scale_dataset.rst b/docs/en/acceleration/train_large_scale_dataset.rst

new file mode 100644

index 000000000..026ce9dae

--- /dev/null

+++ b/docs/en/acceleration/train_large_scale_dataset.rst

@@ -0,0 +1,2 @@

+Train Large-scale Dataset

+=========================

diff --git a/docs/en/acceleration/varlen_flash_attn.rst b/docs/en/acceleration/varlen_flash_attn.rst

new file mode 100644

index 000000000..2fad725f3

--- /dev/null

+++ b/docs/en/acceleration/varlen_flash_attn.rst

@@ -0,0 +1,2 @@

+Varlen Flash Attention

+======================

diff --git a/docs/en/chat/agent.md b/docs/en/chat/agent.md

new file mode 100644

index 000000000..1da3ebc10

--- /dev/null

+++ b/docs/en/chat/agent.md

@@ -0,0 +1 @@

+# Chat with Agent

diff --git a/docs/en/chat/llm.md b/docs/en/chat/llm.md

new file mode 100644

index 000000000..5c556180c

--- /dev/null

+++ b/docs/en/chat/llm.md

@@ -0,0 +1 @@

+# Chat with LLM

diff --git a/docs/en/chat/lmdeploy.md b/docs/en/chat/lmdeploy.md

new file mode 100644

index 000000000..f4114a3a5

--- /dev/null

+++ b/docs/en/chat/lmdeploy.md

@@ -0,0 +1 @@

+# Accelerate chat by LMDeploy

diff --git a/docs/en/chat/vlm.md b/docs/en/chat/vlm.md

new file mode 100644

index 000000000..54101dcbc

--- /dev/null

+++ b/docs/en/chat/vlm.md

@@ -0,0 +1 @@

+# Chat with VLM

diff --git a/docs/en/conf.py b/docs/en/conf.py

new file mode 100644

index 000000000..457ca5232

--- /dev/null

+++ b/docs/en/conf.py

@@ -0,0 +1,109 @@

+# Configuration file for the Sphinx documentation builder.

+#

+# This file only contains a selection of the most common options. For a full

+# list see the documentation:

+# https://www.sphinx-doc.org/en/master/usage/configuration.html

+

+# -- Path setup --------------------------------------------------------------

+

+# If extensions (or modules to document with autodoc) are in another directory,

+# add these directories to sys.path here. If the directory is relative to the

+# documentation root, use os.path.abspath to make it absolute, like shown here.

+

+import os

+import sys

+

+from sphinx.ext import autodoc

+

+sys.path.insert(0, os.path.abspath('../..'))

+

+# -- Project information -----------------------------------------------------

+

+project = 'XTuner'

+copyright = '2024, XTuner Contributors'

+author = 'XTuner Contributors'

+

+# Read __version__ from xtuner/version.py
+version_file = '../../xtuner/version.py'
+with open(version_file) as f:
+    exec(compile(f.read(), version_file, 'exec'))

+__version__ = locals()['__version__']

+# The short X.Y version

+version = __version__

+# The full version, including alpha/beta/rc tags

+release = __version__

+

+# -- General configuration ---------------------------------------------------

+

+# Add any Sphinx extension module names here, as strings. They can be

+# extensions coming with Sphinx (named 'sphinx.ext.*') or your custom

+# ones.

+extensions = [

+ 'sphinx.ext.napoleon',

+ 'sphinx.ext.viewcode',

+ 'sphinx.ext.intersphinx',

+ 'sphinx_copybutton',

+ 'sphinx.ext.autodoc',

+ 'sphinx.ext.autosummary',

+ 'myst_parser',

+ 'sphinxarg.ext',

+]

+

+# Add any paths that contain templates here, relative to this directory.

+templates_path = ['_templates']

+

+# List of patterns, relative to source directory, that match files and

+# directories to ignore when looking for source files.

+# This pattern also affects html_static_path and html_extra_path.

+exclude_patterns = ['_build', 'Thumbs.db', '.DS_Store']

+

+# Exclude the prompt "$" when copying code

+copybutton_prompt_text = r'\$ '

+copybutton_prompt_is_regexp = True

+

+language = 'en'

+

+# -- Options for HTML output -------------------------------------------------

+

+# The theme to use for HTML and HTML Help pages. See the documentation for

+# a list of builtin themes.

+#

+html_theme = 'sphinx_book_theme'

+html_logo = '_static/image/logo.png'

+html_theme_options = {

+ 'path_to_docs': 'docs/en',

+ 'repository_url': 'https://github.com/InternLM/xtuner',

+ 'use_repository_button': True,

+}

+# Add any paths that contain custom static files (such as style sheets) here,

+# relative to this directory. They are copied after the builtin static files,

+# so a file named "default.css" will overwrite the builtin "default.css".

+# html_static_path = ['_static']

+

+# Mock out external dependencies here.

+autodoc_mock_imports = [

+ 'cpuinfo',

+ 'torch',

+ 'transformers',

+ 'psutil',

+ 'prometheus_client',

+ 'sentencepiece',

+ 'vllm.cuda_utils',

+ 'vllm._C',

+ 'numpy',

+ 'tqdm',

+]

+

+

+class MockedClassDocumenter(autodoc.ClassDocumenter):
+    """Remove note about base class when a class is derived from object."""
+
+    def add_line(self, line: str, source: str, *lineno: int) -> None:
+        if line == '   Bases: :py:class:`object`':
+            return
+        super().add_line(line, source, *lineno)

+

+

+autodoc.ClassDocumenter = MockedClassDocumenter

+

+navigation_with_keys = False

diff --git a/docs/en/dpo/modify_settings.md b/docs/en/dpo/modify_settings.md

new file mode 100644

index 000000000..d78cc40e6

--- /dev/null

+++ b/docs/en/dpo/modify_settings.md

@@ -0,0 +1,83 @@

+## Modify DPO Training Configuration

+

+This section introduces config parameters related to DPO (Direct Preference Optimization) training. For more details on XTuner config files, please refer to [Modifying Training Configuration](https://xtuner.readthedocs.io/zh-cn/latest/training/modify_settings.html).

+

+### Loss Function

+

+In DPO training, you can choose different types of loss functions according to your needs. XTuner provides various loss function options, such as `sigmoid`, `hinge`, `ipo`, etc. You can select the desired loss function type by setting the `dpo_loss_type` parameter.

+

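+Additionally, the `loss_beta` parameter acts as the temperature coefficient of the loss function: it controls how strongly the policy is kept close to the reference model. The `label_smoothing` parameter applies label smoothing to the preference labels, which can make training more tolerant of noisy preference data; `0.0` disables it.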

+

+```python

+#######################################################################

+# PART 1 Settings #

+#######################################################################

+# Model

+dpo_loss_type = 'sigmoid' # One of ['sigmoid', 'hinge', 'ipo', 'kto_pair', 'sppo_hard', 'nca_pair', 'robust']

+loss_beta = 0.1

+label_smoothing = 0.0

+```
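To show what `dpo_loss_type`, `loss_beta`, and `label_smoothing` change, here is a scalar sketch of three of the listed loss types for one preference pair. It uses the formulas commonly used for DPO, hinge, and IPO (for example in Hugging Face TRL). The function and argument names are hypothetical. It is not XTuner's implementation, which operates on batched tensors and also covers the other loss types. The inputs are sequence log-probabilities; whether they are summed or averaged per token is left to the caller.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def preference_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    loss_type: str = "sigmoid",
    beta: float = 0.1,
    label_smoothing: float = 0.0,
) -> float:
    """Hypothetical per-pair loss from sequence log-probabilities."""
    # How much more the policy prefers chosen over rejected, measured
    # relative to the reference model's own preference.
    logits = (policy_chosen_logp - policy_rejected_logp) - (
        ref_chosen_logp - ref_rejected_logp
    )
    if loss_type == "sigmoid":
        # Standard DPO; label_smoothing > 0 mixes in the loss for the
        # flipped label, as in conservative DPO.
        return (-log_sigmoid(beta * logits) * (1 - label_smoothing)
                - log_sigmoid(-beta * logits) * label_smoothing)
    if loss_type == "hinge":
        return max(0.0, 1.0 - beta * logits)
    if loss_type == "ipo":
        # IPO regresses the margin towards 1 / (2 * beta) instead of
        # pushing it towards infinity.
        return (logits - 1.0 / (2.0 * beta)) ** 2
    raise ValueError(f"unsupported loss_type: {loss_type!r}")
```

When the policy and reference agree (`logits == 0`), the sigmoid loss equals log 2. It drops as the policy favors the chosen response more than the reference does.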

+

+### Modifying the Model

+

+Users can modify `pretrained_model_name_or_path` to change the pretrained model.

+

+```python

+#######################################################################

+# PART 1 Settings #

+#######################################################################

+# Model

+pretrained_model_name_or_path = 'internlm/internlm2-chat-1_8b-sft'

+```

+

+### Training Data

+

+In DPO training, you can specify the maximum number of tokens for a single sample sequence using the `max_length` parameter. XTuner will automatically truncate or pad the data.

+

+```python

+# Data

+max_length = 2048

+```

+

+In the configuration file, we use the `train_dataset` field to specify the training dataset. You can specify the dataset loading method using the `dataset` field and the dataset mapping function using the `dataset_map_fn` field.

+

+```python

+#######################################################################

+# PART 3 Dataset & Dataloader #

+#######################################################################

+sampler = SequenceParallelSampler \

+ if sequence_parallel_size > 1 else DefaultSampler

+

+train_dataset = dict(

+ type=build_preference_dataset,

+ dataset=dict(type=load_dataset, path='mlabonne/orpo-dpo-mix-40k'),

+ tokenizer=tokenizer,

+ max_length=max_length,

+ dataset_map_fn=orpo_dpo_mix_40k_map_fn,

+ is_dpo=True,

+ is_reward=False,

+ reward_token_id=-1,

+ num_proc=32,

+ use_varlen_attn=use_varlen_attn,

+ max_packed_length=max_packed_length,

+ shuffle_before_pack=True,

+)

+

+train_dataloader = dict(

+ batch_size=batch_size,

+ num_workers=dataloader_num_workers,

+ dataset=train_dataset,

+ sampler=dict(type=sampler, shuffle=True),

+ collate_fn=dict(

+ type=preference_collate_fn, use_varlen_attn=use_varlen_attn))

+```

+

+In the above configuration, we use `load_dataset` to load the `mlabonne/orpo-dpo-mix-40k` dataset from Hugging Face and use `orpo_dpo_mix_40k_map_fn` as the dataset mapping function.
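To make the role of `dataset_map_fn` concrete, here is a hypothetical mapping function in the spirit of `orpo_dpo_mix_40k_map_fn`. It is not the real one. It assumes each raw example stores `chosen` and `rejected` as full conversations, i.e. lists of `{"role", "content"}` messages that share every turn except the last. It returns the shared prompt plus the two competing final replies. The output keys (`prompt`, `chosen`, `rejected`) are an assumption; check the real function before writing your own.

```python
from typing import Any, Dict, List

Message = Dict[str, str]


def example_preference_map_fn(example: Dict[str, Any]) -> Dict[str, List[Message]]:
    """Hypothetical map fn: split a chosen/rejected conversation pair into
    the shared prompt turns and the two competing final replies."""
    chosen: List[Message] = example["chosen"]
    rejected: List[Message] = example["rejected"]
    # Both conversations are assumed to share every turn except the last one.
    prompt = chosen[:-1]
    if prompt != rejected[:-1]:
        raise ValueError("chosen and rejected must share the same prompt turns")
    return {"prompt": prompt, "chosen": chosen[-1:], "rejected": rejected[-1:]}
```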

+

+For more information on handling datasets and writing dataset mapping functions, please refer to the [Preference Dataset Section](../reward_model/preference_data.md).

+

+### Accelerating Training

+

+When training with preference data, we recommend enabling the [Variable-Length Attention Mechanism](https://xtuner.readthedocs.io/zh-cn/latest/acceleration/varlen_flash_attn.html). It avoids the memory wasted on padding that arises because the chosen and rejected samples of a preference pair differ in length. Enable it by setting `use_varlen_attn=True`.
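A minimal plain-Python sketch of the idea follows. Each chosen and rejected sample is appended to one flat sequence with no padding. The sample boundaries are recorded as cumulative sequence lengths (`cu_seqlens`), the boundary format that FlashAttention's variable-length kernels use to keep attention from crossing samples. The function name is hypothetical, and this is not XTuner's data pipeline.

```python
from itertools import accumulate
from typing import List, Tuple


def pack_preference_pairs(pairs: List[Tuple[List[int], List[int]]]):
    """Hypothetical packer for (chosen, rejected) token-id lists.

    Returns the flat input ids, cumulative sequence lengths starting at 0,
    and position ids that restart at 0 for every packed sample.
    """
    input_ids: List[int] = []
    lengths: List[int] = []
    position_ids: List[int] = []
    for chosen, rejected in pairs:
        for seq in (chosen, rejected):
            input_ids.extend(seq)
            lengths.append(len(seq))
            position_ids.extend(range(len(seq)))
    cu_seqlens = [0] + list(accumulate(lengths))
    return input_ids, cu_seqlens, position_ids
```

For `[([1, 2, 3], [4, 5]), ([6], [7, 8, 9, 10])]`, this yields `cu_seqlens == [0, 3, 5, 6, 10]`. No pad tokens are stored, however uneven the chosen and rejected lengths are.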

+

+XTuner also supports many training acceleration methods. For details on how to use them, please refer to the [Acceleration Strategies Section](https://xtuner.readthedocs.io/zh-cn/latest/acceleration/hyper_parameters.html).

diff --git a/docs/en/dpo/overview.md b/docs/en/dpo/overview.md

new file mode 100644

index 000000000..0c20946e3

--- /dev/null

+++ b/docs/en/dpo/overview.md

@@ -0,0 +1,27 @@

+## Introduction to DPO

+

+### Overview

+

+DPO (Direct Preference Optimization) is a method for training large language models to directly optimize for human preferences. Unlike traditional reinforcement learning methods, DPO optimizes the model directly on human preference data, improving the quality of generated content so that it better aligns with human preferences. DPO also removes the need to train a Reward Model and a Critic Model. This avoids the complexity of reinforcement learning algorithms, reduces training overhead, and improves training efficiency.
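For reference, the objective that standard DPO minimizes, as given in the original DPO paper, is shown below. Here x is the prompt, y_w and y_l are the chosen and rejected responses, π_ref is the frozen reference model, σ is the logistic function, and β is the temperature coefficient. We assume β corresponds to XTuner's `loss_beta` setting.

```latex
\mathcal{L}_{\mathrm{DPO}}(\pi_\theta; \pi_{\mathrm{ref}})
  = -\,\mathbb{E}_{(x, y_w, y_l) \sim \mathcal{D}}
    \left[ \log \sigma\!\left(
      \beta \log \frac{\pi_\theta(y_w \mid x)}{\pi_{\mathrm{ref}}(y_w \mid x)}
      - \beta \log \frac{\pi_\theta(y_l \mid x)}{\pi_{\mathrm{ref}}(y_l \mid x)}
    \right) \right]
```

The log-ratio terms act as an implicit reward, which is why no separate reward model has to be trained.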

+

+Several follow-up works have proposed improvements to DPO's loss function. Besides DPO, XTuner also implements loss functions from papers such as [Identity Preference Optimization (IPO)](https://huggingface.co/papers/2310.12036). To use these algorithms, please refer to the [Modify DPO Settings](./modify_settings.md) section. We also provide some [example configurations](https://github.com/InternLM/xtuner/tree/main/xtuner/configs/dpo) for reference.

+

+Besides DPO, there are alignment algorithms such as [ORPO](https://arxiv.org/abs/2403.07691) that do not require a reference model. ORPO uses the odds ratio to penalize rejected samples during training, so the model adapts more effectively to the chosen samples. Because ORPO does not depend on a reference model, training is simpler and more efficient. Training ORPO in XTuner is very similar to training DPO, and we provide some [example configurations](https://github.com/InternLM/xtuner/tree/main/xtuner/configs/orpo). Users can follow the DPO tutorial to modify the configuration.
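The scalar sketch below shows the odds-ratio term as described in the ORPO paper. The odds of a response are p / (1 - p), where p comes from the length-normalized log-likelihood. The loss pushes the log odds ratio of chosen over rejected upward. Only the trained model's own probabilities appear, which is why no reference model is needed. The function names are hypothetical, and the weighting coefficient `lam` is left to the caller rather than given a default.

```python
import math


def neg_log_sigmoid(z: float) -> float:
    """Numerically stable -log(sigmoid(z))."""
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


def log_odds(avg_logp: float) -> float:
    """log(p / (1 - p)) from log p; requires avg_logp < 0, i.e. p < 1."""
    return avg_logp - math.log1p(-math.exp(avg_logp))


def orpo_odds_ratio_loss(chosen_avg_logp: float, rejected_avg_logp: float) -> float:
    """Odds-ratio term of ORPO for a single preference pair.

    Both inputs are length-normalized (mean per-token) log-probabilities of
    the responses under the model being trained; no reference model is used.
    """
    log_odds_ratio = log_odds(chosen_avg_logp) - log_odds(rejected_avg_logp)
    return neg_log_sigmoid(log_odds_ratio)


def orpo_loss(chosen_nll: float, chosen_avg_logp: float,
              rejected_avg_logp: float, lam: float) -> float:
    """SFT loss on the chosen response plus the weighted odds-ratio term."""
    return chosen_nll + lam * orpo_odds_ratio_loss(chosen_avg_logp, rejected_avg_logp)
```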

+

+### Features of DPO Training in XTuner

+

+DPO training in XTuner offers the following significant advantages:

+

+1. **Latest Algorithms**: In addition to standard DPO, XTuner supports improved DPO variants and memory-efficient algorithms such as ORPO that do not rely on a reference model.

+

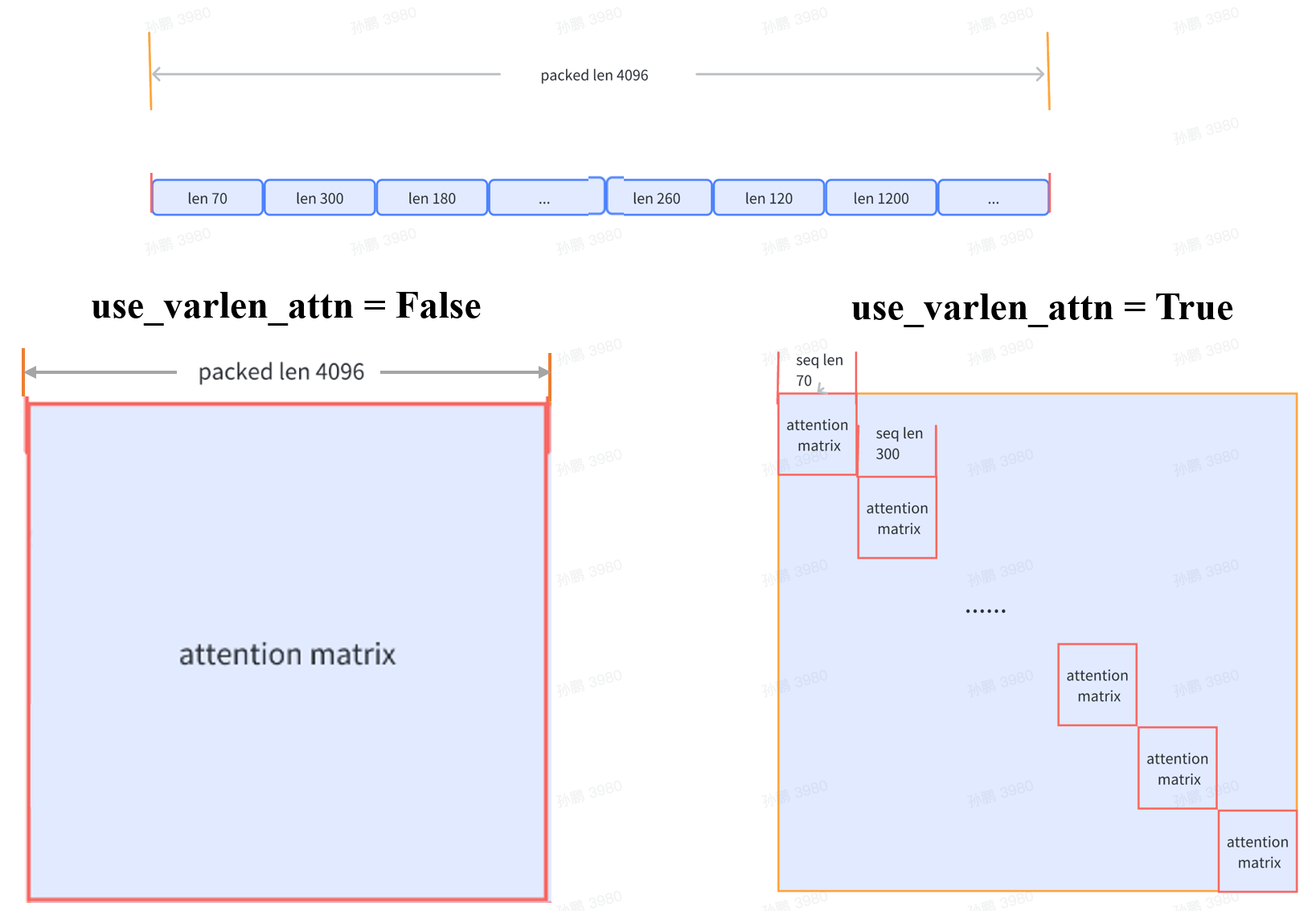

+2. **Reducing Memory Waste**: Chosen and rejected responses in preference datasets differ in length, so padding them to a common length during data concatenation wastes memory. XTuner uses the variable-length attention feature of FlashAttention-2 to pack preference pairs into the same sequence during training, which significantly reduces the memory spent on padding tokens. This improves memory efficiency and allows training larger models, or processing more data, on the same hardware.

+

+

+

+3. **Efficient Training**: With XTuner's QLoRA training, the reference model can be obtained from the policy model simply by disabling its LoRA adapter. No separate copy of the reference model weights has to be kept in memory, which significantly reduces the cost of DPO training.

+

+4. **Long Text Training**: With XTuner's sequence parallel functionality, long text data can be trained efficiently.
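A back-of-the-envelope sketch of the padding problem described in point 2: in a right-padded batch every sequence is padded to the longest one, whereas packing stores only the real tokens. The helper and the example lengths are made up for illustration. The helper counts token slots only, not the full activation memory.

```python
from typing import List


def padding_overhead(lengths: List[int]) -> float:
    """Fraction of a right-padded batch that consists of padding tokens.

    Padding to the longest sequence stores len(lengths) * max(lengths)
    tokens; packing the same sequences back to back stores sum(lengths).
    """
    padded = len(lengths) * max(lengths)
    return 1 - sum(lengths) / padded
```

For two hypothetical preference pairs with chosen/rejected lengths of 900/300 and 200/600 tokens, `padding_overhead([900, 300, 200, 600])` is 4/9. Roughly 44% of the padded batch would be padding that packing avoids.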

+

+### Getting Started

+

+Refer to the [Quick Start Guide](./quick_start.md) to understand the basic concepts. For more information on configuring training parameters, please see the [Modify DPO Settings](./modify_settings.md) section.

diff --git a/docs/en/dpo/quick_start.md b/docs/en/dpo/quick_start.md

new file mode 100644

index 000000000..19fffbf8b

--- /dev/null

+++ b/docs/en/dpo/quick_start.md

@@ -0,0 +1,71 @@

+## Quick Start with DPO

+

+In this section, we will introduce how to use XTuner to train a 1.8B DPO (Direct Preference Optimization) model to help you get started quickly.

+

+### Preparing Pretrained Model Weights

+

+We use [InternLM2-chat-1.8b-sft](https://huggingface.co/internlm/internlm2-chat-1_8b-sft) as the initial model for DPO training to align it with human preferences.

+

+Set `pretrained_model_name_or_path = 'internlm/internlm2-chat-1_8b-sft'` in the training configuration file, and the model files will be downloaded automatically when training starts. If you need to download the model weights manually, please refer to the [Preparing Pretrained Model Weights](https://xtuner.readthedocs.io/zh-cn/latest/preparation/pretrained_model.html) section, which explains in detail how to download model weights from HuggingFace or ModelScope. The model is available on HuggingFace and ModelScope at:

+

+- HuggingFace link: https://huggingface.co/internlm/internlm2-chat-1_8b-sft

+- ModelScope link: https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-chat-1_8b-sft/summary

+

+### Preparing Training Data

+

+In this tutorial, we use the [mlabonne/orpo-dpo-mix-40k](https://huggingface.co/datasets/mlabonne/orpo-dpo-mix-40k) dataset from HuggingFace as an example.

+

+```python

+train_dataset = dict(

+ type=build_preference_dataset,

+ dataset=dict(

+ type=load_dataset,

+ path='mlabonne/orpo-dpo-mix-40k'),

+ dataset_map_fn=orpo_dpo_mix_40k_map_fn,

+ is_dpo=True,

+ is_reward=False,

+)

+```

+

+With the above configuration, the dataset is downloaded and processed automatically. If you want to use other open-source datasets from HuggingFace or your own custom datasets, please refer to the [Preference Dataset](../reward_model/preference_data.md) section.

+

+### Preparing Configuration File

+

+XTuner provides several ready-to-use configuration files, which can be viewed using `xtuner list-cfg`. Execute the following command to copy a configuration file to the current directory.

+

+```bash

+xtuner copy-cfg internlm2_chat_1_8b_dpo_full .

+```

+

+Open the copied configuration file. If you choose to download the model and dataset automatically, no modifications are needed. If you want to specify paths to your pre-downloaded model and dataset, modify the `pretrained_model_name_or_path` and the `path` parameter in `dataset` under `train_dataset`.

+

+For more training parameter configurations, please refer to the [Modifying DPO Training Configuration](./modify_settings.md) section.

+

+### Starting the Training

+

+After completing the above steps, you can start the training task using the following commands.

+

+```bash

+# Single machine, single GPU

+xtuner train ./internlm2_chat_1_8b_dpo_full_copy.py

+# Single machine, multiple GPUs

+NPROC_PER_NODE=${GPU_NUM} xtuner train ./internlm2_chat_1_8b_dpo_full_copy.py

+# Slurm cluster

+srun ${SRUN_ARGS} xtuner train ./internlm2_chat_1_8b_dpo_full_copy.py --launcher slurm

+```

+

+### Model Conversion

+

+XTuner provides integrated tools to convert models to HuggingFace format. Simply execute the following commands:

+

+```bash

+# Create a directory for HuggingFace format parameters

+mkdir work_dirs/internlm2_chat_1_8b_dpo_full_copy/iter_15230_hf

+

+# Convert format

+xtuner convert pth_to_hf internlm2_chat_1_8b_dpo_full_copy.py \

+ work_dirs/internlm2_chat_1_8b_dpo_full_copy/iter_15230.pth \

+ work_dirs/internlm2_chat_1_8b_dpo_full_copy/iter_15230_hf

+```

+

+This converts the XTuner checkpoint to HuggingFace format.

diff --git a/docs/en/evaluation/hook.md b/docs/en/evaluation/hook.md

new file mode 100644

index 000000000..de9e98c88

--- /dev/null

+++ b/docs/en/evaluation/hook.md

@@ -0,0 +1 @@

+# Evaluation during training

diff --git a/docs/en/evaluation/mmbench.md b/docs/en/evaluation/mmbench.md

new file mode 100644

index 000000000..5421b1c96

--- /dev/null

+++ b/docs/en/evaluation/mmbench.md

@@ -0,0 +1 @@

+# MMBench (VLM)

diff --git a/docs/en/evaluation/mmlu.md b/docs/en/evaluation/mmlu.md

new file mode 100644

index 000000000..4bfabff8f

--- /dev/null

+++ b/docs/en/evaluation/mmlu.md

@@ -0,0 +1 @@

+# MMLU (LLM)

diff --git a/docs/en/evaluation/opencompass.md b/docs/en/evaluation/opencompass.md

new file mode 100644

index 000000000..eb24da882

--- /dev/null

+++ b/docs/en/evaluation/opencompass.md

@@ -0,0 +1 @@

+# Evaluate with OpenCompass

diff --git a/docs/en/get_started/installation.md b/docs/en/get_started/installation.md

new file mode 100644

index 000000000..007e61553

--- /dev/null

+++ b/docs/en/get_started/installation.md

@@ -0,0 +1,52 @@

+# Installation

+

+In this section, we will show you how to install XTuner.

+

+## Installation Process

+

+We recommend following the best practices below to install XTuner, using a conda virtual environment with Python 3.10.

+

+### Best Practices

+

+**Step 0.** Create a Python 3.10 virtual environment using conda.

+

+```shell

+conda create --name xtuner-env python=3.10 -y

+conda activate xtuner-env

+```

+

+**Step 1.** Install XTuner.

+

+Case a: Install XTuner via pip:

+

+```shell

+pip install -U xtuner

+```

+

+Case b: Install XTuner with DeepSpeed integration:

+

+```shell

+pip install -U 'xtuner[deepspeed]'

+```

+

+Case c: Install XTuner from the source code:

+

+```shell

+git clone https://github.com/InternLM/xtuner.git

+cd xtuner

+pip install -e '.[all]'

+# "-e" indicates installing the project in editable mode, so any local modifications to the code will take effect without reinstalling.

+```

+

+## Verify the installation

+

+To verify that XTuner is installed correctly, we print the list of built-in configuration files.

+

+**Print Configuration Files:** Use the command `xtuner list-cfg` in the command line to verify if the configuration files can be printed.

+

+```shell

+xtuner list-cfg

+```

+

+You should see a list of XTuner configuration files, corresponding to the ones in [xtuner/configs](https://github.com/InternLM/xtuner/tree/main/xtuner/configs) in the source code.

diff --git a/docs/en/get_started/overview.md b/docs/en/get_started/overview.md

new file mode 100644

index 000000000..c257c83c6

--- /dev/null

+++ b/docs/en/get_started/overview.md

@@ -0,0 +1,5 @@

+# Overview

+

+This chapter introduces you to the framework and workflow of XTuner, and provides detailed tutorial links.

+

+## What is XTuner

diff --git a/docs/en/get_started/quickstart.md b/docs/en/get_started/quickstart.md

new file mode 100644

index 000000000..23198bf3b

--- /dev/null

+++ b/docs/en/get_started/quickstart.md

@@ -0,0 +1,308 @@

+# Quickstart

+

+In this section, we will show you how to use XTuner to fine-tune a model to help you get started quickly.

+

+After installing XTuner successfully, we can start fine-tuning the model. In this section, we will demonstrate how to use XTuner to apply the QLoRA algorithm to fine-tune InternLM2-Chat-7B on the Colorist dataset.

+

+The Colorist dataset ([HuggingFace link](https://huggingface.co/datasets/burkelibbey/colors); [ModelScope link](https://www.modelscope.cn/datasets/fanqiNO1/colors/summary)) provides color choices and suggestions based on color descriptions. A model fine-tuned on this dataset returns a hexadecimal color code for a user's description of a color. For example, when the user enters "a calming but fairly bright light sky blue, between sky blue and baby blue, with a hint of fluorescence due to its brightness", the model outputs `#66ccff`, which matches the description. Here are a few samples from this dataset:

+

+| English Description | Chinese Description | Color |
+| --- | --- | --- |
+| Light Sky Blue: A calming, fairly bright color that falls between sky blue and baby blue, with a hint of slight fluorescence due to its brightness. | 浅天蓝色:一种介于天蓝和婴儿蓝之间的平和、相当明亮的颜色,由于明亮而带有一丝轻微的荧光。 | #66ccff |
+| Bright red: This is a very vibrant, saturated and vivid shade of red, resembling the color of ripe apples or fresh blood. It is as red as you can get on a standard RGB color palette, with no elements of either blue or green. | 鲜红色: 这是一种非常鲜艳、饱和、生动的红色,类似成熟苹果或新鲜血液的颜色。它是标准 RGB 调色板上的红色,不含任何蓝色或绿色元素。 | #ee0000 |
+| Bright Turquoise: This color mixes the freshness of bright green with the tranquility of light blue, leading to a vibrant shade of turquoise. It is reminiscent of tropical waters. | 明亮的绿松石色:这种颜色融合了鲜绿色的清新和淡蓝色的宁静,呈现出一种充满活力的绿松石色调。它让人联想到热带水域。 | #00ffcc |

+
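Since the model's answer is expected to be a hexadecimal color code, a small helper like the one below can check a reply against the `#rrggbb` format. It decodes the code into RGB components, for instance to compare predictions with the reference colors in the table. This helper is hypothetical and not part of XTuner.

```python
import re
from typing import Tuple

# Matches a '#' followed by six hex digits, e.g. "#66ccff" or "#EE0000".
HEX_COLOR = re.compile(r"#([0-9a-fA-F]{6})")


def parse_hex_color(text: str) -> Tuple[int, ...]:
    """Extract the first #rrggbb code from a model reply and return (r, g, b)."""
    match = HEX_COLOR.search(text)
    if match is None:
        raise ValueError(f"no #rrggbb color code found in {text!r}")
    code = match.group(1)
    return tuple(int(code[i:i + 2], 16) for i in (0, 2, 4))
```

For example, `parse_hex_color("#66ccff")` returns `(102, 204, 255)`.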

+## Prepare the model weights

+

+Before fine-tuning the model, we first need to prepare the weights of the model.

+

+### Download from HuggingFace

+

+```bash

+pip install -U huggingface_hub

+

+# Download the model weights to Shanghai_AI_Laboratory/internlm2-chat-7b

+huggingface-cli download internlm/internlm2-chat-7b \

+ --local-dir Shanghai_AI_Laboratory/internlm2-chat-7b \

+ --local-dir-use-symlinks False \

+ --resume-download

+```

+

+### Download from ModelScope

+

+Downloading model weights from HuggingFace can be slow or unstable. If you run into network issues, you can download the InternLM2-Chat-7B weights from ModelScope instead.

+

+```bash

+pip install -U modelscope

+

+# Download the model weights to the current directory

+python -c "from modelscope import snapshot_download; snapshot_download('Shanghai_AI_Laboratory/internlm2-chat-7b', cache_dir='.')"

+```

+

+After completing the download, we can start to prepare the dataset for fine-tuning.

+

+The model is available at:
+
+- HuggingFace: https://huggingface.co/internlm/internlm2-chat-7b
+- ModelScope: https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-chat-7b/summary

+

+## Prepare the fine-tuning dataset

+

+### Download from HuggingFace

+

+```bash

+git clone https://huggingface.co/datasets/burkelibbey/colors

+```

+

+### Download from ModelScope

+

+For the same reason, we can also download the dataset from ModelScope.

+

+```bash

+git clone https://www.modelscope.cn/datasets/fanqiNO1/colors.git

+```

+

+The dataset is available at:
+
+- HuggingFace: https://huggingface.co/datasets/burkelibbey/colors
+- ModelScope: https://modelscope.cn/datasets/fanqiNO1/colors

+

+## Prepare the config

+

+XTuner provides several configs out-of-the-box, which can be viewed via `xtuner list-cfg`. We can use the following command to copy a config to the current directory.

+

+```bash

+xtuner copy-cfg internlm2_7b_qlora_colorist_e5 .

+```

+

+Explanation of the config name:

+

+| Config Name | internlm2_7b_qlora_colorist_e5 |

+| ----------- | ------------------------------ |

+| Model Name | internlm2_7b |

+| Algorithm | qlora |

+| Dataset | colorist |

+| Epochs | 5 |
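As the table shows, config names appear to follow a `{model}_{algorithm}_{dataset}_e{epochs}` pattern. The hypothetical helper below splits a name along that pattern. The set of algorithm tokens (`qlora`, `lora`, `full`) is an assumption. Names that follow other patterns, such as the DPO configs, are rejected rather than guessed.

```python
import re
from typing import Dict, Union

# Assumed naming pattern: {model}_{algorithm}_{dataset}_e{epochs}
CONFIG_NAME = re.compile(
    r"^(?P<model>.+?)_(?P<algorithm>qlora|lora|full)_(?P<dataset>.+)_e(?P<epochs>\d+)$"
)


def parse_config_name(name: str) -> Dict[str, Union[str, int]]:
    """Split a config name into model, algorithm, dataset, and epoch count."""
    match = CONFIG_NAME.match(name)
    if match is None:
        raise ValueError(f"{name!r} does not follow model_algorithm_dataset_eN")
    fields = match.groupdict()
    return {
        "model": fields["model"],
        "algorithm": fields["algorithm"],
        "dataset": fields["dataset"],
        "epochs": int(fields["epochs"]),
    }
```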

+

+The directory structure at this point should look like this:

+

+```bash

+.
+├── colors
+│   ├── colors.json
+│   ├── dataset_infos.json
+│   ├── README.md
+│   └── train.jsonl
+├── internlm2_7b_qlora_colorist_e5_copy.py
+└── Shanghai_AI_Laboratory
+    └── internlm2-chat-7b
+        ├── config.json
+        ├── configuration_internlm2.py
+        ├── configuration.json
+        ├── generation_config.json
+        ├── modeling_internlm2.py
+        ├── pytorch_model-00001-of-00008.bin
+        ├── pytorch_model-00002-of-00008.bin
+        ├── pytorch_model-00003-of-00008.bin
+        ├── pytorch_model-00004-of-00008.bin
+        ├── pytorch_model-00005-of-00008.bin
+        ├── pytorch_model-00006-of-00008.bin
+        ├── pytorch_model-00007-of-00008.bin
+        ├── pytorch_model-00008-of-00008.bin
+        ├── pytorch_model.bin.index.json
+        ├── README.md
+        ├── special_tokens_map.json
+        ├── tokenization_internlm2_fast.py
+        ├── tokenization_internlm2.py
+        ├── tokenizer_config.json
+        └── tokenizer.model

+```

+

+## Modify the config

+

+In this step, we need to modify the model path and dataset path to local paths and modify the dataset loading method.

+In addition, since the copied config is based on the Base model, we also need to modify the `prompt_template` to adapt to the Chat model.

+

+```diff

+#######################################################################

+# PART 1 Settings #

+#######################################################################

+# Model

+- pretrained_model_name_or_path = 'internlm/internlm2-7b'

++ pretrained_model_name_or_path = './Shanghai_AI_Laboratory/internlm2-chat-7b'

+

+# Data

+- data_path = 'burkelibbey/colors'

++ data_path = './colors/train.jsonl'

+- prompt_template = PROMPT_TEMPLATE.default

++ prompt_template = PROMPT_TEMPLATE.internlm2_chat

+

+...

+#######################################################################

+# PART 3 Dataset & Dataloader #

+#######################################################################

+train_dataset = dict(

+    type=process_hf_dataset,

+-   dataset=dict(type=load_dataset, path=data_path),

++   dataset=dict(type=load_dataset, path='json', data_files=dict(train=data_path)),

+    tokenizer=tokenizer,

+    max_length=max_length,

+    dataset_map_fn=colors_map_fn,

+    template_map_fn=dict(

+        type=template_map_fn_factory, template=prompt_template),

+    remove_unused_columns=True,

+    shuffle_before_pack=True,

+    pack_to_max_length=pack_to_max_length)

+```

+

+In summary, we modified `pretrained_model_name_or_path`, `data_path`, `prompt_template`, and the `dataset` field of `train_dataset`.

+
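The `dataset_map_fn=colors_map_fn` entry is what turns each raw record into XTuner's conversation format. The function below is a hypothetical sketch of that step, not XTuner's actual `colors_map_fn`. It assumes three things: each record has a `description` field shaped like `"<color name>: <text>"`, it has a `color` field holding a hex code, and the target is a single-turn `conversation` list with `input`/`output` keys. The sample record is made up.

```python
def colors_map_fn_sketch(example: dict) -> dict:
    """Hypothetical: map one Colors record to a single-turn conversation."""
    description = example["description"]
    # Assumption: the text after the first ':' is the actual color description.
    if ":" in description:
        description = description.split(":", 1)[1].strip()
    return {
        "conversation": [{
            "input": description,        # the user's request
            "output": example["color"],  # the target answer, a hex color code
        }]
    }


record = {"color": "#1099ee", "description": "Sky Blue: a clear blue like the sky."}
print(colors_map_fn_sketch(record))
```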

+## Start fine-tuning

+

+Once the steps above are complete, start fine-tuning with one of the following commands:

+

+```bash

+# Single GPU

+xtuner train ./internlm2_7b_qlora_colorist_e5_copy.py

+# Multiple GPUs

+NPROC_PER_NODE=${GPU_NUM} xtuner train ./internlm2_7b_qlora_colorist_e5_copy.py

+# Slurm

+srun ${SRUN_ARGS} xtuner train ./internlm2_7b_qlora_colorist_e5_copy.py --launcher slurm

+```

+

+A healthy training log should look similar to the following:

+

+```text

+01/29 21:35:34 - mmengine - INFO - Iter(train) [ 10/720] lr: 9.0001e-05 eta: 0:31:46 time: 2.6851 data_time: 0.0077 memory: 12762 loss: 2.6900

+01/29 21:36:02 - mmengine - INFO - Iter(train) [ 20/720] lr: 1.9000e-04 eta: 0:32:01 time: 2.8037 data_time: 0.0071 memory: 13969 loss: 2.6049 grad_norm: 0.9361

+01/29 21:36:29 - mmengine - INFO - Iter(train) [ 30/720] lr: 1.9994e-04 eta: 0:31:24 time: 2.7031 data_time: 0.0070 memory: 13969 loss: 2.5795 grad_norm: 0.9361

+01/29 21:36:57 - mmengine - INFO - Iter(train) [ 40/720] lr: 1.9969e-04 eta: 0:30:55 time: 2.7247 data_time: 0.0069 memory: 13969 loss: 2.3352 grad_norm: 0.8482

+01/29 21:37:24 - mmengine - INFO - Iter(train) [ 50/720] lr: 1.9925e-04 eta: 0:30:28 time: 2.7286 data_time: 0.0068 memory: 13969 loss: 2.2816 grad_norm: 0.8184

+01/29 21:37:51 - mmengine - INFO - Iter(train) [ 60/720] lr: 1.9863e-04 eta: 0:29:58 time: 2.7048 data_time: 0.0069 memory: 13969 loss: 2.2040 grad_norm: 0.8184

+01/29 21:38:18 - mmengine - INFO - Iter(train) [ 70/720] lr: 1.9781e-04 eta: 0:29:31 time: 2.7302 data_time: 0.0068 memory: 13969 loss: 2.1912 grad_norm: 0.8460

+01/29 21:38:46 - mmengine - INFO - Iter(train) [ 80/720] lr: 1.9681e-04 eta: 0:29:05 time: 2.7338 data_time: 0.0069 memory: 13969 loss: 2.1512 grad_norm: 0.8686

+01/29 21:39:13 - mmengine - INFO - Iter(train) [ 90/720] lr: 1.9563e-04 eta: 0:28:36 time: 2.7047 data_time: 0.0068 memory: 13969 loss: 2.0653 grad_norm: 0.8686

+01/29 21:39:40 - mmengine - INFO - Iter(train) [100/720] lr: 1.9426e-04 eta: 0:28:09 time: 2.7383 data_time: 0.0070 memory: 13969 loss: 1.9819 grad_norm: 0.9127

+```
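To check programmatically that the loss is going down, you can extract the iteration and loss values from log lines like the ones above. This is a minimal regex-based sketch. It assumes the `Iter(train) [ N/M] ... loss: X` layout shown here; `parse_losses` is a hypothetical helper, not an XTuner or mmengine API.

```python
import re

# Captures the current iteration and the loss from an mmengine training log line.
LOSS_RE = re.compile(r"Iter\(train\)\s*\[\s*(\d+)/\d+\].*?\bloss:\s*([0-9.]+)")


def parse_losses(log_text: str) -> list:
    """Return a list of (iteration, loss) tuples found in the log text."""
    points = []
    for line in log_text.splitlines():
        match = LOSS_RE.search(line)
        if match:
            points.append((int(match.group(1)), float(match.group(2))))
    return points


sample_log = """\
01/29 21:35:34 - mmengine - INFO - Iter(train) [ 10/720]  lr: 9.0001e-05  eta: 0:31:46  time: 2.6851  data_time: 0.0077  memory: 12762  loss: 2.6900
01/29 21:39:40 - mmengine - INFO - Iter(train) [100/720]  lr: 1.9426e-04  eta: 0:28:09  time: 2.7383  data_time: 0.0070  memory: 13969  loss: 1.9819  grad_norm: 0.9127
"""

points = parse_losses(sample_log)
print(points)  # [(10, 2.69), (100, 1.9819)]
assert points[-1][1] < points[0][1], "loss did not decrease"
```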

+

+Before training begins, `EvaluateChatHook` logs the following sample outputs:

+

+```text

+2024/01/29 21:34:58 - mmengine - INFO - before_train in EvaluateChatHook.

+2024/01/29 21:35:03 - mmengine - INFO - Sample output:

+<|im_start|>system

+You are a professional color designer. Please provide the corresponding colors based on the description of Human.

+<|im_end|>

+<|im_start|>user

+请给我一个像天空一样清澈透明的蓝色。<|im_end|>

+<|im_start|>assistant

+为了匹配您所描述的“像天空一样清澈透明的蓝色”,我建议您选择一种名为“天蓝”(Cerulean)的颜色。这种颜色通常被用来代表天空、海洋和清澈的水域,它具有明亮

+

+2024/01/29 21:35:07 - mmengine - INFO - Sample output:

+<|im_start|>system

+You are a professional color designer. Please provide the corresponding colors based on the description of Human.

+<|im_end|>

+<|im_start|>user

+Please give me a clear blue like the sky.<|im_end|>

+<|im_start|>assistant

+The color you're describing is a clear, bright blue that resembles the color of the sky on a sunny day. This color is often referred to as "sky blue" or "cerulean blue." Here are some specific color codes and names that

+```

+

+After training, the sample outputs look like this:

+

+```text

+2024/01/29 22:08:07 - mmengine - INFO - Sample output:

+<|im_start|>system

+You are a professional color designer. Please provide the corresponding colors based on the description of Human.

+<|im_end|>

+<|im_start|>user

+请给我一个像天空一样清澈透明的蓝色。<|im_end|>

+<|im_start|>assistant

+#1099ee<|im_end|>

+

+2024/01/29 22:08:08 - mmengine - INFO - Sample output:

+<|im_start|>system

+You are a professional color designer. Please provide the corresponding colors based on the description of Human.

+<|im_end|>

+<|im_start|>user

+Please give me a clear blue like the sky.<|im_end|>

+<|im_start|>assistant

+#0066dd<|im_end|>

+```

+

+The colors output by the model are:

+

+- 天空一样清澈透明的蓝色 (a blue as clear and transparent as the sky): `#1099ee`

+- A clear blue like the sky: `#0066dd`

+
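Color swatches cannot be displayed in plain text. One quick way to inspect a predicted hex code is to convert it to RGB and print a small swatch. The swatch assumes a terminal that supports 24-bit ANSI background colors.

```python
def hex_to_rgb(code: str) -> tuple:
    """Convert '#rrggbb' into an (r, g, b) tuple of integers in 0..255."""
    digits = code.lstrip("#")
    if len(digits) != 6:
        raise ValueError(f"expected 6 hex digits, got {code!r}")
    return tuple(int(digits[i:i + 2], 16) for i in (0, 2, 4))


def swatch(code: str) -> str:
    """Return a string that renders as a colored block in 24-bit ANSI terminals."""
    r, g, b = hex_to_rgb(code)
    return f"\x1b[48;2;{r};{g};{b}m      \x1b[0m"


for code in ("#1099ee", "#0066dd"):
    print(code, hex_to_rgb(code), swatch(code))
# #1099ee (16, 153, 238) ...
# #0066dd (0, 102, 221) ...
```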

+After training, the model clearly follows the dataset: instead of a long explanation, it answers with a single hex color code.

+

+## Model Convert + LoRA Merge

+

+After training, we get several `.pth` files. These files do **NOT** contain all of the model's parameters. They only contain the parameters updated during QLoRA training, i.e. the LoRA adapter weights. We therefore need to convert these `.pth` files to HuggingFace format and merge them into the original LLM weights.

+

+### Model Convert

+

+XTuner ships with a tool for converting checkpoints to HuggingFace format. Use the following command:

+

+```bash

+# Create the directory to store parameters in hf format

+mkdir work_dirs/internlm2_7b_qlora_colorist_e5_copy/iter_720_hf

+

+# Convert the model to hf format

+xtuner convert pth_to_hf internlm2_7b_qlora_colorist_e5_copy.py \

+ work_dirs/internlm2_7b_qlora_colorist_e5_copy/iter_720.pth \

+ work_dirs/internlm2_7b_qlora_colorist_e5_copy/iter_720_hf

+```

+

+This command converts `work_dirs/internlm2_7b_qlora_colorist_e5_copy/iter_720.pth` to HuggingFace format according to the config `internlm2_7b_qlora_colorist_e5_copy.py` and saves the result in `work_dirs/internlm2_7b_qlora_colorist_e5_copy/iter_720_hf`.

+

+### LoRA Merge

+

+XTuner also ships with a tool for merging LoRA weights. Run the following command:

+

+```bash

+# Create the directory to store the merged weights

+mkdir work_dirs/internlm2_7b_qlora_colorist_e5_copy/merged

+

+# Merge the weights

+xtuner convert merge Shanghai_AI_Laboratory/internlm2-chat-7b \

+ work_dirs/internlm2_7b_qlora_colorist_e5_copy/iter_720_hf \

+ work_dirs/internlm2_7b_qlora_colorist_e5_copy/merged \

+ --max-shard-size 2GB

+```

+

+This command works like the one above. It reads the original weights from `Shanghai_AI_Laboratory/internlm2-chat-7b` and the converted adapter from `work_dirs/internlm2_7b_qlora_colorist_e5_copy/iter_720_hf`, then merges the two. The result is saved in `work_dirs/internlm2_7b_qlora_colorist_e5_copy/merged`, with each weight shard capped at 2GB.

+
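Conceptually, merging folds the low-rank update back into each adapted weight matrix. Because the adapter stores only the low-rank factors A and B, the merge step also needs the original base weights W. The sketch below uses the standard LoRA formulation, W' = W + (alpha / r) * B @ A, with tiny made-up matrices in plain Python. This is not XTuner's merge code: in practice the scaling factor and the set of adapted layers come from the adapter's own config.

```python
def matmul(x, y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def merge_lora(w, lora_a, lora_b, alpha, r):
    """Return W + (alpha / r) * B @ A for a d x k base matrix W.

    lora_a has shape r x k and lora_b has shape d x r.
    """
    scale = alpha / r
    delta = matmul(lora_b, lora_a)  # (d x r) @ (r x k) -> d x k
    return [[wij + scale * dij for wij, dij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


w = [[1.0, 0.0], [0.0, 1.0]]  # base weight (2 x 2)
lora_a = [[1.0, 2.0]]         # A: rank 1 x 2
lora_b = [[0.5], [1.0]]       # B: 2 x rank 1
print(merge_lora(w, lora_a, lora_b, alpha=2, r=1))  # [[2.0, 2.0], [2.0, 5.0]]
```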

+## Chat with the model

+

+To get a better feel for the model after merging, we can chat with it. XTuner also ships with a chat tool. The following command starts a simple chat demo:

+

+```bash

+xtuner chat work_dirs/internlm2_7b_qlora_colorist_e5_copy/merged \

+ --prompt-template internlm2_chat \

+ --system-template colorist

+```

+

+Alternatively, we can skip merging and chat directly with the LLM plus the LoRA adapter:

+

+```bash

+xtuner chat Shanghai_AI_Laboratory/internlm2-chat-7b \

+ --adapter work_dirs/internlm2_7b_qlora_colorist_e5_copy/iter_720_hf \

+ --prompt-template internlm2_chat \

+ --system-template colorist

+```

+

+In these commands:
+
+- `work_dirs/internlm2_7b_qlora_colorist_e5_copy/merged` is the path to the merged weights.
+- `work_dirs/internlm2_7b_qlora_colorist_e5_copy/iter_720_hf` is the path to the converted LoRA adapter.
+- `--prompt-template internlm2_chat` selects the InternLM2-Chat chat template.
+- `--system-template colorist` sets the system prompt to the template used by the Colorist dataset.

+

+Here is an example:

+

+```text

+double enter to end input (EXIT: exit chat, RESET: reset history) >>> A calming but fairly bright light sky blue, between sky blue and baby blue, with a hint of fluorescence due to its brightness.

+

+#66ccff<|im_end|>

+```

+

+The color output by the model is:

+

+A calming but fairly bright light sky blue, between sky blue and baby blue, with a hint of fluorescence due to its brightness: `#66ccff`.

diff --git a/docs/en/index.rst b/docs/en/index.rst

new file mode 100644

index 000000000..c4c18d31a

--- /dev/null

+++ b/docs/en/index.rst

@@ -0,0 +1,123 @@

+.. xtuner documentation master file, created by

+ sphinx-quickstart on Tue Jan 9 16:33:06 2024.

+ You can adapt this file completely to your liking, but it should at least

+ contain the root `toctree` directive.

+

+Welcome to XTuner's documentation!

+==================================

+

+.. figure:: ./_static/image/logo.png

+ :align: center

+ :alt: xtuner

+ :class: no-scaled-link

+

+.. raw:: html

+

+

+ All-IN-ONE toolbox for LLM

+

+

+

+

+


+

+

+

+

+Documentation

+-------------

+.. toctree::

+ :maxdepth: 2

+ :caption: Get Started

+

+ get_started/overview.md

+ get_started/installation.md

+ get_started/quickstart.md

+

+.. toctree::

+ :maxdepth: 2

+ :caption: Preparation

+

+ preparation/pretrained_model.rst

+ preparation/prompt_template.rst

+

+.. toctree::

+ :maxdepth: 2

+ :caption: Training

+

+ training/modify_settings.rst

+ training/custom_sft_dataset.rst

+ training/custom_pretrain_dataset.rst

+ training/custom_agent_dataset.rst

+ training/multi_modal_dataset.rst

+ training/open_source_dataset.rst

+ training/visualization.rst

+

+.. toctree::

+ :maxdepth: 2

+ :caption: DPO

+

+ dpo/overview.md

+ dpo/quick_start.md

+ dpo/modify_settings.md

+

+.. toctree::

+ :maxdepth: 2

+ :caption: Reward Model

+

+ reward_model/overview.md

+ reward_model/quick_start.md

+ reward_model/modify_settings.md

+ reward_model/preference_data.md

+

+.. toctree::

+ :maxdepth: 2

+ :caption: Acceleration

+

+ acceleration/deepspeed.rst

+ acceleration/pack_to_max_length.rst

+ acceleration/flash_attn.rst

+ acceleration/varlen_flash_attn.rst

+ acceleration/hyper_parameters.rst

+ acceleration/length_grouped_sampler.rst

+ acceleration/train_large_scale_dataset.rst

+ acceleration/train_extreme_long_sequence.rst

+ acceleration/benchmark.rst

+

+.. toctree::

+ :maxdepth: 2

+ :caption: Chat

+

+ chat/llm.md

+ chat/agent.md

+ chat/vlm.md

+ chat/lmdeploy.md

+

+.. toctree::

+ :maxdepth: 2

+ :caption: Evaluation

+

+ evaluation/hook.md

+ evaluation/mmlu.md

+ evaluation/mmbench.md

+ evaluation/opencompass.md

+

+.. toctree::

+ :maxdepth: 2

+ :caption: Models

+

+ models/supported.md

+

+.. toctree::

+ :maxdepth: 2

+ :caption: InternEvo Migration

+

+ internevo_migration/internevo_migration.rst

+ internevo_migration/ftdp_dataset/ftdp.rst

+ internevo_migration/ftdp_dataset/Case1.rst

+ internevo_migration/ftdp_dataset/Case2.rst

+ internevo_migration/ftdp_dataset/Case3.rst

+ internevo_migration/ftdp_dataset/Case4.rst

diff --git a/docs/en/internevo_migration/ftdp_dataset/Case1.rst b/docs/en/internevo_migration/ftdp_dataset/Case1.rst

new file mode 100644

index 000000000..c8eb0c76a

--- /dev/null

+++ b/docs/en/internevo_migration/ftdp_dataset/Case1.rst

@@ -0,0 +1,2 @@

+Case 1

+======

diff --git a/docs/en/internevo_migration/ftdp_dataset/Case2.rst b/docs/en/internevo_migration/ftdp_dataset/Case2.rst

new file mode 100644

index 000000000..74069f68f

--- /dev/null

+++ b/docs/en/internevo_migration/ftdp_dataset/Case2.rst

@@ -0,0 +1,2 @@

+Case 2

+======

diff --git a/docs/en/internevo_migration/ftdp_dataset/Case3.rst b/docs/en/internevo_migration/ftdp_dataset/Case3.rst

new file mode 100644

index 000000000..d963b538b

--- /dev/null

+++ b/docs/en/internevo_migration/ftdp_dataset/Case3.rst

@@ -0,0 +1,2 @@

+Case 3

+======

diff --git a/docs/en/internevo_migration/ftdp_dataset/Case4.rst b/docs/en/internevo_migration/ftdp_dataset/Case4.rst

new file mode 100644

index 000000000..1f7626933

--- /dev/null

+++ b/docs/en/internevo_migration/ftdp_dataset/Case4.rst

@@ -0,0 +1,2 @@

+Case 4

+======

diff --git a/docs/en/internevo_migration/ftdp_dataset/ftdp.rst b/docs/en/internevo_migration/ftdp_dataset/ftdp.rst

new file mode 100644

index 000000000..613568f15

--- /dev/null

+++ b/docs/en/internevo_migration/ftdp_dataset/ftdp.rst

@@ -0,0 +1,2 @@

+ftdp

+====

diff --git a/docs/en/internevo_migration/internevo_migration.rst b/docs/en/internevo_migration/internevo_migration.rst

new file mode 100644

index 000000000..869206508

--- /dev/null

+++ b/docs/en/internevo_migration/internevo_migration.rst

@@ -0,0 +1,2 @@

+InternEvo Migration

+===================

diff --git a/docs/en/make.bat b/docs/en/make.bat

new file mode 100644

index 000000000..954237b9b

--- /dev/null

+++ b/docs/en/make.bat

@@ -0,0 +1,35 @@

+@ECHO OFF

+

+pushd %~dp0

+

+REM Command file for Sphinx documentation

+

+if "%SPHINXBUILD%" == "" (

+ set SPHINXBUILD=sphinx-build

+)

+set SOURCEDIR=.

+set BUILDDIR=_build

+

+%SPHINXBUILD% >NUL 2>NUL

+if errorlevel 9009 (

+ echo.

+ echo.The 'sphinx-build' command was not found. Make sure you have Sphinx

+ echo.installed, then set the SPHINXBUILD environment variable to point

+ echo.to the full path of the 'sphinx-build' executable. Alternatively you

+ echo.may add the Sphinx directory to PATH.

+ echo.

+ echo.If you don't have Sphinx installed, grab it from

+ echo.https://www.sphinx-doc.org/

+ exit /b 1

+)

+

+if "%1" == "" goto help

+

+%SPHINXBUILD% -M %1 %SOURCEDIR% %BUILDDIR% %SPHINXOPTS% %O%

+goto end

+

+:help

+%SPHINXBUILD% -M help %SOURCEDIR% %BUILDDIR% %SPHINXOPTS% %O%

+

+:end

+popd

diff --git a/docs/en/models/supported.md b/docs/en/models/supported.md

new file mode 100644

index 000000000..c61546e52

--- /dev/null

+++ b/docs/en/models/supported.md

@@ -0,0 +1 @@

+# Supported Models

diff --git a/docs/en/preparation/pretrained_model.rst b/docs/en/preparation/pretrained_model.rst

new file mode 100644

index 000000000..a3ac291ac

--- /dev/null

+++ b/docs/en/preparation/pretrained_model.rst

@@ -0,0 +1,2 @@

+Pretrained Model

+================

diff --git a/docs/en/preparation/prompt_template.rst b/docs/en/preparation/prompt_template.rst

new file mode 100644

index 000000000..43ccb98e3

--- /dev/null

+++ b/docs/en/preparation/prompt_template.rst

@@ -0,0 +1,2 @@

+Prompt Template

+===============

diff --git a/docs/en/reward_model/modify_settings.md b/docs/en/reward_model/modify_settings.md

new file mode 100644

index 000000000..4f41ca300

--- /dev/null

+++ b/docs/en/reward_model/modify_settings.md

@@ -0,0 +1,100 @@

+## Modify Reward Model Training Configuration

+

+This section introduces the config related to Reward Model training. For more details on XTuner config files, please refer to [Modify Settings](https://xtuner.readthedocs.io/zh-cn/latest/training/modify_settings.html).

+

+### Loss Function

+

+XTuner uses the [Bradley–Terry Model](https://en.wikipedia.org/wiki/Bradley%E2%80%93Terry_model) for preference modeling in the Reward Model. You can specify `loss_type="ranking"` to use ranking loss. XTuner also implements the focal loss function proposed in InternLM2, which adjusts the weights of difficult and easy samples to avoid overfitting. You can set `loss_type="focal"` to use this loss function. For a detailed explanation of this loss function, please refer to the [InternLM2 Technical Report](https://arxiv.org/abs/2403.17297).

+

+Additionally, to maintain stable reward model output scores, we have added a constraint term in the loss. You can specify `penalty_type='log_barrier'` or `penalty_type='L2'` to enable log barrier or L2 constraints, respectively.

+

+```python

+#######################################################################

+# PART 1 Settings #

+#######################################################################

+# Model

+loss_type = 'focal' # 'ranking' or 'focal'

+penalty_type = 'log_barrier' # 'log_barrier' or 'L2'

+```
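For intuition, here is a minimal per-pair sketch of these options in plain Python. It assumes the formulas as we understand them from the InternLM2 Technical Report:

- The ranking loss is the Bradley-Terry negative log-likelihood, -log σ(r_chosen - r_rejected).
- The focal variant multiplies that loss by (1 - 2 * max(0, P - 0.5))^gamma, where P = σ(r_chosen - r_rejected).
- The log-barrier penalty is -(log(b + r) + log(b - r)).

The values gamma = 2 and b = 5, and all function names, are illustrative assumptions. XTuner's batched implementation, including how the penalty is weighted, may differ.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def ranking_loss(r_chosen: float, r_rejected: float) -> float:
    """Bradley-Terry loss: -log sigmoid(r_chosen - r_rejected), computed stably."""
    d = r_chosen - r_rejected
    return max(-d, 0.0) + math.log1p(math.exp(-abs(d)))


def focal_ranking_loss(r_chosen: float, r_rejected: float, gamma: float = 2.0) -> float:
    """Ranking loss down-weighted for pairs that are already ranked confidently."""
    p = sigmoid(r_chosen - r_rejected)
    weight = (1.0 - 2.0 * max(0.0, p - 0.5)) ** gamma
    return weight * ranking_loss(r_chosen, r_rejected)


def log_barrier_penalty(score: float, bound: float = 5.0) -> float:
    """Grows without limit as the score approaches +/- bound (only defined inside it)."""
    return -(math.log(bound + score) + math.log(bound - score))


def l2_penalty(score: float) -> float:
    """Quadratic penalty that pulls scores towards zero."""
    return score ** 2


# An easy pair (chosen clearly scored higher) contributes far less under the focal loss.
print(ranking_loss(3.0, 0.0), focal_ranking_loss(3.0, 0.0))
```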

+

+### Modifying the Model

+

+Users can modify `pretrained_model_name_or_path` to change the pretrained model.

+

+Note that XTuner calculates reward scores by appending a special token at the end of the data. Therefore, when switching models with different vocabularies, the ID of this special token also needs to be modified accordingly. We usually use an unused token at the end of the vocabulary as the reward token.

+

+For example, in InternLM2, we use `[UNUSED_TOKEN_130]` as the reward token:

+

+```python

+#######################################################################

+# PART 1 Settings #

+#######################################################################

+# Model

+pretrained_model_name_or_path = 'internlm/internlm2-chat-1_8b-sft'

+reward_token_id = 92527 # use [UNUSED_TOKEN_130] as reward token

+```

+

+If you switch to a Llama 3 model, you can use `<|reserved_special_token_0|>` as the reward token:

+

+```python

+#######################################################################

+# PART 1 Settings #

+#######################################################################

+# Model

+pretrained_model_name_or_path = 'meta-llama/Meta-Llama-3-8B-Instruct'

+reward_token_id = 128002 # use <|reserved_special_token_0|> as reward token

+```
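The reward token gives the model a fixed position at which to emit its score. Below is a hypothetical sketch of reading that score back out; `reward_score` is not an XTuner API. It assumes the value head produces one scalar per input position and that the reward is the scalar at the last occurrence of `reward_token_id`. The IDs in `REWARD_TOKEN_IDS` come from the two examples above. The token IDs and per-position values in the demo are made up.

```python
# Reward token IDs taken from the two config examples above.
REWARD_TOKEN_IDS = {
    "internlm/internlm2-chat-1_8b-sft": 92527,      # [UNUSED_TOKEN_130]
    "meta-llama/Meta-Llama-3-8B-Instruct": 128002,  # <|reserved_special_token_0|>
}


def reward_score(input_ids: list, values: list, reward_token_id: int) -> float:
    """Hypothetical: return the value-head output at the last reward-token position."""
    if len(input_ids) != len(values):
        raise ValueError("need exactly one value per input position")
    positions = [i for i, tok in enumerate(input_ids) if tok == reward_token_id]
    if not positions:
        raise ValueError("reward token not found; was it appended to the sample?")
    return values[positions[-1]]


reward_id = REWARD_TOKEN_IDS["internlm/internlm2-chat-1_8b-sft"]
ids = [1, 333, 444, reward_id]   # toy prompt + response, with the reward token appended
values = [0.1, -0.3, 0.7, 1.25]  # toy per-position value-head outputs
print(reward_score(ids, values, reward_id))  # 1.25
```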

+

+### Training Data

+

+In Reward Model training, you can specify the maximum number of tokens for a single sample sequence using `max_length`. XTuner will automatically truncate or pad the data.

+

+```python

+# Data

+max_length = 2048

+```

+

+In the configuration file, we use the `train_dataset` field to specify the training dataset. You can specify the dataset loading method using the `dataset` field and the dataset mapping function using the `dataset_map_fn` field.

+

+```python

+#######################################################################

+# PART 3 Dataset & Dataloader #

+#######################################################################

+sampler = SequenceParallelSampler \

+    if sequence_parallel_size > 1 else DefaultSampler

+

+train_dataset = dict(

+    type=build_preference_dataset,

+    dataset=dict(

+        type=load_dataset,

+        path='argilla/ultrafeedback-binarized-preferences-cleaned'),

+    tokenizer=tokenizer,

+    max_length=max_length,

+    dataset_map_fn=orpo_dpo_mix_40k_map_fn,

+    is_dpo=False,

+    is_reward=True,

+    reward_token_id=reward_token_id,

+    num_proc=32,

+    use_varlen_attn=use_varlen_attn,

+    max_packed_length=max_packed_length,

+    shuffle_before_pack=True,

+)

+

+train_dataloader = dict(

+    batch_size=batch_size,

+    num_workers=dataloader_num_workers,

+    dataset=train_dataset,

+    sampler=dict(type=sampler, shuffle=True),

+    collate_fn=dict(

+        type=preference_collate_fn, use_varlen_attn=use_varlen_attn))

+```

+

+In the above configuration, we use `load_dataset` to load the `argilla/ultrafeedback-binarized-preferences-cleaned` dataset from Hugging Face, using `orpo_dpo_mix_40k_map_fn` as the dataset mapping function (this is because `orpo_dpo_mix_40k` and `ultrafeedback-binarized-preferences-cleaned` have the same format, so the same mapping function is used).

+

+For more information on handling datasets and writing dataset mapping functions, please refer to the [Preference Data Section](./preference_data.md).
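As a rough illustration of what such a mapping function does, here is a hypothetical sketch; it is not the actual `orpo_dpo_mix_40k_map_fn`. It assumes each record stores the full conversation in both `chosen` and `rejected` as OpenAI-style message lists. Everything except the final assistant turn is treated as shared history. The record below is made up.

```python
def split_preference_pair(example: dict) -> dict:
    """Hypothetical: turn full chosen/rejected conversations into prompt/chosen/rejected."""
    chosen_conv = example["chosen"]
    rejected_conv = example["rejected"]
    # Everything before the last message is the shared prompt (conversation history).
    prompt = chosen_conv[:-1]
    if rejected_conv[:-1] != prompt:
        raise ValueError("chosen and rejected conversations must share the same history")
    return {
        "prompt": prompt,
        "chosen": chosen_conv[-1:],      # keep only the final assistant reply
        "rejected": rejected_conv[-1:],
    }


record = {
    "chosen": [
        {"role": "user", "content": "Name a primary color."},
        {"role": "assistant", "content": "Red is a primary color."},
    ],
    "rejected": [
        {"role": "user", "content": "Name a primary color."},
        {"role": "assistant", "content": "I'm not sure."},
    ],
}
print(split_preference_pair(record))
```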

+

+### Accelerating Training

+

+When training with preference data, we recommend enabling the [Variable-Length Attention Mechanism](https://xtuner.readthedocs.io/zh-cn/latest/acceleration/varlen_flash_attn.html) to avoid memory waste caused by length differences between chosen and rejected samples within a single preference. You can enable the variable-length attention mechanism by setting `use_varlen_attn=True`.

+

+XTuner also supports many training acceleration methods. For details on how to use them, please refer to the [Acceleration Strategies Section](https://xtuner.readthedocs.io/zh-cn/latest/acceleration/hyper_parameters.html).
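A back-of-the-envelope comparison shows why this matters. If a chosen and a rejected sequence are padded to a common length, the shorter one wastes the difference. If they are packed into one sequence, the attention kernel only needs the cumulative sequence boundaries to keep them from attending to each other; this is the `cu_seqlens` convention used by Flash Attention's variable-length kernels. The helpers below are hypothetical, the lengths are made-up full sequence lengths (prompt plus response), and batching is ignored.

```python
def padded_vs_packed(lengths: list) -> tuple:
    """Return (tokens when padding every sequence to the longest, tokens when packing)."""
    padded = len(lengths) * max(lengths)
    packed = sum(lengths)
    return padded, packed


def cu_seqlens(lengths: list) -> list:
    """Cumulative sequence boundaries, e.g. [300, 1200] -> [0, 300, 1500]."""
    bounds = [0]
    for n in lengths:
        bounds.append(bounds[-1] + n)
    return bounds


pair = [300, 1200]  # chosen and rejected sequence lengths (made up)
padded, packed = padded_vs_packed(pair)
print(padded, packed, padded - packed)  # 2400 1500 900
print(cu_seqlens(pair))                 # [0, 300, 1500]
```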

diff --git a/docs/en/reward_model/overview.md b/docs/en/reward_model/overview.md

new file mode 100644

index 000000000..eb210140c

--- /dev/null

+++ b/docs/en/reward_model/overview.md

@@ -0,0 +1,43 @@

+## Introduction to Reward Model

+

+### Overview

+

+The Reward Model is a crucial component in the reinforcement learning process. Its primary task is to predict reward values based on given inputs, guiding the direction of the learning algorithm. In RLHF (Reinforcement Learning from Human Feedback), the Reward Model acts as a proxy for human preferences, helping the reinforcement learning algorithm optimize its policy more effectively.

+

+In large language model training, the Reward Model usually refers to a Preference Model. During training, it sees a good (chosen) and a bad (rejected) response to the same prompt and learns to fit human preferences. During inference, it predicts a reward value that guides the optimization of the Actor model in the RLHF process.

+

+Applications of the Reward Model include but are not limited to:

+

+- **RLHF Training**: In RLHF training with algorithms such as Proximal Policy Optimization (PPO), the Reward Model provides reward signals that improve the quality of generated content and align it more closely with human preferences.

+- **BoN Sampling**: In the Best-of-N (BoN) sampling process, users can use the Reward Model to score multiple responses to the same prompt and select the highest-scoring generated result, thereby enhancing the model's output.

+- **Data Construction**: The Reward Model can be used to evaluate and filter training data or replace manual annotation to construct DPO training data.
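Once a scoring function is available, Best-of-N selection itself is simple. This sketch takes the reward model as an arbitrary callable. `toy_score` is a made-up stand-in, used only so that the example runs; it is not a real reward model.

```python
from typing import Callable, Sequence


def best_of_n(prompt: str, candidates: Sequence[str],
              score_fn: Callable[[str, str], float]) -> str:
    """Return the candidate response with the highest reward for the given prompt."""
    if not candidates:
        raise ValueError("need at least one candidate")
    return max(candidates, key=lambda response: score_fn(prompt, response))


def toy_score(prompt: str, response: str) -> float:
    """Stand-in scorer: counts words of the response that also appear in the prompt."""
    prompt_words = set(prompt.lower().split())
    return sum(word in prompt_words for word in response.lower().split())


prompt = "Where was the 2020 World Series played?"
candidates = [
    "I don't know.",
    "The 2020 World Series was played at Globe Life Field.",
]
print(best_of_n(prompt, candidates, toy_score))
```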

+

+### Features of Reward Model Training in XTuner

+

+The Reward Model training in XTuner offers the following significant advantages:

+

+1. **Latest Training Techniques**: XTuner integrates the Reward Model training loss function from InternLM2, which stabilizes the numerical range of reward scores and reduces overfitting on simple samples (see [InternLM2 Technical Report](https://arxiv.org/abs/2403.17297) for details).

+

+2. **Reducing Memory Waste**: Due to the length differences in chosen and rejected data in preference datasets, padding tokens during data concatenation can cause memory waste. In XTuner, by utilizing the variable-length attention feature from Flash Attention2, preference pairs are packed into the same sequence during training, significantly reducing memory waste caused by padding tokens. This not only improves memory efficiency but also allows for training larger models or handling more data under the same hardware conditions.

+

+

+

+3. **Efficient Training**: Leveraging XTuner's QLoRA training capabilities, we can perform full parameter training only on the Reward Model's Value Head, while using QLoRA fine-tuning on the language model itself, substantially reducing the memory overhead of model training.

+

+4. **Long Text Training**: With XTuner's sequence parallel functionality, long text data can be trained efficiently.

+

+

+

+### Getting Started

+

+Refer to the [Quick Start Guide](./quick_start.md) to understand the basic concepts. For more information on configuring training parameters, please see the [Modifying Reward Model Settings](./modify_settings.md) section.

+

+### Open-source Models

+

+The InternLM2 Reward Models described in the InternLM2 Technical Report were trained with XTuner and are available for download:

+

+| Model | Transformers(HF) | ModelScope(HF) | OpenXLab(HF) | RewardBench Score |

+| ------------------------- | -------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------- | ----------------------------------------------------------------------------------------------------------------------------------------------------------- | ----------------- |

+| **InternLM2-1.8B-Reward** | [🤗internlm2-1_8b-reward](https://huggingface.co/internlm/internlm2-1_8b-reward) | [internlm2-1_8b-reward](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-1_8b-reward/summary) | [internlm2-1_8b-reward](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2-1_8b-reward) | 80.6 |

+| **InternLM2-7B-Reward** | [🤗internlm2-7b-reward](https://huggingface.co/internlm/internlm2-7b-reward) | [internlm2-7b-reward](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-7b-reward/summary) | [internlm2-7b-reward](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2-7b-reward) | 86.6 |

+| **InternLM2-20B-Reward** | [🤗internlm2-20b-reward](https://huggingface.co/internlm/internlm2-20b-reward) | [internlm2-20b-reward](https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-20b-reward/summary) | [internlm2-20b-reward](https://openxlab.org.cn/models/detail/OpenLMLab/internlm2-20b-reward) | 89.5 |

diff --git a/docs/en/reward_model/preference_data.md b/docs/en/reward_model/preference_data.md

new file mode 100644

index 000000000..2f304e627

--- /dev/null

+++ b/docs/en/reward_model/preference_data.md

@@ -0,0 +1,110 @@

+## Preference Dataset

+

+### Overview

+

+XTuner's Reward Model uses the same data format as DPO, ORPO, and the other algorithms that train on preference data. Each training sample in the preference dataset must contain three fields: `prompt`, `chosen`, and `rejected`. The value of each field follows the [OpenAI chat message](https://platform.openai.com/docs/api-reference/chat/create) format. Here is a concrete example:

+

+```json

+{

+  "prompt": [

+    {

+      "role": "system",

+      "content": "You are a helpful assistant."

+    },

+    {

+      "role": "user",

+      "content": "Who won the world series in 2020?"

+    },

+    {

+      "role": "assistant",

+      "content": "The Los Angeles Dodgers won the World Series in 2020."

+    },

+    {

+      "role": "user",

+      "content": "Where was it played?"

+    }

+  ],

+  "chosen": [

+    {

+      "role": "assistant",

+      "content": "The 2020 World Series was played at Globe Life Field in Arlington, Texas."

+    }

+  ],

+  "rejected": [

+    {

+      "role": "assistant",

+      "content": "I don't know."

+    }

+  ]

+}

+```
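Before training, it can help to check that every sample matches this structure. The validator below is a hypothetical helper, not part of XTuner. Two of its rules are assumptions based on the example above: roles are restricted to `system`, `user`, and `assistant`, and both compared responses must end with an assistant turn.

```python
ALLOWED_ROLES = {"system", "user", "assistant"}


def validate_preference_sample(sample: dict) -> None:
    """Raise ValueError if the sample does not follow the prompt/chosen/rejected format."""
    for key in ("prompt", "chosen", "rejected"):
        messages = sample.get(key)
        if not isinstance(messages, list) or not messages:
            raise ValueError(f"'{key}' must be a non-empty list of messages")
        for message in messages:
            if not isinstance(message, dict):
                raise ValueError(f"every item in '{key}' must be a dict")
            if message.get("role") not in ALLOWED_ROLES:
                raise ValueError(f"unexpected role in '{key}': {message.get('role')!r}")
            if not isinstance(message.get("content"), str):
                raise ValueError(f"'content' in '{key}' must be a string")
    # The two responses being compared should both come from the assistant.
    for key in ("chosen", "rejected"):
        if sample[key][-1]["role"] != "assistant":
            raise ValueError(f"'{key}' must end with an assistant message")


sample = {
    "prompt": [{"role": "user", "content": "Where was it played?"}],
    "chosen": [{"role": "assistant", "content": "At Globe Life Field in Arlington, Texas."}],
    "rejected": [{"role": "assistant", "content": "I don't know."}],
}
validate_preference_sample(sample)  # passes silently
```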

+

+When conducting Reward Model training or DPO training, XTuner processes the preference dataset into different training labels based on the type of training task.

+

+

+

+As shown in the above image, for Reward Model training, we follow the ChatGPT training method by adding a special `<|reward|>` token at the end of the conversation data and calculating the loss only on the logits output by this token. For DPO series algorithm training, we mask the tokens in the prompt part and calculate the loss only on the chosen and rejected responses. In the configuration file, we control the dataset type through the `is_reward` and `is_dpo` fields in the dataset.
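The two labeling schemes can be sketched on toy token IDs. This is a simplified illustration under stated assumptions, not XTuner's preprocessing code:

- DPO-style masking uses -100 as the "ignore" label, which is the default `ignore_index` of PyTorch's `CrossEntropyLoss`.
- Reward Model training uses a 0/1 mask that selects only the appended reward token.

The sketch ignores the one-position shift that is applied when computing next-token log-probabilities. All IDs are made up.

```python
IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss


def build_dpo_example(prompt_ids: list, response_ids: list) -> tuple:
    """Concatenate prompt and response; mask the prompt so only the response is scored."""
    input_ids = prompt_ids + response_ids
    labels = [IGNORE_INDEX] * len(prompt_ids) + list(response_ids)
    return input_ids, labels


def build_reward_example(prompt_ids: list, response_ids: list, reward_token_id: int) -> tuple:
    """Append the reward token; only its position contributes to the loss."""
    input_ids = prompt_ids + response_ids + [reward_token_id]
    loss_mask = [0] * (len(input_ids) - 1) + [1]
    return input_ids, loss_mask


prompt_ids, chosen_ids = [11, 12, 13], [21, 22]
print(build_dpo_example(prompt_ids, chosen_ids))
# ([11, 12, 13, 21, 22], [-100, -100, -100, 21, 22])
print(build_reward_example(prompt_ids, chosen_ids, reward_token_id=92527))
# ([11, 12, 13, 21, 22, 92527], [0, 0, 0, 0, 0, 1])
```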

+

+### Training with Custom Data

+

+After understanding the data format of a single sample, you only need to save the converted data in [JSON Lines](https://jsonlines.org/) format. Each line in the file is a sample.

+

+```json

+{"prompt": [{"content": "How can I learn to better regulate my emotions as a grown adult?", "role": "user"}], "chosen": [{"content": "You could do emotional regulation exercises with me, and try to notice and practice ways that you could better control your emotions in real life.", "role": "assistant"}], "rejected": [{"content": "Do you mean how can you learn to be more emotionally mature?", "role": "assistant"}]}

+{"prompt": [{"content": "What are some interesting things I can add to a grilled cheese sandwich?", "role": "user"}, {"content": "A tomato, a slice of pickle, some chopped pickles, some chopped green onions. Also, some mayonnaise if you like.", "role": "assistant"}, {"content": "Sounds great. Anything else?", "role": "user"}], "chosen": [{"content": "If you’d like something a little different, I recommend grating some horseradish on top of the sandwich.", "role": "assistant"}], "rejected": [{"content": "Maybe some nice fresh basil?", "role": "assistant"}]}

+{"prompt": [{"content": "How do I bob for apples?", "role": "user"}], "chosen": [{"content": "Ah! Do you mean the game of “applesauce,” or do you mean the idea of “to bob for apples”?", "role": "assistant"}], "rejected": [{"content": "Sorry, I don’t know that term.", "role": "assistant"}]}

+......

+```
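If your data starts out as Python dictionaries, the standard `json` module is enough to produce this file. Here is a minimal sketch; the file name `preference_data.jsonl` is just an example. Setting `ensure_ascii=False` keeps non-ASCII text readable, and each sample is written on exactly one line.

```python
import json
import os
import tempfile


def write_jsonl(samples: list, path: str) -> None:
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for sample in samples:
            f.write(json.dumps(sample, ensure_ascii=False) + "\n")


def read_jsonl(path: str) -> list:
    """Read a JSON Lines file back, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


samples = [{
    "prompt": [{"role": "user", "content": "How can I learn to better regulate my emotions as a grown adult?"}],
    "chosen": [{"role": "assistant", "content": "You could do emotional regulation exercises with me, and try to notice and practice ways that you could better control your emotions in real life."}],
    "rejected": [{"role": "assistant", "content": "Do you mean how can you learn to be more emotionally mature?"}],
}]

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "preference_data.jsonl")
    write_jsonl(samples, path)
    loaded = read_jsonl(path)

print(loaded == samples)  # True
```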

+

+After preparing the custom dataset, you need to fill in the path to your saved data in the `data_files` field in the configuration file. You can load multiple JSONL files simultaneously for training.

+

+```python

+#######################################################################

+# PART 3 Dataset & Dataloader #

+#######################################################################

+train_dataset = dict(

+    type=build_preference_dataset,

+    dataset=dict(

+        type=load_jsonl_dataset,

+        data_files=[

+            '/your/jsonl/path/here.jsonl',

+            '/your/another/jsonl/path/here.jsonl'

+        ]),

+)

+```

+

+### Training with Open Source Datasets

+

+Similar to configuring SFT data in XTuner, when using open-source datasets from Hugging Face, you only need to define a mapping function `map_fn` to process the dataset format into XTuner's data format.

+

+Taking `Intel/orca_dpo_pairs` as an example, this dataset has `system`, `question`, `chosen`, and `rejected` fields, with each field's value in text format instead of the [OpenAI chat message](https://platform.openai.com/docs/api-reference/chat/create) format. Therefore, we need to define a mapping function for this dataset:

+

+```python

+def intel_orca_dpo_map_fn(example):

+    prompt = [{

+        'role': 'system',

+        'content': example['system']

+    }, {

+        'role': 'user',

+        'content': example['question']

+    }]

+    chosen = [{'role': 'assistant', 'content': example['chosen']}]

+    rejected = [{'role': 'assistant', 'content': example['rejected']}]

+    return {'prompt': prompt, 'chosen': chosen, 'rejected': rejected}

+```

+

+As shown in the code, `intel_orca_dpo_map_fn` processes the four fields in the original data, converting them into `prompt`, `chosen`, and `rejected` fields, and ensures each field follows the [OpenAI chat message](https://platform.openai.com/docs/api-reference/chat/create) format, maintaining uniformity in subsequent data processing flows.

+

+After defining the mapping function, you need to import it in the configuration file and configure it in the `dataset_map_fn` field.

+

+```python

+train_dataset = dict(

+    type=build_preference_dataset,

+    dataset=dict(

+        type=load_dataset,

+        path='Intel/orca_dpo_pairs'),

+    tokenizer=tokenizer,

+    max_length=max_length,

+    dataset_map_fn=intel_orca_dpo_map_fn,

+)

+```

diff --git a/docs/en/reward_model/quick_start.md b/docs/en/reward_model/quick_start.md

new file mode 100644

index 000000000..5c802be2f

--- /dev/null

+++ b/docs/en/reward_model/quick_start.md

@@ -0,0 +1,85 @@

+## Quick Start Guide for Reward Model

+

+In this section, we will introduce how to use XTuner to train a 1.8B Reward Model, helping you get started quickly.

+

+### Preparing Pretrained Model Weights

+

+According to the paper [Training language models to follow instructions with human feedback](https://arxiv.org/abs/2203.02155), we use a language model fine-tuned with SFT as the initialization model for the Reward Model. Here, we use [InternLM2-chat-1.8b-sft](https://huggingface.co/internlm/internlm2-chat-1_8b-sft) as the initialization model.

+

+Set `pretrained_model_name_or_path = 'internlm/internlm2-chat-1_8b-sft'` in the training configuration file, and the model files will be downloaded automatically when training starts. If you need to download the model weights manually, refer to the section [Preparing Pretrained Model Weights](https://xtuner.readthedocs.io/zh-cn/latest/preparation/pretrained_model.html), which explains in detail how to download model weights from HuggingFace or ModelScope. The model is available at the following links:

+

+- HuggingFace link: https://huggingface.co/internlm/internlm2-chat-1_8b-sft

+- ModelScope link: https://modelscope.cn/models/Shanghai_AI_Laboratory/internlm2-chat-1_8b-sft/summary

+

+### Preparing Training Data

+

+In this tutorial, we use the [UltraFeedback](https://arxiv.org/abs/2310.01377) dataset as an example. For convenience, we use the preprocessed [argilla/ultrafeedback-binarized-preferences-cleaned](https://huggingface.co/datasets/argilla/ultrafeedback-binarized-preferences-cleaned) dataset from HuggingFace.

+

+```python
+train_dataset = dict(
+    type=build_preference_dataset,
+    dataset=dict(
+        type=load_dataset,
+        path='argilla/ultrafeedback-binarized-preferences-cleaned'),
+    dataset_map_fn=orpo_dpo_mix_40k_map_fn,
+    is_dpo=False,
+    is_reward=True,
+)
+```

+

+With the configuration above, the dataset is downloaded and processed automatically. If you want to use other open-source datasets from HuggingFace or your own custom datasets, refer to the [Preference Dataset](./preference_data.md) section.
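When writing a mapping function for your own data, a common case is a dataset whose `chosen` and `rejected` columns each hold a full conversation: the prompt turns followed by one assistant reply. The sketch below shows one way to split such records into the `prompt`/`chosen`/`rejected` layout. `conversation_pair_map_fn` is a hypothetical name, the assumption that both conversations share identical prompt turns is ours (check it against your data), and this is not the implementation of XTuner's built-in `orpo_dpo_mix_40k_map_fn`.

```python
def conversation_pair_map_fn(example):
    """Split full chosen/rejected conversations into prompt and responses.

    Hypothetical sketch. Assumes each conversation is a list of
    {'role', 'content'} messages ending with the assistant reply, and that
    both conversations share the same preceding turns.
    """
    chosen_conv = example['chosen']
    rejected_conv = example['rejected']
    prompt = chosen_conv[:-1]
    if rejected_conv[:-1] != prompt:
        raise ValueError('chosen and rejected do not share the same prompt')
    return {
        'prompt': prompt,
        'chosen': chosen_conv[-1:],  # keep only the final assistant message
        'rejected': rejected_conv[-1:],
    }


record = {
    'chosen': [
        {'role': 'user', 'content': 'Name a prime number.'},
        {'role': 'assistant', 'content': '7 is a prime number.'},
    ],
    'rejected': [
        {'role': 'user', 'content': 'Name a prime number.'},
        {'role': 'assistant', 'content': '9 is a prime number.'},
    ],
}
mapped = conversation_pair_map_fn(record)
print(mapped['prompt'])
# [{'role': 'user', 'content': 'Name a prime number.'}]
```

Raising an error on mismatched prompts, instead of silently picking one, makes data problems visible early.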

+

+### Preparing Configuration Files

+

+XTuner provides several ready-to-use configuration files, which you can list with `xtuner list-cfg`. Run the following command to copy a configuration file to the current directory.

+

+```bash

+xtuner copy-cfg internlm2_chat_1_8b_reward_full_ultrafeedback .

+```

+

+Open the copied configuration file. If you let the model and dataset be downloaded automatically, no changes are needed. To use a model and dataset you have already downloaded, set `pretrained_model_name_or_path` and the `path` parameter of `dataset` under `train_dataset` to their local paths.

+

+For more training parameters, refer to the section [Modifying Reward Training Configuration](./modify_settings.md).

+

+### Starting the Training

+

+After completing the steps above, start training with one of the following commands:

+

+```bash

+# Single node single GPU

+xtuner train ./internlm2_chat_1_8b_reward_full_ultrafeedback_copy.py

+# Single node multiple GPUs

+NPROC_PER_NODE=${GPU_NUM} xtuner train ./internlm2_chat_1_8b_reward_full_ultrafeedback_copy.py

+# Slurm cluster

+srun ${SRUN_ARGS} xtuner train ./internlm2_chat_1_8b_reward_full_ultrafeedback_copy.py --launcher slurm

+```

+

+A normal training log looks like the following (running on a single A800 GPU):

+

+```

+06/06 16:12:11 - mmengine - INFO - Iter(train) [ 10/15230] lr: 3.9580e-07 eta: 2:59:41 time: 0.7084 data_time: 0.0044 memory: 18021 loss: 0.6270 acc: 0.0000 chosen_score_mean: 0.0000 rejected_score_mean: 0.0000 num_samples: 4.0000 num_tokens: 969.0000

+06/06 16:12:17 - mmengine - INFO - Iter(train) [ 20/15230] lr: 8.3536e-07 eta: 2:45:25 time: 0.5968 data_time: 0.0034 memory: 42180 loss: 0.6270 acc: 0.5000 chosen_score_mean: 0.0013 rejected_score_mean: 0.0010 num_samples: 4.0000 num_tokens: 1405.0000

+06/06 16:12:22 - mmengine - INFO - Iter(train) [ 30/15230] lr: 1.2749e-06 eta: 2:37:18 time: 0.5578 data_time: 0.0024 memory: 32121 loss: 0.6270 acc: 0.7500 chosen_score_mean: 0.0016 rejected_score_mean: 0.0011 num_samples: 4.0000 num_tokens: 932.0000

+06/06 16:12:28 - mmengine - INFO - Iter(train) [ 40/15230] lr: 1.7145e-06 eta: 2:36:05 time: 0.6033 data_time: 0.0025 memory: 42186 loss: 0.6270 acc: 0.7500 chosen_score_mean: 0.0027 rejected_score_mean: 0.0016 num_samples: 4.0000 num_tokens: 994.0000

+06/06 16:12:35 - mmengine - INFO - Iter(train) [ 50/15230] lr: 2.1540e-06 eta: 2:41:03 time: 0.7166 data_time: 0.0027 memory: 42186 loss: 0.6278 acc: 0.5000 chosen_score_mean: 0.0031 rejected_score_mean: 0.0032 num_samples: 4.0000 num_tokens: 2049.0000

+06/06 16:12:40 - mmengine - INFO - Iter(train) [ 60/15230] lr: 2.5936e-06 eta: 2:33:37 time: 0.4627 data_time: 0.0023 memory: 30238 loss: 0.6262 acc: 1.0000 chosen_score_mean: 0.0057 rejected_score_mean: 0.0030 num_samples: 4.0000 num_tokens: 992.0000

+06/06 16:12:46 - mmengine - INFO - Iter(train) [ 70/15230] lr: 3.0331e-06 eta: 2:33:18 time: 0.6018 data_time: 0.0025 memory: 42186 loss: 0.6247 acc: 0.7500 chosen_score_mean: 0.0117 rejected_score_mean: 0.0055 num_samples: 4.0000 num_tokens: 815.0000

+```
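The log reports `loss`, `acc`, `chosen_score_mean`, and `rejected_score_mean`. As a rough mental model, the sketch below computes these quantities for a batch of scalar rewards using the pairwise ranking loss from the InstructGPT paper cited above, `-log(sigmoid(r_chosen - r_rejected))`, and treats `acc` as the fraction of pairs in which the chosen response scores strictly higher. This is an illustrative assumption: XTuner's actual loss may add other terms or a different normalization, so these numbers are not expected to match the log above.

```python
import math


def log_sigmoid(x):
    # Numerically stable log(sigmoid(x)) for large positive or negative x.
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def reward_metrics(chosen_scores, rejected_scores):
    """Pairwise ranking loss and summary statistics for one batch (sketch)."""
    if len(chosen_scores) != len(rejected_scores) or not chosen_scores:
        raise ValueError('need equal-length, non-empty score lists')
    n = len(chosen_scores)
    pairs = list(zip(chosen_scores, rejected_scores))
    # Ranking loss: -log(sigmoid(r_chosen - r_rejected)), averaged over pairs.
    loss = -sum(log_sigmoid(c - r) for c, r in pairs) / n
    # Accuracy: fraction of pairs where the chosen response scores higher.
    acc = sum(c > r for c, r in pairs) / n
    return {
        'loss': loss,
        'acc': acc,
        'chosen_score_mean': sum(chosen_scores) / n,
        'rejected_score_mean': sum(rejected_scores) / n,
    }


metrics = reward_metrics([0.0, 0.0], [0.0, 0.0])
print(round(metrics['loss'], 4))  # 0.6931, i.e. log(2) when scores are tied
```

Under this loss, tied scores give `log(2)`, and the loss falls as the margin between chosen and rejected scores grows, which is why `acc` and the gap between the two score means are useful signals to watch during training.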

+

+### Model Conversion

+

+XTuner provides built-in tools to convert models to HuggingFace format. Run the following commands:

+

+```bash
+# Create a directory to store HF format parameters
+mkdir work_dirs/internlm2_chat_1_8b_reward_full_ultrafeedback_copy/iter_15230_hf
+
+# Convert the format
+xtuner convert pth_to_hf internlm2_chat_1_8b_reward_full_ultrafeedback_copy.py \
+    work_dirs/internlm2_chat_1_8b_reward_full_ultrafeedback_copy/iter_15230.pth \
+    work_dirs/internlm2_chat_1_8b_reward_full_ultrafeedback_copy/iter_15230_hf
+```

+

+This converts the XTuner checkpoint to HuggingFace format.
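The paths passed to `xtuner convert pth_to_hf` follow the default work directory convention of `xtuner train`: when no `--work-dir` is given, outputs go to `work_dirs/<config file name without .py>/`. The helper below is a hypothetical sketch that derives the checkpoint path and the HuggingFace output directory from the config name and iteration, which avoids accidentally keeping the `.py` suffix in the directory name.

```python
from pathlib import Path


def conversion_paths(config_path, iteration, work_root='work_dirs'):
    """Return (checkpoint, hf_output_dir) for `xtuner convert pth_to_hf`.

    Hypothetical helper. Assumes the default work directory, i.e.
    <work_root>/<config file name without extension>/.
    """
    work_dir = Path(work_root) / Path(config_path).stem
    checkpoint = work_dir / f'iter_{iteration}.pth'
    hf_dir = work_dir / f'iter_{iteration}_hf'
    return checkpoint, hf_dir


ckpt, hf_dir = conversion_paths(
    './internlm2_chat_1_8b_reward_full_ultrafeedback_copy.py', 15230)
print(ckpt.as_posix())
# work_dirs/internlm2_chat_1_8b_reward_full_ultrafeedback_copy/iter_15230.pth
print(hf_dir.as_posix())
# work_dirs/internlm2_chat_1_8b_reward_full_ultrafeedback_copy/iter_15230_hf
```

If you pass `--work-dir` to `xtuner train`, use that directory as `work_root` plus stem replacement accordingly, since the default naming no longer applies.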

+

+Note: Because the official transformers library has no Reward Model class, only Reward Models trained from InternLM2 are converted to the `InternLM2ForRewardModel` type. Other models default to the corresponding `SequenceClassification` type (for example, LLaMA3 is converted to `LlamaForSequenceClassification`).

diff --git a/docs/en/switch_language.md b/docs/en/switch_language.md

new file mode 100644

index 000000000..ff7c4c425

--- /dev/null

+++ b/docs/en/switch_language.md

@@ -0,0 +1,3 @@

+## English

+

+## 简体中文

diff --git a/docs/en/training/custom_agent_dataset.rst b/docs/en/training/custom_agent_dataset.rst

new file mode 100644

index 000000000..b4ad82f01

--- /dev/null

+++ b/docs/en/training/custom_agent_dataset.rst

@@ -0,0 +1,2 @@

+Custom Agent Dataset

+====================

diff --git a/docs/en/training/custom_pretrain_dataset.rst b/docs/en/training/custom_pretrain_dataset.rst

new file mode 100644

index 000000000..00ef0e0cb

--- /dev/null

+++ b/docs/en/training/custom_pretrain_dataset.rst

@@ -0,0 +1,2 @@

+Custom Pretrain Dataset

+=======================

diff --git a/docs/en/training/custom_sft_dataset.rst b/docs/en/training/custom_sft_dataset.rst

new file mode 100644

index 000000000..39a0f7c33

--- /dev/null

+++ b/docs/en/training/custom_sft_dataset.rst

@@ -0,0 +1,2 @@

+Custom SFT Dataset

+==================

diff --git a/docs/en/training/modify_settings.rst b/docs/en/training/modify_settings.rst

new file mode 100644

index 000000000..382aca872

--- /dev/null

+++ b/docs/en/training/modify_settings.rst

@@ -0,0 +1,2 @@

+Modify Settings

+===============

diff --git a/docs/en/training/multi_modal_dataset.rst b/docs/en/training/multi_modal_dataset.rst

new file mode 100644

index 000000000..e3d174a1b

--- /dev/null

+++ b/docs/en/training/multi_modal_dataset.rst

@@ -0,0 +1,2 @@

+Multi-modal Dataset

+===================