A simple solution to incorporate object localization into conventional computer vision object detection algorithms.

IDEA: There aren't many open-source real-time 3D object detectors. This project uses a more popular 2D object detector and then localizes each detection in 3D with a few feature points. It uses the recently released Barracuda for object detection and ARFoundation for AR, and works on both iOS and Android devices.
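The localization idea can be sketched in a few lines. This is a minimal, hypothetical Python illustration, not the project's C# code. It assumes each AR feature point is already available both as a screen-space pixel position and as a world-space 3D position, and that detection boxes use the same pixel space. `FeaturePoint`, `Box` and `localize` are names made up for this example.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional, Tuple


@dataclass
class FeaturePoint:
    # Pixel position of the point in the camera image (assumed already projected).
    screen: Tuple[float, float]
    # Corresponding position in AR world space.
    world: Tuple[float, float, float]


@dataclass
class Box:
    # Axis-aligned 2D detection box: top-left corner plus width and height, in pixels.
    x: float
    y: float
    w: float
    h: float

    def contains(self, p: Tuple[float, float]) -> bool:
        px, py = p
        return self.x <= px <= self.x + self.w and self.y <= py <= self.y + self.h


def localize(box: Box, points: List[FeaturePoint],
             min_points: int = 3) -> Optional[Tuple[float, float, float]]:
    """Estimate a 3D position for a 2D detection.

    Keeps the feature points whose screen positions fall inside the box and
    returns the per-axis median of their world positions. Returns None when
    too few points support the estimate.
    """
    inside = [fp.world for fp in points if box.contains(fp.screen)]
    if len(inside) < min_points:
        return None
    xs, ys, zs = zip(*inside)
    return (median(xs), median(ys), median(zs))


pts = [
    FeaturePoint((110, 120), (0.10, 0.00, 1.00)),
    FeaturePoint((130, 140), (0.12, 0.01, 1.02)),
    FeaturePoint((150, 160), (0.14, 0.02, 0.98)),
    FeaturePoint((400, 400), (2.00, 0.00, 5.00)),  # outside the box, ignored
]
print(localize(Box(100, 100, 100, 100), pts))  # → (0.12, 0.01, 1.0)
```

The median is used instead of the mean so that a few background points that happen to project into the box pull the estimate less.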

Required packages (`Packages/manifest.json`):

```json
"com.unity.barracuda": "1.0.3",
"com.unity.xr.arfoundation": "4.0.8",
"com.unity.xr.arkit": "4.0.8",
"com.unity.xr.arcore": "4.0.8"
```

It was developed in Unity 2019.4.9 and requires the production-ready Barracuda release together with the updated AR packages listed above. Preview versions of Barracuda seem unstable and may not work.

- Open the project in Unity (version 2019.4.9 or later).

- In Edit -> Project Settings -> XR Plug-in Management, make sure Initialize XR on Startup is checked and the plug-in providers (ARKit for iOS, ARCore for Android) are enabled so the AR Camera works.

- Make sure the Detector has its ONNX Model file and Labels file set.

- For Android, check the minimum API level at Project Settings -> Player -> Other Settings -> Minimum API Level. The app requires at least Android 7.0 'Nougat' (API level 24).

- In File -> Build Settings, choose the Detect scene and click Build And Run.

- For iOS, set your team in Xcode under Signing & Capabilities.

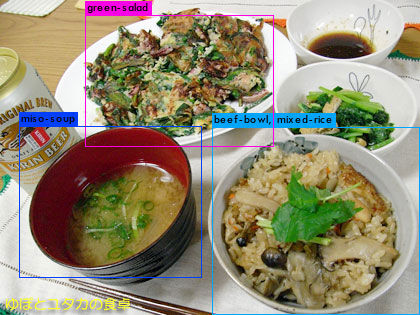

The currently uploaded model is YOLOv2 (tiny), trained on the FOOD100 dataset with Darknet. A good example of the training tool is here. Ideally, it can detect 100 categories of dishes.
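For reference, a YOLOv2 head predicts, for every grid cell and each of B anchor boxes, four box offsets (tx, ty, tw, th), one objectness score and C class scores. The box centre is `(cell + sigmoid(t)) * stride`, the size is `anchor * exp(t) * stride`, and class scores go through a softmax. The plain-Python sketch below decodes the raw values of one anchor. It assumes a 32-pixel stride (e.g. a 416×416 input giving a 13×13 grid) and that the values for one anchor have already been sliced out of the output tensor. It is an illustration, not the code in Detector.cs, and the anchor sizes must come from your model's .cfg.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [e / total for e in exps]


def decode_cell(raw: Sequence[float], col: int, row: int,
                anchor: Tuple[float, float], stride: float = 32.0
                ) -> Tuple[Tuple[float, float, float, float], int, float]:
    """Decode one anchor: raw = [tx, ty, tw, th, objectness, class logits...].

    Returns the box (center x, center y, width, height) in input pixels,
    the best class index and its score (objectness * class probability).
    """
    tx, ty, tw, th, to = raw[:5]
    cx = (col + sigmoid(tx)) * stride
    cy = (row + sigmoid(ty)) * stride
    w = anchor[0] * math.exp(tw) * stride  # anchor sizes are in grid units
    h = anchor[1] * math.exp(th) * stride
    probs = softmax(raw[5:])
    best = max(range(len(probs)), key=probs.__getitem__)
    score = sigmoid(to) * probs[best]
    return (cx, cy, w, h), best, score
```

Detections are then kept when `score` exceeds a threshold and overlapping boxes are usually merged with non-maximum suppression.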

- Convert your model to ONNX format. If it was trained with Darknet, first convert it to a frozen TensorFlow model, then to ONNX.

- Upload the model and labels file to Assets/Models. Use the Inspector to check the model's input and output names, and update the associated variables here and here in Assets/Scripts/Detector.cs.
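When swapping in your own model, a quick sanity check is that the number of labels matches the depth of the model's output. The sketch below assumes a YOLOv2-style head with 5 anchors and a labels file with one class name per line. `parse_labels` and `check_output_channels` are hypothetical helpers, not part of the project; read the actual output shape from the Inspector. If the bundled 100-class model uses 5 anchors, it would have 5 × (5 + 100) = 525 output channels.

```python
from typing import List


def parse_labels(text: str) -> List[str]:
    """Parse a labels file, assumed to hold one class name per line."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def check_output_channels(labels: List[str], output_channels: int,
                          num_anchors: int = 5) -> None:
    """Raise ValueError if a YOLOv2-style output depth does not match the labels.

    Each anchor predicts 4 box offsets + 1 objectness score + one score per
    class, so the expected depth is num_anchors * (5 + len(labels)).
    """
    expected = num_anchors * (5 + len(labels))
    if output_channels != expected:
        raise ValueError(
            f"model has {output_channels} output channels, but {len(labels)} "
            f"labels with {num_anchors} anchors imply {expected}"
        )


labels = parse_labels("ramen\nsushi\n\nudon\n")
check_output_channels(labels, 40)  # 5 * (5 + 3) = 40, so no error is raised
```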

Parts of the code are borrowed from TFClassify-Unity-Barracuda and arfoundation-samples.