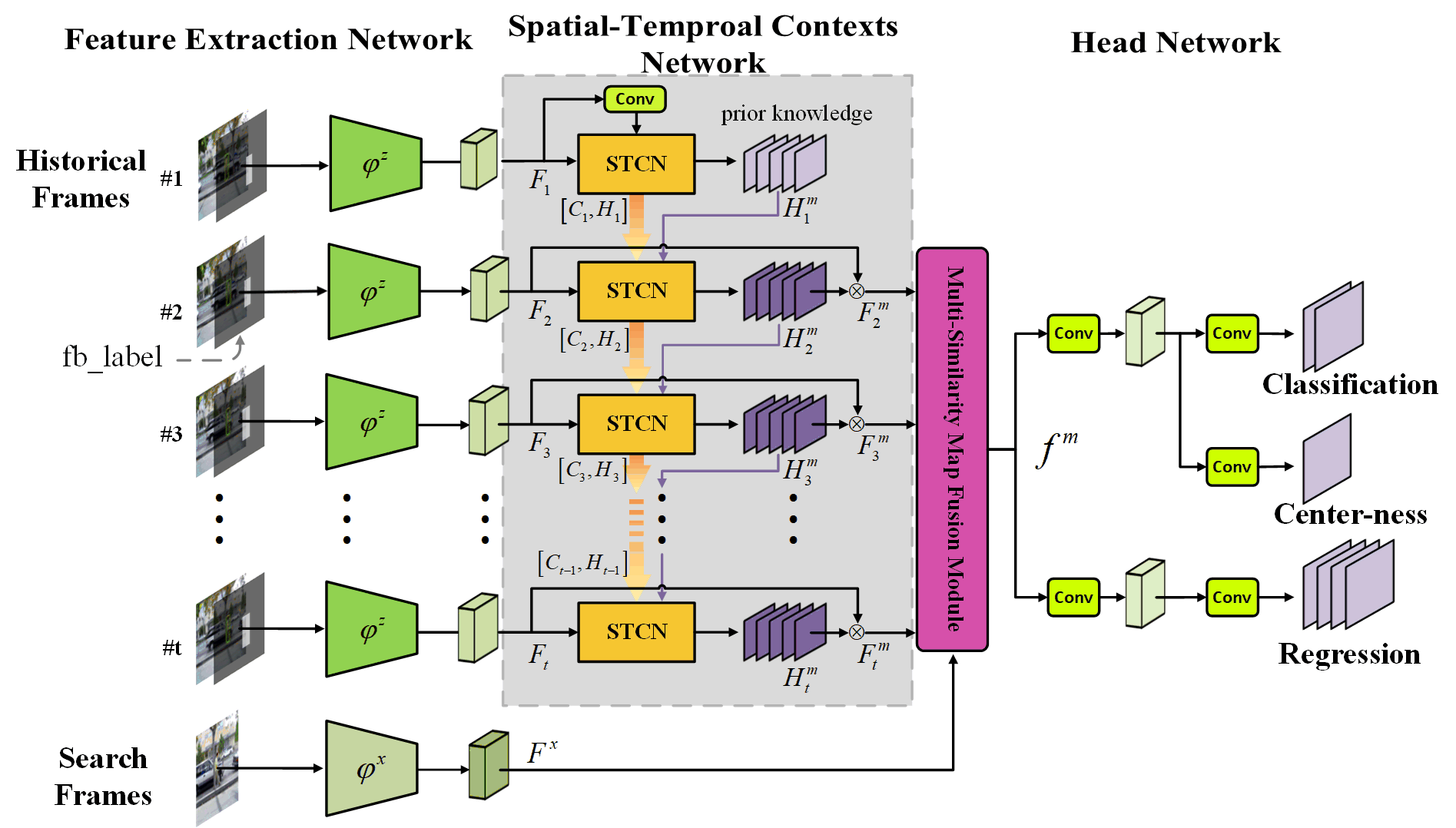

This is the official implementation of the paper: Don't Forget the Past, Learn From It for Object Tracking.

- Prepare Anaconda, CUDA, and the corresponding toolkits. CUDA version required: 10.0+

- Create a new conda environment and activate it.

conda create -n STCTrack python=3.7 -y

conda activate STCTrack

- Install `pytorch` and `torchvision`.

conda install pytorch==1.4.0 torchvision==0.5.0 cudatoolkit=10.0 -c pytorch

PyTorch v1.5.0, v1.6.0, or higher should also work.

- Install the other required packages.

pip install -r requirements.txt

- Prepare the datasets: OTB2015, VOT2018, UAV123, GOT-10k, TrackingNet, LaSOT, ILSVRC VID\*, ILSVRC DET\*, COCO\*, and any other benchmarks you want to test on. Set up the paths as follows:

├── STCTrack

| ├── ...

| ├── ...

| ├── datasets

| | ├── COCO -> /opt/data/COCO

| | ├── GOT-10k -> /opt/data/GOT-10k

| | ├── ILSVRC2015 -> /opt/data/ILSVRC2015

| | ├── LaSOT -> /opt/data/LaSOT/LaSOTBenchmark

| | ├── OTB

| | | └── OTB2015 -> /opt/data/OTB2015

| | ├── TrackingNet -> /opt/data/TrackingNet

| | ├── UAV123 -> /opt/data/UAV123/UAV123

| | ├── VOT

| | | ├── vot2018

| | | | ├── VOT2018 -> /opt/data/VOT2018

| | | | └── VOT2018.json

- Notes

i. Star notation (\*): these datasets are only needed for training. You can ignore them if you only want to test the tracker.

ii. Here we create a soft link for every dataset. The datasets are actually stored under `/opt/data/`; change these paths to match your setup.

iii. The `VOT2018.json` file can be downloaded from here.
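If you would rather script the soft links than create them by hand, the following is a minimal sketch that reproduces the example tree above. It assumes the raw datasets sit under `/opt/data/` and that it is run from the STCTrack root; the helper `make_dataset_links` and the `LINKS` table are illustrative names, not part of the repository.

```python
"""Hypothetical helper: recreate the dataset soft links from the example tree.

ASSUMPTION: raw data lives under /opt/data/ and this runs from the STCTrack
root. Adjust LINKS and the data root to match your own storage layout.
"""
import os
from pathlib import Path

# Link path inside STCTrack/datasets -> target path relative to the data root.
LINKS = {
    "COCO": "COCO",
    "GOT-10k": "GOT-10k",
    "ILSVRC2015": "ILSVRC2015",
    "LaSOT": "LaSOT/LaSOTBenchmark",
    "OTB/OTB2015": "OTB2015",
    "TrackingNet": "TrackingNet",
    "UAV123": "UAV123/UAV123",
    "VOT/vot2018/VOT2018": "VOT2018",
}


def make_dataset_links(repo_root, data_root, links=LINKS):
    """Create soft links under <repo_root>/datasets and return the new ones."""
    datasets_dir = Path(repo_root) / "datasets"
    created = []
    for link_name, target in links.items():
        link_path = datasets_dir / link_name
        target_path = Path(data_root) / target
        # Intermediate folders such as OTB/ and VOT/vot2018/ are real directories.
        link_path.parent.mkdir(parents=True, exist_ok=True)
        # Skip anything already present (including broken links) instead of overwriting.
        if link_path.is_symlink() or link_path.exists():
            continue
        os.symlink(target_path, link_path, target_is_directory=True)
        created.append(link_path)
    return created


if __name__ == "__main__":
    for path in make_dataset_links(".", "/opt/data"):
        print(f"linked {path} -> {os.readlink(path)}")
```

Existing entries are left untouched, so the script can be rerun safely. `VOT2018.json` still has to be placed in `datasets/VOT/vot2018/` separately, since it is a file rather than a link.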

- Download the models we trained.

  - GOT-10k model (😁Coming soon...)

  - fulldata model (😁Coming soon...)

- Set the `pretrain_model_path` item in the configuration file to the path of the trained model, then run the shell command.

- Note that all paths used here are relative, not absolute. See any configuration file in the `experiments` directory for examples and details.
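Editing the YAML by hand works fine; as an alternative, here is a small sketch that rewrites every `pretrain_model_path` entry in a config. It assumes PyYAML is installed and that the key appears under exactly that name somewhere in the nested config; `set_pretrain_model_path` and `update_config_file` are hypothetical helpers, not functions from this repository.

```python
"""Hypothetical helper: point every `pretrain_model_path` in a config at a model.

ASSUMPTION: PyYAML is installed and the weight path is stored under a key
literally named `pretrain_model_path`, at any nesting depth.
"""


def set_pretrain_model_path(cfg, model_path):
    """Recursively replace every `pretrain_model_path` value; return the count."""
    count = 0
    if isinstance(cfg, dict):
        for key, value in cfg.items():
            if key == "pretrain_model_path":
                # Reassigning an existing key while iterating is safe: size is unchanged.
                cfg[key] = model_path
                count += 1
            else:
                count += set_pretrain_model_path(value, model_path)
    elif isinstance(cfg, list):
        for item in cfg:
            count += set_pretrain_model_path(item, model_path)
    return count


def update_config_file(config_path, model_path, output_path=None):
    """Load a YAML config, set the model path, and write it back out."""
    import yaml  # PyYAML; imported here so the helper above has no dependency

    with open(config_path) as f:
        cfg = yaml.safe_load(f)
    n = set_pretrain_model_path(cfg, model_path)
    if n == 0:
        raise KeyError(f"no 'pretrain_model_path' found in {config_path}")
    # Without an output path the original file is overwritten.
    with open(output_path or config_path, "w") as f:
        yaml.safe_dump(cfg, f, sort_keys=False)
    return n


if __name__ == "__main__":
    import sys

    # usage: python set_model_path.py CONFIG MODEL_PATH [OUTPUT]
    print(update_config_file(*sys.argv[1:4]), "entries updated")
```

`yaml.safe_dump` does not preserve comments or the original YAML anchors, so passing a separate output file (the optional third argument) and using that file with `--config` keeps the shipped config intact.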

python main/test.py --config testing_dataset_config_file_path

Take GOT-10k as an example:

python main/test.py --config experiments/stctrack/test/got10k/stctrack-got.yaml

- Prepare the datasets as described in the previous subsection.

- Download the pretrained backbone model from here (😁Coming soon...).

- Run the shell command.
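Before launching a long training run, it can help to confirm that the dataset links actually resolve. The sketch below does that and then calls `main/train.py` with one of the two config paths copied from the commands below. The helper names and, in particular, the `REQUIRED_DATASETS` table are assumptions; check the training YAML files for the datasets each setting really uses.

```python
"""Hypothetical pre-flight check before launching training.

ASSUMPTION: REQUIRED_DATASETS is a guess at which dataset links each
setting reads; verify it against the training config you use.
"""
import subprocess
import sys
from pathlib import Path

# Config paths copied verbatim from the training commands in this README.
TRAIN_CONFIGS = {
    "got10k": "experiments/stctrack/train/got10k/stctrack-trn.yaml",
    "fulldata": "experiments/stmtrack/train/fulldata/stctrack-trn-fulldata.yaml",
}

# ASSUMPTION: dataset links needed per setting (names follow the datasets/ tree).
REQUIRED_DATASETS = {
    "got10k": ["GOT-10k"],
    "fulldata": ["GOT-10k", "LaSOT", "TrackingNet", "ILSVRC2015", "COCO"],
}


def missing_datasets(repo_root, names):
    """Return entries under <repo_root>/datasets that are absent or dangling."""
    datasets_dir = Path(repo_root) / "datasets"
    # Path.exists() follows symlinks, so a broken link counts as missing.
    return [name for name in names if not (datasets_dir / name).exists()]


def build_train_command(setting):
    """Build the argv list for main/train.py with the chosen config."""
    return [sys.executable, "main/train.py", "--config", TRAIN_CONFIGS[setting]]


if __name__ == "__main__":
    setting = sys.argv[1] if len(sys.argv) > 1 else "got10k"
    missing = missing_datasets(".", REQUIRED_DATASETS[setting])
    if missing:
        sys.exit(f"missing or broken dataset links: {missing}")
    subprocess.run(build_train_command(setting), check=True)
```

Running it as `python preflight.py fulldata` (the file name is arbitrary) exits early with a list of the missing links instead of failing partway through training.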

Train on GOT-10k:

python main/train.py --config experiments/stctrack/train/got10k/stctrack-trn.yaml

Train on the full data:

python main/train.py --config experiments/stmtrack/train/fulldata/stctrack-trn-fulldata.yaml

Click Baidu Web Drive (code: 0fzv) to download all of the following.

- OTB2015: Baidu Web Drive (code: z856), Google Drive (😁Coming soon...)

- GOT-10k: Baidu Web Drive (code: xnwq), Google Drive (😁Coming soon...)

- LaSOT: Baidu Web Drive (code: 6zfu), Google Drive (😁Coming soon...)

- TrackingNet: Baidu Web Drive (code: 26at), Google Drive (😁Coming soon...)

- UAV123: Baidu Web Drive (code: k3id), Google Drive (😁Coming soon...)

This repository is built on the single object tracking framework video_analyst. Refer to it for more instructions and details.

- Kai Huang @kevoen

If you have any questions, feel free to open an issue or email me :smile:.