
2024/05/05: 🎉🎉🎉Sample training data on HuggingFace released.

2024/05/02: 🌟🌟🌟Training source code released #99.

2024/04/28: 👏👏👏Method for smoothing SMPLs in Blender released #96.

2024/04/26: 🚁Great Blender add-on CEB Studios for various SMPL processing!

2024/04/12: ✨✨✨SMPL & Rendering scripts released! Champ your dance videos now💃🤸♂️🕺. See docs.

2024/03/30: 🚀🚀🚀Amazing ComfyUI wrapper by the community. Here is the video tutorial. Thanks to @kijai🥳

2024/03/27: Cool demo on Replicate🌟. Thanks to @camenduru👏

2024/03/27: Visit our roadmap🕒 to preview the future of Champ.

- System requirements: Ubuntu 20.04/Windows 11, CUDA 12.1

- Tested GPUs: A100, RTX 3090
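After the environment below is created and the packages are installed, a quick sanity check can confirm that the interpreter and the CUDA build match these requirements. This is a minimal sketch, not part of the repo: the helper name `check_environment` is hypothetical, the expected Python 3.10 comes from the conda command below, and the CUDA check assumes PyTorch is installed and relies on its `torch.version.cuda` attribute.

```python
import sys


def check_environment(expected_python=(3, 10), expected_cuda="12.1"):
    """Hypothetical helper: compare the running setup with the stated requirements."""
    report = {
        "python": f"{sys.version_info.major}.{sys.version_info.minor}",
        "python_ok": sys.version_info[:2] == expected_python,
        "torch_cuda": None,     # CUDA version PyTorch was built against
        "cuda_ok": None,        # None means PyTorch is not installed yet
        "gpu_available": None,
    }
    try:
        import torch  # only importable after the packages are installed
    except ImportError:
        return report
    report["torch_cuda"] = torch.version.cuda  # e.g. "12.1", None for CPU-only builds
    report["cuda_ok"] = torch.version.cuda == expected_cuda
    report["gpu_available"] = torch.cuda.is_available()
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

A `cuda_ok` of `False` only says the PyTorch wheel targets a different CUDA version; it does not necessarily mean inference will fail.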

Create a conda environment:

conda create -n champ python=3.10

conda activate champ

Install packages with pip:

pip install -r requirements.txt

Or install packages with poetry. If you want to run this project on a Windows device, we strongly recommend using poetry.

poetry install --no-root

The inference entry point script is ${PROJECT_ROOT}/inference.py. Before testing your cases, two preparations need to be completed:

You can easily get all pretrained models required for inference from our HuggingFace repo.

Clone the pretrained models into the ${PROJECT_ROOT}/pretrained_models directory with the commands below:

git lfs install

git clone https://huggingface.co/fudan-generative-ai/champ pretrained_models

Or you can download them separately from their source repos:

- Champ ckpts: consist of the denoising UNet, guidance encoders, reference UNet, and motion module.

- StableDiffusion V1.5: initialized and fine-tuned from Stable-Diffusion-v1-2. (Thanks to runwayml)

- sd-vae-ft-mse: weights are intended to be used with the diffusers library. (Thanks to stabilityai)

- image_encoder: fine-tuned from CompVis/stable-diffusion-v1-4-original to accept CLIP image embeddings rather than text embeddings. (Thanks to lambdalabs)
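If git-lfs is not available, the same HuggingFace repo used in the `git clone` command above can likely be fetched from Python with the `snapshot_download` function of the `huggingface_hub` package. This is a sketch under the assumption that `huggingface_hub` is installed (this README does not list it); `download_pretrained` is a hypothetical helper name, and the import is deferred so the module loads without the package.

```python
from pathlib import Path

# Repo id taken from the git clone URL above.
CHAMP_REPO_ID = "fudan-generative-ai/champ"


def download_pretrained(local_dir="pretrained_models"):
    """Hypothetical helper: download every file of the champ model repo into local_dir."""
    from huggingface_hub import snapshot_download  # requires `pip install huggingface_hub`

    path = snapshot_download(repo_id=CHAMP_REPO_ID, local_dir=local_dir)
    return Path(path)
```

Calling `download_pretrained()` from ${PROJECT_ROOT} should produce the same `pretrained_models` folder as the clone. The same `snapshot_download` call with `repo_type="dataset"` and `repo_id="fudan-generative-ai/champ_motions_example"` should likewise fetch the example motions.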

Finally, these pretrained models should be organized as follows:

./pretrained_models/
|-- champ
|   |-- denoising_unet.pth
|   |-- guidance_encoder_depth.pth
|   |-- guidance_encoder_dwpose.pth
|   |-- guidance_encoder_normal.pth
|   |-- guidance_encoder_semantic_map.pth
|   |-- reference_unet.pth
|   `-- motion_module.pth
|-- image_encoder
|   |-- config.json
|   `-- pytorch_model.bin
|-- sd-vae-ft-mse
|   |-- config.json
|   |-- diffusion_pytorch_model.bin
|   `-- diffusion_pytorch_model.safetensors
`-- stable-diffusion-v1-5
    |-- feature_extractor
    |   `-- preprocessor_config.json
    |-- model_index.json
    |-- unet
    |   |-- config.json
    |   `-- diffusion_pytorch_model.bin
    `-- v1-inference.yaml
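Before running inference, it can help to confirm that every file in the tree above is in place. The sketch below is a hypothetical helper, not part of the repo; the expected paths are copied from the tree, and it assumes it is run from ${PROJECT_ROOT}.

```python
from pathlib import Path

# Files listed in the directory tree above, relative to ./pretrained_models/.
EXPECTED_FILES = [
    "champ/denoising_unet.pth",
    "champ/guidance_encoder_depth.pth",
    "champ/guidance_encoder_dwpose.pth",
    "champ/guidance_encoder_normal.pth",
    "champ/guidance_encoder_semantic_map.pth",
    "champ/reference_unet.pth",
    "champ/motion_module.pth",
    "image_encoder/config.json",
    "image_encoder/pytorch_model.bin",
    "sd-vae-ft-mse/config.json",
    "sd-vae-ft-mse/diffusion_pytorch_model.bin",
    "sd-vae-ft-mse/diffusion_pytorch_model.safetensors",
    "stable-diffusion-v1-5/feature_extractor/preprocessor_config.json",
    "stable-diffusion-v1-5/model_index.json",
    "stable-diffusion-v1-5/unet/config.json",
    "stable-diffusion-v1-5/unet/diffusion_pytorch_model.bin",
    "stable-diffusion-v1-5/v1-inference.yaml",
]


def missing_pretrained_files(root="pretrained_models"):
    """Return the expected files absent under root (an empty list means complete)."""
    root_path = Path(root)
    return [rel for rel in EXPECTED_FILES if not (root_path / rel).is_file()]


if __name__ == "__main__":
    missing = missing_pretrained_files()
    if missing:
        print("Missing files:")
        for rel in missing:
            print("  ", rel)
    else:
        print("pretrained_models looks complete")
```

The check only tests that each file exists, so Git LFS pointer files that were cloned without `git lfs install` would still pass; comparing file sizes against the HuggingFace repo is a stricter option.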

Guidance motion data produced via SMPL & Rendering is required for inference.

You can download our pre-rendered samples from our HuggingFace repo and place them in the ${PROJECT_ROOT}/example_data directory:

git lfs install

git clone https://huggingface.co/datasets/fudan-generative-ai/champ_motions_example example_data

Or you can follow the SMPL & Rendering doc to produce your own motion data.

Finally, ${PROJECT_ROOT}/example_data should look like this:

./example_data/
|-- motions/                # Directory includes motions per subfolder
|   |-- motion-01/          # A motion sample
|   |   |-- depth/          # Depth frame sequence
|   |   |-- dwpose/         # Dwpose frame sequence
|   |   |-- mask/           # Mask frame sequence
|   |   |-- normal/         # Normal map frame sequence
|   |   `-- semantic_map/   # Semantic map frame sequence
|   |-- motion-02/
|   |   |-- ...
|   |   `-- ...
|   `-- motion-N/
|       |-- ...
|       `-- ...
`-- ref_images/             # Reference image samples (optional)
    |-- ref-01.png
    |-- ...
    `-- ref-N.png
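When preparing your own motions, a mismatch in frame counts between guidance types is an easy mistake to make. The sketch below is a hypothetical helper, not part of the repo: it walks `example_data/motions/` and counts the files in each guidance sub-folder named in the tree above, assuming one image file per frame.

```python
from pathlib import Path

# Guidance sub-folders shown for motion-01 in the tree above.
GUIDANCE_DIRS = ("depth", "dwpose", "mask", "normal", "semantic_map")


def summarize_motions(root="example_data"):
    """Return {motion_name: {guidance_dir: file_count or None}} for root/motions.

    None means the guidance sub-folder is missing. Assumes one file per frame.
    """
    motions_dir = Path(root) / "motions"
    if not motions_dir.is_dir():
        return {}
    summary = {}
    for motion in sorted(p for p in motions_dir.iterdir() if p.is_dir()):
        counts = {}
        for name in GUIDANCE_DIRS:
            sub = motion / name
            counts[name] = (
                sum(1 for f in sub.iterdir() if f.is_file()) if sub.is_dir() else None
            )
        summary[motion.name] = counts
    return summary


def is_consistent(counts):
    """True if every guidance folder exists and all hold the same number of frames."""
    values = list(counts.values())
    return None not in values and len(set(values)) == 1


if __name__ == "__main__":
    for motion, counts in summarize_motions().items():
        status = "ok" if is_consistent(counts) else "CHECK"
        print(f"{motion}: {counts} [{status}]")
```

Whether inference.py actually rejects mismatched counts is not stated here; the helper only flags them for a manual look.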

Now all models and motions are prepared in ${PROJECT_ROOT}/pretrained_models and ${PROJECT_ROOT}/example_data, respectively.

Here is the command for inference:

python inference.py --config configs/inference/inference.yaml

If using poetry, the command is:

poetry run python inference.py --config configs/inference/inference.yaml

Animation results will be saved in the ${PROJECT_ROOT}/results folder. You can change the reference image or the guidance motion by modifying inference.yaml.

The default motion-02 in inference.yaml has about 250 frames and requires ~20 GB of VRAM.

Note: If your VRAM is insufficient, you can switch to a shorter motion sequence or cut out a segment from a long sequence. We provide a frame range selector in inference.yaml, which you can set to a list of [min_frame_index, max_frame_index] to conveniently cut out a segment from the sequence.
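To pick a segment that fits your VRAM budget, the sketch below computes a `[min_frame_index, max_frame_index]` pair covering at most a given number of frames. Both helper names are hypothetical, and the README does not say whether `max_frame_index` is inclusive; the code assumes zero-based, inclusive indices, so check how inference.py interprets the range before relying on it.

```python
def pick_frame_range(total_frames, max_frames, start=0):
    """Return [min_frame_index, max_frame_index] spanning at most max_frames frames.

    Assumes zero-based, inclusive indices (an assumption, not confirmed by the README).
    """
    if total_frames <= 0 or max_frames <= 0:
        raise ValueError("total_frames and max_frames must be positive")
    if not 0 <= start < total_frames:
        raise ValueError("start must be a valid frame index")
    end = min(start + max_frames, total_frames) - 1  # clamp to the last frame
    return [start, end]


def select_frames(frames, frame_range):
    """Slice a sorted list of frames with an inclusive [min, max] range."""
    lo, hi = frame_range
    return frames[lo:hi + 1]


if __name__ == "__main__":
    # motion-02 has about 250 frames; keep a 100-frame segment.
    print(pick_frame_range(250, 100))             # [0, 99]
    print(pick_frame_range(250, 100, start=200))  # [200, 249]
```

The resulting list would then replace the frame range selector in inference.yaml.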

| Type | HuggingFace | ETA |
|---|---|---|
| Inference | SMPL motion samples | Thu Apr 18 2024 |
| Training | Sample datasets for training | Coming Soon🚀🚀 |

| Status | Milestone | ETA |
|---|---|---|
| ✅ | Inference source code meets everyone on GitHub for the first time | Sun Mar 24 2024 |
| ✅ | Model and test data on HuggingFace | Tue Mar 26 2024 |
| ✅ | Optimize dependencies and run well on Windows | Sun Mar 31 2024 |
| ✅ | Data preprocessing code release | Fri Apr 12 2024 |
| ✅ | Training code release | Thu May 02 2024 |
| ✅ | Sample of training data release on HuggingFace | Sun May 05 2024 |
| ✅ | Smoothing SMPL motion | Sun Apr 28 2024 |
| 🚀🚀🚀 | Gradio demo on HuggingFace | TBD |

If you find our work useful for your research, please consider citing the paper:

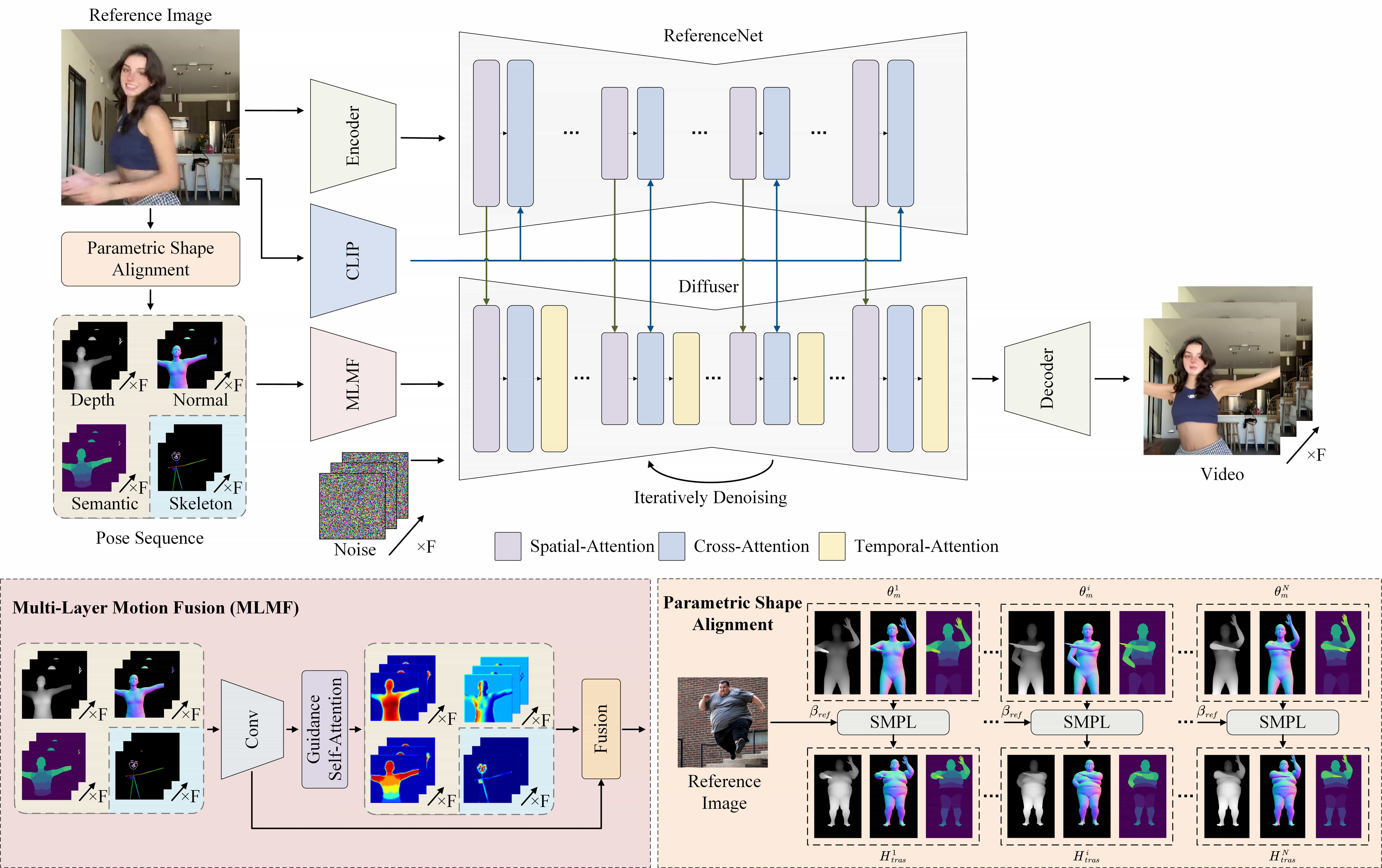

@misc{zhu2024champ,
  title={Champ: Controllable and Consistent Human Image Animation with 3D Parametric Guidance},
  author={Shenhao Zhu and Junming Leo Chen and Zuozhuo Dai and Yinghui Xu and Xun Cao and Yao Yao and Hao Zhu and Siyu Zhu},
  year={2024},
  eprint={2403.14781},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

Multiple research positions are open at the Generative Vision Lab, Fudan University! These include:

- Research assistant

- Postdoctoral researcher

- PhD candidate

- Master's student

Interested individuals are encouraged to contact us at [email protected] for further information.