By Jiawei Fan, Chao Li, Xiaolong Liu, Meina Song and Anbang Yao.

This repository is the official PyTorch implementation of Af-DCD (Augmentation-Free Dense Contrastive Knowledge Distillation for Efficient Semantic Segmentation), published at NeurIPS 2023.

Af-DCD is a new contrastive distillation learning paradigm for training compact and accurate deep neural networks for semantic segmentation. It leverages a masked feature mimicking strategy and formulates a novel contrastive learning loss that exploits carefully designed feature partitions across both the channel and spatial dimensions. This allows it to effectively transfer the dense and structured local knowledge learnt by the teacher model to a target student model while maintaining training efficiency.
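To make the idea of contrasting within spatial partitions more concrete, here is a minimal NumPy sketch of an InfoNCE-style loss over non-overlapping spatial patches. It is an illustration under stated assumptions, not the loss implemented in this repository. The function name `spatial_contrast_loss`, the `patch` and `tau` parameters, and the choice of positives (the teacher feature at the same location) and negatives (the other teacher features in the same patch) are all assumptions made for this example. Masked feature mimicking and channel partitioning are left out, and the student features are assumed to already have the teacher's shape.

```python
import numpy as np


def spatial_contrast_loss(student, teacher, patch=2, tau=0.1):
    """Illustrative InfoNCE loss over non-overlapping spatial patches.

    student, teacher: arrays of shape (C, H, W) with identical shapes.
    Assumption: inside each patch x patch window, the teacher feature at
    the same location is the positive and the other teacher features in
    that window are the negatives.
    """
    c, h, w = student.shape
    assert teacher.shape == student.shape
    assert h % patch == 0 and w % patch == 0

    def to_patches(x):
        # (C, H, W) -> (num_patches, patch * patch, C)
        x = x.reshape(c, h // patch, patch, w // patch, patch)
        x = x.transpose(1, 3, 2, 4, 0)
        x = x.reshape(-1, patch * patch, c)
        # L2-normalise each feature vector so the dot product is a cosine.
        return x / (np.linalg.norm(x, axis=-1, keepdims=True) + 1e-12)

    s = to_patches(student)
    t = to_patches(teacher)

    # Pairwise similarities inside every patch: (num_patches, N, N).
    logits = s @ t.transpose(0, 2, 1) / tau
    # Numerically stable log-softmax over the teacher candidates.
    logits = logits - logits.max(axis=-1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=-1, keepdims=True))

    # Positives sit on the diagonal (same location in student and teacher).
    idx = np.arange(patch * patch)
    return float(-log_prob[:, idx, idx].mean())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    teacher_feat = rng.standard_normal((16, 8, 8))
    student_feat = rng.standard_normal((16, 8, 8))
    print("matched  :", spatial_contrast_loss(teacher_feat, teacher_feat, patch=4))
    print("unrelated:", spatial_contrast_loss(student_feat, teacher_feat, patch=4))
```

The loss is small when the student features match the teacher's and larger when they are unrelated. No augmented views are needed, because positives and negatives come from the partition itself. A training version would express the same operations with PyTorch tensors so that gradients reach the student.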

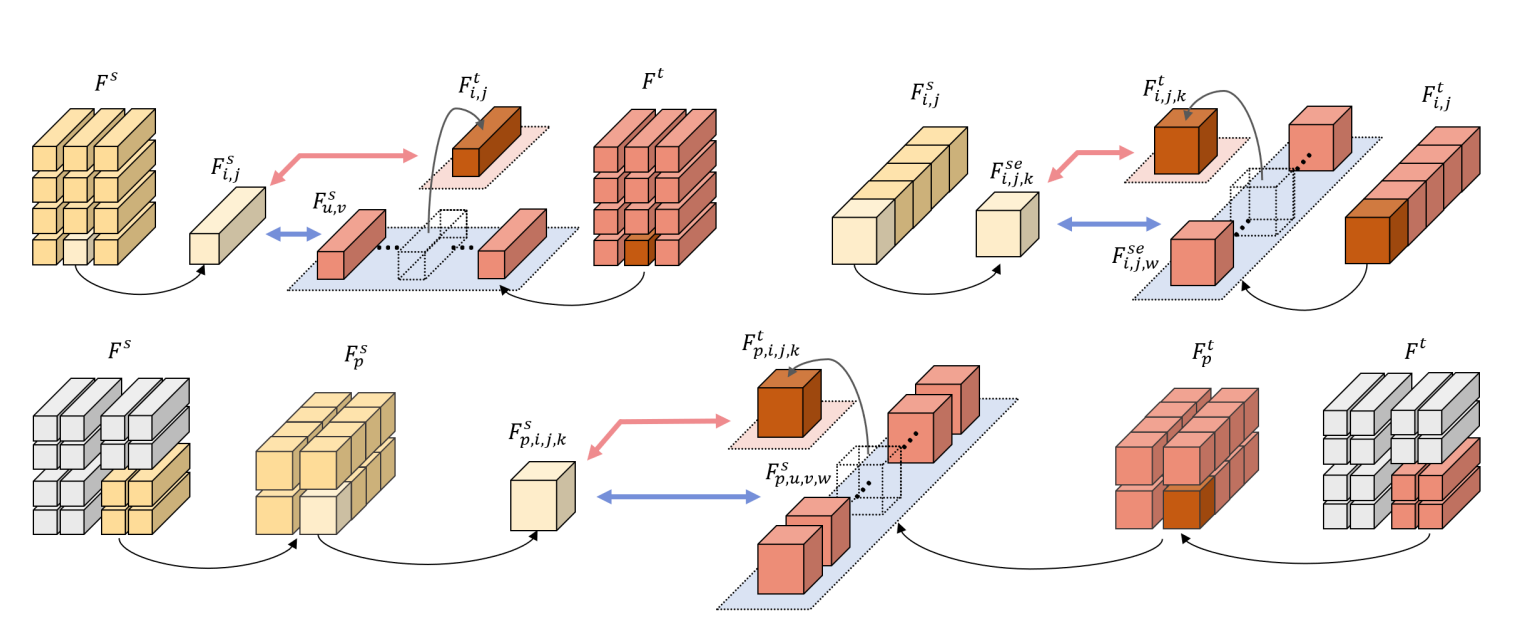

Detailed illustrations of the three types of Af-DCD: Spatial Contrasting (top left), Channel Contrasting (top right) and Omni-Contrasting (bottom). For brevity, the contrasting process is illustrated using a single contrastive sample in the student feature maps.

Ubuntu 18.04 LTS

Python 3.8 (Anaconda is recommended)

CUDA 11.1

PyTorch 1.10.1

NCCL for CUDA 11.1
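Once the packages are installed, a short script can confirm that the environment matches the versions pinned by the pip commands in this README. The sketch below uses only the standard library. The helper name `check_environment` and the choice to ignore local version suffixes such as `+cu111` are assumptions for this example, and it does not check CUDA or NCCL.

```python
import sys
from importlib import metadata

# Versions pinned by the pip commands in this README.
PINNED = {
    "torch": "1.10.1",
    "torchvision": "0.11.2",
    "torchaudio": "0.10.1",
    "timm": "0.3.2",
    "mmcv-full": "1.2.7",
    "opencv-python": "4.5.1.48",
}


def check_environment(pinned=PINNED):
    """Return {package: (installed_version_or_None, matches_pin)}."""
    report = {}
    for name, wanted in pinned.items():
        try:
            found = metadata.version(name)
        except metadata.PackageNotFoundError:
            found = None
        # Local build suffixes such as "+cu111" are ignored when comparing.
        ok = found is not None and found.split("+")[0] == wanted
        report[name] = (found, ok)
    return report


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    for name, (found, ok) in check_environment().items():
        status = "ok" if ok else "MISMATCH"
        print(f"{name:15s} installed={found} pinned={PINNED[name]} [{status}]")
```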

Install Python packages:

pip install torch==1.10.1+cu111 torchvision==0.11.2+cu111 torchaudio==0.10.1 -f https://download.pytorch.org/whl/cu111/torch_stable.html

pip install timm==0.3.2

pip install mmcv-full==1.2.7

pip install opencv-python==4.5.1.48

- Download the target checkpoints: resnet101-imagenet.pth, resnet18-imagenet.pth and mobilenetv2-imagenet.pth

- Then, move these checkpoints into the folder pretrained_ckpt/

- Download the target datasets:
  - Cityscapes: available for download from this website
  - ADE20K: available for download from this Google Drive
  - COCO-Stuff-164K: available for download from this website
  - Pascal VOC: available for download from this Baidu Drive
  - CamVid: available for download from this Baidu Drive

- Then, move the data into the folder data/
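Before launching training, it can help to check that the files from the steps above are where they are expected. The following is a minimal sketch: the checkpoint file names and the `pretrained_ckpt/` and `data/` folders come from this README, while the helper name `missing_assets` is hypothetical. The layout inside `data/` is not specified here, so the sketch only checks that the folder exists and is not empty.

```python
from pathlib import Path

# Checkpoint file names listed in this README.
CHECKPOINTS = (
    "resnet101-imagenet.pth",
    "resnet18-imagenet.pth",
    "mobilenetv2-imagenet.pth",
)


def missing_assets(root="."):
    """Return a list describing expected files or folders that are absent."""
    root = Path(root)
    missing = []
    for name in CHECKPOINTS:
        if not (root / "pretrained_ckpt" / name).is_file():
            missing.append(str(Path("pretrained_ckpt") / name))
    data_dir = root / "data"
    # Only existence and non-emptiness are checked; the dataset layout
    # inside data/ is not assumed.
    if not data_dir.is_dir() or not any(data_dir.iterdir()):
        missing.append("data/ (absent or empty)")
    return missing


if __name__ == "__main__":
    problems = missing_assets()
    if problems:
        print("Missing:")
        for item in problems:
            print("  -", item)
    else:
        print("All checkpoints found and data/ is populated.")
```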

Note: The models released here show slightly different (mostly better) accuracies compared to the original models reported in our paper.

| Teacher | Student | Distillation Method | Performance (mIoU, %) |
|---|---|---|---|
| DeepLabV3-ResNet101 (78.07) | DeepLabV3-MobileNetV2 (73.12) | SKD | 73.82 |
| DeepLabV3-ResNet101 (78.07) | DeepLabV3-MobileNetV2 (73.12) | IFVD | 73.50 |
| DeepLabV3-ResNet101 (78.07) | DeepLabV3-MobileNetV2 (73.12) | CWD | 74.66 |
| DeepLabV3-ResNet101 (78.07) | DeepLabV3-MobileNetV2 (73.12) | CIRKD | 75.42 |
| DeepLabV3-ResNet101 (78.07) | DeepLabV3-MobileNetV2 (73.12) | MasKD | 75.26 |
| DeepLabV3-ResNet101 (78.07) | DeepLabV3-MobileNetV2 (73.12) | Af-DCD | 76.43 |
| DeepLabV3-ResNet101 (78.07) | DeepLabV3-ResNet18 (74.21) | SKD | 75.42 |
| DeepLabV3-ResNet101 (78.07) | DeepLabV3-ResNet18 (74.21) | IFVD | 75.59 |
| DeepLabV3-ResNet101 (78.07) | DeepLabV3-ResNet18 (74.21) | CWD | 75.55 |
| DeepLabV3-ResNet101 (78.07) | DeepLabV3-ResNet18 (74.21) | CIRKD | 76.38 |
| DeepLabV3-ResNet101 (78.07) | DeepLabV3-ResNet18 (74.21) | MasKD | 77.00 |
| DeepLabV3-ResNet101 (78.07) | DeepLabV3-ResNet18 (74.21) | Af-DCD | 77.03 |

| Teacher | Student | Distillation Method | Performance (mIoU, %) |
|---|---|---|---|
| DeepLabV3-ResNet101 (42.70) | DeepLabV3-ResNet18 (33.91) | KD | 34.88 |
| DeepLabV3-ResNet101 (42.70) | DeepLabV3-ResNet18 (33.91) | CIRKD | 35.41 |
| DeepLabV3-ResNet101 (42.70) | DeepLabV3-ResNet18 (33.91) | Af-DCD | 36.21 |

| Teacher | Student | Distillation Method | Performance (mIoU, %) |
|---|---|---|---|
| DeepLabV3-ResNet101 (38.71) | DeepLabV3-ResNet18 (32.60) | KD | 32.88 |
| DeepLabV3-ResNet101 (38.71) | DeepLabV3-ResNet18 (32.60) | CIRKD | 33.11 |
| DeepLabV3-ResNet101 (38.71) | DeepLabV3-ResNet18 (32.60) | Af-DCD | 34.02 |

| Teacher | Student | Distillation Method | Performance (mIoU, %) |
|---|---|---|---|
| DeepLabV3-ResNet101 (77.67) | DeepLabV3-ResNet18 (73.21) | SKD | 73.51 |
| DeepLabV3-ResNet101 (77.67) | DeepLabV3-ResNet18 (73.21) | IFVD | 73.85 |
| DeepLabV3-ResNet101 (77.67) | DeepLabV3-ResNet18 (73.21) | CWD | 74.02 |
| DeepLabV3-ResNet101 (77.67) | DeepLabV3-ResNet18 (73.21) | CIRKD | 74.50 |
| DeepLabV3-ResNet101 (77.67) | DeepLabV3-ResNet18 (73.21) | Af-DCD | 76.25 |
| DeepLabV3-ResNet101 (77.67) | PSPNet-ResNet18 (73.33) | SKD | 74.07 |
| DeepLabV3-ResNet101 (77.67) | PSPNet-ResNet18 (73.33) | IFVD | 73.54 |
| DeepLabV3-ResNet101 (77.67) | PSPNet-ResNet18 (73.33) | CWD | 73.99 |
| DeepLabV3-ResNet101 (77.67) | PSPNet-ResNet18 (73.33) | CIRKD | 74.78 |
| DeepLabV3-ResNet101 (77.67) | PSPNet-ResNet18 (73.33) | Af-DCD | 76.14 |

| Teacher | Student | Distillation Method | Performance (mIoU, %) |
|---|---|---|---|
| DeepLabV3-ResNet101 (69.84) | DeepLabV3-ResNet18 (66.92) | SKD | 67.46 |
| DeepLabV3-ResNet101 (69.84) | DeepLabV3-ResNet18 (66.92) | IFVD | 67.28 |
| DeepLabV3-ResNet101 (69.84) | DeepLabV3-ResNet18 (66.92) | CWD | 67.71 |
| DeepLabV3-ResNet101 (69.84) | DeepLabV3-ResNet18 (66.92) | CIRKD | 68.21 |
| DeepLabV3-ResNet101 (69.84) | DeepLabV3-ResNet18 (66.92) | Af-DCD | 69.27 |
| DeepLabV3-ResNet101 (69.84) | PSPNet-ResNet18 (66.73) | SKD | 67.83 |
| DeepLabV3-ResNet101 (69.84) | PSPNet-ResNet18 (66.73) | IFVD | 67.61 |
| DeepLabV3-ResNet101 (69.84) | PSPNet-ResNet18 (66.73) | CWD | 67.92 |
| DeepLabV3-ResNet101 (69.84) | PSPNet-ResNet18 (66.73) | CIRKD | 68.65 |
| DeepLabV3-ResNet101 (69.84) | PSPNet-ResNet18 (66.73) | Af-DCD | 69.48 |
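The tables above report mean Intersection over Union (mIoU) in percent. For readers unfamiliar with the metric, the sketch below shows one common way to compute it from predicted and ground-truth label maps via a confusion matrix. It is not the evaluation code of this repository. The function name `mean_iou`, the `ignore_index=255` default, and averaging only over classes that appear in the prediction or the ground truth are assumptions for this example.

```python
import numpy as np


def mean_iou(pred, target, num_classes, ignore_index=255):
    """Mean IoU in percent over classes present in pred or target.

    pred, target: integer label arrays of the same shape, with class ids
    in [0, num_classes). Pixels whose target equals ignore_index are skipped.
    """
    pred = np.asarray(pred).ravel()
    target = np.asarray(target).ravel()
    keep = target != ignore_index
    pred, target = pred[keep], target[keep]

    # confusion[i, j] = number of pixels with ground truth i predicted as j.
    confusion = np.bincount(
        target * num_classes + pred, minlength=num_classes ** 2
    ).reshape(num_classes, num_classes)

    intersection = np.diag(confusion)
    union = confusion.sum(axis=0) + confusion.sum(axis=1) - intersection
    valid = union > 0  # skip classes absent from both pred and target
    return 100.0 * float((intersection[valid] / union[valid]).mean())


if __name__ == "__main__":
    prediction = [0, 0, 1, 1]
    ground_truth = [0, 1, 1, 255]  # last pixel is ignored
    print(mean_iou(prediction, ground_truth, num_classes=2))
```

In the usage example, each class has one correctly labelled pixel out of a union of two, so both per-class IoUs are 0.5 and the result is 50.0.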

Train DeepLabV3-Res101 -> DeepLabV3-MBV2 teacher-student pairs:

bash train_scripts/deeplabv3_r101_mbv2_r18_v2_ocmgd.sh

If you find our work useful in your research, please consider citing:

@inproceedings{fan2023augmentation,
  title={Augmentation-free Dense Contrastive Distillation for Efficient Semantic Segmentation},
  author={Fan, Jiawei and Li, Chao and Liu, Xiaolong and Song, Meina and Yao, Anbang},
  booktitle={Thirty-seventh Conference on Neural Information Processing Systems},
  year={2023}
}

Af-DCD is released under the Apache license. We encourage use for both research and commercial purposes, as long as proper attribution is given.

This repository is built on the CIRKD repository. We thank the authors for releasing their code.