V2XFusion is a multi-sensor fusion 3D object detection solution for roadside scenarios. By exploiting the relatively concentrated distribution of object heights in roadside scenes, together with the additional information provided by multi-sensor fusion, V2XFusion achieves good performance on the DAIR-V2X-I dataset. This repository contains training, quantization, and ONNX export for V2XFusion. The figure below shows the camera and LiDAR installation locations and the characteristics of roadside scenarios.

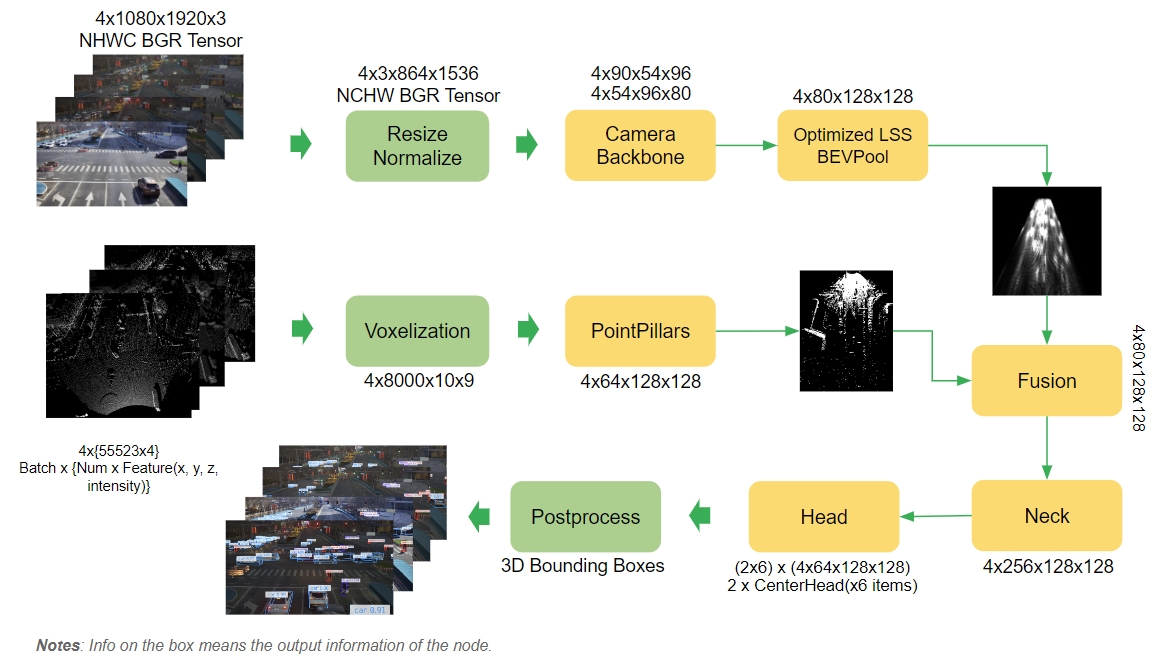

The architecture diagram uses a batch size of 4 as an example. Each element of the batch consists of one camera image and its corresponding LiDAR point cloud.

Clone the specified version of BEVFusion and copy our patch package into it:

git clone https://github.com/mit-han-lab/bevfusion

cd bevfusion

git checkout db75150717a9462cb60241e36ba28d65f6908607

cd ..

git clone https://github.com/ADLab-AutoDrive/BEVHeight.git

cd BEVHeight

cp -r evaluators ../bevfusion/

cp -r scripts ../bevfusion/

cd ..

cp -r Lidar_AI_Solution/CUDA-V2XFusion/* bevfusion/

Convert the dataset format from DAIR-V2X-I to KITTI. Please refer to the dataset conversion document provided by BEVHeight.
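Because the patch targets one specific BEVFusion commit, it can help to confirm the checkout before relying on it. The helper below is a hypothetical sketch, not part of the repository; it assumes `git` is on `PATH` and that the clone lives in a `bevfusion/` directory.

```python
import subprocess

# Commit pinned in the setup instructions above.
PINNED_COMMIT = "db75150717a9462cb60241e36ba28d65f6908607"


def head_commit(repo_dir: str) -> str:
    """Return the full SHA of HEAD in repo_dir (requires git on PATH)."""
    result = subprocess.run(
        ["git", "-C", repo_dir, "rev-parse", "HEAD"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip()


def matches_pin(head_sha: str, pinned: str = PINNED_COMMIT) -> bool:
    """True if head_sha is exactly the pinned commit (case-insensitive)."""
    return head_sha.strip().lower() == pinned.lower()
```

Usage, from the directory that contains the clone: `matches_pin(head_commit("bevfusion"))` should return `True` after the `git checkout` step.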

Set `dataset_root` and `dataset_kitti_root` in `configs/V2X-I/default.yaml` to the paths where DAIR-V2X-I and DAIR-V2X-I-KITTI are located.
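If you prefer to set these paths from a script (for example in CI), the sketch below rewrites the two keys in the YAML text. It is a hypothetical helper, not part of the repository, and it assumes both keys appear as unindented `key: value` lines; the example paths are placeholders.

```python
import re


def set_dataset_roots(yaml_text: str, dataset_root: str, dataset_kitti_root: str) -> str:
    """Return yaml_text with the two dataset path keys replaced.

    Assumes each key is an unindented `key: value` line (hypothetical layout).
    """
    updates = {"dataset_root": dataset_root, "dataset_kitti_root": dataset_kitti_root}
    for key, value in updates.items():
        # Anchor at line start so `dataset_root` never matches `dataset_kitti_root`.
        pattern = re.compile(rf"^{re.escape(key)}:.*$", re.MULTILINE)
        if pattern.search(yaml_text) is None:
            raise KeyError(f"{key} not found")
        line = f"{key}: {value}"
        # A function replacement keeps backslashes in paths from being read as escapes.
        yaml_text = pattern.sub(lambda _m, line=line: line, yaml_text, count=1)
    return yaml_text
```

Read `configs/V2X-I/default.yaml`, pass its contents through `set_dataset_roots`, and write the result back.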

We provide two training modes: sparsity training (2:4 structured sparsity) and dense training. 2:4 structured sparsity usually yields a significant inference speedup on GPUs that support sparsity. It is a hardware-supported acceleration technique, and it is the mode we recommend.
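2:4 structured sparsity means that in every group of four consecutive weights, at most two are non-zero; this fixed pattern is what sparsity-capable GPUs accelerate. The sketch below illustrates magnitude-based pruning to that pattern on a plain Python list. It is an illustration of the pattern only, not the pruning or fine-tuning procedure behind `--mode sparsity`, which is not described here.

```python
from typing import List, Sequence


def prune_2_4(weights: Sequence[float]) -> List[float]:
    """Magnitude-based 2:4 pruning of a 1-D weight row.

    In each group of 4 consecutive weights, keep the 2 with the largest
    absolute value and set the other 2 to zero.
    """
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned: List[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude entries (ties keep the earlier index).
        keep = set(sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2])
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


def is_2_4_sparse(weights: Sequence[float]) -> bool:
    """True if every group of 4 has at most 2 non-zero entries."""
    return all(
        sum(1 for w in weights[s:s + 4] if w != 0) <= 2
        for s in range(0, len(weights), 4)
    )
```

For example, `prune_2_4([0.1, -0.9, 0.4, 0.05, 1.0, 2.0, -3.0, 0.5])` keeps `-0.9, 0.4` in the first group and `2.0, -3.0` in the second, zeroing the rest.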

For sparsity training and PTQ:

- Training

$ torchpack dist-run -np 8 python scripts/train.py configs/V2X-I/det/centerhead/lssfpn/camera+pointpillar/resnet34/default.yaml --mode sparsity

- PTQ

$ python scripts/ptq_v2xfusion.py configs/V2X-I/det/centerhead/lssfpn/camera+pointpillar/resnet34/default.yaml sparsity_epoch_100.pth --mode sparsity

For dense training and PTQ:

- Training

$ torchpack dist-run -np 8 python scripts/train.py configs/V2X-I/det/centerhead/lssfpn/camera+pointpillar/resnet34/default.yaml --mode dense

- PTQ

$ python scripts/ptq_v2xfusion.py configs/V2X-I/det/centerhead/lssfpn/camera+pointpillar/resnet34/default.yaml dense_epoch_100.pth --mode dense
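Post-training quantization (PTQ) derives INT8 scales from values observed on calibration data. As an illustration only, the sketch below shows max calibration, one common symmetric per-tensor method; the calibrator actually used by `scripts/ptq_v2xfusion.py` is not specified here and may differ. The class and function names are hypothetical.

```python
from typing import Iterable, Sequence


class MaxCalibrator:
    """Max calibration: track the largest |x| seen across calibration batches."""

    def __init__(self) -> None:
        self.amax = 0.0

    def collect(self, batch: Sequence[float]) -> None:
        # Update the running absolute maximum with this batch (skip empty batches).
        if batch:
            self.amax = max(self.amax, max(abs(x) for x in batch))

    def scale(self, num_bits: int = 8) -> float:
        # Symmetric quantization maps [-amax, amax] onto [-qmax, qmax].
        qmax = 2 ** (num_bits - 1) - 1  # 127 for INT8
        if self.amax == 0.0:
            raise ValueError("no non-zero calibration data collected")
        return self.amax / qmax


def calibrate(batches: Iterable[Sequence[float]], num_bits: int = 8) -> float:
    """Run max calibration over all batches and return the quantization scale."""
    calib = MaxCalibrator()
    for batch in batches:
        calib.collect(batch)
    return calib.scale(num_bits)
```

With batches `[[0.5, -1.27], [1.0, 0.1]]`, the observed `amax` is 1.27, so the INT8 scale is 1.27 / 127 = 0.01.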

There are two export configurations: FP16 (--precision fp16) and INT8 (--precision int8). Compared with INT8, the FP16 configuration gives lower TensorRT performance but the best prediction accuracy; the exported ONNX file contains no QDQ nodes. Compared with FP16, the INT8 configuration gives the best TensorRT performance at somewhat lower prediction accuracy; the quantization parameters are stored as QDQ (QuantizeLinear/DequantizeLinear) nodes in the exported ONNX file.
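A QDQ pair quantizes a tensor to INT8 and immediately dequantizes it, so the graph carries the scale while the values stay floating point. The sketch below mirrors the ONNX QuantizeLinear/DequantizeLinear formulas for the symmetric case (zero point 0, INT8 saturation to [-128, 127]); it is illustrative and not code from this repository.

```python
from typing import List, Sequence

INT8_MIN, INT8_MAX = -128, 127


def quantize_linear(x: Sequence[float], scale: float) -> List[int]:
    """QuantizeLinear with zero_point = 0: round(x / scale), saturated to INT8."""
    # Python's round() rounds half to even, as QuantizeLinear does.
    return [max(INT8_MIN, min(INT8_MAX, round(v / scale))) for v in x]


def dequantize_linear(q: Sequence[int], scale: float) -> List[float]:
    """DequantizeLinear with zero_point = 0: q * scale."""
    return [v * scale for v in q]


def fake_quant(x: Sequence[float], scale: float) -> List[float]:
    """Q followed by DQ: the effect a QDQ node pair has on a tensor."""
    return dequantize_linear(quantize_linear(x, scale), scale)
```

With `scale = 0.02`, the input `[0.031, -1.0, 3.0]` quantizes to `[2, -50, 127]` and comes back as roughly `[0.04, -1.0, 2.54]`: small values pick up rounding error and the out-of-range value is clipped.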

- FP16

$ python scripts/export_v2xfusion.py configs/V2X-I/det/centerhead/lssfpn/camera+pointpillar/resnet34/default.yaml ptq.pth --precision fp16

- INT8

$ python scripts/export_v2xfusion.py configs/V2X-I/det/centerhead/lssfpn/camera+pointpillar/resnet34/default.yaml ptq.pth --precision int8
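One way to check which precision an exported file was built with is to count its QDQ nodes: the INT8 export should contain them and the FP16 export should not. A sketch, assuming the `onnx` Python package is installed; the ONNX filename in the usage line is hypothetical because the export script's output path is not given here. Only top-level graph nodes are inspected, not subgraphs.

```python
from collections import Counter
from typing import Iterable, List

QDQ_OPS = ("QuantizeLinear", "DequantizeLinear")


def count_qdq(op_types: Iterable[str]) -> int:
    """Count QuantizeLinear and DequantizeLinear entries in a list of op types."""
    counts = Counter(op_types)
    return sum(counts[op] for op in QDQ_OPS)


def op_types_from_onnx(path: str) -> List[str]:
    """Op types of the top-level graph nodes of an ONNX model."""
    import onnx  # requires the `onnx` package

    model = onnx.load(path)
    return [node.op_type for node in model.graph.node]
```

Usage: `count_qdq(op_types_from_onnx("v2xfusion_int8.onnx"))` (hypothetical filename) should be greater than zero for the INT8 export and zero for the FP16 export.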

For inference, please refer to the V2XFusion inference sample provided by DeepStream. The configuration parameters can be adjusted during training to adapt to different datasets, especially the dbound parameter. Before inference, confirm that the parameters in the DeepStream precomputation script match the training setup:

image_size = [864, 1536]

downsample_factor = 16

C = 80

xbound = [0, 102.4, 0.8]

ybound = [-51.2, 51.2, 0.8]

zbound = [-5, 3, 8]

dbound = [-2, 0, 90]
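The sketch below derives the tensor and grid sizes implied by these values and lists any keys that differ between a training setup and a DeepStream precomputation setup. It assumes BEVFusion-style semantics for `xbound`/`ybound`/`zbound` (`[min, max, step]`) and BEVHeight-style semantics for `dbound` (`[min, max, number of bins]`); verify both against the actual code before relying on it. The helper names are hypothetical.

```python
import math

# Training-side values, copied from the list above.
TRAIN_PARAMS = {
    "image_size": [864, 1536],
    "downsample_factor": 16,
    "C": 80,
    "xbound": [0, 102.4, 0.8],
    "ybound": [-51.2, 51.2, 0.8],
    "zbound": [-5, 3, 8],
    "dbound": [-2, 0, 90],
}


def cells(bound):
    """Number of grid cells for a [min, max, step] bound (assumed semantics)."""
    lo, hi, step = bound
    return round((hi - lo) / step)


def derived(p):
    """Sizes implied by a parameter set."""
    h, w = p["image_size"]
    f = p["downsample_factor"]
    lo, hi, n_bins = p["dbound"]  # assumed: [min, max, number of height bins]
    return {
        "feature_map_hw": (h // f, w // f),
        "bev_grid_xyz": (cells(p["xbound"]), cells(p["ybound"]), cells(p["zbound"])),
        "num_height_bins": n_bins,
        "height_bin_width": (hi - lo) / n_bins,
    }


def mismatches(train, deploy):
    """Keys whose values differ (floats compared with math.isclose)."""
    def same(a, b):
        if isinstance(a, (list, tuple)) and isinstance(b, (list, tuple)):
            return len(a) == len(b) and all(same(x, y) for x, y in zip(a, b))
        if isinstance(a, (int, float)) and isinstance(b, (int, float)):
            return math.isclose(a, b)
        return a == b

    keys = sorted(set(train) | set(deploy))
    return [k for k in keys if k not in train or k not in deploy or not same(train[k], deploy[k])]
```

Under these assumptions the values above give a 54 x 96 image feature map, a 128 x 128 x 1 BEV grid, and 90 height bins. Comparing against a setup that uses the pre-trained model's `dbound=[-1.5, 3.0, 180]` would report `dbound` as the only mismatch.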

DAIR-V2X-I Dataset

| Method | Sparsity/Dense | FP16/INT8 | Car Easy | Car Mod. | Car Hard | Pedestrian Easy | Pedestrian Mod. | Pedestrian Hard | Cyclist Easy | Cyclist Mod. | Cyclist Hard | Model pth |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| V2XFusion | Sparsity | FP16 | 82.08 | 69.70 | 69.76 | 51.51 | 49.15 | 49.54 | 61.21 | 58.07 | 58.65 | model |
| V2XFusion | Sparsity | INT8-PTQ | 82.06 | 69.70 | 69.75 | 51.13 | 48.86 | 49.22 | 60.95 | 57.81 | 58.43 | ptq.pth |
| V2XFusion | Dense | FP16 | 82.30 | 69.84 | 69.90 | 49.47 | 47.31 | 47.60 | 59.09 | 58.29 | 58.70 | model |
| V2XFusion | Dense | INT8-PTQ | 82.33 | 69.88 | 69.94 | 48.60 | 46.55 | 46.82 | 59.28 | 58.12 | 58.52 | ptq.pth |

V2XFusion backbone: ResNet34 + PointPillars
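To see how much accuracy INT8 PTQ costs relative to FP16, the snippet below re-derives the per-class differences at moderate difficulty from the table above; it adds no new measurements.

```python
# Moderate-difficulty results copied from the table above.
MODERATE = {
    # (mode, precision): (Car, Pedestrian, Cyclist)
    ("sparsity", "FP16"): (69.70, 49.15, 58.07),
    ("sparsity", "INT8-PTQ"): (69.70, 48.86, 57.81),
    ("dense", "FP16"): (69.84, 47.31, 58.29),
    ("dense", "INT8-PTQ"): (69.88, 46.55, 58.12),
}
CLASSES = ("Car", "Pedestrian", "Cyclist")


def ptq_delta(mode: str) -> dict:
    """INT8-PTQ minus FP16 per class, rounded to 2 decimals."""
    fp16 = MODERATE[(mode, "FP16")]
    int8 = MODERATE[(mode, "INT8-PTQ")]
    return {c: round(i - f, 2) for c, f, i in zip(CLASSES, fp16, int8)}
```

For these numbers the largest moderate-difficulty drop is 0.76 points (Pedestrian, dense), while Car is essentially unchanged in both modes.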

Note:

To make the model more robust, the sequential dataset V2X-Seq-SPD can also be added to the training phase. We provide a pre-trained model (dbound=[-1.5, 3.0, 180]) that is used in the DeepStream demo for reference. Please refer to the script dataset_merge for merging the data.

| FP16 Dense (ms) | FP16 Sparsity (ms) | INT8 Dense (ms) | INT8 Sparsity (ms) |
| --- | --- | --- | --- |
| 49.3 | 42.2 | 31.6 | 25.3 |

- Device: NVIDIA Jetson AGX Orin Developer Kit (MAXN power mode)

- Version: JetPack 6.0 DP

- Modality: Camera + LiDAR

- Image Resolution: 864 x 1536

- Batch size: 4
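The Orin latencies above translate into speedups relative to FP16 dense; the snippet below only re-derives them from the reported numbers.

```python
# Latencies (ms) copied from the table above.
LATENCY_MS = {
    ("FP16", "dense"): 49.3,
    ("FP16", "sparsity"): 42.2,
    ("INT8", "dense"): 31.6,
    ("INT8", "sparsity"): 25.3,
}


def speedups(baseline=("FP16", "dense")) -> dict:
    """Speedup of each configuration relative to the baseline, rounded to 2 decimals."""
    base = LATENCY_MS[baseline]
    return {cfg: round(base / ms, 2) for cfg, ms in LATENCY_MS.items()}
```

This gives about 1.17x for FP16 sparsity, 1.56x for INT8 dense, and 1.95x for INT8 sparsity over FP16 dense.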

- DAIR-V2X Dataset: https://thudair.baai.ac.cn/coop-forecast

- BEVFusion-R: https://github.com/mit-han-lab/bevfusion

- BEVHeight: https://github.com/ADLab-AutoDrive/BEVHeight