- aws cli

- sdk

- shell script

apt install aws-shell

- online trainings

- online trainings, current education

- youtube videos

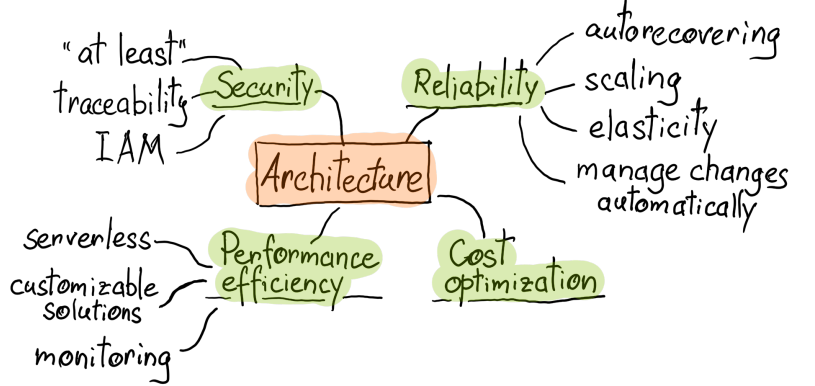

- Architecture

- certification preparation

- hands-on

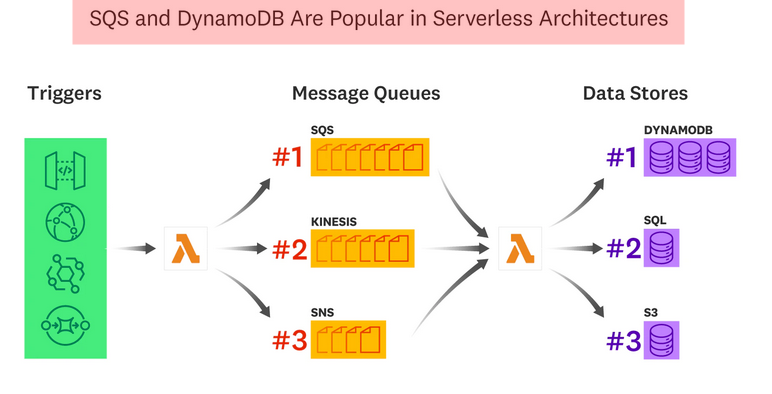

- serverless

- workshops

- podcasts

- labs

- samples

pip3 install awscli

#complete -C `locate aws_completer` aws

complete -C /usr/local/bin/aws_completer aws

AWS_SNS_TOPIC_ARN=arn:aws:sns:eu-central-1:85153298123:gmail-your-name

AWS_KEY_PAIR=/path/to/file/key-pair.pem

AWS_PROFILE=aws-user

AWS_REGION=eu-central-1

. /home/projects/current-project/aws.sh

# installation

apt install awscli

# set up user

aws configure

vim ~/.aws/credentials

[cherkavi-user]

aws_access_key_id = AKI...

aws_secret_access_key = ur1DxNvEn...

using profiles

--region, --output, --profile
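Most commands in these notes repeat `--profile $AWS_PROFILE --region $AWS_REGION`. A minimal sketch of a wrapper that adds both options, assuming the two variables are already set as shown earlier; the function name `awsp` is hypothetical and not part of the AWS CLI.

```bash
# awsp: hypothetical helper, not part of the AWS CLI.
# Prepends the profile and region options to any aws command.
awsp() {
  # ${VAR:?message} stops with an error when the variable is unset or empty
  aws --profile "${AWS_PROFILE:?AWS_PROFILE is not set}" \
      --region "${AWS_REGION:?AWS_REGION is not set}" \
      "$@"
}
```

Usage would look like `awsp s3 ls` or `awsp sns list-topics`.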

aws s3 ls --profile $AWS_PROFILE

vim ~/.aws/credentials

aws configure list

aws configure get region --profile $AWS_PROFILE

aws configure get aws_access_key_id

aws configure get default.aws_access_key_id

aws configure get $AWS_PROFILE.aws_access_key_id

aws configure get $AWS_PROFILE.aws_secret_access_key

current_browser="google-chrome"

current_doc_topic="sns"

alias cli-doc='$current_browser "https://docs.aws.amazon.com/cli/latest/reference/${current_doc_topic}/index.html" &'

alias faq='$current_browser "https://aws.amazon.com/${current_doc_topic}/faqs/" &'
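The aliases in this block read `current_doc_topic` at the moment they are invoked, so the variable has to be reassigned before every call. A small sketch of the same idea as functions that take the topic as an argument; the function names are hypothetical, and the URL patterns are copied from the aliases.

```bash
# Hypothetical helpers (names are not from the original notes).
# Each prints a URL for the service passed as the first argument and
# falls back to $current_doc_topic when no argument is given.
cli_doc_url() {
  printf 'https://docs.aws.amazon.com/cli/latest/reference/%s/index.html\n' "${1:-$current_doc_topic}"
}

faq_url() {
  printf 'https://aws.amazon.com/%s/faqs/\n' "${1:-$current_doc_topic}"
}
```

A call such as `$current_browser "$(cli_doc_url iam)" &` would then open the page without touching the variable.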

alias console='$current_browser "https://console.aws.amazon.com/${current_doc_topic}/home?region=$AWS_REGION" &'

User is not authorized to perform AccessDeniedException

aws iam list-groups 2>&1 | /home/projects/bash-example/awk-policy-json.sh

# or just copy it

echo "when calling the ListFunctions operation: Use..." | /home/projects/bash-example/awk-policy-json.sh

current_doc_topic="iam"

cli-doc

faq

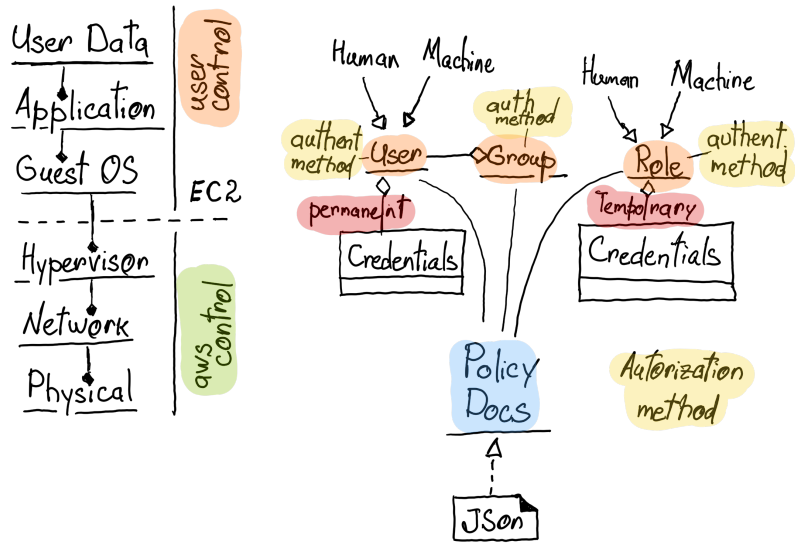

console

aws iam list-users

# example of adding user to group

aws iam add-user-to-group --group-name s3-full-access --user-name user-s3-bucket

current_doc_topic="vpc"

cli-doc

faq

console

example of creating a subnetwork:

VPC: 172.31.0.0

Subnetwork: 172.31.0.0/16, 172.31.0.0/26, 172.31.0.64/26
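The /26 subnets listed above each cover 64 addresses, which is why the second one starts at 172.31.0.64. A minimal sketch of that arithmetic; both function names are hypothetical, and the second helper only handles prefixes from 24 to 32 so the whole block fits in the last octet.

```bash
# subnet_size PREFIX -> number of addresses in a block with that prefix length
subnet_size() {
  echo $(( 1 << (32 - $1) ))
}

# nth_subnet BASE3 PREFIX INDEX -> CIDR of the INDEX-th (0-based) subnet.
# BASE3 is the first three octets, for example 172.31.0
nth_subnet() {
  size=$(subnet_size "$2")
  echo "$1.$(( $3 * $size ))/$2"
}
```

For example, `nth_subnet 172.31.0 26 1` prints `172.31.0.64/26`. AWS reserves five addresses in every subnet, so a /26 leaves 59 usable ones.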

current_doc_topic='s3'

cli-doc

faq

console

# make bucket - create bucket with globally unique name

AWS_BUCKET_NAME="my-bucket-name"

aws s3 mb s3://$AWS_BUCKET_NAME

aws s3 mb s3://$AWS_BUCKET_NAME --region us-east-1

# list of all s3

aws s3 ls

aws s3api list-buckets

aws s3api list-buckets --query "Buckets[].Name"
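With `--output text` the `--query` result above is printed as tab-separated names on one line, which is awkward to loop over. A small sketch that turns it into one name per line; the function name `bucket_names` is hypothetical.

```bash
# bucket_names: hypothetical helper, prints one bucket name per line
bucket_names() {
  # --output text prints the selected names tab-separated on a single line
  aws s3api list-buckets --query "Buckets[].Name" --output text | tr '\t' '\n'
}
```

It could be combined with other commands, for example `bucket_names | while read -r name; do aws s3 ls "s3://$name"; done`.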

# get bucket location

aws s3api get-bucket-location --bucket $AWS_BUCKET_NAME
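For buckets in us-east-1 the command above returns a null `LocationConstraint` (printed as `None` with `--output text`), and the legacy value `EU` stands for eu-west-1. A sketch that maps both cases to a region name; the function names are hypothetical.

```bash
# normalize_bucket_location VALUE -> region name usable with --region
normalize_bucket_location() {
  case "$1" in
    None|null|"") echo "us-east-1" ;;   # null means us-east-1
    EU)           echo "eu-west-1" ;;   # legacy constraint value
    *)            echo "$1" ;;
  esac
}

# bucket_region BUCKET -> region of the bucket
bucket_region() {
  normalize_bucket_location "$(aws s3api get-bucket-location \
    --bucket "$1" --query LocationConstraint --output text)"
}
```

`bucket_region "$AWS_BUCKET_NAME"` would then print a value that can be passed to `--region`.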

# copy to s3, upload file smaller than 5 TB

aws s3 cp /path/to/file-name.with_extension s3://$AWS_BUCKET_NAME

aws s3 cp /path/to/file-name.with_extension s3://$AWS_BUCKET_NAME/path/on/s3/filename.ext

# update metadata

aws s3 cp test.txt s3://a-bucket/test.txt --metadata '{"x-amz-meta-cms-id":"34533452"}'

# read metadata

aws s3api head-object --bucket a-bucketbucket --key img/dir/legal-global/zach-walsh.jpeg

# copy from s3 to s3

aws s3 cp s3://$AWS_BUCKET_NAME/index.html s3://$AWS_BUCKET_NAME/index2.html

# download file

aws s3api get-object --bucket $AWS_BUCKET_NAME --key path/on/s3 /local/path

# create folder, s3 mkdir

aws s3api put-object --bucket my-bucket-name --key foldername/

# sync folder with remote s3 folder

aws s3 sync /path/to/some/folder s3://my-bucket-name/some/folder

# sync folder with remote s3 folder with public access

aws s3 sync /path/to/some/folder s3://my-bucket-name/some/folder --acl public-read

# list of all objects

aws s3 ls --recursive s3://my-bucket-name

# list of all objects under the specified path (the trailing / is required)

aws s3 ls --recursive s3://my-bucket-name/my-sub-path/

# check that the object exists and read its metadata (does not download the content)

aws s3api head-object --bucket my-bucket-name --key file-name.with_extension

# move file

aws s3 mv s3://$AWS_BUCKET_NAME/index.html s3://$AWS_BUCKET_NAME/index2.html

# remove file

aws s3 rm s3://my-bucket-name/file-name.with_extension --profile marketing-staging --region us-east-1

# remove all objects (filters apply in order and later ones win, so --include "*" also re-includes account.json)

aws s3 rm s3://$AWS_BUCKET_NAME --recursive --exclude "account.json" --include "*"

# make an already uploaded file public

aws s3api put-object-acl --bucket <bucket name> --key <path to file> --acl public-read

# read file

aws s3api get-object --bucket <bucket-name> --key=<path on s3> <local output file>

policy

- Bucket Policy, public read ( Block all public access - Off )

{

"Version": "2012-10-17",

"Id": "policy-bucket-001",

"Statement": [

{

"Sid": "statement-bucket-001",

"Effect": "Allow",

"Principal": "*",

"Action": "s3:GetObject",

"Resource": "arn:aws:s3:::YOUR_BUCKET_NAME/*"

}

]

}

- Access Control List - individual objects level

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": [

"s3:GetObjectAcl",

"s3:PutObjectAcl"

],

"Resource": "arn:aws:s3:::*/*"

}

]

}

!!! important: during creation, set the following parameter: Additional configuration->Database options->Initial Database ->

current_doc_topic="athena"

cli-doc

faq

console

### simple data

s3://my-bucket-001/temp/

```csv

column-1,column-2,column3

1,one,first

2,two,second

3,three,third

4,four,fourth

5,five,fifth

CREATE DATABASE IF NOT EXISTS cherkavi_database_001 COMMENT 'csv example' LOCATION 's3://my-bucket-001/temp/';

CREATE EXTERNAL TABLE IF NOT EXISTS num_sequence (id int,column_name string,column_value string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY ','

ESCAPED BY '\\'

LINES TERMINATED BY '\n'

LOCATION 's3://my-bucket-001/temp/';

--- another way to create table

CREATE EXTERNAL TABLE num_sequence2 (id int,column_name string,column_value string)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'

WITH SERDEPROPERTIES ("separatorChar" = ",", "escapeChar" = "\\")

LOCATION 's3://my-bucket-001/temp/';

select * from num_sequence;

current_doc_topic="cloudfront"

cli-doc

faq

console

Region <>---------- AvailabilityZone <>--------- EdgeLocation

current_doc_topic="secretsmanager"

cli-doc

faq

console

# CLI example

aws secretsmanager get-secret-value --secret-id LinkedIn_project_Web_LLC --region $AWS_REGION --profile cherkavi-user

readonly policy

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": "secretsmanager:GetSecretValue",

"Resource": "arn:aws:secretsmanager:*:*:secret:*"

}

]

}

current_doc_topic="ec2"

cli-doc

faq

console

# list ec2, ec2 list, instances list

aws ec2 describe-instances --profile $AWS_PROFILE --region $AWS_REGION --filters Name=tag-key,Values=test

# example

aws ec2 describe-instances --region us-east-1 --filters "Name=tag:Name,Values=ApplicationInstance"

# !!! without --filters you will not get the full list of EC2 instances !!!

connect to launched instance

INSTANCE_PUBLIC_DNS="ec2-52-29-176.eu-central-1.compute.amazonaws.com"

ssh -i $AWS_KEY_PAIR ubuntu@$INSTANCE_PUBLIC_DNS

reading information about current instance, local ip address, my ip address, connection to current instance, instance reflection, instance metadata, instance description

curl -X GET http://169.254.169.254/latest/meta-data/

curl -X GET http://169.254.169.254/latest/meta-data/iam/security-credentials/

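# IMDSv2 session token: requested with PUT and a TTL header, not GET

curl -X PUT http://169.254.169.254/latest/api/token -H "X-aws-ec2-metadata-token-ttl-seconds: 21600"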

# public ip

curl -X GET http://169.254.169.254/latest/meta-data/public-ipv4

connect to launched instance without ssh

# ssm role should be provided for account

aws ssm start-session --target i-00ac7eee --profile awsstudent --region us-east-1

current_doc_topic="ebs"

cli-doc

faq

- a snapshot can be created from an EBS volume

- a snapshot can be copied to another region

- a volume can be created from a snapshot and attached to EC2

EBS --> Snapshot --> copy to region --> Snapshot --> EBS --> attach to EC2

attach new volume

# list volumes

sudo lsblk

sudo fdisk -l

# describe volume from previous command - /dev/xvdf

sudo file -s /dev/xvdf

# !!! only for new, empty volumes: formatting erases existing data !!!

# sudo mkfs -t xfs /dev/xvdf

# or: sudo mke2fs /dev/xvdf

# mount volume

sudo mkdir /external-drive

sudo mount /dev/xvdf /external-drive

current_doc_topic="elb"

cli-doc

faq

# documentation

current_doc_topic="elb"; cli-doc

current_doc_topic="lambda"

cli-doc

faq

console

google-chrome "https://$AWS_REGION.console.aws.amazon.com/apigateway/main/apis?region=$AWS_REGION"

# API -> Stages

entry point for created Lambdas

google-chrome "https://"$AWS_REGION".console.aws.amazon.com/lambda/home?region="$AWS_REGION"#/functions"

LAMBDA_NAME="function_name"

# example of lambda execution

aws lambda invoke \

--profile $AWS_PROFILE --region $AWS_REGION \

--function-name $LAMBDA_NAME \

output.log

# example of lambda execution with payload

aws lambda invoke \

--profile $AWS_PROFILE --region $AWS_REGION \

--function-name $LAMBDA_NAME \

--payload '{"key1": "value-1"}' \

output.log

# example of asynchronous lambda execution with payload

# !!! with SNS downstream execution !!!

aws lambda invoke \

--profile $AWS_PROFILE --region $AWS_REGION \

--function-name $LAMBDA_NAME \

--invocation-type Event \

--payload '{"key1": "value-1"}' \

output.log

IAM->Policies->Create policy

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": "lambda:InvokeFunction",

"Resource": "arn:aws:lambda:*:*:function:*"

},

{

"Sid": "VisualEditor1",

"Effect": "Allow",

"Action": [

"logs:CreateLogStream",

"dynamodb:PutItem",

"dynamodb:GetItem",

"logs:PutLogEvents"

],

"Resource": [

"arn:aws:dynamodb:*:*:table/*",

"arn:aws:logs:eu-central-1:8557202:log-group:/aws/lambda/function-name-1:*"

]

}

]

}

lambda logs, check logs

### lambda all logs

google-chrome "https://"$AWS_REGION".console.aws.amazon.com/cloudwatch/home?region="$AWS_REGION"#logs:"

### lambda part of logs

google-chrome "https://"$AWS_REGION".console.aws.amazon.com/cloudwatch/home?region="$AWS_REGION"#logStream:group=/aws/lambda/"$LAMBDA_NAME";streamFilter=typeLogStreamPrefix"

- IntellijIDEA

- Apex

- Python Zappa

- AWS SAM

- Go SPARTA

- aws-serverless-java-container

- Chalice ...

- install plugin: AWS Toolkit,

- right bottom corner - select Region, select Profile

profile must have:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": ["iam:ListRoleTags", "iam:GetPolicy", "iam:ListRolePolicies"],
      "Resource": ["arn:aws:iam::*:policy/*", "arn:aws:iam::*:role/*"]
    },
    { "Sid": "VisualEditor1", "Effect": "Allow", "Action": "iam:ListRoles", "Resource": "*" },
    { "Sid": "VisualEditor2", "Effect": "Allow", "Action": "iam:PassRole", "Resource": "arn:aws:iam::*:role/*" },
    { "Effect": "Allow", "Action": "s3:*", "Resource": "*" }
  ]
}
```

- New->Project->AWS

- create new Python file from template ( my_aws_func.py )

import json

def lambda_handler(event, context):
    return {
        'statusCode': 200,
        'body': json.dumps('Hello from Lambda!')
    }

- Create Lambda Function, specify handler: my_aws_func.lambda_handler
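The handler string is the file name without `.py`, a dot, and the function name. A rough sketch of deriving it and creating the function from the CLI instead of the IDE; the helper names, the `/tmp` archive path, the `python3.12` runtime, and the role ARN argument are assumptions, not part of the original notes.

```bash
# handler_id FILE FUNCTION -> handler string in the form module.function
handler_id() {
  echo "$(basename "$1" .py).$2"
}

# deploy_lambda NAME FILE ROLE_ARN: hypothetical helper.
# Zips the single source file and creates the function from the archive.
deploy_lambda() {
  zip -j -q "/tmp/$1.zip" "$2" &&
  aws lambda create-function \
    --function-name "$1" \
    --runtime python3.12 \
    --role "$3" \
    --handler "$(handler_id "$2" lambda_handler)" \
    --zip-file "fileb:///tmp/$1.zip"
}
```

`handler_id my_aws_func.py lambda_handler` prints `my_aws_func.lambda_handler`, the value used in the step above.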

pip3 install aws-sam-cli

virtualenv env

source env/bin/activate

# update your settings https://github.com/Miserlou/Zappa#advanced-settings

zappa init

zappa deploy dev

zappa update dev

current_doc_topic="dynamodb"

cli-doc

faq

console

documentation, documentation developer guide

$current_browser "https://$AWS_REGION.console.aws.amazon.com/dynamodb/home?region=$AWS_REGION#tables:"

# create table from CLI

aws dynamodb create-table \

--table-name my_table \

--attribute-definitions \

AttributeName=column_id,AttributeType=N \

AttributeName=column_name,AttributeType=S \

--key-schema \

AttributeName=column_id,KeyType=HASH \

AttributeName=column_name,KeyType=RANGE \

--billing-mode=PAY_PER_REQUEST \

--region=$AWS_REGION

# write item, write into DynamoDB

aws dynamodb put-item \
--table-name my_table \
--item '{"column_1":{"N":"1"}, "column_2":{"S":"first record"} }' \
--region=$AWS_REGION \
--return-consumed-capacity TOTAL

# update item (update-item accepts --key and --update-expression; put-item does not)
aws dynamodb update-item \
--table-name my_table \
--key '{"column_1":{"N":"1"}, "column_2":{"S":"first record"} }' \
--update-expression "SET country_name=:new_name" \
--expression-attribute-values '{":new_name":{"S":"first"} }' \
--region=$AWS_REGION \
--return-values ALL_NEW

# select records

aws dynamodb query \
--table-name my_table \
--key-condition-expression "column_1 = :id" \
--expression-attribute-values '{":id":{"N":"1"}}' \
--region=$AWS_REGION \
--output=table

Type mismatch for key id expected: N actual: S

key id must be Numeric
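In the DynamoDB JSON format used by `--item` and `--key`, a numeric value is still written as a JSON string; the `N` tag is what marks it as a number. Sending the key with an `S` tag is what produces the mismatch above. A minimal sketch of building such an item for the `id` / `id_value` attributes; the helper names are hypothetical, and values are assumed to contain no quotes or backslashes.

```bash
# ddb_n VALUE -> DynamoDB JSON for a number (the value itself stays quoted)
ddb_n() { printf '{"N":"%s"}' "$1"; }

# ddb_s VALUE -> DynamoDB JSON for a string (no escaping is performed)
ddb_s() { printf '{"S":"%s"}' "$1"; }

# ddb_item ID VALUE -> item with a numeric id and a string id_value
ddb_item() {
  printf '{"id":%s,"id_value":%s}' "$(ddb_n "$1")" "$(ddb_s "$2")"
}
```

The result can be passed directly, for example `aws dynamodb put-item --table-name my_table --item "$(ddb_item 10003 cherkavi_value3)"`.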

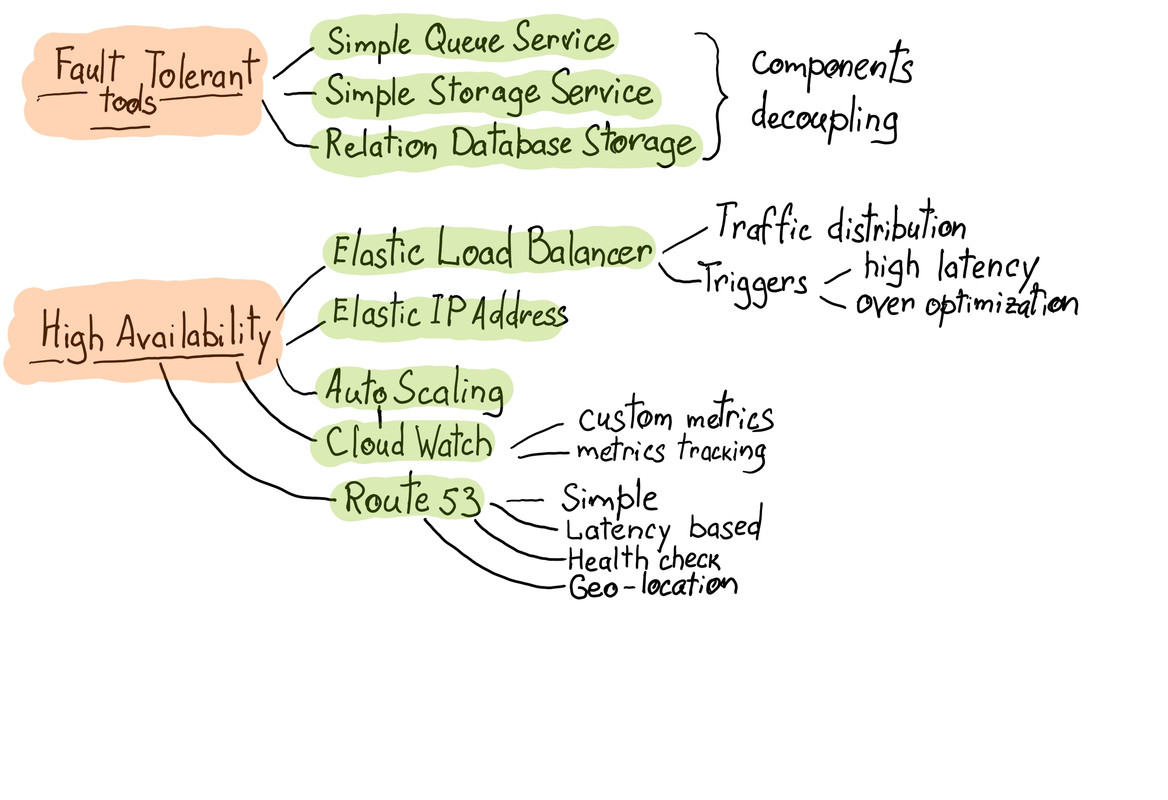

{"id": 10003, "id_value": "cherkavi_value3"}

current_doc_topic="route53"

cli-doc

faq

console

current_doc_topic="sns"

cli-doc

faq

console

### list of topics

aws sns list-topics --profile $AWS_PROFILE --region $AWS_REGION

#### open browser with sns dashboard

google-chrome "https://"$AWS_REGION".console.aws.amazon.com/sns/v3/home?region="$AWS_REGION"#/topics"

### list of subscriptions

aws sns list-subscriptions-by-topic --profile $AWS_PROFILE --region $AWS_REGION --topic-arn {topic arn from previous command}
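Instead of copying the topic ARN by hand, it can be captured with `--query` and passed on. A sketch under the assumption that the first topic in the list is the one of interest; both function names are hypothetical.

```bash
# first_topic_arn: prints the ARN of the first topic returned by list-topics
first_topic_arn() {
  aws sns list-topics --profile "$AWS_PROFILE" --region "$AWS_REGION" \
    --query 'Topics[0].TopicArn' --output text
}

# list_subscriptions TOPIC_ARN: lists subscriptions of the given topic
list_subscriptions() {
  aws sns list-subscriptions-by-topic --profile "$AWS_PROFILE" --region "$AWS_REGION" \
    --topic-arn "$1"
}
```

Chained together: `list_subscriptions "$(first_topic_arn)"`.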

### send example via cli

#--message file://message.txt

aws sns publish --profile $AWS_PROFILE --region $AWS_REGION \

--topic-arn "arn:aws:sns:us-west-2:123456789012:my-topic" \

--message "hello from aws cli"

### send message via web

google-chrome "https://"$AWS_REGION".console.aws.amazon.com/sns/v3/home?region="$AWS_REGION"#/publish/topic/topics/"$AWS_SNS_TOPIC_ARN

current_doc_topic="cloudwatch"

cli-doc

faq

console

Metrics-----\
             +--->Events------>Alarm
Logs--------/

+----------------------------------+
             dashboards

current_doc_topic="kinesis"

cli-doc

faq

console

# write record

aws kinesis put-record --stream-name my_kinesis_stream --partition-key "my_partition_key_1" --data "{'first':'1'}"

# describe stream

aws kinesis describe-stream --stream-name my_kinesis_stream

# get records

aws kinesis get-shard-iterator --stream-name my_kinesis_stream --shard-id "shardId-000000000" --shard-iterator-type TRIM_HORIZON
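The `ShardIterator` value returned by `get-shard-iterator` is what `get-records` expects as `--shard-iterator`. A sketch that chains the two calls; the function name is hypothetical, and the stream name and shard id are passed in as arguments.

```bash
# read_stream_from_start STREAM SHARD_ID: hypothetical helper.
# Requests an iterator at the oldest available record, then reads from it.
read_stream_from_start() {
  iterator=$(aws kinesis get-shard-iterator \
    --stream-name "$1" --shard-id "$2" \
    --shard-iterator-type TRIM_HORIZON \
    --query ShardIterator --output text) || return 1
  aws kinesis get-records --shard-iterator "$iterator"
}
```

The `Data` field of every returned record is base64-encoded and has to be decoded before reading.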

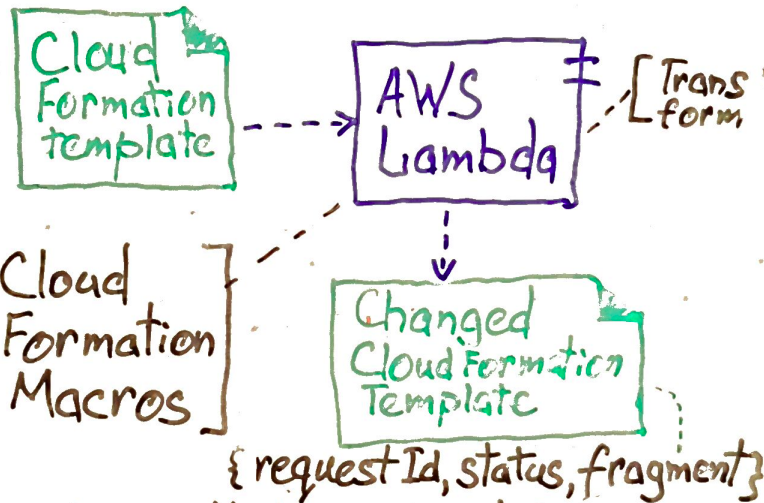

aws kinesis get-records --shard-iterator <ShardIterator value from previous command>

current_doc_topic="cloudformation"

cli-doc

faq

console

# cloudformation designer web

google-chrome "https://"$AWS_REGION".console.aws.amazon.com/cloudformation/designer/home?region="$AWS_REGION

aws cloudformation describe-stacks --region us-east-1

- CloudFormation

- CloudCraft

- VisualOps

- draw.io

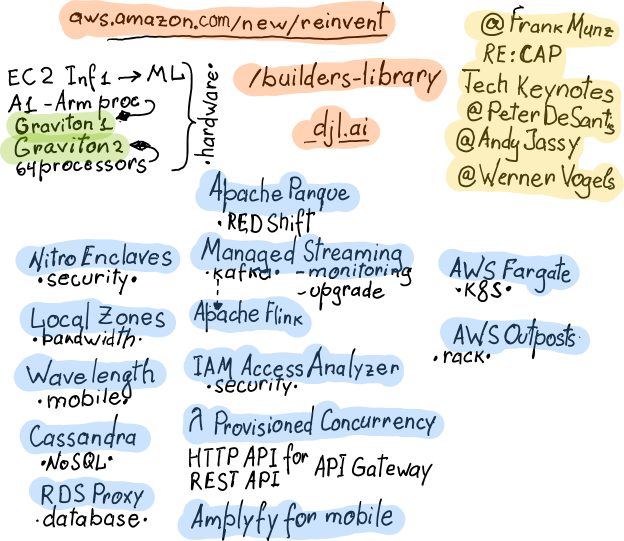

upcoming courses:

- https://aws.amazon.com/certification/certified-cloud-practitioner/

- https://aws.amazon.com/dms/

- https://aws.amazon.com/mp/

- https://aws.amazon.com/vpc/

- https://aws.amazon.com/compliance/shared-responsibility-model/

- https://aws.amazon.com/cloudfront/

- https://aws.amazon.com/iam/details/mfa/

- http://docs.aws.amazon.com/awscloudtrail/latest/userguide/cloudtrail-user-guide.html

- http://docs.aws.amazon.com/AmazonCloudWatch/latest/monitoring/AlarmThatSendsEmail.html

- https://aws.amazon.com/aup/

- https://docs.aws.amazon.com/elasticbeanstalk/latest/dg/tutorials.html