🚩Project Page | 📑Paper | 🤗Data

This is a preliminary, not yet cleaned-up version of the code for RoboMM: All-in-One Multimodal Large Model for Robotic Manipulation.

RoboMM: All-in-One Multimodal Large Model for Robotic Manipulation

Feng Yan*, Fanfan Liu*, Liming Zheng, Yufeng Zhong, Yiyang Huang, Zechao Guan, Chengjian Feng, Lin Ma†

*Equal Contribution †Corresponding Author

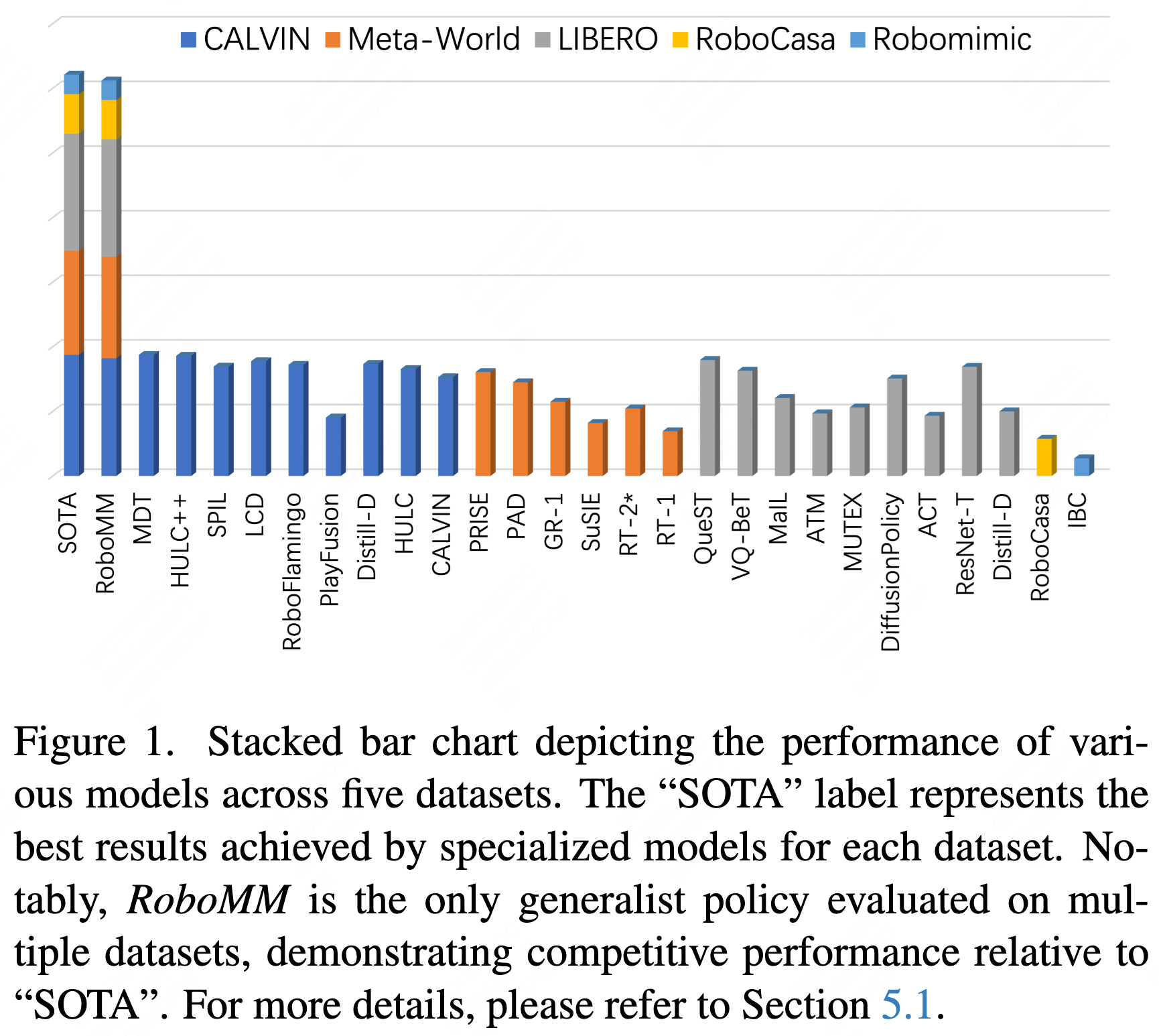

In recent years, robotics has advanced significantly through the integration of larger models and large-scale datasets. However, challenges remain in applying these models to 3D spatial interactions and in managing data collection costs. To address these issues, we propose the multimodal robotic manipulation model RoboMM, along with the comprehensive dataset RoboData. RoboMM enhances 3D perception through camera parameters and occupancy supervision. Building on OpenFlamingo, it incorporates a Modality-Isolation-Mask and multimodal decoder blocks, improving modality fusion and fine-grained perception. RoboData offers a complete evaluation system by integrating several well-known datasets, achieving the first fusion of multi-view images, camera parameters, depth maps, and actions; its spatial alignment facilitates comprehensive learning from diverse robotic datasets. Equipped with RoboData and the unified physical space, RoboMM is the first generalist policy that enables simultaneous evaluation across all tasks within multiple datasets, rather than focusing on a limited selection of data or tasks. Its design significantly enhances robotic manipulation performance, increasing the average sequence length on the CALVIN benchmark from 1.7 to 3.3 and ensuring cross-embodiment capabilities, achieving state-of-the-art results across multiple datasets. The code will be released following acceptance.
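For readers unfamiliar with the "average sequence length" figure quoted for CALVIN, the sketch below shows how that number is commonly computed in the CALVIN long-horizon protocol: each rollout attempts a chain of 5 consecutive instructions, and the metric is the mean number of instructions completed in a row. This is a minimal illustration, not the evaluation code of this repository; the function names and the sample counts are made up for the example.

```python
from typing import List, Sequence


def average_sequence_length(completed: Sequence[int], chain_length: int = 5) -> float:
    """Mean number of consecutively completed tasks per rollout.

    `completed[i]` is how many tasks of the chain rollout i finished
    before its first failure (0..chain_length).
    """
    if not completed:
        raise ValueError("need at least one rollout")
    for n in completed:
        if not 0 <= n <= chain_length:
            raise ValueError(f"count {n} outside 0..{chain_length}")
    return sum(completed) / len(completed)


def success_rate_at_k(completed: Sequence[int], chain_length: int = 5) -> List[float]:
    """Fraction of rollouts that completed at least k tasks in a row, for k = 1..chain_length."""
    total = len(completed)
    return [sum(1 for n in completed if n >= k) / total
            for k in range(1, chain_length + 1)]


# Hypothetical counts for four rollouts (illustrative only, not real results).
counts = [5, 3, 0, 2]
avg_len = average_sequence_length(counts)
rates = success_rate_at_k(counts)
print(avg_len, rates)
```

The average length equals the sum of the five per-step success rates, which is why papers usually report both together.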

- 2024.12: We release the RoboMM paper on arXiv! We release the training and inference code!

Currently, the CALVIN data has been fully uploaded, and RoboMM can now be trained using only the CALVIN dataset.

Download the data from Data and extract it. Modify the corresponding paths in the config file, then use the following commands for training and testing.

bash tools/train.sh 8 --config ${config}

bash tools/test.sh 8 ${ckpt}
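If you prefer to launch these scripts from Python (for example, from a sweep script), the commands above can be assembled as argument lists. The sketch below only builds and prints the command lines. It assumes the leading `8` is the number of processes/GPUs, which this README does not state explicitly, and the config and checkpoint paths are hypothetical placeholders.

```python
import shlex
from typing import List


def build_train_command(config_path: str, num_procs: int = 8) -> List[str]:
    """Argument list mirroring: bash tools/train.sh 8 --config ${config}"""
    # num_procs is assumed to be the GPU/process count (not confirmed by the README).
    return ["bash", "tools/train.sh", str(num_procs), "--config", config_path]


def build_test_command(ckpt_path: str, num_procs: int = 8) -> List[str]:
    """Argument list mirroring: bash tools/test.sh 8 ${ckpt}"""
    return ["bash", "tools/test.sh", str(num_procs), ckpt_path]


# Hypothetical paths, for illustration only.
train_cmd = build_train_command("path/to/your_config.yaml")
test_cmd = build_test_command("path/to/your_checkpoint.pth")

# Dry run: print the shell-quoted command lines instead of executing them.
print(shlex.join(train_cmd))
print(shlex.join(test_cmd))
```

To actually run a command, pass the list to `subprocess.run(cmd, check=True)` from the repository root.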

- RoboMM training code

- RoboMM inference code

- RoboMM evaluation code

- RoboMM training data

- RoboMM model

Original: https://github.com/mees/calvin License: MIT

Original: https://github.com/Farama-Foundation/Metaworld License: MIT

Original: https://github.com/Lifelong-Robot-Learning/LIBERO License: MIT

Original: https://github.com/robocasa/robocasa License: MIT

Original: https://github.com/ARISE-Initiative/robomimic License: MIT

Original: https://github.com/notFoundThisPerson/RoboCAS-v0 License: MIT

Original: https://github.com/stepjam/RLBench License: MIT

Original: https://github.com/robot-colosseum/robot-colosseum License: MIT

Original: https://github.com/haosulab/ManiSkill/tree/v0.5.3 License: Apache

Original: https://github.com/openai/CLIP License: MIT

Original: https://github.com/mlfoundations/open_flamingo License: MIT

Original: https://github.com/RoboFlamingo/RoboFlamingo License: MIT

Original: https://github.com/RoboUniview/RoboUniview License: MIT

@misc{yan2024robomm,
  title={RoboMM: All-in-One Multimodal Large Model for Robotic Manipulation},
  author={Feng Yan and Fanfan Liu and Liming Zheng and Yufeng Zhong and Yiyang Huang and Zechao Guan and Chengjian Feng and Lin Ma},
  year={2024},
  eprint={2412.07215},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  url={https://arxiv.org/abs/2412.07215},
}