A tiny boost library in C++11.

Coost is an elegant and efficient cross-platform C++ base library. It is not as heavy as boost, but still provides plenty of powerful features:

- Command line and config file parser (flag)

- High performance log library (log)

- Unit testing framework (unitest)

- go-style coroutine

- Coroutine-based network programming framework

- Efficient JSON library

- JSON based RPC framework

- God-oriented programming

- Atomic operation (atomic)

- Random number generator (random)

- Efficient stream (fastream)

- Efficient string (fastring)

- String utility (str)

- Time library (time)

- Thread library (thread)

- Fast memory allocator

- LruMap

- hash library

- path library

- File system operations (fs)

- System operations (os)

Coost was formerly known as cocoyaxi (co for short). For fear of exposing too much information and causing the Namake planet to suffer an attack from the Dark Forest, it was renamed Coost, which means a more lightweight C++ base library than boost.

It was said that about xx light-years from the Earth, there is a planet named Namake. Namake has three suns, a large one and two small ones. The Namakians make a living by programming. They divide themselves into nine levels according to their programming level, and the three lowest levels will be sent to other planets to develop programming technology. These wandering Namakians must collect at least 10,000 stars through a project before they can return to Namake.

Several years ago, two Namakians, ruki and alvin, were dispatched to the Earth. To go back to the Namake planet as soon as possible, ruki has developed a powerful build tool xmake, whose name is taken from Namake. At the same time, alvin has developed a tiny boost library coost, whose original name cocoyaxi is taken from the Cocoyaxi village where ruki and alvin lived on Namake.

Coost needs your help. If you are using it or like it, you may consider becoming a sponsor. Thank you very much!

Special Sponsors

Coost is specially sponsored by the following companies, thank you very much!

#include "co/god.h"

void f() {

god::bless_no_bugs();

}

co/flag is a command line and config file parser. It is similar to gflags, but more powerful:

- Support input parameters from command line and config file.

- Support automatic generation of the config file.

- Support flag aliases.

- Flags of integer type accept a value with a unit suffix: k, m, g, t, p.
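The unit suffixes can be illustrated with a small standalone parser. This is a minimal sketch and not coost's implementation: `parse_int_with_unit` and `parse_or` are hypothetical helpers, and the sketch assumes each unit is a power of 1024 (k = 1024, m = 1024 * 1024, and so on), which is consistent with the `4k` = 4096 example in this README.

```cpp
#include <cassert>
#include <cerrno>
#include <cstdint>
#include <cstdlib>
#include <string>

// Hypothetical helper (not part of coost): converts text such as "4k" or
// "2m" into an integer. Assumption: each unit is a power of 1024.
// Returns false if the text is empty, malformed, or would overflow int64_t.
bool parse_int_with_unit(const std::string& text, int64_t* out) {
    assert(out != nullptr);
    if (text.empty()) return false;

    int shift = 0;  // log2 of the multiplier selected by the suffix
    switch (text.back()) {
        case 'k': shift = 10; break;
        case 'm': shift = 20; break;
        case 'g': shift = 30; break;
        case 't': shift = 40; break;
        case 'p': shift = 50; break;
        default: break;  // no suffix: plain integer
    }

    std::string digits = text;
    if (shift != 0) digits.pop_back();
    if (digits.empty()) return false;

    errno = 0;
    char* end = nullptr;
    const long long n = std::strtoll(digits.c_str(), &end, 10);
    if (errno != 0 || *end != '\0') return false;  // overflow or trailing junk

    // Reject values that would overflow once the unit is applied.
    const int64_t limit = INT64_MAX >> shift;
    if (n > limit || n < -limit) return false;

    *out = static_cast<int64_t>(n) * (static_cast<int64_t>(1) << shift);
    return true;
}

// Convenience wrapper for the sketch: returns `fallback` on parse errors.
int64_t parse_or(const std::string& text, int64_t fallback) {
    int64_t v = 0;
    return parse_int_with_unit(text, &v) ? v : fallback;
}
```

With these assumptions, `parse_or("4k", -1)` yields 4096, while malformed input such as `"4x"` falls back to the supplied default.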

#include "co/flag.h"

#include "co/cout.h"

DEF_bool(x, false, "bool x");

DEF_int32(i, 0, "...");

DEF_string(s, "hello world", "string");

int main(int argc, char** argv) {

flag::init(argc, argv);

COUT << "x: " << FLG_x;

COUT << "i: " << FLG_i;

COUT << FLG_s << "|" << FLG_s.size();

return 0;

}

In the above example, the macros starting with DEF_ define 3 flags. Each flag corresponds to a global variable whose name is FLG_ plus the flag name. After building, the above code can be run as follows:

./xx # Run with default configs

./xx -x -s good # x = true, s = "good"

./xx -i 4k -s "I'm ok" # i = 4096, s = "I'm ok"

./xx -mkconf # Automatically generate a config file: xx.conf

./xx xx.conf # run with a config file

./xx -conf xx.conf # Same as above

co/log is a high-performance and memory-friendly log library, which needs almost no memory allocation.

co/log supports two types of logs. The first is the level log, which divides logs into 5 levels: debug, info, warning, error and fatal. Printing a fatal log terminates the program. The second is the topic log (TLOG): logs are classified by topic, and logs of different topics are written to different files.

DLOG << "hello " << 23; // debug

LOG << "hello " << 23; // info

WLOG << "hello " << 23; // warning

ELOG << "hello " << 23; // error

FLOG << "hello " << 23; // fatal

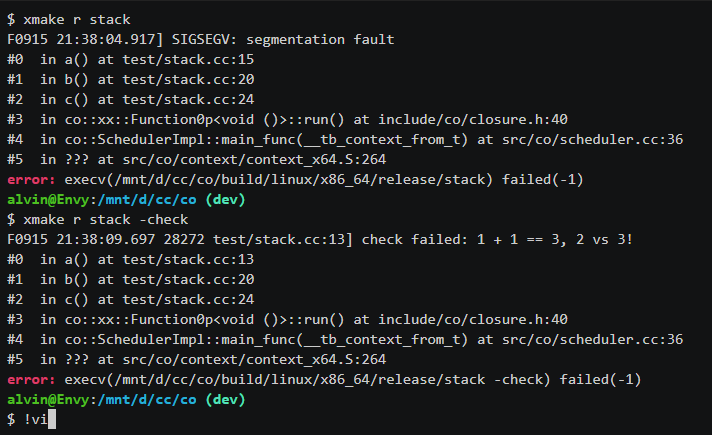

TLOG("xx") << "s" << 23; // topic log

co/log also provides a series of CHECK macros, which are an enhanced version of assert, and they will not be cleared in debug mode.

void* p = malloc(32);

CHECK(p != NULL) << "malloc failed..";

CHECK_NE(p, NULL) << "malloc failed..";

When a CHECK assertion fails, co/log prints the function call stack and then terminates the program. On Linux and macOS, make sure libbacktrace is installed on your system.
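The way a CHECK-style macro can accept a streamed message and then terminate the program can be sketched in standard C++. This is an illustration only and not how co/log is implemented: `SKETCH_CHECK`, `CheckFailure` and `checked_divide` are hypothetical names, and the sketch prints to `std::cerr` without any call stack.

```cpp
#include <cassert>   // assert(), mentioned for comparison only
#include <cstdlib>
#include <iostream>
#include <sstream>

// Hypothetical sketch (not coost code): collects the failure message and
// aborts when the temporary object is destroyed at the end of the statement.
class CheckFailure {
  public:
    CheckFailure(const char* expr, const char* file, int line) {
        msg_ << file << ':' << line << " check failed: " << expr << ' ';
    }

    // Runs after every `<<` operand has been appended to the message.
    ~CheckFailure() {
        std::cerr << msg_.str() << std::endl;
        std::abort();
    }

    std::ostream& stream() { return msg_; }

  private:
    std::ostringstream msg_;
};

// The failure object is only created when the condition is false, so a
// passing check costs one branch. The macro does not depend on NDEBUG.
#define SKETCH_CHECK(cond) \
    if (cond) {} else CheckFailure(#cond, __FILE__, __LINE__).stream()

int checked_divide(int a, int b) {
    // assert(b != 0) would vanish when NDEBUG is defined; this check does not.
    SKETCH_CHECK(b != 0) << "division by zero, a = " << a;
    return a / b;
}
```

The `if (cond) {} else ...` form is what lets the message be streamed after the macro, since the stream expression sits in the `else` branch of a single statement.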

co/log is very fast. The following are some test results, for reference only:

- co/log vs glog (single thread)

| platform | google glog | co/log |
| --- | --- | --- |
| win2012 HDD | 1.6MB/s | 180MB/s |
| win10 SSD | 3.7MB/s | 560MB/s |
| mac SSD | 17MB/s | 450MB/s |
| linux SSD | 54MB/s | 1023MB/s |

- co/log vs spdlog (Linux)

| threads | total logs | co/log time(seconds) | spdlog time(seconds) |
| --- | --- | --- | --- |
| 1 | 1000000 | 0.096445 | 2.006087 |
| 2 | 1000000 | 0.142160 | 3.276006 |
| 4 | 1000000 | 0.181407 | 4.339714 |
| 8 | 1000000 | 0.303968 | 4.700860 |
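To read the co/log vs spdlog timings above as throughput and relative speed, the arithmetic can be sketched as follows. The numbers are copied from the table; the names `Timing`, `logs_per_second`, `speedup` and `print_summary` are made up for this illustration, and the results are only as meaningful as the original measurements (for reference only).

```cpp
#include <cassert>
#include <cstdio>

// One row of the co/log vs spdlog table (times in seconds).
struct Timing {
    int threads;
    double colog_seconds;
    double spdlog_seconds;
};

// Rows copied from the table; every run wrote 1,000,000 logs in total.
static const Timing kTimings[] = {
    {1, 0.096445, 2.006087},
    {2, 0.142160, 3.276006},
    {4, 0.181407, 4.339714},
    {8, 0.303968, 4.700860},
};
static const double kTotalLogs = 1000000.0;

// Throughput in logs per second for a run that took `seconds`.
double logs_per_second(double seconds) {
    assert(seconds > 0.0);
    return kTotalLogs / seconds;
}

// How many times longer spdlog took than co/log in the same row.
double speedup(const Timing& t) {
    return t.spdlog_seconds / t.colog_seconds;
}

// Prints one summary line per table row.
void print_summary() {
    for (const Timing& t : kTimings) {
        std::printf("%d thread(s): co/log %.0f logs/s, spdlog %.0f logs/s, ratio %.1f\n",
                    t.threads,
                    logs_per_second(t.colog_seconds),
                    logs_per_second(t.spdlog_seconds),
                    speedup(t));
    }
}
```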

co/unitest is a simple and easy-to-use unit test framework. Many components in co use it to write unit test code, which guarantees the stability of co.

#include "co/unitest.h"

#include "co/os.h"

namespace test {

DEF_test(os) {

DEF_case(homedir) {

EXPECT_NE(os::homedir(), "");

}

DEF_case(cpunum) {

EXPECT_GT(os::cpunum(), 0);

}

}

} // namespace test

The above is a simple example. The DEF_test macro defines a test unit, which is actually a function (a method in a class). The DEF_case macro defines test cases, and each test case is actually a code block. The main function is as simple as below:

#include "co/unitest.h"

int main(int argc, char** argv) {

flag::init(argc, argv);

unitest::run_all_tests();

return 0;

}

unitest contains the unit test code in coost. Users can run unitest with the following commands:

xmake r unitest # Run all test cases

xmake r unitest -os # Run test cases in the os unit

co/json is a fast JSON library, and it is quite easy to use.

// {"a":23,"b":false,"s":"xx","v":[1,2,3],"o":{"xx":0}}

Json x = {

{ "a", 23 },

{ "b", false },

{ "s", "xx" },

{ "v", {1,2,3} },

{ "o", {

{"xx", 0}

}},

};

// equal to x

Json y = Json()

.add_member("a", 23)

.add_member("b", false)

.add_member("s", "xx")

.add_member("v", Json().push_back(1).push_back(2).push_back(3))

.add_member("o", Json().add_member("xx", 0));

x.get("a").as_int(); // 23

x.get("s").as_string(); // "xx"

x.get("v", 0).as_int(); // 1

x.get("v", 2).as_int(); // 3

x.get("o", "xx").as_int(); // 0

- co/json vs rapidjson (Linux)

| | parse | stringify | parse(minimal) | stringify(minimal) |
| --- | --- | --- | --- | --- |
| rapidjson | 1270 us | 2106 us | 1127 us | 1358 us |
| co/json | 1005 us | 920 us | 788 us | 470 us |

co has implemented a go-style coroutine, which has the following features:

- Support multi-thread scheduling. The default number of threads is the number of system CPU cores.

- Shared stack: coroutines in the same thread share several stacks (the default size is 1MB), so memory usage is low. A simple test on Linux shows that 10 million coroutines take only 2.8G of memory (for reference only).

- Coroutines have a flat relationship: new coroutines can be created from anywhere (including in coroutines).

- Support system API hook (Windows/Linux/Mac), so you can directly use third-party network libraries in coroutines.

- Support coroutine lock co::Mutex and coroutine synchronization event co::Event.

- Support channel and waitgroup as in golang: co::Chan, co::WaitGroup.

- Support coroutine pool co::Pool (no lock, no atomic operation).
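One simple way to spread new tasks over several scheduling threads, as the multi-thread scheduling feature above implies, is round-robin assignment. The sketch below is a single-threaded model in standard C++ and not coost's scheduler: `SchedulerSketch`, `SchedulerPoolSketch` and `queue_sizes_after` are hypothetical names, tasks are plain `std::function` objects rather than coroutines, and the round-robin policy itself is an assumption made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical model of one scheduler: just a queue of pending tasks.
struct SchedulerSketch {
    std::vector<std::function<void()>> tasks;
    void go(std::function<void()> f) { tasks.push_back(std::move(f)); }
};

// Hypothetical pool that hands out schedulers in round-robin order.
// Not thread-safe: this is a single-threaded model of the idea.
class SchedulerPoolSketch {
  public:
    explicit SchedulerPoolSketch(std::size_t n)
        : scheds_(n == 0 ? 1 : n), next_(0) {}

    // Returns the schedulers one after another, wrapping around at the end.
    SchedulerSketch* next_scheduler() {
        assert(!scheds_.empty());
        SchedulerSketch* s = &scheds_[next_ % scheds_.size()];
        ++next_;
        return s;
    }

    // Queues a task on whichever scheduler is next in line.
    void go(std::function<void()> f) { next_scheduler()->go(std::move(f)); }

    const std::vector<SchedulerSketch>& schedulers() const { return scheds_; }

  private:
    std::vector<SchedulerSketch> scheds_;
    std::size_t next_;
};

// Queues `n_tasks` empty tasks and reports how many landed on each scheduler.
std::vector<std::size_t> queue_sizes_after(std::size_t n_scheds, std::size_t n_tasks) {
    SchedulerPoolSketch pool(n_scheds);
    for (std::size_t i = 0; i < n_tasks; ++i) pool.go([] {});
    std::vector<std::size_t> sizes;
    for (const auto& s : pool.schedulers()) sizes.push_back(s.tasks.size());
    return sizes;
}
```

With 3 schedulers and 7 tasks, the queues in this model end up holding 3, 2 and 2 tasks, so no queue differs from another by more than one.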

#include "co/co.h"

int main(int argc, char** argv) {

flag::init(argc, argv);

go(ku); // void ku();

go(f, 7); // void f(int);

go(&T::g, &o); // void T::g(); T o;

go(&T::h, &o, 7); // void T::h(int); T o;

go([](){

LOG << "hello go";

});

co::sleep(32); // sleep 32 ms

return 0;

}

In the above code, the coroutines created by go() will be evenly distributed to different scheduling threads. Users can also control the scheduling of coroutines by themselves:

// run f1 and f2 in the same scheduler

auto s = co::next_scheduler();

s->go(f1);

s->go(f2);

// run f in all schedulers

for (auto& s : co::schedulers()) {

s->go(f);

}

co provides a set of coroutine-based socket APIs. Most of them are consistent in form with the native socket APIs, so you can easily write high-performance network programs in a synchronous manner.

In addition, co has also implemented higher-level network programming components, including TCP, HTTP and a JSON-based RPC framework. They are IPv6-compatible and support SSL, and they are more convenient to use than the socket APIs.

- RPC server

int main(int argc, char** argv) {

flag::init(argc, argv);

rpc::Server()

.add_service(new xx::HelloWorldImpl)

.start("127.0.0.1", 7788, "/xx");

for (;;) sleep::sec(80000);

return 0;

}

co/rpc also supports the HTTP protocol. You can use the POST method to call the RPC service:

curl http://127.0.0.1:7788/xx --request POST --data '{"api":"ping"}'

- Static web server

#include "co/flag.h"

#include "co/http.h"

DEF_string(d, ".", "root dir"); // docroot for the web server

int main(int argc, char** argv) {

flag::init(argc, argv);

so::easy(FLG_d.c_str()); // mum never have to worry again

return 0;

}

- HTTP server

void cb(const http::Req& req, http::Res& res) {

if (req.is_method_get()) {

if (req.url() == "/hello") {

res.set_status(200);

res.set_body("hello world");

} else {

res.set_status(404);

}

} else {

res.set_status(405); // method not allowed

}

}

// http

http::Server().on_req(cb).start("0.0.0.0", 80);

// https

http::Server().on_req(cb).start(

"0.0.0.0", 443, "privkey.pem", "certificate.pem"

);

- HTTP client

void f() {

http::Client c("https://github.com");

c.get("/");

LOG << "response code: "<< c.status();

LOG << "body size: "<< c.body().size();

LOG << "Content-Length: "<< c.header("Content-Length");

LOG << c.header();

c.post("/hello", "data xxx");

LOG << "response code: "<< c.status();

}

go(f);

- Header files of co.

- Source files of co, built as libco.

- Some test code, each .cc file will be compiled into a separate test program.

- Some unit test code, each .cc file corresponds to a different test unit, and all code will be compiled into a single test program.

- A code generator for the RPC framework.

To build co, you need a compiler that supports C++11:

- Linux: gcc 4.8+

- Mac: clang 3.3+

- Windows: vs2015+

co recommends using xmake as the build tool.

# All commands are executed in the root directory of co (the same below)

xmake # build libco by default

xmake -a # build all projects (libco, gen, test, unitest)

xmake f -k shared

xmake -v

xmake f -p mingw

xmake -v

xmake f --with_libcurl=true --with_openssl=true

xmake -v

# Install header files and libco by default.

xmake install -o pkg # package related files to the pkg directory

xmake i -o pkg # the same as above

xmake install -o /usr/local # install to the /usr/local directory

xrepo install -f "openssl=true,libcurl=true" coost

izhengfan helped to provide cmake support, and SpaceIm improved it and made it perfect.

mkdir build && cd build

cmake ..

make -j8

mkdir build && cd build

cmake .. -DBUILD_ALL=ON

make -j8

mkdir build && cd build

cmake .. -DWITH_LIBCURL=ON -DWITH_OPENSSL=ON

make -j8

cmake .. -DBUILD_SHARED_LIBS=ON

make -j8

vcpkg install coost:x64-windows

# HTTP & SSL support

vcpkg install coost[libcurl,openssl]:x64-windows

conan install coost

find_package(coost REQUIRED CONFIG)

target_link_libraries(userTarget coost::co)

The MIT license. coost contains code from some other projects, which have their own licenses; see details in LICENSE.md.

- The code of co/context is from tbox by ruki, special thanks!

- The early English documents of co are translated by Leedehai and daidai21, special thanks!

- ruki has helped to improve the xmake building scripts, thanks in particular!

- izhengfan provided cmake building scripts, thank you very much!

- SpaceIm has improved the cmake building scripts, and provided support for find_package. Really great help, thank you!