Python: 3.10.12 or above

OS: Linux with an NVIDIA GPU is recommended, to avoid installation issues with the xformers library.
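The requirements above can be checked before installing anything. The following is a minimal sketch using only the standard library; the function names `python_ok` and `os_recommended` are illustrative and not part of this repository, and the script does not detect whether an NVIDIA GPU is present.

```python
import platform
import sys

# Minimum Python version stated in the requirements above.
MIN_PYTHON = (3, 10, 12)


def python_ok(version=None):
    """Return True if a (major, minor, micro) tuple meets the minimum version."""
    if version is None:
        version = tuple(sys.version_info[:3])
    return tuple(version) >= MIN_PYTHON


def os_recommended(system=None):
    """Return True on Linux, the recommended operating system."""
    if system is None:
        system = platform.system()
    return system == "Linux"


if __name__ == "__main__":
    print("Python version OK:", python_ok())
    print("Running on recommended OS:", os_recommended())
```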

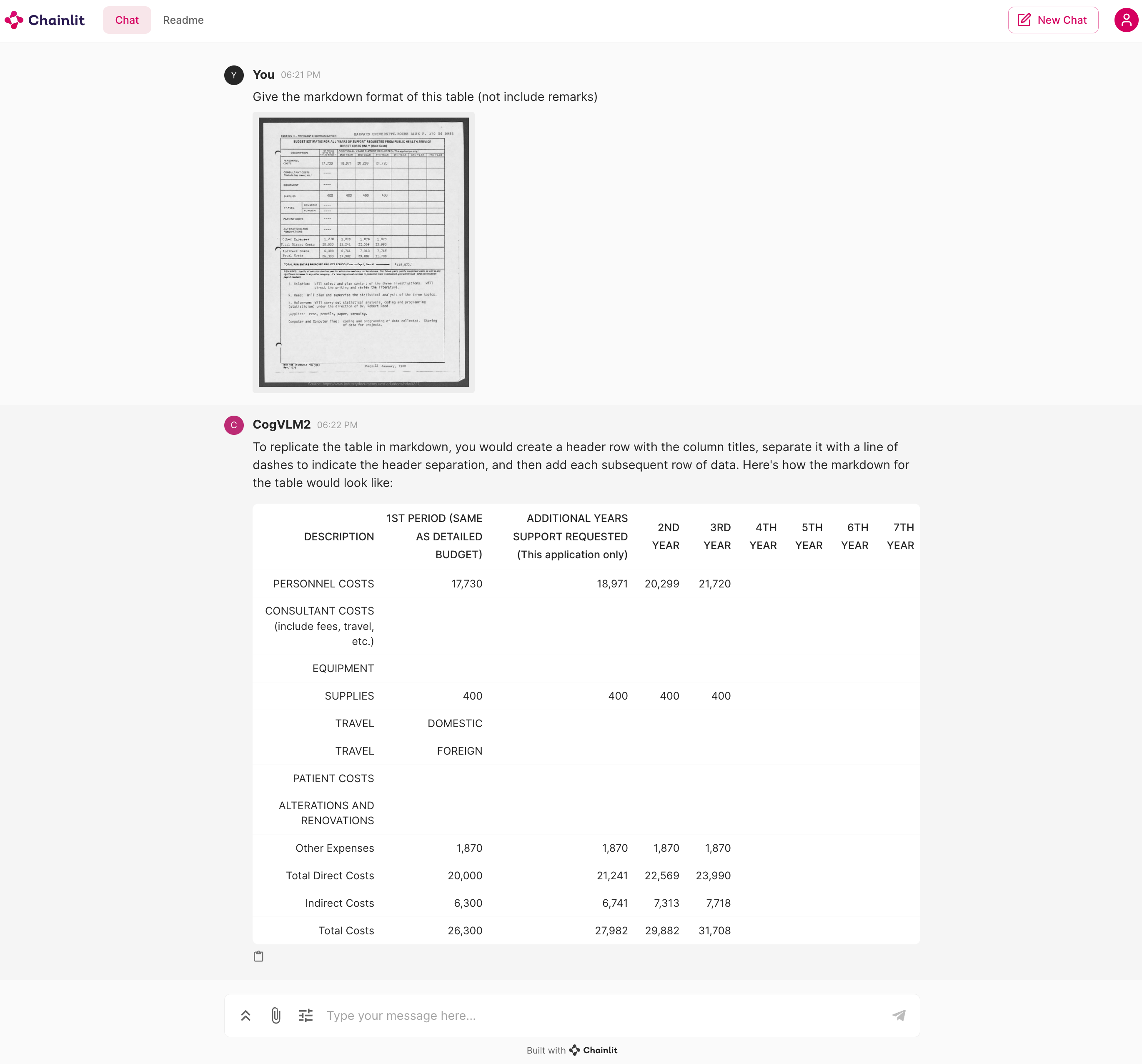

GPU requirements are as shown in the table below:

| Model Name | 19B Series Model | Remarks |
|---|---|---|
| BF16 inference | 42GB | Tested with 2K dialogue text |
| Int4 inference | 16GB | Tested with 2K dialogue text |
| BF16 LoRA Tuning (With Vision Expert Part) | 73GB (8 × A100 80GB GPUs, using ZeRO-2) | Trained with 2K dialogue text |
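As a rough guide to reading the table, the sketch below picks an inference mode from the amount of free memory on a single GPU. The helper name `suggest_inference_mode` is hypothetical, and the thresholds are simply the figures reported in the table for 2K dialogue text; longer inputs may need more memory.

```python
# Memory figures taken from the table above (19B series, 2K dialogue text).
BF16_INFERENCE_GB = 42
INT4_INFERENCE_GB = 16


def suggest_inference_mode(free_gpu_gb):
    """Suggest "bf16", "int4", or None for a single GPU with the given free memory."""
    if free_gpu_gb >= BF16_INFERENCE_GB:
        return "bf16"
    if free_gpu_gb >= INT4_INFERENCE_GB:
        return "int4"
    # Below the int4 figure, a single GPU is unlikely to be enough.
    return None


if __name__ == "__main__":
    for memory_gb in (80, 24, 8):
        print(memory_gb, "GB ->", suggest_inference_mode(memory_gb))
```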

Before running any code, make sure you have all dependencies installed. You can install all dependency packages with the following command:

    pip install -r requirements.txt

Run this code to start a conversation at the command line. Please note that the model must be loaded on a GPU:

    CUDA_VISIBLE_DEVICES=0 python cli_demo.py

If you want to use int4 (or int8) quantization, please use:

    CUDA_VISIBLE_DEVICES=0 python cli_demo.py --quant 4

If you have multiple GPUs, you can use the following code to load the model across them, distributing different layers of the model to different GPUs:

    python cli_demo_multi_gpus.py

In `cli_demo_multi_gpus.py`, we use the `infer_auto_device_map` function to automatically allocate different layers of the model to different GPUs. You need to set the `max_memory` parameter to specify the maximum memory for each GPU. For example, if you have two GPUs, each with 23GiB of memory, you can set it like this:

    import torch
    from accelerate import infer_auto_device_map

    # `model` is assumed to be the model object already created in cli_demo_multi_gpus.py.
    device_map = infer_auto_device_map(
        model=model,
        # Set 23GiB for each GPU; adjust this value to match your GPU memory.
        max_memory={i: "23GiB" for i in range(torch.cuda.device_count())},
        no_split_module_classes=["CogVLMDecoderLayer"],
    )

Run this code to start a conversation in the WebUI:

    chainlit run web_demo.py

If you want to use int4 or int8 quantization, you can launch it like this:

    CUDA_VISIBLE_DEVICES=0 QUANT=4 chainlit run web_demo.py

After starting the conversation, you will be able to interact with the model.

We provide a simple example that launches the model through the following code. After that, you can use the OpenAI API format to request a conversation with the model (optionally with `--quant 4`):

    python openai_api_demo.py

Developers can call the model through the following code:

    python openai_api_request.py
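For orientation, a request in the OpenAI chat format might look roughly like the sketch below. It is not the contents of `openai_api_request.py`: the endpoint URL, the model name, and the helper names `build_chat_payload` and `send_chat_request` are all assumptions made for illustration, so check the demo scripts for the actual values.

```python
import base64
import json
import urllib.request

# Assumptions, not taken from the project: adjust to match your running server.
API_URL = "http://127.0.0.1:8000/v1/chat/completions"
MODEL_NAME = "cogvlm2"


def build_chat_payload(prompt, image_bytes=None, max_tokens=512):
    """Build an OpenAI-style chat request body, optionally with one inline image."""
    content = [{"type": "text", "text": prompt}]
    if image_bytes is not None:
        # Images are commonly passed inline as a base64 data URL.
        encoded = base64.b64encode(image_bytes).decode("ascii")
        content.append(
            {
                "type": "image_url",
                "image_url": {"url": f"data:image/jpeg;base64,{encoded}"},
            }
        )
    return {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": content}],
        "max_tokens": max_tokens,
    }


def send_chat_request(payload, url=API_URL):
    """POST the payload as JSON and return the decoded JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the server from `openai_api_demo.py` running, calling `send_chat_request(build_chat_payload("Describe this image.", image_bytes))` would send one user turn; the shape of the response depends on the server implementation.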