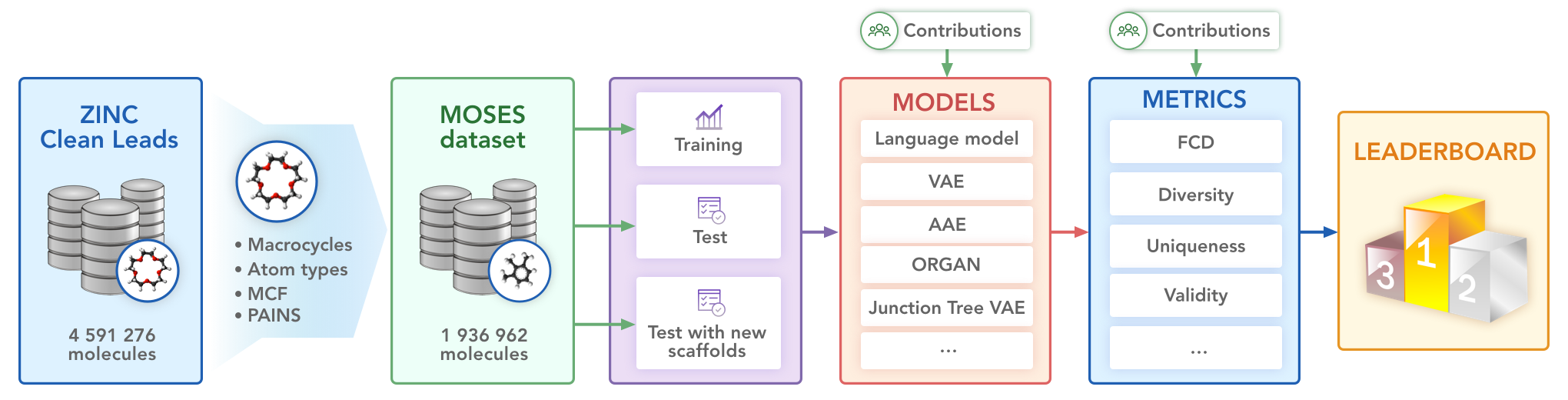

Deep generative models such as generative adversarial networks, variational autoencoders, and autoregressive models are rapidly growing in popularity for the discovery of new molecules and materials. In this work, we introduce MOlecular SEtS (MOSES), a benchmarking platform to support research on machine learning for drug discovery. MOSES implements several popular molecular generation models and includes a set of metrics that evaluate the diversity and quality of generated molecules. MOSES aims to standardize research on molecular generation and to facilitate the sharing and comparison of new models. Additionally, we provide a large-scale comparison of existing state-of-the-art models and discuss current challenges for generative models that might prove fertile ground for new research. Our platform and source code are freely available here.

We propose a benchmark set of biological molecules refined from the ZINC database.

The set is based on the ZINC Clean Leads collection, which contains 4,591,276 molecules in total, selected for a molecular weight from 250 to 350 Daltons, at most 7 rotatable bonds, and an XlogP of at most 3.5. We removed molecules containing charged atoms, atoms other than C, N, S, O, F, Cl, Br, and H, or cycles longer than 8 atoms. The remaining molecules were filtered with medicinal chemistry filters (MCFs) and PAINS filters [18].
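
The selection criteria above can be expressed as a single predicate. This is a minimal sketch, not the code used to build the dataset: it assumes the descriptors (molecular weight, rotatable bonds, XlogP, ring sizes) and the MCF/PAINS results were already computed elsewhere, for example with RDKit. The `Candidate` record, its field names, and `keep` are hypothetical, and the 250 to 350 Dalton range is assumed to be inclusive.

```python
from dataclasses import dataclass

# Hypothetical record: descriptor values are assumed to be precomputed
# (e.g. with RDKit); field names are illustrative, not part of MOSES.
@dataclass
class Candidate:
    smiles: str
    mol_weight: float         # Daltons
    rotatable_bonds: int
    xlogp: float
    elements: frozenset       # element symbols present, including H
    has_charged_atom: bool
    largest_ring_size: int    # 0 for acyclic molecules
    passes_mcf: bool          # medicinal chemistry filters
    passes_pains: bool        # PAINS filters

ALLOWED_ELEMENTS = frozenset({"C", "N", "S", "O", "F", "Cl", "Br", "H"})


def keep(c: Candidate) -> bool:
    """Return True if a candidate satisfies every criterion described above."""
    return (
        250 <= c.mol_weight <= 350           # assumed inclusive range
        and c.rotatable_bonds <= 7
        and c.xlogp <= 3.5
        and not c.has_charged_atom
        and c.elements <= ALLOWED_ELEMENTS   # subset check on element symbols
        and c.largest_ring_size <= 8         # no cycles longer than 8 atoms
        and c.passes_mcf
        and c.passes_pains
    )
```

The first three conditions describe the Clean Leads collection itself; the remaining ones correspond to the additional filtering applied on top of it.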

The resulting dataset contains 1,936,962 molecular structures. For experiments, we also provide training, test, and scaffold test sets containing 250k, 10k, and 10k molecules, respectively. The scaffold test set contains unique Bemis-Murcko scaffolds [26] that are not present in the training and test sets. We use this set to assess how well a model generates previously unobserved scaffolds.
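
One way to build a scaffold test set whose scaffolds never occur in the training or test sets is to hold out whole scaffold groups before splitting the remaining molecules at random. The sketch below assumes each molecule's Bemis-Murcko scaffold is already available as a string (for example, computed with RDKit's `MurckoScaffold` module); `scaffold_split` is a hypothetical helper and not necessarily how the MOSES splits were produced.

```python
import random
from collections import defaultdict


def scaffold_split(pairs, n_train, n_test, n_scaffold_test, seed=0):
    """Hypothetical helper: split (smiles, scaffold) pairs into train, test,
    and scaffold-test lists so that no scaffold-test scaffold appears in
    train or test. Scaffold strings are assumed to be precomputed."""
    rng = random.Random(seed)
    by_scaffold = defaultdict(list)
    for smiles, scaffold in pairs:
        by_scaffold[scaffold].append(smiles)

    scaffolds = sorted(by_scaffold)  # sort first so shuffling is reproducible
    rng.shuffle(scaffolds)

    # Hold out whole scaffold groups until the scaffold test set is large enough.
    scaffold_test, held_out = [], set()
    for scaffold in scaffolds:
        if len(scaffold_test) >= n_scaffold_test:
            break
        scaffold_test.extend(by_scaffold[scaffold])
        held_out.add(scaffold)

    # Everything else is shuffled and split at random into test and train.
    rest = [s for sc in scaffolds if sc not in held_out for s in by_scaffold[sc]]
    rng.shuffle(rest)
    if n_train + n_test > len(rest):
        raise ValueError("not enough molecules left for the requested sizes")
    test = rest[:n_test]
    train = rest[n_test:n_test + n_train]
    # Trimming keeps exclusivity: trimmed scaffolds are still absent from train/test.
    return train, test, scaffold_test[:n_scaffold_test]
```

Holding out entire scaffold groups, rather than individual molecules, is what guarantees that the scaffold test set measures generalization to unseen scaffolds.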

MOSES implements the following models:

- Character-level Recurrent Neural Network (CharRNN)

- Variational Autoencoder (VAE)

- Adversarial Autoencoder (AAE)

- Objective-Reinforced Generative Adversarial Network (ORGAN)

- Junction Tree Variational Autoencoder (JTN-VAE)

Metrics for the implemented models. Where a metric has two columns, it is computed against the test set (Test) and the scaffold test set (TestSF).

| Model | Valid (↑) | U@1k (↑) | U@10k (↑) | FCD, Test (↓) | FCD, TestSF (↓) | SNN, Test (↓) | SNN, TestSF (↓) | Frag, Test (↑) | Frag, TestSF (↑) | Scaff, Test (↑) | Scaff, TestSF (↑) | IntDiv (↑) | Filters (↑) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CharRNN | 0.9598 | 1.0000 | 0.9993 | 0.3233 | 0.8355 | 0.4606 | 0.4492 | 0.9977 | 0.9962 | 0.7964 | 0.1281 | 0.8561 | 0.9920 |
| VAE | 0.9528 | 1.0000 | 0.9992 | 0.2540 | 0.6959 | 0.4684 | 0.4547 | 0.9978 | 0.9963 | 0.8277 | 0.0925 | 0.8548 | 0.9925 |
| AAE | 0.9341 | 1.0000 | 1.0000 | 1.3511 | 1.8587 | 0.4191 | 0.4113 | 0.9865 | 0.9852 | 0.6637 | 0.1538 | 0.8531 | 0.9759 |
| ORGAN | 0.8731 | 0.9910 | 0.9260 | 1.5748 | 2.4306 | 0.4745 | 0.4593 | 0.9897 | 0.9883 | 0.7843 | 0.0632 | 0.8526 | 0.9934 |
| JTN-VAE | 1.0000 | 0.9980 | 0.9972 | 4.3769 | 4.6299 | 0.3909 | 0.3902 | 0.9679 | 0.9699 | 0.3868 | 0.1163 | 0.8495 | 0.9566 |
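
Several of the metrics in the table can be illustrated with a few lines of code. This sketch assumes canonical SMILES strings for U@k, fingerprints represented as Python sets of on-bit indices for SNN and IntDiv, and fragment or scaffold frequencies stored as `Counter` objects for Frag and Scaff. The definitions follow our reading of the MOSES paper (U@k as the fraction of unique molecules among the first k valid ones, SNN as the mean nearest-neighbor Tanimoto similarity to the reference set, IntDiv as one minus the mean pairwise similarity within the generated set, Frag and Scaff as cosine similarities of frequency vectors); treat them as illustrative rather than as the platform's exact implementation. All function names are hypothetical.

```python
import math
from collections import Counter
from itertools import islice


def unique_at_k(valid_smiles, k):
    """Fraction of unique strings among the first k valid (canonical) SMILES."""
    first_k = list(islice(valid_smiles, k))
    return len(set(first_k)) / len(first_k) if first_k else 0.0


def tanimoto(a, b):
    """Tanimoto similarity of two fingerprints given as sets of on-bit indices."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0  # two empty sets -> 0.0 by convention here


def snn(gen_fps, ref_fps):
    """Mean similarity of each generated fingerprint to its nearest reference one."""
    return sum(max(tanimoto(g, r) for r in ref_fps) for g in gen_fps) / len(gen_fps)


def int_div(fps, p=1):
    """1 - (mean of T^p over all ordered pairs, self-pairs included)^(1/p)."""
    n = len(fps)
    mean_pow = sum(tanimoto(a, b) ** p for a in fps for b in fps) / (n * n)
    return 1 - mean_pow ** (1 / p)


def cosine(counts_a: Counter, counts_b: Counter) -> float:
    """Cosine similarity of two frequency vectors (the basis of Frag and Scaff)."""
    dot = sum(counts_a[key] * counts_b[key] for key in counts_a.keys() & counts_b.keys())
    norm_a = math.sqrt(sum(v * v for v in counts_a.values()))
    norm_b = math.sqrt(sum(v * v for v in counts_b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0
```

The nested loops in `snn` and `int_div` are quadratic in the number of molecules, which is fine for illustration; a practical implementation would vectorize the similarity computation.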

You can calculate all metrics with:

```bash
cd scripts
python run.py
```

If necessary, the dataset will be downloaded and split, and all models will be trained. The resulting metric values are written to `metrics.csv` in the current directory.

For more details, run `python run.py --help`.

- Install RDKit for metrics calculation.

- Install the models with:

  ```bash
  python setup.py install
  ```

You can train a model with:

```bash
cd scripts/<model name>
python train.py --train_load <path to train dataset> --model_save <path to model> --config_save <path to config> --vocab_save <path to vocabulary>
```

For more details, run `python train.py --help`.
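
If you prefer to drive training from Python, the command above can be wrapped with `subprocess`. In this sketch, `train_command` and `train` are hypothetical helpers that use only the flags documented above; the output file names `model`, `config`, and `vocab` are illustrative choices. Paths are made absolute because the script runs from `scripts/<model name>`, and `dry_run=True` returns the command without executing it.

```python
import subprocess
from pathlib import Path


def train_command(train_path, model_path, config_path, vocab_path):
    """Build the train.py argument list using only the documented flags."""
    return [
        "python", "train.py",
        "--train_load", str(train_path),
        "--model_save", str(model_path),
        "--config_save", str(config_path),
        "--vocab_save", str(vocab_path),
    ]


def train(model_name, train_path, out_dir, dry_run=True):
    """Hypothetical helper: run scripts/<model_name>/train.py from the repo root.

    The output names "model", "config" and "vocab" are illustrative only.
    """
    out = Path(out_dir).resolve()
    # Absolute paths, because train.py runs with scripts/<model_name> as cwd.
    cmd = train_command(Path(train_path).resolve(), out / "model", out / "config", out / "vocab")
    if not dry_run:
        out.mkdir(parents=True, exist_ok=True)
        subprocess.run(cmd, cwd=Path("scripts") / model_name, check=True)
    return cmd
```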

To calculate metrics for a trained model, first sample SMILES strings from it, then evaluate them against reference SMILES:

```bash
cd scripts/<model name>
python sample.py --model_load <path to model> --config_load <path to config> --vocab_load <path to vocabulary> --n_samples <number of smiles> --gen_save <path to generated smiles>
cd ../metrics
python eval.py --ref_path <path to reference smiles> --gen_path <path to generated smiles>
```

All metrics are printed to the screen.

For more details, run `python sample.py --help` and `python eval.py --help`.
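
The two steps can also be chained from Python. This is a sketch under stated assumptions: `sample_and_eval_commands` and `sample_and_eval` are hypothetical helpers that use only the flags shown above, the model directory is assumed to hold files named `model`, `config`, and `vocab` (illustrative names), and the runner is assumed to be called from the repository root. Paths are resolved to absolute form because each script runs from its own directory.

```python
import subprocess
from pathlib import Path


def sample_and_eval_commands(model_dir, ref_path, gen_path, n_samples):
    """Build the sample.py and eval.py argument lists using the documented flags.

    Hypothetical helper: model_dir is assumed to contain files named
    "model", "config" and "vocab" (illustrative names only).
    """
    model_dir = Path(model_dir).resolve()
    gen_path = Path(gen_path).resolve()
    sample_cmd = [
        "python", "sample.py",
        "--model_load", str(model_dir / "model"),
        "--config_load", str(model_dir / "config"),
        "--vocab_load", str(model_dir / "vocab"),
        "--n_samples", str(n_samples),
        "--gen_save", str(gen_path),
    ]
    eval_cmd = [
        "python", "eval.py",
        "--ref_path", str(Path(ref_path).resolve()),
        "--gen_path", str(gen_path),  # same file that sample.py writes
    ]
    return sample_cmd, eval_cmd


def sample_and_eval(model_name, model_dir, ref_path, gen_path, n_samples):
    """Run both steps; eval.py prints the metrics to the screen."""
    sample_cmd, eval_cmd = sample_and_eval_commands(model_dir, ref_path, gen_path, n_samples)
    # sample.py runs from scripts/<model_name>, eval.py from scripts/metrics.
    subprocess.run(sample_cmd, cwd=Path("scripts") / model_name, check=True)
    subprocess.run(eval_cmd, cwd=Path("scripts") / "metrics", check=True)
```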

You can also use `python run.py --model <model name>` to calculate metrics for a specific model.