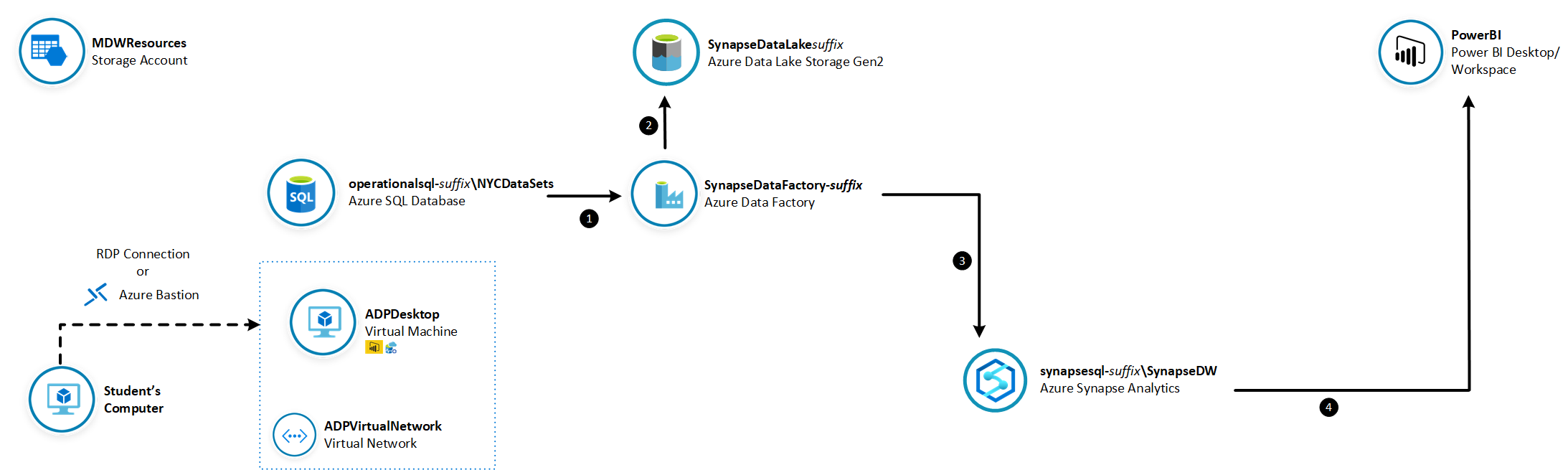

In this lab you will configure the Azure environment to allow relational data to be transferred from an Azure SQL Database to an Azure Synapse Analytics data warehouse using Azure Data Factory. The dataset you will use contains data about motor vehicle collisions that happened in New York City from 2012 to 2019. You will use Power BI to visualise collision data loaded from your Azure Synapse Analytics data warehouse.

The estimated time to complete this lab is: 45 minutes.

The following Azure services will be used in this lab. If you need further training resources or access to technical documentation, the table below lists the Microsoft Learn content and the technical documentation for each service.

| Azure Service | Microsoft Learn | Technical Documentation |
|---|---|---|
| Azure SQL Database | Work with relational data in Azure | Azure SQL Database Technical Documentation |
| Azure Data Factory | Data ingestion with Azure Data Factory | Azure Data Factory Technical Documentation |
| Azure Synapse Analytics | Implement a Data Warehouse with Azure Synapse Analytics | Azure Synapse Analytics Technical Documentation |
| Azure Data Lake Storage Gen2 | Large Scale Data Processing with Azure Data Lake Storage Gen2 | Azure Data Lake Storage Gen2 Technical Documentation |

IMPORTANT: Some of the Azure services provisioned require globally unique names, so a “-suffix” has been added to their names to ensure this uniqueness. Take note of the generated suffix, as you will need it for the following resources in this lab:

| Name | Type |
|---|---|
| SynapseDataFactory-suffix | Data Factory (V2) |
| synapsedatalakesuffix | Data Lake Storage Gen2 |
| synapsesql-suffix | SQL server |
| operationalsql-suffix | SQL server |
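The naming pattern in the table above can be sketched in Python. This is a minimal, hypothetical helper: the function names, the role keys, and the example suffix `abc123` are illustrative and not part of the lab. It assumes the suffix is lowercase alphanumeric, which follows from the suffix being appended to a storage account name, because storage account names may contain only lowercase letters and digits (3 to 24 characters) and therefore cannot contain the hyphen the other resources use.

```python
import re

# Hypothetical helper: derives the suffixed resource names listed in the table.
# Assumption: the suffix is lowercase alphanumeric, because it becomes part of a
# storage account name (3-24 characters, lowercase letters and digits only).

DATA_LAKE_PREFIX = "synapsedatalake"


def lab_resource_names(suffix: str) -> dict[str, str]:
    """Return the lab's suffixed resource names, keyed by an illustrative role."""
    if not re.fullmatch(r"[a-z0-9]+", suffix):
        raise ValueError("suffix must contain only lowercase letters and digits")
    data_lake = DATA_LAKE_PREFIX + suffix
    if len(data_lake) > 24:
        raise ValueError("storage account names are limited to 24 characters")
    return {
        # Most resources append the suffix after a hyphen.
        "data_factory": f"SynapseDataFactory-{suffix}",
        "synapse_sql_server": f"synapsesql-{suffix}",
        "operational_sql_server": f"operationalsql-{suffix}",
        # Storage account names cannot contain hyphens, so none is added here.
        "data_lake_account": data_lake,
    }


def sql_server_fqdn(server_name: str) -> str:
    """Full server name, as entered in Azure Data Studio and Power BI."""
    return f"{server_name}.database.windows.net"


if __name__ == "__main__":
    names = lab_resource_names("abc123")
    for role, name in names.items():
        print(f"{role}: {name}")
    print(sql_server_fqdn(names["synapse_sql_server"]))
```

The fully qualified form returned by `sql_server_fqdn` is the one you type later as the server name in Azure Data Studio and as the Power BI endpoint parameter.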

In this section you are going to establish a Remote Desktop Connection to the ADPDesktop virtual machine.

| IMPORTANT |
|---|
| Execute these steps on your host computer |

- In the Azure Portal, navigate to the lab resource group and click the ADPDesktop virtual machine.

- On the ADPDesktop blade, from the Overview menu, click the Connect button.

- On the Connect to virtual machine blade, click Download RDP File. This downloads a .rdp file that you can use to establish a Remote Desktop Connection with the virtual machine.

- If you have issues connecting via Remote Desktop Protocol (RDP), you can connect via Azure Bastion instead by clicking the Bastion tab and providing the credentials indicated in the next section. This opens a new browser tab with the remote connection over SSL and HTML5.

In this section you are going to install Power BI Desktop and Azure Data Studio on ADPDesktop.

| IMPORTANT |
|---|
| Execute these steps inside the ADPDesktop remote desktop connection |

- Once the RDP file is downloaded, click it to establish an RDP connection with ADPDesktop.

- Use the following credentials to authenticate:
  - User Name: ADPAdmin
  - Password: P@ssw0rd123!

- Once logged in, accept the default privacy settings.

- Using the browser, download and install the latest version of the following software. During setup, accept all default settings:
  - Azure Data Studio (User Installer): https://docs.microsoft.com/en-us/sql/azure-data-studio/download
  - Power BI Desktop (64-bit): https://aka.ms/pbiSingleInstaller

In this section you will connect to Azure Synapse Analytics to create the database objects used to host and process data.

| IMPORTANT |
|---|
| Execute these steps inside the ADPDesktop remote desktop connection |

- Open Azure Data Studio. On the Servers panel, click New Connection.

- On the Connection Details panel, enter the following connection details:
  - Server: synapsesql-suffix.database.windows.net
  - Authentication Type: SQL Login
  - User Name: ADPAdmin
  - Password: P@ssw0rd123!
  - Database: SynapseDW

- Click Connect.

- Right-click the server name and click New Query.

- In the new query window, create a new database schema named [NYC] using this SQL command:

```sql
create schema [NYC]
go
```

- Create a new round-robin distributed table named NYC.NYPD_MotorVehicleCollisions, using the column definitions in the SQL command below:

```sql
create table [NYC].[NYPD_MotorVehicleCollisions](
    [UniqueKey] [int] NULL,
    [CollisionDate] [date] NULL,
    [CollisionDayOfWeek] [varchar](9) NULL,
    [CollisionTime] [time](7) NULL,
    [CollisionTimeAMPM] [varchar](2) NOT NULL,
    [CollisionTimeBin] [varchar](11) NULL,
    [Borough] [varchar](200) NULL,
    [ZipCode] [varchar](20) NULL,
    [Latitude] [float] NULL,
    [Longitude] [float] NULL,
    [Location] [varchar](200) NULL,
    [OnStreetName] [varchar](200) NULL,
    [CrossStreetName] [varchar](200) NULL,
    [OffStreetName] [varchar](200) NULL,
    [NumberPersonsInjured] [int] NULL,
    [NumberPersonsKilled] [int] NULL,
    [IsFatalCollision] [int] NOT NULL,
    [NumberPedestriansInjured] [int] NULL,
    [NumberPedestriansKilled] [int] NULL,
    [NumberCyclistInjured] [int] NULL,
    [NumberCyclistKilled] [int] NULL,
    [NumberMotoristInjured] [int] NULL,
    [NumberMotoristKilled] [int] NULL,
    [ContributingFactorVehicle1] [varchar](200) NULL,
    [ContributingFactorVehicle2] [varchar](200) NULL,
    [ContributingFactorVehicle3] [varchar](200) NULL,
    [ContributingFactorVehicle4] [varchar](200) NULL,
    [ContributingFactorVehicle5] [varchar](200) NULL,
    [VehicleTypeCode1] [varchar](200) NULL,
    [VehicleTypeCode2] [varchar](200) NULL,
    [VehicleTypeCode3] [varchar](200) NULL,
    [VehicleTypeCode4] [varchar](200) NULL,
    [VehicleTypeCode5] [varchar](200) NULL
)
with (distribution = round_robin)
go
```

In this section you will create a staging container in your data lake account. PolyBase uses this container as a staging area before the data is loaded into Azure Synapse Analytics.

| IMPORTANT |
|---|
| Execute these steps on your host computer |

- In the Azure Portal, go to the lab resource group and locate the Azure Storage account synapsedatalakesuffix.

- On the Overview panel, click Containers.

- On the synapsedatalakesuffix – Containers blade, click + Container. On the New container blade, enter the following details:
  - Name: polybase
  - Public access level: Private (no anonymous access)

- Click OK to create the new container.
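The container name has to satisfy the Azure Storage container naming rules: 3 to 63 characters, only lowercase letters, digits and hyphens, starting and ending with a letter or digit, and no consecutive hyphens. The sketch below checks those rules and builds the Blob service URL of the staging container. The helper names and the example account `synapsedatalakeabc123` are hypothetical, and the URL assumes the default public Azure cloud endpoint (`blob.core.windows.net`).

```python
import re

# Azure Storage container naming rules: 3-63 characters; lowercase letters,
# digits and hyphens; must start and end with a letter or digit; no "--".
_CONTAINER_NAME = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")


def is_valid_container_name(name: str) -> bool:
    """Return True if `name` follows the container naming rules above."""
    return 3 <= len(name) <= 63 and _CONTAINER_NAME.fullmatch(name) is not None


def staging_container_url(account: str, container: str) -> str:
    """Blob service URL of a container (assumes the public Azure cloud endpoint)."""
    if not is_valid_container_name(container):
        raise ValueError(f"invalid container name: {container!r}")
    return f"https://{account}.blob.core.windows.net/{container}"


if __name__ == "__main__":
    # Hypothetical storage account name for a suffix of "abc123".
    print(staging_container_url("synapsedatalakeabc123", "polybase"))
```

Under these rules `polybase` is accepted, while names such as `PolyBase` or `poly--base` would be rejected by the portal.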

In this section you will build an Azure Data Factory pipeline to copy a table from the NYCDataSets database to the Azure Synapse Analytics data warehouse.

| IMPORTANT |
|---|
| Execute these steps on your host computer |

-

In the Azure Portal, go to the lab resource group and locate the Azure Data Factory resource SynapseDataFactory-suffix.

-

On the Overview panel, click Author & Monitor. The Azure Data Factory portal will open in a new browser tab.

-

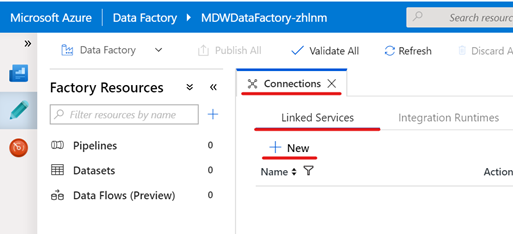

In the Azure Data Factory portal and click the Author (pencil icon) option on the left-hand side panel. Under Connections tab, click Linked Services and then click + New to create a new linked service connection.

-

On the New Linked Service blade, type “Azure SQL Database” in the search box to find the Azure SQL Database linked service. Click Continue.

-

On the New Linked Service (Azure SQL Database) blade, enter the following details:

- Name: OperationalSQL_NYCDataSets

- Account selection method: From Azure subscription

- Azure subscription: [your subscription]

- Server Name: operationalsql-suffix

- Database Name: NYCDataSets

- Authentication Type: SQL Authentication

- User Name: ADPAdmin

- Password: P@ssw0rd123! -

Click Test connection to make sure you entered the correct connection details and then click Finish.

- Repeat the process to create an Azure Synapse Analytics linked service connection.

- On the New Linked Service (Azure Synapse Analytics) blade, enter the following details:
  - Name: SynapseSQL_SynapseDW
  - Connect via integration runtime: AutoResolveIntegrationRuntime
  - Account selection method: From Azure subscription
  - Azure subscription: [your subscription]
  - Server Name: synapsesql-suffix
  - Database Name: SynapseDW
  - Authentication Type: SQL Authentication
  - User Name: ADPAdmin
  - Password: P@ssw0rd123!

- Click Test connection to make sure you entered the correct connection details and then click Finish.

- Repeat the process once again to create an Azure Blob Storage linked service connection.

- On the New Linked Service (Azure Blob Storage) blade, enter the following details:
  - Name: synapsedatalake
  - Connect via integration runtime: AutoResolveIntegrationRuntime
  - Authentication method: Account key
  - Account selection method: From Azure subscription
  - Azure subscription: [your subscription]
  - Storage account name: synapsedatalakesuffix

- Click Test connection to make sure you entered the correct connection details and then click Finish.

- You should now see three linked service connections, which will be used as the source, destination and staging area.
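Behind the portal forms, Data Factory stores each linked service as a JSON definition. The sketch below generates approximate equivalents of the three linked services created above; it is an illustration under assumptions, not an export from the lab, and the helper names and the suffix `abc123` are hypothetical. The `<password>` and `<account-key>` placeholders are deliberately left unfilled so that no secrets end up in code, and the connection strings follow the usual SQL Server and Azure Storage keyword formats.

```python
import json

# Every linked service in this sketch uses the default Azure integration runtime.
AUTO_RESOLVE_IR = {
    "referenceName": "AutoResolveIntegrationRuntime",
    "type": "IntegrationRuntimeReference",
}


def sql_linked_service(name: str, ls_type: str, server: str, database: str) -> dict:
    """Azure SQL Database ("AzureSqlDatabase") or Synapse ("AzureSqlDW") linked service."""
    connection_string = (
        f"Data Source=tcp:{server}.database.windows.net,1433;"
        f"Initial Catalog={database};User ID=ADPAdmin;"
        "Password=<password>;Encrypt=True;Connection Timeout=30"  # placeholder secret
    )
    return {
        "name": name,
        "properties": {
            "type": ls_type,
            "typeProperties": {"connectionString": connection_string},
            "connectVia": AUTO_RESOLVE_IR,
        },
    }


def blob_linked_service(name: str, account: str) -> dict:
    """Azure Blob Storage linked service using account-key authentication."""
    connection_string = (
        f"DefaultEndpointsProtocol=https;AccountName={account};"
        "AccountKey=<account-key>"  # placeholder secret
    )
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {"connectionString": connection_string},
            "connectVia": AUTO_RESOLVE_IR,
        },
    }


def lab_linked_services(suffix: str) -> list[dict]:
    """The source, sink and staging linked services created in the steps above."""
    return [
        sql_linked_service("OperationalSQL_NYCDataSets", "AzureSqlDatabase",
                           f"operationalsql-{suffix}", "NYCDataSets"),
        sql_linked_service("SynapseSQL_SynapseDW", "AzureSqlDW",
                           f"synapsesql-{suffix}", "SynapseDW"),
        blob_linked_service("synapsedatalake", f"synapsedatalake{suffix}"),
    ]


if __name__ == "__main__":
    print(json.dumps(lab_linked_services("abc123"), indent=2))
```

In practice the portal stores credentials as secure values (or Azure Key Vault references) rather than in plain text, which is why the sketch keeps them as placeholders.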

| IMPORTANT |
|---|
| Execute these steps on your host computer |

-

Open the Azure Data Factory portal and click the Author (pencil icon) option on the left-hand side panel. Under Factory Resources tab, click the ellipsis (…) next to Datasets and then click New Dataset to create a new dataset.

-

Type "Azure SQL Database" in the search box and select Azure SQL Database. Click Finish.

-

On the New Data Set tab, enter the following details:

- Name: NYCDataSets_MotorVehicleCollisions

- Linked Service: OperationalSQL_NYCDataSets

- Table: [NYC].[NYPD_MotorVehicleCollisions]Alternatively you can copy and paste the dataset JSON definition below:

{ "name": "NYCDataSets_MotorVehicleCollisions", "properties": { "linkedServiceName": { "referenceName": "OperationalSQL_NYCDataSets", "type": "LinkedServiceReference" }, "folder": { "name": "Lab1" }, "annotations": [], "type": "AzureSqlTable", "schema": [], "typeProperties": { "schema": "NYC", "table": "NYPD_MotorVehicleCollisions" } } } -

Leave remaining fields with default values and click Continue.

-

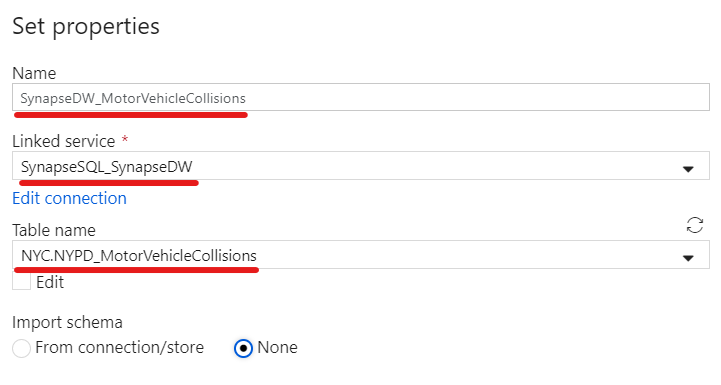

Repeat the process to create a new Azure Synapse Analytics data set.

-

On the New Data Set tab, enter the following details:

- Name: SynapseDW_MotorVehicleCollisions

- Linked Service: SynapseSQL_SynapseDW

- Table: [NYC].[NYPD_MotorVehicleCollisions]Alternatively you can copy and paste the dataset JSON definition below:

{ "name": "SynapseDW_MotorVehicleCollisions", "properties": { "linkedServiceName": { "referenceName": "SynapseSQL_SynapseDW", "type": "LinkedServiceReference" }, "folder": { "name": "Lab1" }, "annotations": [], "type": "AzureSqlDWTable", "schema": [], "typeProperties": { "schema": "NYC", "table": "NYPD_MotorVehicleCollisions" } } } -

Leave remaining fields with default values and click Continue.

- On the Factory Resources tab, click the ellipsis (…) next to Datasets and then click New folder to create a new folder. Name it Lab1.

- Drag the two datasets you created into the Lab1 folder.

- Publish your dataset changes by clicking the Publish all button at the top of the screen.
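The source and sink dataset definitions differ only in their name, linked service and dataset type (`AzureSqlTable` for the Azure SQL Database source, `AzureSqlDWTable` for the Azure Synapse Analytics sink); both point at the same `NYC.NYPD_MotorVehicleCollisions` table and the Lab1 folder. The sketch below makes that explicit by generating both definitions from one function. The `table_dataset` helper is hypothetical and only reproduces the JSON shape used in this lab.

```python
import json


def table_dataset(name: str, linked_service: str, dataset_type: str,
                  schema: str = "NYC", table: str = "NYPD_MotorVehicleCollisions") -> dict:
    """Build an ADF table dataset definition in the shape used by this lab."""
    return {
        "name": name,
        "properties": {
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            "folder": {"name": "Lab1"},  # same folder the datasets are dragged into
            "annotations": [],
            "type": dataset_type,
            "schema": [],
            "typeProperties": {"schema": schema, "table": table},
        },
    }


# Source: the operational Azure SQL Database table.
source = table_dataset("NYCDataSets_MotorVehicleCollisions",
                       "OperationalSQL_NYCDataSets", "AzureSqlTable")
# Sink: the round-robin table created in Azure Synapse Analytics.
sink = table_dataset("SynapseDW_MotorVehicleCollisions",
                     "SynapseSQL_SynapseDW", "AzureSqlDWTable")

if __name__ == "__main__":
    print(json.dumps(source, indent=2))
    print(json.dumps(sink, indent=2))
```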

| IMPORTANT |
|---|
| Execute these steps on your host computer |

-

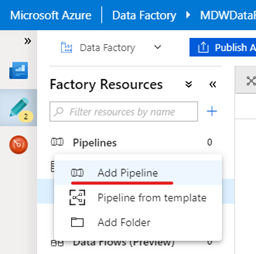

Open the Azure Data Factory portal and click the Author (pencil icon) option on the left-hand side panel. Under Factory Resources tab, click the ellipsis (…) next to Pipelines and then click Add Pipeline to create a new pipeline.

-

On the New Pipeline tab, enter the following details:

- General > Name: Lab1 - Copy Collision Data -

Leave remaining fields with default values.

-

From the Activities panel, type “Copy Data” in the search box. Drag the Copy Data activity on to the design surface.

-

Select the Copy Data activity and enter the following details:

- General > Name: CopyMotorVehicleCollisions

- Source > Source dataset: NYCDataSets_MotorVehicleCollisions

- Sink > Sink dataset: SynapseDW_MotorVehicleCollisions

- Sink > Allow PolyBase: Checked

- Settings > Enable staging: Checked

- Settings > Staging account linked service: synapsedatalake

- Settings > Storage Path: polybase -

Leave remaining fields with default values.

- Publish your pipeline changes by clicking the Publish all button.

- To execute the pipeline, click the Add trigger menu and then Trigger Now.

- On the Pipeline Run blade, click Finish.

- To monitor the execution of your pipeline, click the Monitor menu on the left-hand side panel.

- You should see the status of your pipeline execution on the right-hand side panel.
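The pipeline configured above corresponds roughly to the JSON sketched below. This is an illustrative reconstruction rather than the definition the portal exports, which typically carries additional default properties. It shows how the form settings map onto the Copy activity: the source reads through an `AzureSqlSource`, the sink writes through a `SqlDWSink` with `allowPolyBase` enabled, and `enableStaging` plus `stagingSettings` send the interim data to the `polybase` path of the `synapsedatalake` linked service. The helper function names are hypothetical.

```python
import json


def dataset_ref(name: str) -> dict:
    """Reference to a dataset by name, as used in activity inputs and outputs."""
    return {"referenceName": name, "type": "DatasetReference"}


def copy_collisions_pipeline() -> dict:
    """Sketch of the 'Lab1 - Copy Collision Data' pipeline with PolyBase staging."""
    copy_activity = {
        "name": "CopyMotorVehicleCollisions",
        "type": "Copy",
        "inputs": [dataset_ref("NYCDataSets_MotorVehicleCollisions")],
        "outputs": [dataset_ref("SynapseDW_MotorVehicleCollisions")],
        "typeProperties": {
            "source": {"type": "AzureSqlSource"},
            # Sink > Allow PolyBase: Checked
            "sink": {"type": "SqlDWSink", "allowPolyBase": True},
            # Settings > Enable staging, staging linked service and storage path
            "enableStaging": True,
            "stagingSettings": {
                "linkedServiceName": {
                    "referenceName": "synapsedatalake",
                    "type": "LinkedServiceReference",
                },
                "path": "polybase",
            },
        },
    }
    return {"name": "Lab1 - Copy Collision Data",
            "properties": {"activities": [copy_activity]}}


if __name__ == "__main__":
    print(json.dumps(copy_collisions_pipeline(), indent=2))
```

Serialising with `json.dumps` turns the Python `True` values into JSON `true`, matching the checkboxes ticked in the portal.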

In this section you are going to use Power BI to visualise data from Azure Synapse Analytics. The Power BI report uses an Import connection to query Azure Synapse Analytics and visualise motor vehicle collision data from the table you loaded in the previous exercise.

| IMPORTANT |
|---|
| Execute these steps inside the ADPDesktop remote desktop connection |

- On ADPDesktop, download the Power BI report from the link https://aka.ms/ADPLab1 and save it on the Desktop.

- Open the file ADPLab1.pbit with Power BI Desktop. If prompted, you can optionally sign up for the Power BI tips and tricks email; to dismiss this prompt, click the option to sign in with an existing account and then press the Escape key.

- When prompted to enter the value of the SynapseSQLEnpoint parameter, type the full server name: synapsesql-suffix.database.windows.net

- Click Load, and then click Run to acknowledge the Native Database Query message.

- When prompted, enter the database credentials:
  - User Name: adpadmin
  - Password: P@ssw0rd123!