A music streaming startup, Sparkify, has grown its user base and song database and wants to move its data warehouse to a data lake. Its data resides in S3, in a directory of JSON logs of user activity on the app and a directory of JSON metadata on the songs in the app.

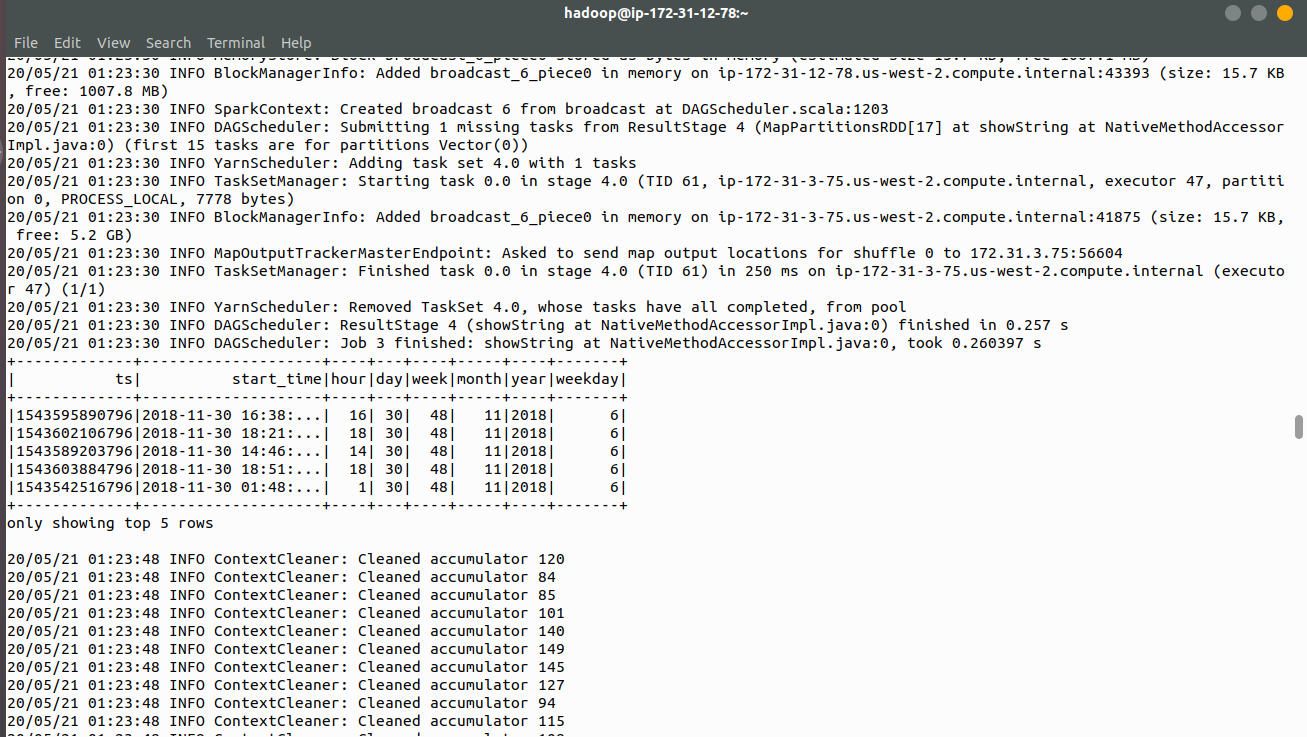

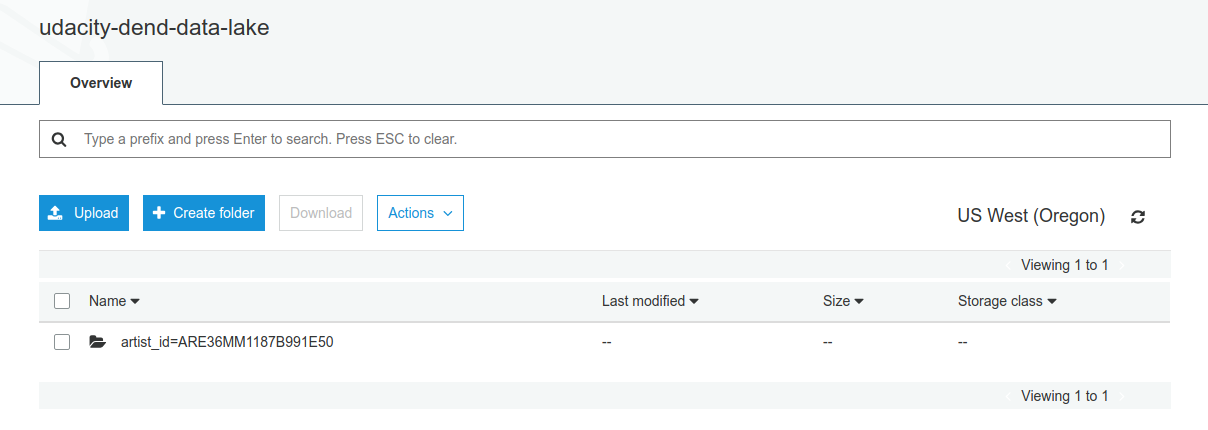

In this project, we will build an ETL pipeline for a data lake hosted on S3. We will load data from S3, process the data into analytics tables using Spark, and load them back into S3. We will deploy this Spark process on a cluster using AWS.

- /images - Some screenshots.

- etl.py - Spark script that performs the ETL job.

- dl.cfg - Configuration file that holds the AWS credentials.
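As a rough illustration of how etl.py might pick up the credentials from dl.cfg, here is a minimal sketch using Python's standard `configparser`. The `[AWS]` section name and the two key names are assumptions, since the layout of dl.cfg is not shown here, and the values are placeholders.

```python
import configparser
import os


def load_aws_credentials(config_text):
    """Parse dl.cfg-style text and return (access_key_id, secret_access_key)."""
    parser = configparser.ConfigParser()
    parser.read_string(config_text)
    section = parser["AWS"]  # assumed section name, not confirmed by the project
    return section["AWS_ACCESS_KEY_ID"], section["AWS_SECRET_ACCESS_KEY"]


def export_credentials(key_id, secret):
    """Expose the credentials through the environment variables AWS clients read."""
    os.environ["AWS_ACCESS_KEY_ID"] = key_id
    os.environ["AWS_SECRET_ACCESS_KEY"] = secret


# Placeholder content standing in for the real dl.cfg file.
SAMPLE_CFG = """
[AWS]
AWS_ACCESS_KEY_ID = EXAMPLE_KEY_ID
AWS_SECRET_ACCESS_KEY = EXAMPLE_SECRET
"""

key_id, secret = load_aws_credentials(SAMPLE_CFG)
export_credentials(key_id, secret)
```

With a real file on disk, `parser.read("dl.cfg")` would replace `read_string`. The file should stay out of version control because it contains secrets.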

The project works with two datasets that reside in S3. Here are the S3 links for each:

- Song data - s3://udacity-dend/song_data

- Log data - s3://udacity-dend/log_data
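The two locations above could be read into Spark DataFrames roughly as follows. This is a sketch, not the actual etl.py: the helper name `read_inputs` is hypothetical, and the glob depths (three nested folders for song data, two for log data) are an assumption about how the JSON files are laid out under each prefix.

```python
SONG_DATA = "s3://udacity-dend/song_data"
LOG_DATA = "s3://udacity-dend/log_data"


def read_inputs(spark, song_root=SONG_DATA, log_root=LOG_DATA):
    """Read both JSON datasets using an existing SparkSession.

    Returns a (song_df, log_df) pair of DataFrames.
    """
    # Assumed layout: song files nested three folders deep, log files two.
    song_df = spark.read.json(f"{song_root}/*/*/*/*.json")
    log_df = spark.read.json(f"{log_root}/*/*/*.json")
    return song_df, log_df
```

In the script, `spark` would typically come from `SparkSession.builder.getOrCreate()`. The `s3://` scheme is expected to work on EMR; when running Spark elsewhere, an `s3a://` path may be needed instead.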

songplays - records in event data associated with song plays. Columns for the table:

songplay_id, start_time, user_id, level, song_id, artist_id, session_id, location, user_agent

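users - users in the app. Columns for the table:

user_id, first_name, last_name, gender, level

songs - songs in the music database. Columns for the table:

song_id, title, artist_id, year, duration

artists - artists in the music database. Columns for the table:

artist_id, name, location, lattitude, longitude

time - timestamps of records in songplays broken down into specific units. Columns for the table:

start_time, hour, day, week, month, year, weekday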
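To make the last column list concrete, the sketch below derives hour, day, week, month, year, and weekday from a single timestamp in plain Python. It assumes the event timestamp is stored as epoch milliseconds and is interpreted in UTC; neither is stated here. The function name `time_row` is hypothetical, and the real job would do the equivalent with Spark column functions.

```python
from datetime import datetime, timezone


def time_row(ts_ms):
    """Break an epoch-millisecond timestamp into time-table columns (UTC)."""
    start_time = datetime.fromtimestamp(ts_ms / 1000, tz=timezone.utc)
    return {
        "start_time": start_time,
        "hour": start_time.hour,
        "day": start_time.day,
        "week": start_time.isocalendar()[1],  # ISO week number
        "month": start_time.month,
        "year": start_time.year,
        "weekday": start_time.isoweekday(),  # Monday=1 ... Sunday=7
    }


# Sample value chosen for illustration only.
row = time_row(1541903636796)
```

In PySpark, `hour`, `dayofmonth`, `weekofyear`, `month`, `year`, and `dayofweek` from `pyspark.sql.functions` cover the same fields. Note that `dayofweek` numbers Sunday as 1, so the weekday convention should be chosen deliberately.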

- Fill in your AWS credentials in the dl.cfg file

- Create an EMR cluster

- Copy your code to the EMR cluster

- Execute etl.py as a spark-submit job

- Check the processed result in S3
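The submit step above can be scripted. The sketch below only assembles the command line. The helper name `spark_submit_command` is hypothetical, and the `--master yarn` and `--deploy-mode client` settings are assumptions about the cluster setup rather than values taken from this project.

```python
import shlex


def spark_submit_command(script="etl.py", master="yarn", deploy_mode="client"):
    """Build the argument list for submitting the ETL script to Spark."""
    return [
        "spark-submit",
        "--master", master,            # assumed: YARN, as is usual on EMR
        "--deploy-mode", deploy_mode,  # assumed: driver runs on the submitting node
        script,
    ]


command = spark_submit_command()
# Print the command as a single shell-safe string for copy and paste.
print(shlex.join(command))
```

On the cluster's master node, the same list could be passed to `subprocess.run(command, check=True)` so that a failed job raises an error instead of passing silently.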