theme: Letters from Sweden, 4

Intro to Maths for Machine Learning

*Amit Kapoor* @amitkaps

What I cannot create, I do not understand -- Richard Feynman

Hacker literally means developing mastery over something. -- Paul Graham

Here we aim to learn the math essential for data science in this hacker way.

- Why do you need to understand the math?

- What math knowledge do you need?

- Why approach it the hacker's way?

- Understand the Math.

- Code it to learn it.

- Play with code.

- Solve $$Ax = b$$ for $$n \times n$$

- Solve $$Ax = b$$ for $$n \times (p + 1)$$ (Linear Regression)

- Ridge Regularization (L2)

- Bootstrapping

- Logistic Regression (Classification)

- Basic Statistics

- Distributions

- Shuffling

- Bootstrapping & Simulation

- A/B Testing

- Solve $$Ax = \lambda x$$ for $$n \times n$$ (Eigenvectors & Eigenvalues)

- Principal Component Analysis

- Cluster Analysis (K-Means)
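In the "code it to learn it" spirit, here is a minimal sketch of the first item, solving $$Ax = b$$ for a square, non-singular $$n \times n$$ matrix by Gaussian elimination with partial pivoting, checked against NumPy's `numpy.linalg.solve`. The function name `gaussian_solve` and the 3x3 example system are illustrative assumptions, not part of the original material.

```python
import numpy as np

def gaussian_solve(A, b):
    """Solve A x = b for a square, non-singular A by Gaussian elimination
    with partial pivoting, followed by back substitution."""
    A = np.array(A, dtype=float)   # work on copies so the inputs are untouched
    b = np.array(b, dtype=float)
    n = len(b)
    for k in range(n):
        # Partial pivoting: swap in the row with the largest |A[i, k]| for i >= k
        p = k + np.argmax(np.abs(A[k:, k]))
        A[[k, p]] = A[[p, k]]
        b[[k, p]] = b[[p, k]]
        # Eliminate every entry below the pivot A[k, k]
        for i in range(k + 1, n):
            factor = A[i, k] / A[k, k]
            A[i, k:] -= factor * A[k, k:]
            b[i] -= factor * b[k]
    # Back substitution on the resulting upper-triangular system
    x = np.zeros(n)
    for i in range(n - 1, -1, -1):
        x[i] = (b[i] - A[i, i + 1:] @ x[i + 1:]) / A[i, i]
    return x

# Hypothetical example system with the known solution x = (2, 3, -1)
A = np.array([[2.0, 1.0, -1.0],
              [-3.0, -1.0, 2.0],
              [-2.0, 1.0, 2.0]])
b = np.array([8.0, -11.0, -3.0])

x = gaussian_solve(A, b)
x_np = np.linalg.solve(A, b)   # library solver for comparison
```

For anything beyond learning, `numpy.linalg.solve` (which delegates to LAPACK) is the safer choice; the hand-written version is only there to make each elimination step visible.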

It’s tough to make predictions, especially about the future. -- Yogi Berra

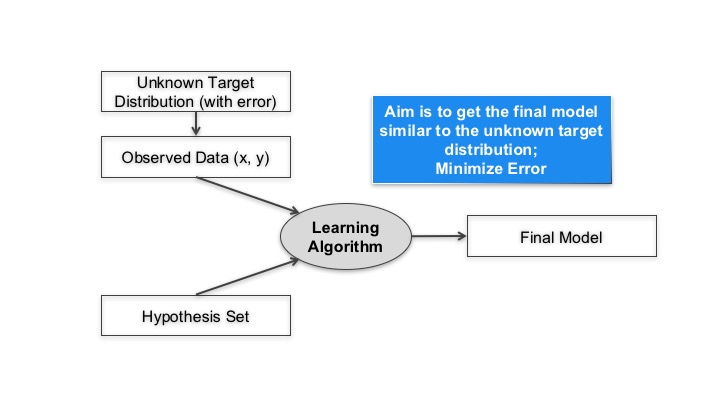

[Machine learning is the] field of study that gives computers the ability to learn without being explicitly programmed. -- Arthur Samuel

Machine learning is the study of computer algorithms that improve automatically through experience -- Tom Mitchell

- “Is this cancer?”

- “What is the market value of this house?”

- “Which of these people are friends?”

- “Will this person like this movie?”

- “Who is this?”

- “What did you say?”

- “How do you fly this thing?”

- Search

- Photo Tagging

- Spam Filtering

- Recommendation

- ...

- Database Mining e.g. Clickstream data, Business data

- Automating e.g. Handwriting, Natural Language Processing, Computer Vision

- Self-Customising Programs e.g. Recommendations

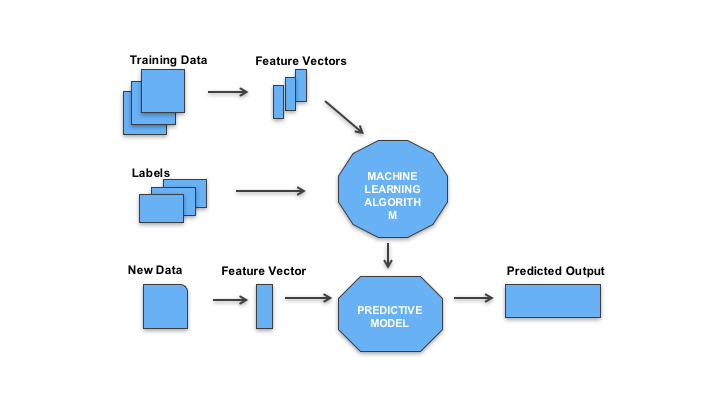

- Supervised Learning

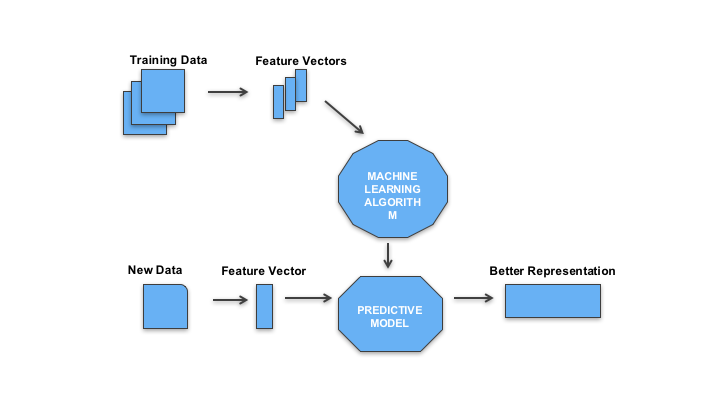

- Unsupervised Learning

- Reinforcement Learning

- Online Learning

- Regression

- Classification

- Clustering

- Dimensionality Reduction

- Frame: Problem definition

- Acquire: Data ingestion

- Refine: Data wrangling

- Transform: Feature creation

- Explore: Feature selection

- Model: Model creation & assessment

- Insight: Communication

*Interactive Example: [http://setosa.io/ev/](http://setosa.io/ev/ordinary-least-squares-regression/)*
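The ordinary least squares fit in the interactive example can be reproduced as "solve $$Ax = b$$ for $$n \times (p + 1)$$": add a column of ones for the intercept to get the design matrix $$X$$, then solve the normal equations $$X^T X \beta = X^T y$$. This is a minimal sketch on hypothetical toy data (a true line $$y = 1 + 2x$$ plus noise); `numpy.linalg.lstsq` is shown alongside because it avoids forming $$X^T X$$ explicitly.

```python
import numpy as np

# Hypothetical toy data: y is roughly 1 + 2x plus Gaussian noise
rng = np.random.default_rng(0)
x = rng.uniform(0, 10, size=50)
y = 1.0 + 2.0 * x + rng.normal(0, 1, size=50)

# Design matrix X is n x (p + 1): a column of ones (intercept), then the feature
X = np.column_stack([np.ones_like(x), x])

# Normal equations: (X^T X) beta = X^T y is a square (p+1) x (p+1) system
beta_normal = np.linalg.solve(X.T @ X, X.T @ y)

# NumPy's least-squares solver returns (solution, residuals, rank, singular values)
beta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)

print(beta_normal)  # intercept and slope, close to [1, 2]
```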

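Find the $$ \beta $$ parameters that best fit:

$$ y = 1 $$ if $$ \beta_0 + \beta_1 x + \epsilon > 0 $$, and $$ y = 0 $$ otherwise

where the error $$ \epsilon $$ follows the standard logistic distribution: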

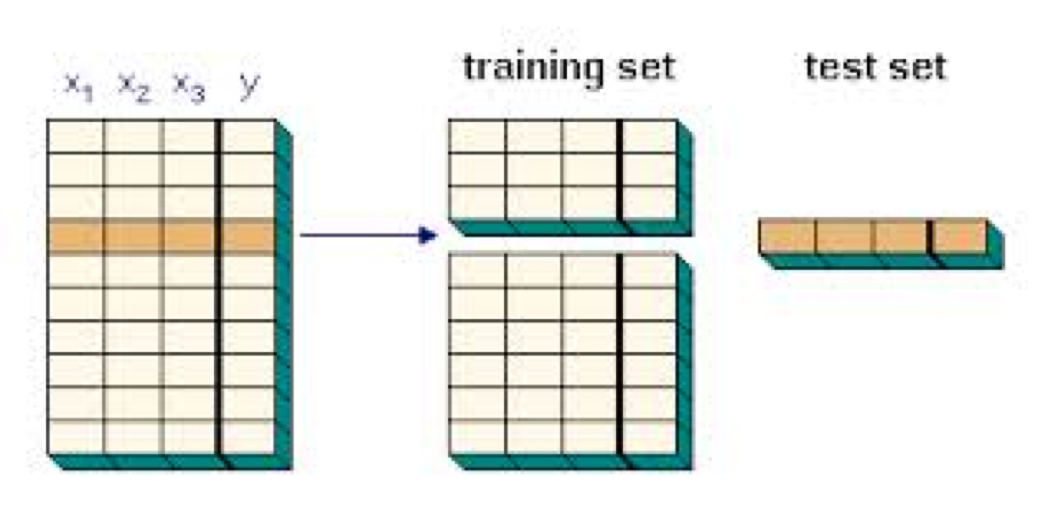

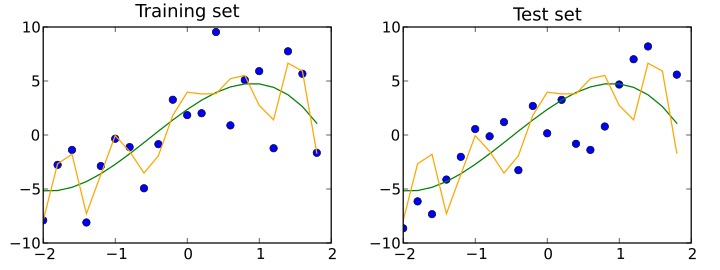
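A minimal sketch of fitting the $$ \beta $$ parameters of a one-feature logistic regression by batch gradient descent on the mean log-loss. Predicting $$ y = 1 $$ when the fitted probability is at least 0.5 is equivalent to predicting $$ y = 1 $$ when $$ \beta_0 + \beta_1 x \ge 0 $$. The helper names, learning rate, iteration count, and toy data are assumptions for illustration; libraries such as scikit-learn use more robust solvers.

```python
import numpy as np

def sigmoid(z):
    # The logistic function maps any real number into (0, 1)
    return 1.0 / (1.0 + np.exp(-z))

def fit_logistic(X, y, lr=0.1, n_iter=5000):
    """Batch gradient descent on the mean log-loss.
    X is n x (p + 1) with a leading column of ones; y holds 0/1 labels."""
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = sigmoid(X @ beta)             # predicted P(y = 1 | x)
        grad = X.T @ (p - y) / len(y)     # gradient of the mean log-loss
        beta -= lr * grad
    return beta

# Hypothetical toy data: the label is 1 mostly when x is large
rng = np.random.default_rng(1)
x = rng.normal(0, 1, size=200)
y = (x + rng.normal(0, 0.5, size=200) > 0).astype(float)
X = np.column_stack([np.ones_like(x), x])

beta = fit_logistic(X, y)
pred = (sigmoid(X @ beta) >= 0.5).astype(float)   # same as X @ beta >= 0
accuracy = (pred == y).mean()
```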

Split the data: 80% training / 20% test

Measure the error on the test data
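A minimal sketch of the 80% / 20% workflow: shuffle the rows, fit on the training part only, then measure the error on the held-out test part. The helper `train_test_split_80_20` is hypothetical (scikit-learn offers `sklearn.model_selection.train_test_split` for the same job), and the data is a made-up line $$ y = 3x $$ plus noise.

```python
import numpy as np

def train_test_split_80_20(X, y, seed=42):
    """Shuffle row indices, then put 80% of rows in train and 20% in test."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(y))
    cut = int(0.8 * len(y))
    train, test = idx[:cut], idx[cut:]
    return X[train], X[test], y[train], y[test]

# Hypothetical data: y = 3x + noise
rng = np.random.default_rng(0)
x = rng.uniform(0, 1, size=100)
y = 3 * x + rng.normal(0, 0.1, size=100)
X = np.column_stack([np.ones_like(x), x])

X_train, X_test, y_train, y_test = train_test_split_80_20(X, y)
beta, *_ = np.linalg.lstsq(X_train, y_train, rcond=None)   # fit on train only
test_mse = np.mean((y_test - X_test @ beta) ** 2)          # error on unseen rows
```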

Regularization attempts to impose Occam's razor on the solution
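Ridge (L2) regularization adds a penalty $$ \lambda \lVert \beta \rVert^2 $$ to the squared error, which gives the closed form $$ \beta = (X^T X + \lambda I)^{-1} X^T y $$. This minimal sketch leaves the intercept unpenalized (a common convention, assumed here) and shows the coefficients shrinking as $$ \lambda $$ grows; the data and $$ \lambda $$ values are hypothetical.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge: solve (X^T X + lam * I) beta = X^T y.
    Assumes X has a leading column of ones; the intercept is not shrunk."""
    penalty = lam * np.eye(X.shape[1])
    penalty[0, 0] = 0.0   # leave the intercept unpenalized
    return np.linalg.solve(X.T @ X + penalty, X.T @ y)

rng = np.random.default_rng(0)
n, p = 30, 10
X = np.column_stack([np.ones(n), rng.normal(size=(n, p))])
true_beta = np.r_[1.0, 2.0, np.zeros(p - 1)]   # only the first feature matters
y = X @ true_beta + rng.normal(0, 1, size=n)

norms = []
for lam in [0.0, 1.0, 10.0, 100.0]:
    beta = ridge_fit(X, y, lam)
    norms.append(np.linalg.norm(beta[1:]))   # size of the non-intercept coefficients
```

Larger $$ \lambda $$ trades a little bias for lower variance, which is the "simpler solution" Occam's razor asks for.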

Mean Squared Error
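The mean squared error averages the squared gaps between actual and predicted values, $$ \text{MSE} = \frac{1}{n}\sum_{i=1}^{n} (y_i - \hat{y}_i)^2 $$. A minimal plain-Python sketch (the function name is illustrative; scikit-learn provides `sklearn.metrics.mean_squared_error`):

```python
def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between actual and predicted values."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

# (0.5^2 + 0^2 + 0.5^2) / 3 = 0.5 / 3
mse = mean_squared_error([3.0, 5.0, 2.5], [2.5, 5.0, 3.0])
```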

Confusion Matrix

Classification Metrics

Recall (TPR) = TP / (TP + FN)

Precision = TP / (TP + FP)

Specificity (TNR) = TN / (TN + FP)
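The three formulas above can be computed directly from the four confusion-matrix cells. A minimal plain-Python sketch for 0/1 labels; the function name and the ten-sample example are hypothetical.

```python
def classification_metrics(y_true, y_pred):
    """Count confusion-matrix cells for 0/1 labels and derive the metrics.
    Assumes both classes appear, so no denominator is zero."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return {
        "recall": tp / (tp + fn),        # true positive rate (TPR)
        "precision": tp / (tp + fp),
        "specificity": tn / (tn + fp),   # true negative rate (TNR)
    }

# TP = 3, FN = 1, FP = 1, TN = 5
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
m = classification_metrics(y_true, y_pred)
```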

Receiver Operating Characteristic Curve

Plot of TPR vs. FPR at different discrimination thresholds
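A minimal sketch of how the ROC points are produced: sweep the threshold from high to low, predict positive when a score is at or above it, and record $$ \text{FPR} = FP / (FP + TN) $$ (that is, 1 minus specificity) against $$ \text{TPR} = TP / (TP + FN) $$. The function name, labels, and scores are hypothetical.

```python
def roc_points(y_true, scores):
    """Return (FPR, TPR) pairs, one per threshold.
    A sample is predicted positive when its score >= threshold."""
    pos = sum(y_true)
    neg = len(y_true) - pos
    points = []
    # Start above the highest score (nothing predicted positive), then lower it
    for thr in [float("inf")] + sorted(set(scores), reverse=True):
        tp = sum(1 for t, s in zip(y_true, scores) if s >= thr and t == 1)
        fp = sum(1 for t, s in zip(y_true, scores) if s >= thr and t == 0)
        points.append((fp / neg, tp / pos))
    return points

y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
pts = roc_points(y_true, scores)   # from (0, 0) up to (1, 1)
```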

Example: Survival on the Titanic

- Easy to interpret

- Little data preparation

- Scales well with data

- White-box model

- Instability – changing variables, altering sequence

- Overfitting

- Also called bootstrap aggregation, reduces variance

- Uses decision trees with a model averaging approach

- Combines the bagging idea with random selection of features.

- Decision trees are constructed as usual, but at each split only a random subset of features is considered.
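A toy sketch of the two ideas above (bootstrap aggregation plus a random feature subset at each split), deliberately simplified to depth-1 trees ("stumps") that split on misclassification error. Real random forests grow deeper trees and usually split on Gini impurity or entropy (see `sklearn.ensemble.RandomForestClassifier` and its `n_estimators` and `max_features` parameters). All helper names and the data are hypothetical.

```python
import numpy as np

def fit_stump(X, y, features):
    """Find the single split (feature, threshold) with the lowest
    misclassification error on 0/1 labels, searching only `features`."""
    best = None
    for f in features:
        for thr in np.unique(X[:, f]):
            left = X[:, f] <= thr
            # Each side of the split predicts its majority label
            pl = int(y[left].mean() >= 0.5) if left.any() else 0
            pr = int(y[~left].mean() >= 0.5) if (~left).any() else 0
            err = np.mean(np.where(left, pl, pr) != y)
            if best is None or err < best[0]:
                best = (err, f, thr, pl, pr)
    return best[1:]

def predict_stump(stump, X):
    f, thr, pl, pr = stump
    return np.where(X[:, f] <= thr, pl, pr)

def fit_forest(X, y, n_trees=25, n_features=1, seed=0):
    """Bagging plus random feature selection, using stumps as the trees."""
    rng = np.random.default_rng(seed)
    n, p = X.shape
    forest = []
    for _ in range(n_trees):
        rows = rng.integers(0, n, size=n)                      # bootstrap sample, with replacement
        feats = rng.choice(p, size=n_features, replace=False)  # random feature subset for the split
        forest.append(fit_stump(X[rows], y[rows], feats))
    return forest

def predict_forest(forest, X):
    votes = np.mean([predict_stump(s, X) for s in forest], axis=0)  # average the 0/1 votes
    return (votes >= 0.5).astype(int)

# Hypothetical toy data: the label depends on both features
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(int)

forest = fit_forest(X, y)
accuracy = np.mean(predict_forest(forest, X) == y)
```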

If you torture the data enough, it will confess. -- Ronald Coase

- Data Snooping

- Selection Bias

- Survivor Bias

- Omitted Variable Bias

- Black-box vs. white-box models

- Adherence to regulations

Module 1: Linear Algebra, Supervised ML (Regression, Classification)

- Solve $$Ax = b$$ for $$n \times n$$

- Solve $$Ax = b$$ for $$n \times (p + 1)$$ (Linear Regression)

- Bootstrapping

- Regularization - L1, L2

- Gradient Descent
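Gradient descent reaches the same linear regression coefficients iteratively instead of solving the normal equations. This minimal sketch minimizes the mean squared error, whose gradient is $$ \frac{2}{n} X^T (X\beta - y) $$, and compares the result with the closed-form solution. The learning rate, iteration count, and toy data are assumptions chosen so that this example converges.

```python
import numpy as np

def gradient_descent_ols(X, y, lr=0.1, n_iter=5000):
    """Minimize the mean squared error (1/n)||X beta - y||^2 by batch gradient descent."""
    n, k = X.shape
    beta = np.zeros(k)
    for _ in range(n_iter):
        residual = X @ beta - y
        grad = (2.0 / n) * X.T @ residual   # gradient of the MSE with respect to beta
        beta -= lr * grad
    return beta

# Hypothetical data: y = 4 - 3x + noise
rng = np.random.default_rng(0)
x = rng.uniform(0, 1, size=100)
y = 4.0 - 3.0 * x + rng.normal(0, 0.1, size=100)
X = np.column_stack([np.ones_like(x), x])

beta_gd = gradient_descent_ols(X, y)
beta_exact = np.linalg.solve(X.T @ X, X.T @ y)   # closed-form answer for comparison
```

Too large a learning rate makes the iterates diverge; too small a rate needs many more iterations, which is why the step size is usually tuned.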

- Steep learning curve

- Different audience level

- Balance speed and coverage

- Be considerate

Module 1: Linear Algebra, Supervised ML (Classification)

- Logistic Regression

Module 2: Statistics, Hypothesis Testing (A/B Testing)

- Basic Statistics, Distributions

- Bootstrapping & Simulation

- A/B Testing
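A minimal simulation-based A/B test combining the ideas above: bootstrapping gives a 95% confidence interval for the difference in conversion rates, and shuffling the group labels (a permutation test) gives a p-value under the null hypothesis of no difference. The conversion rates, sample sizes, and resample counts are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(7)
# Hypothetical A/B data: 1 = converted, 0 = did not convert
a = rng.binomial(1, 0.10, size=1000)   # control
b = rng.binomial(1, 0.13, size=1000)   # variant
observed = b.mean() - a.mean()

# Bootstrap: resample each group with replacement, recompute the difference
boot = [rng.choice(b, size=b.size).mean() - rng.choice(a, size=a.size).mean()
        for _ in range(5000)]
ci_low, ci_high = np.percentile(boot, [2.5, 97.5])

# Permutation test: under H0 the group labels are exchangeable, so shuffle them
pooled = np.concatenate([a, b])
n_perm = 5000
n_extreme = 0
for _ in range(n_perm):
    shuffled = rng.permutation(pooled)
    diff = shuffled[a.size:].mean() - shuffled[:a.size].mean()
    if abs(diff) >= abs(observed):
        n_extreme += 1
p_value = (n_extreme + 1) / (n_perm + 1)   # two-sided, with a +1 correction
```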

Module 3: Linear Algebra (contd.), Unsupervised ML (Dimensionality Reduction)

- Solve $$Ax = \lambda x$$ for $$n \times n$$ (Eigenvectors & Eigenvalues)

- Principal Component Analysis

- Cluster Analysis (K-Means)
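Principal Component Analysis is one direct use of $$ Ax = \lambda x $$: the principal directions are the eigenvectors of the data's covariance matrix $$ C $$, and the eigenvalues are the variances along them. A minimal sketch using `numpy.linalg.eigh` (suited to symmetric matrices); the function name and the 2-D toy data stretched along $$ (1, 1) $$ are hypothetical.

```python
import numpy as np

def pca(X, n_components):
    """PCA by eigendecomposition of the sample covariance matrix."""
    Xc = X - X.mean(axis=0)                  # center each feature
    cov = np.cov(Xc, rowvar=False)           # p x p covariance matrix C
    eigvals, eigvecs = np.linalg.eigh(cov)   # solves C v = lambda v, ascending order
    order = np.argsort(eigvals)[::-1]        # sort by decreasing variance
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    components = eigvecs[:, :n_components]
    return Xc @ components, components, eigvals

# Hypothetical 2-D data lying close to the line x2 = x1
rng = np.random.default_rng(0)
t = rng.normal(size=300)
X = np.column_stack([t, t]) + rng.normal(0, 0.1, size=(300, 2))

scores, components, variances = pca(X, n_components=1)
explained = variances[0] / variances.sum()   # share of variance kept by one component
```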