- @<proposer1 @fengjian428>

- @<proposer2 @xushiyan>

- @<approver1 @vinothchandar>

- @<approver2 @codope>

JIRA: HUDI-3730

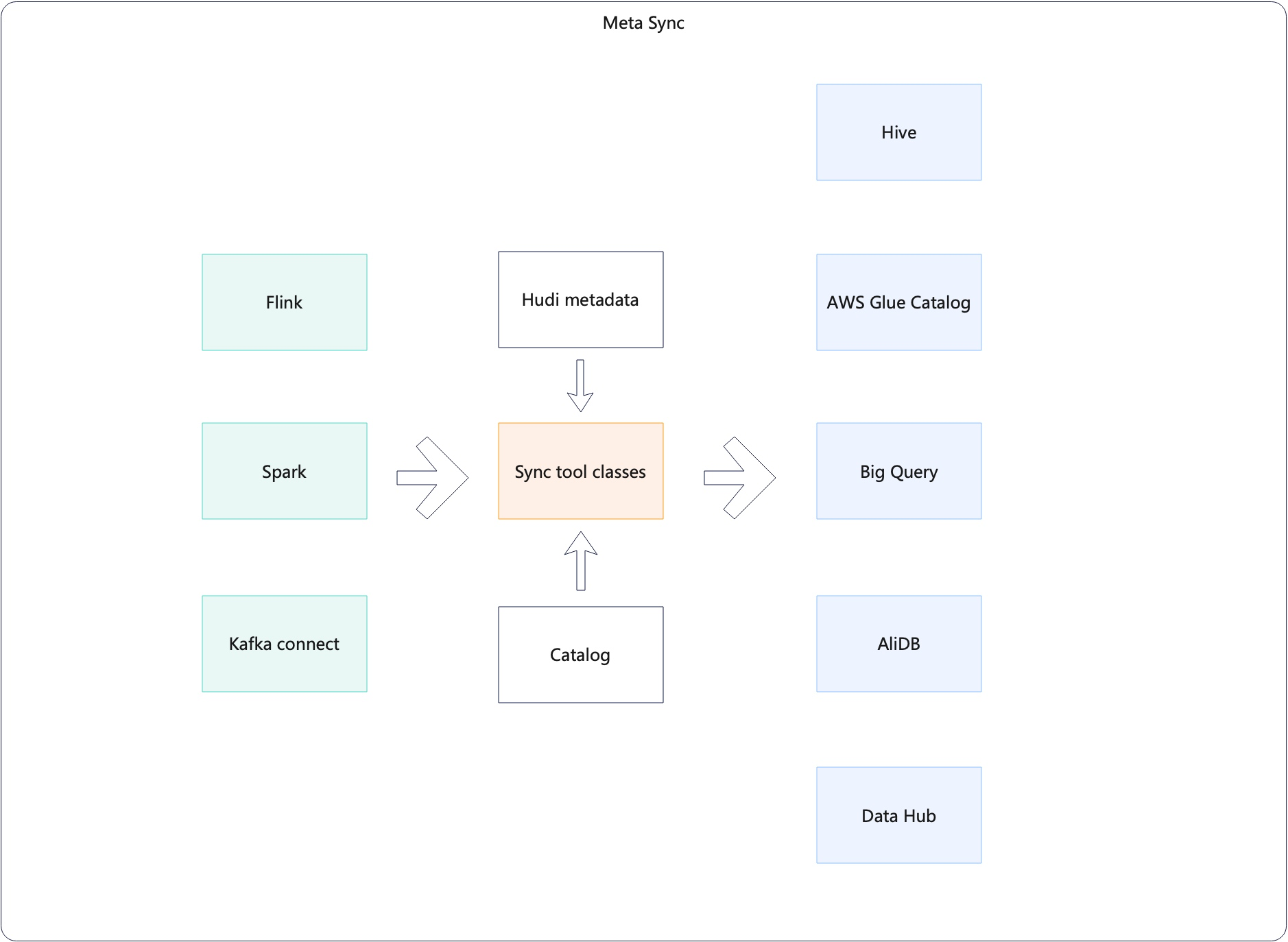

Hudi supports syncing to various metastores via different processing frameworks such as Spark, Flink, and Kafka Connect.

There is some room for improvement:

- Sync configs are generated inconsistently across frameworks.

- The sync class abstraction was designed for Hive sync, hence there is duplicated code as well as unused methods and parameters.

We need a standard way to run Hudi sync. We also need a unified abstraction of `XXXSyncTool`, `XXXSyncClient`, and `XXXSyncConfig` to handle the supported metastores, such as Hive metastore, BigQuery, DataHub, etc.

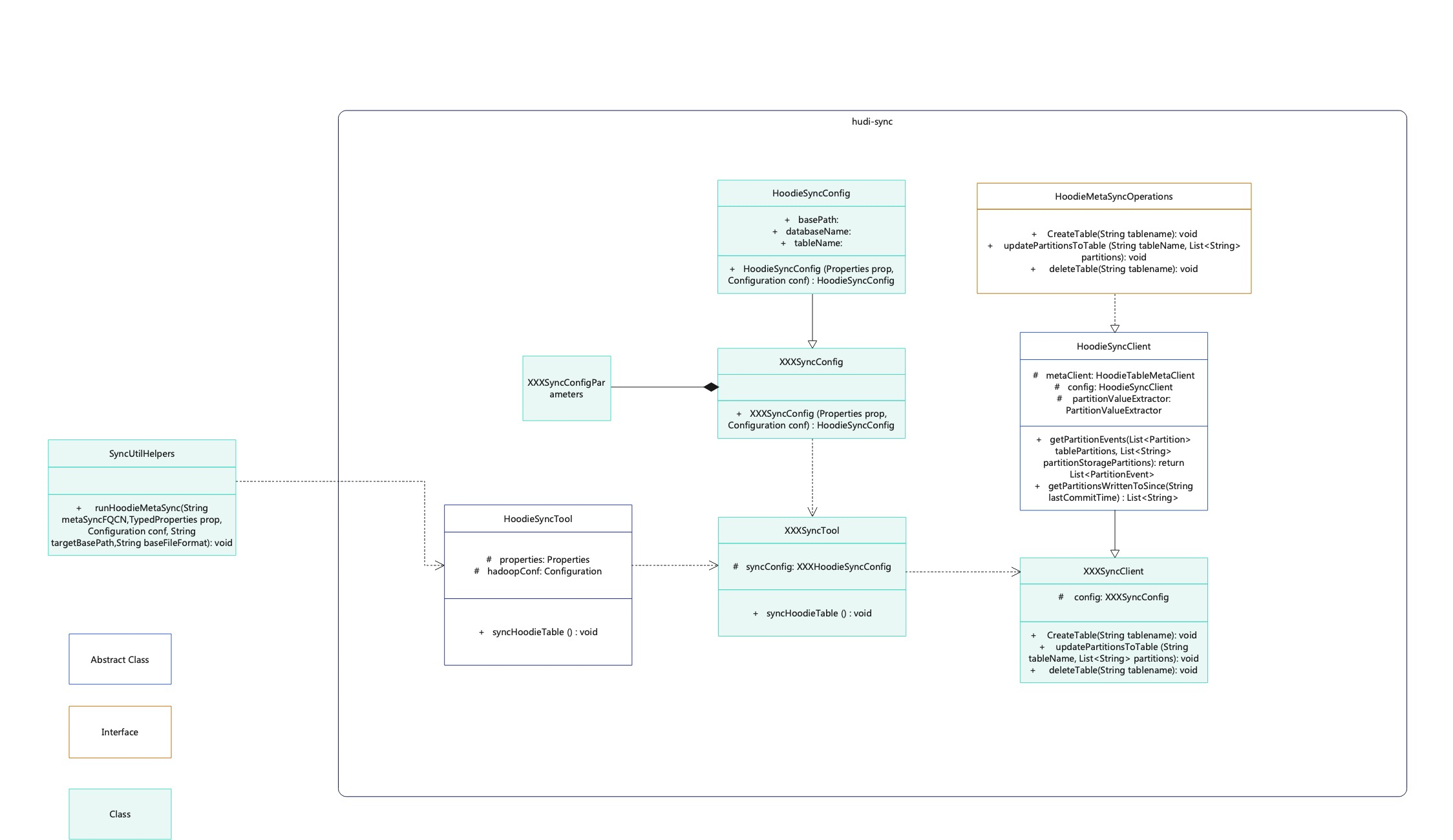

Below are the proposed key classes to handle the main sync logic. They are extensible for different metastores.

`HoodieSyncTool` (renamed from `AbstractSyncTool`):

```java
import java.util.Properties;

import org.apache.hadoop.conf.Configuration;

public abstract class HoodieSyncTool implements AutoCloseable {

  protected HoodieSyncClient syncClient;

  /**
   * Sync tool class is the entrypoint to run meta sync.
   *
   * @param props A bag of properties passed by users. It can contain all hoodie.* and any other config.
   * @param hadoopConf Hadoop specific configs.
   */
  public HoodieSyncTool(Properties props, Configuration hadoopConf) {
    // set up the sync config and syncClient from props and hadoopConf
  }

  public abstract void syncHoodieTable();

  public static void main(String[] args) {
    // instantiate HoodieSyncConfig and concrete sync tool, and run sync.
  }
}
```

```java
import java.util.Properties;

import com.beust.jcommander.Parameter;
import org.apache.hadoop.conf.Configuration;
import org.apache.hudi.common.config.HoodieConfig;

public class HoodieSyncConfig extends HoodieConfig {

  public static class HoodieSyncConfigParams {
    // POJO class to take command line parameters
    @Parameter()
    private String basePath; // common essential parameters

    public Properties toProps() {
      Properties props = new Properties();
      // put each parsed parameter into props under its config key
      return props;
    }
  }

  /**
   * XXXSyncConfig is meant to be created and used by XXXSyncTool exclusively and internally.
   *
   * @param props passed from XXXSyncTool.
   * @param hadoopConf passed from XXXSyncTool.
   */
  public HoodieSyncConfig(Properties props, Configuration hadoopConf) {
    // initialize the config from props
  }
}
```
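The flow described in `main()` (build a config from the user's properties, construct a concrete tool, run the sync, then close it) can be illustrated with a small, self-contained sketch. It deliberately avoids the real Hudi and Hadoop classes: `SketchSyncConfig`, `SketchSyncClient`, `SketchSyncTool`, `InMemorySyncTool`, the `example.table.name` key, and the hard-coded partitions are all hypothetical, and `key=value` argument parsing stands in for the JCommander-based params classes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Hypothetical stand-in for HoodieSyncConfig: wraps the user's property bag.
class SketchSyncConfig {
  private final Properties props;

  SketchSyncConfig(Properties props) {
    this.props = props;
  }

  String getString(String key) {
    return props.getProperty(key);
  }
}

// Hypothetical stand-in for HoodieSyncClient: metastore-agnostic operations.
abstract class SketchSyncClient implements AutoCloseable {
  abstract void updatePartitions(String table, List<String> partitions);

  @Override
  public void close() {
    // release metastore connections here
  }
}

// Hypothetical stand-in for HoodieSyncTool: creates its config and drives the sync.
abstract class SketchSyncTool implements AutoCloseable {
  protected final SketchSyncConfig config;
  protected SketchSyncClient syncClient; // assigned by the concrete tool

  SketchSyncTool(Properties props) {
    // The config is created by the tool itself, from the user's properties.
    this.config = new SketchSyncConfig(props);
  }

  abstract void syncHoodieTable();

  @Override
  public void close() {
    if (syncClient != null) {
      syncClient.close();
    }
  }
}

// Hypothetical concrete tool that records synced partitions in memory.
class InMemorySyncTool extends SketchSyncTool {
  static final String TABLE_KEY = "example.table.name"; // hypothetical key
  final List<String> synced = new ArrayList<>();

  InMemorySyncTool(Properties props) {
    super(props);
    this.syncClient = new SketchSyncClient() {
      @Override
      void updatePartitions(String table, List<String> partitions) {
        for (String partition : partitions) {
          synced.add(table + "/" + partition);
        }
      }
    };
  }

  @Override
  void syncHoodieTable() {
    // Hypothetical fixed partitions; a real tool would discover what changed.
    syncClient.updatePartitions(config.getString(TABLE_KEY), List.of("2022/01/01", "2022/01/02"));
  }

  // Build the tool from properties, run the sync, and always close the tool.
  static List<String> run(Properties props) {
    try (InMemorySyncTool tool = new InMemorySyncTool(props)) {
      tool.syncHoodieTable();
      return tool.synced;
    }
  }

  // Mirrors main(): "key=value" arguments stand in for JCommander params.
  public static void main(String[] args) {
    Properties props = new Properties();
    for (String arg : args) {
      int eq = arg.indexOf('=');
      if (eq > 0) {
        props.setProperty(arg.substring(0, eq), arg.substring(eq + 1));
      }
    }
    System.out.println(run(props));
  }
}
```

Running `InMemorySyncTool` with the argument `example.table.name=trips` prints `[trips/2022/01/01, trips/2022/01/02]`. Because the tool implements `AutoCloseable`, try-with-resources closes the client even if the sync throws.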

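```java
import java.util.Properties;

import com.beust.jcommander.Parameter;
import com.beust.jcommander.ParametersDelegate;
import org.apache.hadoop.conf.Configuration;

public class HiveSyncConfig extends HoodieSyncConfig {

  public static class HiveSyncConfigParams {
    @Parameter()
    private String syncMode;

    // delegate common parameters to other XXXParams class
    // this overcomes single-inheritance's inconvenience
    // see https://jcommander.org/#_parameter_delegates
    @ParametersDelegate()
    private HoodieSyncConfigParams hoodieSyncConfigParams = new HoodieSyncConfigParams();

    public Properties toProps() {
      // start from the delegated common parameters, then add Hive-specific ones
      Properties props = hoodieSyncConfigParams.toProps();
      // put syncMode and other Hive-specific parameters into props
      return props;
    }
  }

  public HiveSyncConfig(Properties props, Configuration hadoopConf) {
    super(props, hadoopConf);
  }
}
```

`HoodieSyncClient` (renamed from `AbstractSyncHoodieClient`):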

```java
public abstract class HoodieSyncClient implements AutoCloseable {
  // metastore-agnostic APIs
}
```

- make all sync-related configs use the prefix `hoodie.meta.sync.*`
  - move `hoodie.meta_sync.*` and any other variants to aliases

- rename module `hudi-sync` to `hudi-meta-sync` (no bundle name change)

- rename `hoodieSync*` variables or methods to `hoodieMetaSync*`
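Keeping `hoodie.meta_sync.*` and other variants as aliases means a lookup has to prefer the canonical `hoodie.meta.sync.*` key and fall back to the legacy spellings. The following is a minimal sketch of that resolution order; `AliasedKey` is a hypothetical helper rather than Hudi's config API, and `hoodie.meta.sync.example` / `hoodie.meta_sync.example` are made-up keys.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Properties;

// Hypothetical helper (not Hudi's config API): a canonical key plus legacy aliases.
final class AliasedKey {
  private final String key;
  private final List<String> aliases;

  AliasedKey(String key, String... aliases) {
    this.key = key;
    this.aliases = Arrays.asList(aliases);
  }

  // The canonical key wins; otherwise the first alias that is set; otherwise the default.
  String resolve(Properties props, String defaultValue) {
    String value = props.getProperty(key);
    if (value != null) {
      return value;
    }
    for (String alias : aliases) {
      value = props.getProperty(alias);
      if (value != null) {
        return value;
      }
    }
    return defaultValue;
  }
}
```

For example, with `new AliasedKey("hoodie.meta.sync.example", "hoodie.meta_sync.example")` and only `hoodie.meta_sync.example=legacy` set, `resolve` returns `legacy`; once `hoodie.meta.sync.example` is also set, its value wins.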

- `database` and `table` should not be required by the sync tool; they should be inferred from table properties

- users should not need to set `PartitionValueExtractor`; partition value extractors should be inferred automatically

- infer repeated sync configs from original configs
  - `META_SYNC_BASE_FILE_FORMAT`: infer from `org.apache.hudi.common.table.HoodieTableConfig.BASE_FILE_FORMAT`
  - `META_SYNC_ASSUME_DATE_PARTITION`: infer from `org.apache.hudi.common.config.HoodieMetadataConfig.ASSUME_DATE_PARTITIONING`
  - `META_SYNC_DECODE_PARTITION`: infer from `org.apache.hudi.common.table.HoodieTableConfig.URL_ENCODE_PARTITIONING`
  - `META_SYNC_USE_FILE_LISTING_FROM_METADATA`: infer from `org.apache.hudi.common.config.HoodieMetadataConfig.ENABLE`
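The inference rule for repeated sync configs can be read as: use an explicitly set sync config if present, otherwise copy the value of the original config it duplicates. A minimal sketch follows; `SyncConfigInference` is hypothetical, and the property keys are placeholder strings named after the constants in the list, not the real property key strings.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Properties;

// Hypothetical sketch: fill unset sync configs from the configs they repeat.
final class SyncConfigInference {
  // Sync config -> original config it repeats.
  // Placeholder keys: constant names stand in for the real property key strings.
  static final Map<String, String> INFERRED_FROM = new LinkedHashMap<>();

  static {
    INFERRED_FROM.put("META_SYNC_BASE_FILE_FORMAT", "HoodieTableConfig.BASE_FILE_FORMAT");
    INFERRED_FROM.put("META_SYNC_ASSUME_DATE_PARTITION", "HoodieMetadataConfig.ASSUME_DATE_PARTITIONING");
    INFERRED_FROM.put("META_SYNC_DECODE_PARTITION", "HoodieTableConfig.URL_ENCODE_PARTITIONING");
    INFERRED_FROM.put("META_SYNC_USE_FILE_LISTING_FROM_METADATA", "HoodieMetadataConfig.ENABLE");
  }

  // Returns a copy of props in which each unset sync config takes the original config's value.
  // Explicitly set sync configs are left untouched.
  static Properties infer(Properties props) {
    Properties result = new Properties();
    for (String name : props.stringPropertyNames()) {
      result.setProperty(name, props.getProperty(name));
    }
    for (Map.Entry<String, String> e : INFERRED_FROM.entrySet()) {
      String original = props.getProperty(e.getValue());
      if (props.getProperty(e.getKey()) == null && original != null) {
        result.setProperty(e.getKey(), original);
      }
    }
    return result;
  }
}
```

Leaving explicitly set sync configs untouched keeps this step backward compatible until the repeated configs are removed.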

The breaking changes should be made all at once, together with other user-facing config changes, in a suitably chosen release.

- remove all sync-related option constants from `DataSourceOptions`

- remove `USE_JDBC` and fully adopt `SYNC_MODE`

- remove `HIVE_SYNC_ENABLED` and related arguments from sync tools and delta streamers; use `SYNC_ENABLED` instead

- remove repeated sync configs and use the original configs
  - `META_SYNC_BASE_FILE_FORMAT` -> `org.apache.hudi.common.table.HoodieTableConfig.BASE_FILE_FORMAT`
  - `META_SYNC_ASSUME_DATE_PARTITION` -> `org.apache.hudi.common.config.HoodieMetadataConfig.ASSUME_DATE_PARTITIONING`
  - `META_SYNC_DECODE_PARTITION` -> `org.apache.hudi.common.table.HoodieTableConfig.URL_ENCODE_PARTITIONING`
  - `META_SYNC_USE_FILE_LISTING_FROM_METADATA` -> `org.apache.hudi.common.config.HoodieMetadataConfig.ENABLE`

- Users who set `USE_JDBC` will need to set `SYNC_MODE=jdbc` instead.

- Users who set `--enable-hive-sync` or `HIVE_SYNC_ENABLED` will need to drop that argument or config and use `--enable-sync` or `SYNC_ENABLED` instead.

- Users who import meta sync constants from `DataSourceOptions` will need to import the relevant configs from `HoodieSyncConfig` and its subclasses.

- Users who set `AwsGlueCatalogSyncTool` as the sync tool class need to update the class name to `AWSGlueCatalogSyncTool`.
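These rollout steps amount to a mechanical rewrite of a user's sync settings. The sketch below shows one way such a rewrite could work; `LegacySyncConfigMigration` and the `SYNC_TOOL_CLASSES` key are hypothetical, and all keys are placeholders named after the constants above rather than real property strings. `USE_JDBC` values other than `true` are left in place for manual review, since only the `jdbc` mapping is specified.

```java
import java.util.Arrays;
import java.util.Properties;
import java.util.stream.Collectors;

// Hypothetical migration helper; keys are placeholders named after the constants above.
final class LegacySyncConfigMigration {
  static final String USE_JDBC = "USE_JDBC";
  static final String SYNC_MODE = "SYNC_MODE";
  static final String HIVE_SYNC_ENABLED = "HIVE_SYNC_ENABLED";
  static final String SYNC_ENABLED = "SYNC_ENABLED";
  static final String SYNC_TOOL_CLASSES = "SYNC_TOOL_CLASSES"; // hypothetical, comma-separated
  static final String OLD_GLUE_TOOL = "AwsGlueCatalogSyncTool";
  static final String NEW_GLUE_TOOL = "AWSGlueCatalogSyncTool";

  static Properties migrate(Properties in) {
    Properties out = new Properties();
    for (String name : in.stringPropertyNames()) {
      out.setProperty(name, in.getProperty(name));
    }

    // USE_JDBC=true becomes SYNC_MODE=jdbc; an explicit SYNC_MODE is kept.
    if ("true".equalsIgnoreCase(out.getProperty(USE_JDBC))) {
      out.remove(USE_JDBC);
      if (out.getProperty(SYNC_MODE) == null) {
        out.setProperty(SYNC_MODE, "jdbc");
      }
    }

    // HIVE_SYNC_ENABLED is replaced by SYNC_ENABLED; an explicit SYNC_ENABLED is kept.
    String hiveEnabled = (String) out.remove(HIVE_SYNC_ENABLED);
    if (hiveEnabled != null && out.getProperty(SYNC_ENABLED) == null) {
      out.setProperty(SYNC_ENABLED, hiveEnabled);
    }

    // Rename the Glue tool class, keeping whatever package prefix the user wrote.
    String tools = out.getProperty(SYNC_TOOL_CLASSES);
    if (tools != null) {
      String renamed = Arrays.stream(tools.split(","))
          .map(String::trim)
          .map(c -> c.equals(OLD_GLUE_TOOL) || c.endsWith("." + OLD_GLUE_TOOL)
              ? c.substring(0, c.length() - OLD_GLUE_TOOL.length()) + NEW_GLUE_TOOL
              : c)
          .collect(Collectors.joining(","));
      out.setProperty(SYNC_TOOL_CLASSES, renamed);
    }
    return out;
  }
}
```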

- CI covers most operations for Hive sync with HMS

- end-to-end testing with setups for Glue Catalog, BigQuery, and DataHub instances

- manual testing with partitions added and removed