Open Intelligence processes motion-triggered images from any camera and sorts the detected objects using YOLO. It provides an easy-to-use web front end with rich features, so you always have up-to-date intel on the current status of your property. Open Intelligence uses license plate detection (ALPR) to detect vehicle plates and face detection to detect people's faces. Detected faces can be sorted into per-person folders and then used for training, so that Open Intelligence can try to identify the people it sees. All of this can be done from the front-end interface.

Open Intelligence uses a super resolution neural network to produce super resolution images for improved license plate detection.

The project goal is to be a useful information-gathering tool that provides data for easy property monitoring without the need for expensive camera systems, because any existing cameras are suitable.

I developed this for my own use because I was tired of going through recorded video with existing monitoring tools. I wanted to know quickly what has been happening.

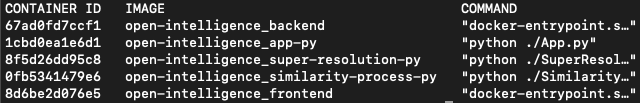

Open Intelligence is meant to be run with Docker.

Click the image below to watch the promo video.

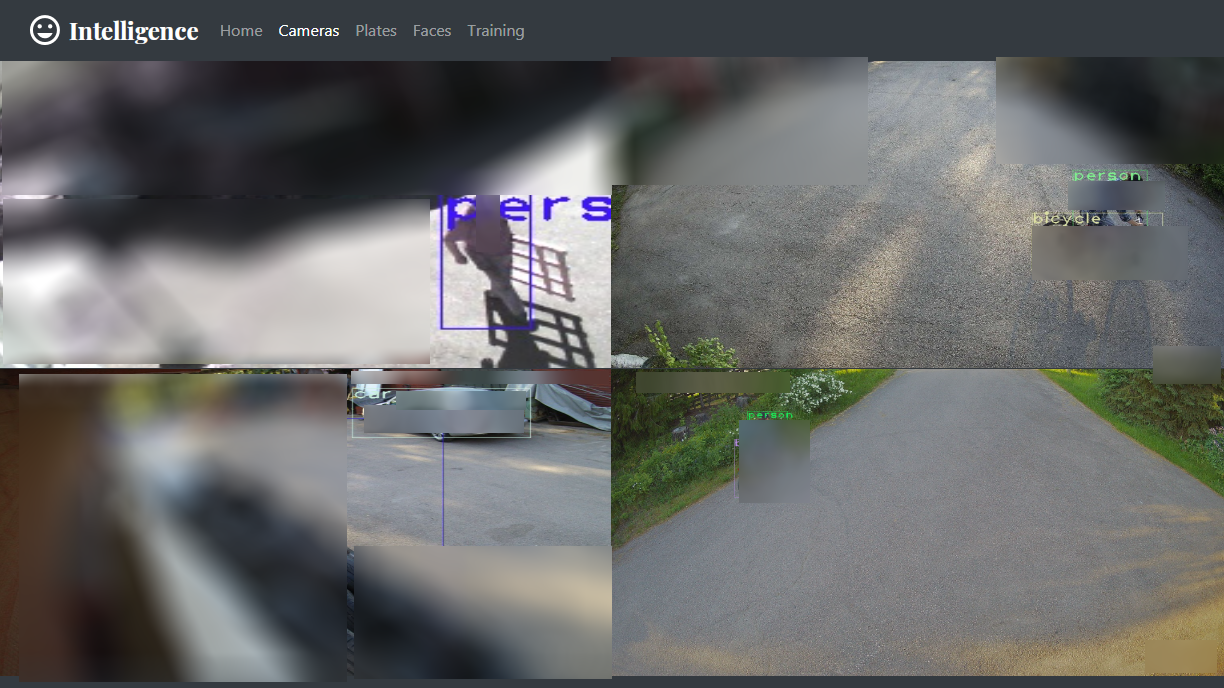

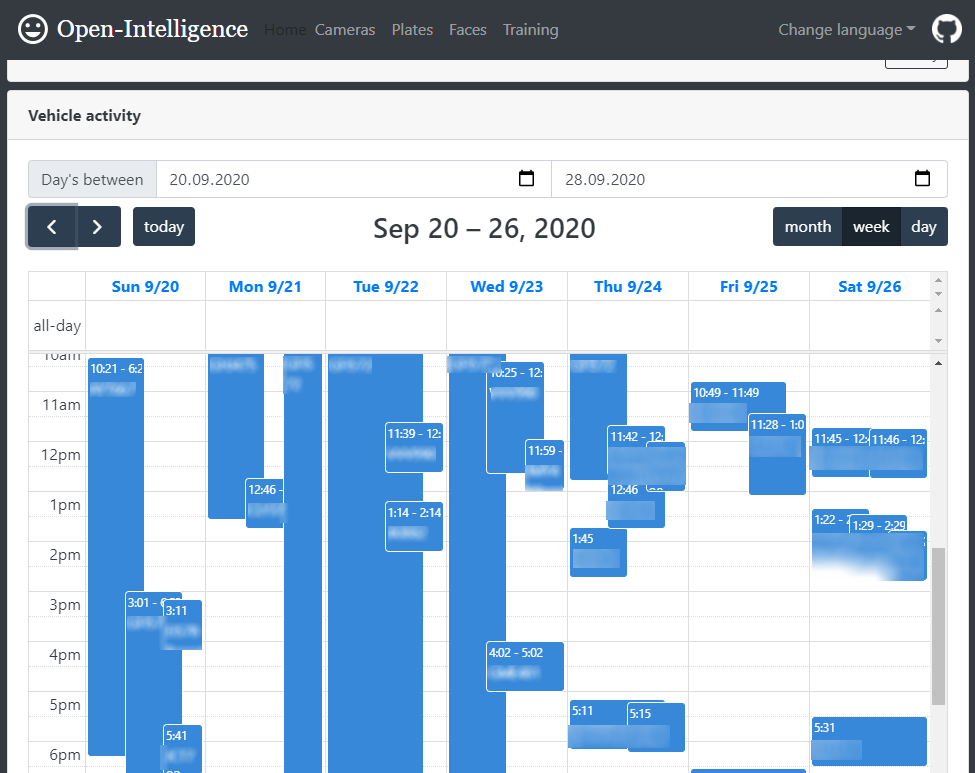

| Cameras view | Plate calendar |
|---|---|

- The cameras view can play back sounds picked up by the camera microphones.

- The calendar view can open the full source detection image when you click a car plate event.

| Face wall | Face wall source dialog |
|---|---|

- Face wall is one of the creepiest features.

- You can go through the pile of faces, and clicking a face shows its source image.

- Environment

- Installing with Docker

- Update to the latest version

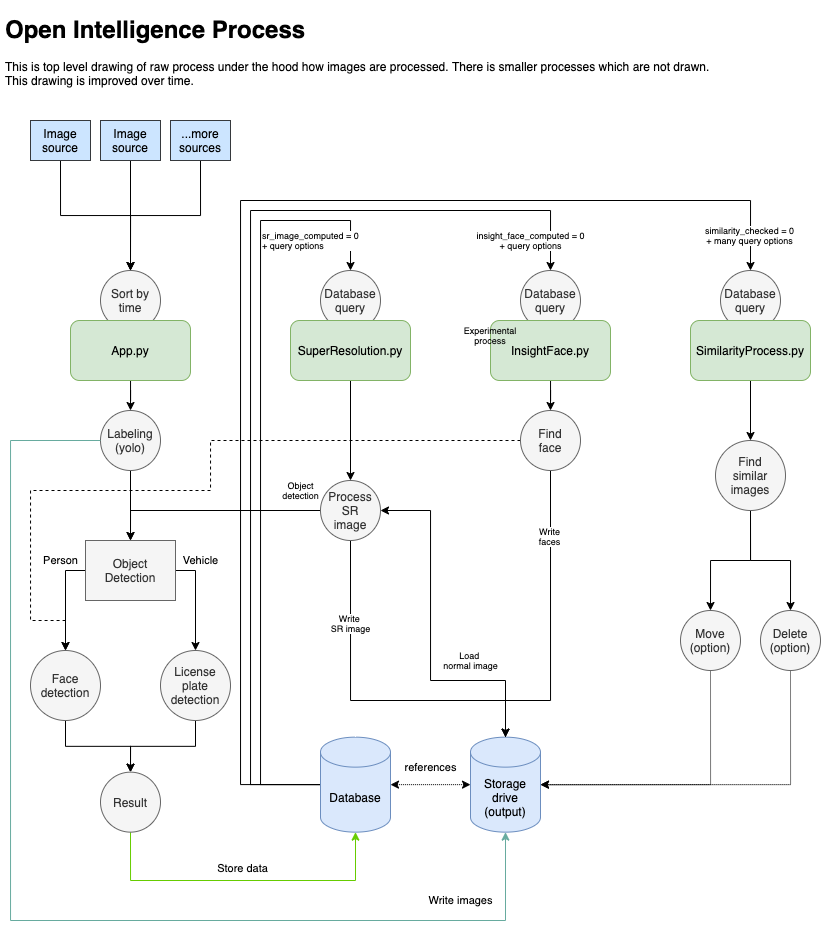

- Process drawing

- Project folder structure

- Python Apps

- Installing manually

- Multi node support

- Cuda GPU Support

- Postgresql notes

- Openalpr notes

- Front end development

- Troubleshooting

- Authors

- License

Everything can be installed on one server or spread across separate servers, for example the database on server one, the Python application on server two and the API hosting on server three. All processes must have access to the output storage that contains the processed image result files.

This section is a step-by-step tutorial for getting started with the Docker based installation. Running Open Intelligence with Docker does not limit you to Docker only: you can, for example, run the api, front-end, app.py and similarity processes with Docker and use a separate GPU-enabled machine for the super resolution and insightface processes.

Download PostgreSQL and install it on any machine on your network. If you install it on a different machine than the one running the Docker images, look up how to make PostgreSQL reachable from other devices on your local network.
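When PostgreSQL runs on a different machine, it can help to confirm that the database port is reachable before starting the containers. The following is a minimal sketch using only the Python standard library; the function name `can_connect` is made up for this example and is not part of Open Intelligence. It only checks that a TCP connection can be opened and says nothing about credentials or database permissions.

```python
import socket

DEFAULT_POSTGRES_PORT = 5432  # PostgreSQL's default port


def can_connect(host: str, port: int = DEFAULT_POSTGRES_PORT, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        # create_connection raises OSError on refusal, timeout or DNS failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Call it with the address of your database machine, for example `can_connect("192.168.1.10")` (a placeholder address). A `False` result usually means that PostgreSQL is not listening on the network interface or that a firewall blocks the port.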

If you don't already have Docker, go to https://docs.docker.com/get-docker/ and follow their instructions.

If you don't already have Docker Compose, go to https://docs.docker.com/compose/install// and follow their instructions.

Use any version control tool to clone this repository. I recommend a git based tool so that you can check out the latest code; in other words, don't download it as an "offline" zip file.

Using git from shell:

git clone https://github.com/norkator/open-intelligence.git

Get models here https://drive.google.com/file/d/1dSJuxpwSFfF7SIJg8NMKG5yCIG9CHQKw/view?usp=sharing

Unzip the models into the Open Intelligence `/python/models` folder.

- Rename `docker-compose.yml_tpl` into `docker-compose.yml` and fill in your environment variables, which are mostly database configuration.

- Ensure your timezone is right: https://github.com/norkator/open-intelligence/wiki/Linux-notes#ensure-your-timezone-is-right

On a Linux machine, the steps for configuring storage are:

Open docker-compose.yml and tweak all volume configs

volumes:

- ./python/:/app

- /Users/<user-name>/Desktop/camera_root:/input

- /Users/<user-name>/Desktop/output:/output_test

where the first part, `/Users/<user-name>/Desktop/camera_root`, is the path to a folder on your host machine. The same goes for the output folder path: it is just a folder on your machine where you want all Open Intelligence output files to be stored.

The latter part, like `:/input` and `:/output_test`, is how the Python process sees the paths on the container side. It does not affect your actual mounted folders in any way, so it is better not to change these.

More on this article: https://github.com/norkator/open-intelligence/wiki/Configuring-storage
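To make the host side and container side of a volume entry more concrete, here is a small illustrative sketch. It is not code from Open Intelligence; the helper names `split_volume` and `to_container_path` are invented for this example, and it assumes POSIX style host paths. It shows how a file on the host maps to the path the Python process sees inside the container.

```python
from pathlib import PurePosixPath
from typing import List, Optional, Tuple

# Volume entries copied from the example above; "<user-name>" is a placeholder.
VOLUMES = [
    "./python/:/app",
    "/Users/<user-name>/Desktop/camera_root:/input",
    "/Users/<user-name>/Desktop/output:/output_test",
]


def split_volume(entry: str) -> Tuple[str, str]:
    """Split 'host_path:container_path' into its two parts."""
    host, container = entry.rsplit(":", 1)
    return host, container


def to_container_path(host_file: str, volumes: List[str]) -> Optional[str]:
    """Translate a host file path to the path seen inside the container."""
    for entry in volumes:
        host, container = split_volume(entry)
        try:
            relative = PurePosixPath(host_file).relative_to(PurePosixPath(host))
        except ValueError:
            continue  # the file is not below this host folder
        return str(PurePosixPath(container) / relative)
    return None  # the file is not inside any mounted folder
```

With these volumes, an image saved by a camera under `camera_root/cam1/` on the host shows up under `/input/cam1/` in the container, which is why only the host part of each entry needs to be changed.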

- Run `docker-compose up` in the root of this project and let the magic happen.

- Open http://localhost:3000/ and you should see the Open Intelligence front page. Hopefully.

- Go to the Configuration page and fill in your details. The settings are explained on the configuration page.

- Run `docker-compose up` again so that the changes take effect on the Python side.

Run at root of this project:

git fetch

git pull

docker-compose build

docker-compose up

Overall process among different python processes for Open Intelligence.

Default folders

.

├── api # Backend service for frontend webpage

├── docs # Documents folder containing images and drawings

├── front-end # Web interface for this project

├── python # Python backend applications doing heavy lifting

│   ├── classifiers # Classifiers for different detectors like faces

│   ├── libraries # Modified third party libraries

│   ├── models # Yolo and other detector model files

│   ├── module # Python side application logic, source files

│   ├── objects # Base objects for internal logic

│   └── scripts # Scripts to ease things

This part explains in more detail what each of the base Python app scripts is meant for. Tasks are split between the scripts. App.py is always the main process, the first thing that sees the images.

- File: `App.py`

- Status: Mandatory

- This is the main app, which is responsible for processing input images from the configured sources.

- Cluster support: Yes.

- File: `StreamGrab.py`

- Status: Optional

- If your cameras do not output images themselves, you can configure multiple camera streams with this stream grabber tool to create a constant flow of input images.

- Cluster support: No.

- File: `SuperResolution.py`

- Status: Optional

- This tool produces super resolution images and runs new detections on these processed SR images. It is in no way mandatory for the process.

- Cluster support: No.

- Mainly meant for improved license plate detection.

- Testing: use the command `python SuperResolutionTest.py --testfile="some_file.jpg"`, which loads the image with the given name from the `/images` folder.

- File: `InsightFace.py`

- Status: Optional

- Processes the faces page ('face wall') images using the InsightFace retina model.

- Cluster support: No.

- File: `SimilarityProcess.py`

- Status: Optional

- Compares the current day's images for close duplicates and deletes images determined to be duplicates that have no higher value (no detection result). Processes images in one-hour chunks.

- Cluster support: No.

- This process tries to save some storage space.
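The duplicate clean-up idea described for `SimilarityProcess.py` can be sketched as follows. This is only an illustration under stated assumptions, not the project's actual implementation: it assumes each image already has a 64-bit perceptual hash (how that hash is computed is left out), and the names `group_by_hour`, `find_duplicates` and the `max_distance` threshold are hypothetical.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, List, Tuple

# Hypothetical record: (file name, capture time, perceptual hash, has detection result)
Image = Tuple[str, datetime, int, bool]


def hamming_distance(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def group_by_hour(images: List[Image]) -> Dict[datetime, List[Image]]:
    """Split images into one-hour chunks based on their capture time."""
    chunks = defaultdict(list)
    for image in images:
        hour = image[1].replace(minute=0, second=0, microsecond=0)
        chunks[hour].append(image)
    return dict(chunks)


def find_duplicates(images: List[Image], max_distance: int = 4) -> List[str]:
    """Return names of images that closely match an earlier image in the
    same hour and carry no detection result, so they could be deleted."""
    duplicates = []
    for chunk in group_by_hour(images).values():
        kept: List[Image] = []
        for image in sorted(chunk, key=lambda item: item[1]):
            name, _, image_hash, has_detection = image
            is_close = any(
                hamming_distance(image_hash, other[2]) <= max_distance
                for other in kept
            )
            if is_close and not has_detection:
                duplicates.append(name)
            else:
                kept.append(image)
    return duplicates
```

Comparing only within one-hour chunks keeps the number of pairwise comparisons small, and images with a detection result are always kept because they carry information worth storing.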

Manual installation is no longer recommended. The Docker method is much better.

Terminology for words like API side and Python side:

- "API side" is the `/api` folder containing the node api process `intelligence.js`.

- "Python side" is the `/python` folder containing the different Python processes.

See Project folder structure for more details about folders.

- Go to the `/api` folder and run `npm install`

- Install PostgreSQL server: https://www.postgresql.org/

- To access postgres you need a tool such as pgAdmin (which comes with postgres), the command line, or an IDE with database tools.

- Rename `.env_tpl` to `.env` and fill in the details.

- Run `intelligence-tasks.js`, or with the PM2 process manager `pm2 start intelligence-tasks.js`.

- Run `node intelligence.js`, or with the PM2 process manager `pm2 start intelligence.js -i 2`.

- Running these NodeJS scripts will create the database and table structures. If you see an error, run them again.

- Go to the `/front-end` folder and rename `.env_tpl` to `.env`.

- In `/front-end` run `npm start` so you have both the api and the front end running.

- Access `localhost:3000` if the react app doesn't open a browser window automatically.

- Outdated front-end user manual for the old UI version: https://docs.google.com/document/d/1BwjXO0tUM9aemt1zNzofSY-DKeno321zeqpcmPI-wEw/edit?usp=sharing

- Go to `/front-end`

- Check that `REACT_APP_API_BASE_URL` in your `.env` corresponds to the IP address of the machine where the node js api is running.

- Build the react front end by running `npm run build`

- Copy/replace the `/build` folder contents to wherever you want to serve the built webpage from.

(Windows)

- Download Python 3.6 ( https://www.python.org/ftp/python/3.6.0/python-3.6.0-amd64.exe )

- Only tested to work with Python 3.6. Newer versions caused problems with packages when tested.

- Jump to the `/python` folder

- Activate the Python virtual env: `.\venv\Scripts\activate.bat`

- Install dependencies: `pip install -r requirements_windows.txt`

- Get the models using these instructions: https://github.com/norkator/open-intelligence/wiki/Models

- Download PostgreSQL server ( https://www.postgresql.org/ ). I am using version 11.6, but it's also tested with version 12. (Skip this if you already installed it in the API section above.)

- Ensure you have `Microsoft Visual C++ 2015 Redistributable (x64)` installed.

- This is needed by openALPR

- Separate camera and folder names with commas, just like in the base config template

- Run the Python apps you want, see the `Python Apps` section.

(Linux)

- Install the required Python version.

sudo add-apt-repository ppa:deadsnakes/ppa

sudo apt-get install python3.6

virtualenv --python=/usr/bin/python3.6 ./

source ./bin/activate

- Install dependencies: `pip install -r requirements_linux.txt`

- Get the models using these instructions: https://github.com/norkator/open-intelligence/wiki/Models

- Download PostgreSQL server ( https://www.postgresql.org/ ). I am using version 11.6, but it's also tested with version 12. (Skip this if you already installed it in the API section above.)

- Separate camera and folder names with commas, just like in the base config template

- Run the Python apps you want, see the `Python Apps` section.
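As an illustration of the comma separated camera and folder values mentioned in the steps above, the sketch below reads such values with Python's standard `configparser`. The key names `camera_names` and `camera_folders` and the example values are assumptions made for this example; check the base config template for the real keys.

```python
import configparser
from typing import Dict, List

# Hypothetical config text; the key names and values are illustrative only.
EXAMPLE_CONFIG = """
[camera]
camera_names = Front door, Garage
camera_folders = /input/front_door/, /input/garage/
"""


def split_list(value: str) -> List[str]:
    """Split a comma separated value and trim whitespace around each item."""
    return [item.strip() for item in value.split(",") if item.strip()]


def load_cameras(config_text: str) -> Dict[str, str]:
    """Pair each camera name with its folder, in the order they are listed."""
    parser = configparser.ConfigParser()
    parser.read_string(config_text)
    names = split_list(parser["camera"]["camera_names"])
    folders = split_list(parser["camera"]["camera_folders"])
    if len(names) != len(folders):
        raise ValueError("camera names and folders must have the same number of entries")
    return dict(zip(names, folders))
```

The length check matters because names and folders are matched by position: a missing comma in one list would otherwise silently pair cameras with the wrong folders.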

Multi node support requires a little more work to configure, but it's doable. Follow the instructions below.

- Each node needs access to the source files hosted by one main node via a network share.

- Create the configuration file `config_slave.ini` from the template `config_slave.ini.tpl`

- Fill in the postgres connection details, with the server running postgres as the target location.

- Fill in the [camera] section folders; these should be behind the same mount letter and path on each node.

- Point your command prompt to the network share folder containing `App.py` and other files.

- On each slave node run `App.py` with the argument: `.\App.py --bool_slave_node True`
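For readers wondering how a flag such as `--bool_slave_node True` can be handled, here is a minimal `argparse` sketch. It is an assumption about how such a flag could be parsed, not a copy of the code in `App.py`; the helper names are invented, and the main node file name `config.ini` is also an assumption.

```python
import argparse
from typing import List, Optional


def str_to_bool(value: str) -> bool:
    """Convert command line text such as 'True' or 'false' to a bool."""
    text = value.strip().lower()
    if text in ("true", "1", "yes"):
        return True
    if text in ("false", "0", "no"):
        return False
    raise argparse.ArgumentTypeError("expected True or False, got %r" % value)


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse the slave node flag; argv=None reads from the real command line."""
    parser = argparse.ArgumentParser(description="Hypothetical App.py argument parsing")
    parser.add_argument("--bool_slave_node", type=str_to_bool, default=False)
    return parser.parse_args(argv)


def config_file_name(is_slave_node: bool) -> str:
    """Pick the config file; 'config.ini' for the main node is an assumption."""
    return "config_slave.ini" if is_slave_node else "config.ini"
```

An explicit converter is used because `argparse` with `type=bool` treats any non-empty text, including "False", as true.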

CUDA only works with some processes, such as super resolution and insightface. Requirements are:

- NVIDIA only. GPU hardware compute capability: the minimum required CUDA capability is 3.5, so old GPUs won't work.

- CUDA toolkit version: the Windows link for the right version, 10.0, is https://developer.nvidia.com/cuda-10.0-download-archive?target_os=Windows&target_arch=x86_64

- Download cuDNN "Download cuDNN v7.6.3 (August 23, 2019), for CUDA 10.0" from https://developer.nvidia.com/rdp/cudnn-archive

- Place the cuDNN files inside the proper CUDA toolkit installation folders. The cuDNN archive already has a folder structure to follow.
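The compute capability requirement can be checked with a few lines of Python once you know your GPU's capability from NVIDIA's published list. This is a small sketch with made-up helper names; it does not query the GPU itself.

```python
from typing import Tuple

MIN_COMPUTE_CAPABILITY = (3, 5)  # minimum stated in the requirements above


def parse_capability(text: str) -> Tuple[int, int]:
    """Turn a capability string such as '6.1' into (major, minor)."""
    major, minor = text.strip().split(".")
    return int(major), int(minor)


def is_supported(capability: str) -> bool:
    """Return True if the capability meets the minimum requirement."""
    return parse_capability(capability) >= MIN_COMPUTE_CAPABILITY
```

Comparing `(major, minor)` tuples avoids the pitfalls of comparing version strings, where "10.0" would sort before "3.5".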

All datetime fields are inserted without a timezone, so that:

File : 2020-01-03 08:51:43

Database : 2020-01-03 06:51:43.000000

Database timestamps are shifted on use based on the local time offset.
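The two-hour difference in the example can be reproduced with the standard `datetime` module. The sketch below assumes that the example values come from a machine running at UTC+2 and that the database value is the same moment in UTC; both the offset and the function names are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the example values come from a machine running at UTC+2.
LOCAL_OFFSET = timezone(timedelta(hours=2))


def file_time_to_database(file_time: str) -> str:
    """Convert a local file timestamp to the naive value stored in the database."""
    local = datetime.strptime(file_time, "%Y-%m-%d %H:%M:%S").replace(tzinfo=LOCAL_OFFSET)
    utc = local.astimezone(timezone.utc).replace(tzinfo=None)
    return utc.strftime("%Y-%m-%d %H:%M:%S.%f")


def database_time_to_local(database_time: str) -> str:
    """Shift a stored database timestamp back to local time for display."""
    utc = datetime.strptime(database_time, "%Y-%m-%d %H:%M:%S.%f").replace(tzinfo=timezone.utc)
    return utc.astimezone(LOCAL_OFFSET).strftime("%Y-%m-%d %H:%M:%S")
```

With the assumed offset, `file_time_to_database("2020-01-03 08:51:43")` gives `2020-01-03 06:51:43.000000`, matching the File and Database lines above.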

These notes are for Windows. The current Docker setup makes this installation automatic.

I got it running with the following steps. Downloaded the 2.3.0 release from here: https://github.com/openalpr/openalpr/releases

- Unzipped `openalpr-2.3.0-win-64bit.zip` to the `/libraries` folder

- Downloaded and unzipped `Source code(zip)`

- Navigated to `src/bindings/python`

- Ran `python setup.py install`

- Moved the contents of the newly created `build/lib` to the project `libraries/openalpr_64/openalpr` folder.

- In the license plate detection file, imported the contents with `from libraries.openalpr_64.openalpr import Alpr`

Now it works without any Python site-package installation.

There is a separate readme file for this side so see more at ./front-end/README.md

Refer to troubleshooting wiki.

- Norkator - Initial work - norkator

Note that the /libraries folder contains Python applications made by other people. I needed to make small changes to them, which is why they are included here.

See LICENSE file.