https://arxiv.org/abs/2309.13860

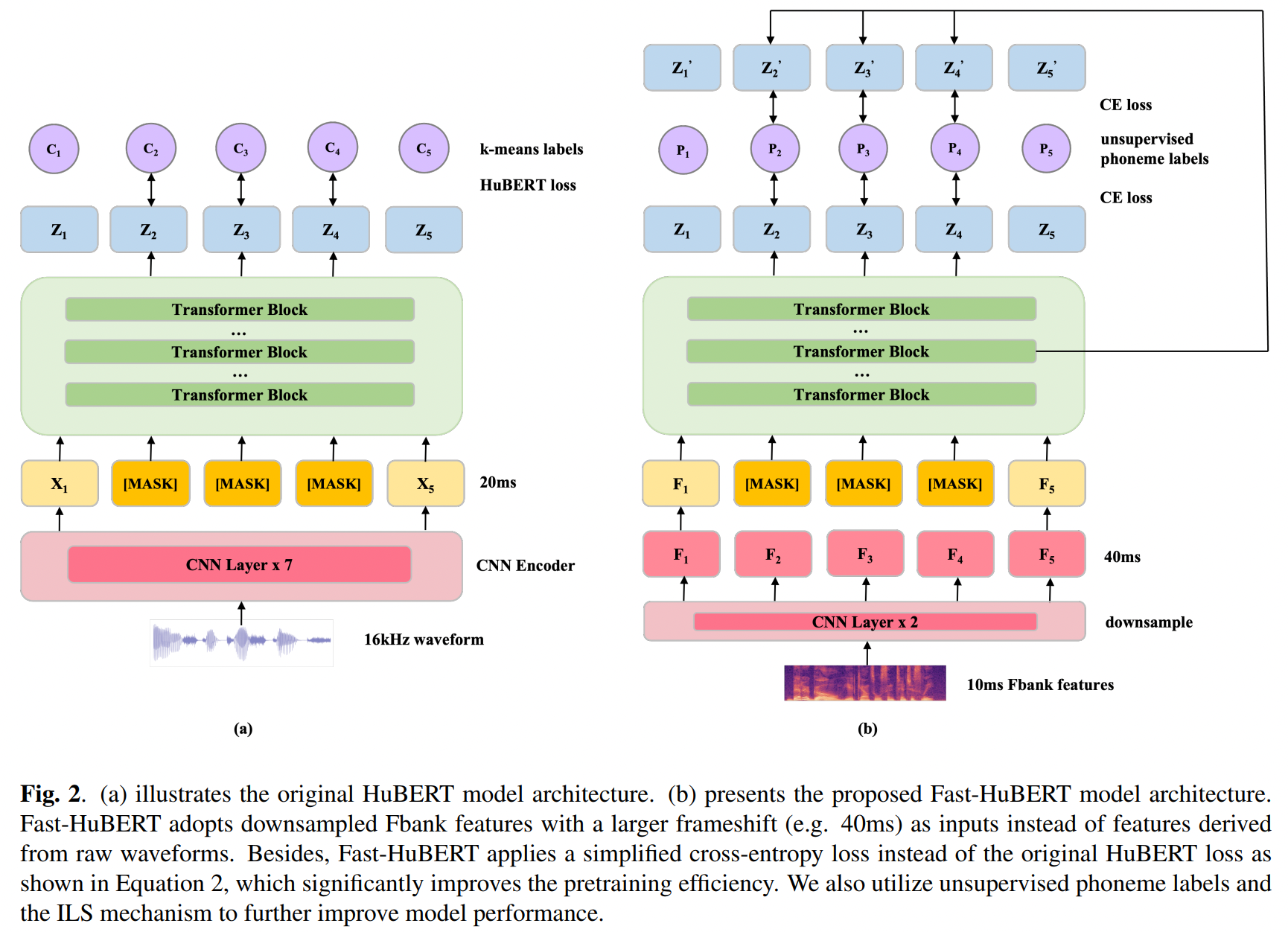

Existing speech self-supervised learning (SSL) models share a common drawback: high computational cost, which can hinder both their practical application and in-depth academic research. Fast-HuBERT is proposed to improve pretraining efficiency.

Fast-HuBERT optimizes the front-end inputs and the loss computation, and also incorporates other state-of-the-art techniques, including intermediate layer supervision (ILS) and MonoBERT.

On the LibriSpeech benchmark, Fast-HuBERT can be trained in 1.1 days on 8 V100 GPUs with no performance drop compared to the original HuBERT implementation, a 5.2x speedup.

Install fairseq from source and clone the Fast-HuBERT repository:

```shell
git clone https://github.com/pytorch/fairseq
cd fairseq
pip install --editable ./
git clone https://github.com/yanghaha0908/FastHuBERT
```

Refer to this to prepare data.
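As a rough illustration, assuming Fast-HuBERT reuses fairseq's HuBERT data layout, `task.data` would hold manifests such as `train.tsv` and `valid.tsv`, whose first line is the audio root directory and whose remaining lines are `relative_path<TAB>num_samples`. The helper below (`format_manifest` is a hypothetical name, not part of either repository) sketches how such a file is laid out:

```python
from typing import Iterable, Tuple


def format_manifest(root: str, entries: Iterable[Tuple[str, int]]) -> str:
    """Build a fairseq-style audio manifest (assumed format).

    Line 1 is the audio root directory; every following line is
    "<path relative to root>\t<number of samples>".
    """
    lines = [root]
    for rel_path, num_samples in entries:
        lines.append(f"{rel_path}\t{num_samples}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Made-up paths and sample counts, for illustration only.
    print(format_manifest("/path/to/LibriSpeech", [
        ("train-clean-100/a.flac", 48000),
        ("train-clean-100/b.flac", 32000),
    ]), end="")
```

In practice the sample counts come from the audio files themselves (for example `soundfile.info(path).frames`), and fairseq's `examples/wav2vec/wav2vec_manifest.py` script can generate these manifests directly.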

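Run the following commands from the fairseq root directory. To pretrain Fast-HuBERT with the `phn` label files at a 50 Hz label rate:

```shell
$ python fairseq_cli/hydra_train.py \
    --config-dir /path/to/FastHuBERT/config/pretrain \
    --config-name fasthubert_base_lirbispeech \
    common.user_dir=/path/to/FastHuBERT \
    task.data=/path/to/data \
    task.label_dir=/path/to/labels \
    task.labels='["phn"]' \
    model.label_rate=50
```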
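`model.label_rate=50` means the labels are expected at 50 per second, i.e. one label per 20 ms, or 16000 / 50 = 320 samples of 16 kHz LibriSpeech audio. The sketch below checks that a label sequence matches its audio length; it is similar in spirit to the duration check in fairseq's HuBERT dataset (0.1 s tolerance), but the function names are hypothetical and Fast-HuBERT's own task may check lengths differently.

```python
SAMPLE_RATE = 16_000  # LibriSpeech audio is sampled at 16 kHz


def expected_num_labels(num_samples: int, label_rate: int = 50,
                        sample_rate: int = SAMPLE_RATE) -> float:
    """Number of labels an utterance should have at `label_rate` labels/second."""
    return num_samples / sample_rate * label_rate


def labels_match_audio(num_samples: int, num_labels: int, label_rate: int = 50,
                       sample_rate: int = SAMPLE_RATE, tol_sec: float = 0.1) -> bool:
    """True if audio duration and label duration agree within `tol_sec` seconds."""
    dur_from_audio = num_samples / sample_rate
    dur_from_labels = num_labels / label_rate
    return abs(dur_from_audio - dur_from_labels) <= tol_sec


if __name__ == "__main__":
    print(SAMPLE_RATE // 50)                # 320 samples per label
    print(expected_num_labels(32_000))      # 100.0 labels for 2 s of audio
    print(labels_match_audio(32_000, 120))  # False: 2.4 s of labels vs 2.0 s of audio
```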

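To fine-tune a pretrained checkpoint with the `base_100h` configuration, point `model.w2v_path` to the pretrained checkpoint and `task.label_dir` to the transcriptions:

```shell
$ python fairseq_cli/hydra_train.py \
    --config-dir /path/to/FastHuBERT/config/finetune \
    --config-name base_100h \
    common.user_dir=/path/to/FastHuBERT \
    task.data=/path/to/data \
    task.label_dir=/path/to/transcriptions \
    model.w2v_path=/path/to/checkpoint
```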
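Assuming `base_100h` follows fairseq's HuBERT fine-tuning setup, which trains a CTC head on letter (`ltr`) targets, each line of a transcription file spells out one utterance letter by letter, with `|` marking word boundaries. The hypothetical helper below mirrors the conversion performed by fairseq's `examples/wav2vec/libri_labels.py`:

```python
def to_ltr(transcript: str) -> str:
    """Convert a word transcript to fairseq-style letter targets.

    Letters are space-separated, '|' marks each word boundary, and a
    final '|' closes the last word.
    """
    words = transcript.split()
    # " ".join over a string inserts a space between its characters.
    return " ".join("|".join(words)) + " |"


if __name__ == "__main__":
    print(to_ltr("HELLO WORLD"))  # H E L L O | W O R L D |
```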

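To decode the fine-tuned model with Viterbi decoding (no language model), choose `true` or `false` for `task.normalize` to match the value used during fine-tuning:

```shell
$ python examples/speech_recognition/new/infer.py \
    --config-dir examples/hubert/config/decode \
    --config-name infer_viterbi \
    common.user_dir=/path/to/FastHuBERT \
    common_eval.path=/path/to/checkpoint \
    task.data=/path/to/data \
    task.normalize=[true|false] \
    task._name=fasthubert_pretraining \
    dataset.gen_subset=test
```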
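For a CTC model without a learned transition matrix, Viterbi decoding reduces to picking the best token per frame, collapsing repeats and dropping blanks. The sketch below illustrates that idea in plain Python; the vocabulary, the blank index of 0 and the `|` word separator are assumptions for the example, not values read from the Fast-HuBERT dictionary.

```python
from typing import List, Sequence


def ctc_greedy_decode(frame_scores: Sequence[Sequence[float]],
                      vocab: Sequence[str], blank: int = 0) -> str:
    """Best-path CTC decoding: per-frame argmax, collapse repeats, drop blanks."""
    # 1. Best token index for every frame.
    best = [max(range(len(row)), key=row.__getitem__) for row in frame_scores]
    # 2. Collapse consecutive repeats and 3. remove blank tokens.
    tokens: List[str] = []
    prev = None
    for idx in best:
        if idx != prev and idx != blank:
            tokens.append(vocab[idx])
        prev = idx
    # 4. Treat '|' as the word boundary.
    return "".join(tokens).replace("|", " ").strip()


if __name__ == "__main__":
    vocab = ["<blank>", "|", "A", "B"]
    ids = [2, 2, 0, 2, 3, 1, 3]  # a blank separates the two A's
    scores = [[1.0 if j == i else 0.0 for j in range(len(vocab))] for i in ids]
    print(ctc_greedy_decode(scores, vocab))  # AAB B
```

The blank between the two `A` frames is what lets CTC emit a genuinely repeated letter; without it the repeat would be collapsed.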

To decode with a KenLM n-gram language model instead, set `decoding.lmpath` to an ARPA file and `decoding.lexicon` to the word lexicon:

```shell
$ python examples/speech_recognition/new/infer.py \
    --config-dir examples/hubert/config/decode \
    --config-name infer_kenlm \
    common.user_dir=/path/to/FastHuBERT \
    common_eval.path=/path/to/checkpoint \
    task.data=/path/to/data \
    task.normalize=[true|false] \
    task._name=fasthubert_pretraining \
    dataset.gen_subset=test \
    decoding.lmweight=2 decoding.wordscore=-1 decoding.silweight=0 \
    decoding.beam=1500 \
    decoding.lexicon=/path/to/lexicon \
    decoding.lmpath=/path/to/arpa
```
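As a simplified sketch of how the `decoding.*` weights interact (assuming a flashlight-style lexicon decoder, and ignoring transitions and unknown-word penalties), each partial hypothesis is ranked by its acoustic score plus a weighted LM score, a per-word bonus or penalty, and a per-silence score. The `Hypothesis` class and `decoder_score` function are hypothetical illustrations, not fairseq APIs.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    acoustic_score: float  # summed CTC log-probabilities along the path
    lm_score: float        # n-gram LM log-probability of the word sequence
    num_words: int         # words emitted so far
    num_silences: int      # silence tokens emitted so far


def decoder_score(h: Hypothesis, lmweight: float = 2.0,
                  wordscore: float = -1.0, silweight: float = 0.0) -> float:
    """Simplified lexicon-decoder score; defaults mirror the command above."""
    return (h.acoustic_score
            + lmweight * h.lm_score
            + wordscore * h.num_words
            + silweight * h.num_silences)


if __name__ == "__main__":
    h = Hypothesis(acoustic_score=-10.0, lm_score=-5.0, num_words=3, num_silences=1)
    print(decoder_score(h))                # -23.0
    print(decoder_score(h, lmweight=0.0))  # -13.0: LM ignored
```

A negative `wordscore` penalizes hypotheses that insert many short words, while a larger `lmweight` trusts the n-gram model more; `decoding.beam=1500` roughly bounds how many such partial hypotheses are kept at each step.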