The concept of lightweight processes is the essence of Erlang and the BEAM; they are what makes BEAM stand out from other virtual machines. In order to understand how the BEAM (and Erlang and Elixir) works you need to know the details of how processes work, which will help you understand the central concept of the BEAM, including what is easy and cheap for a process and what is hard and expensive.

Almost everything in the BEAM is connected to the concept of processes and in this chapter we will learn more about these connections. We will expand on what we learned in the introduction and take a deeper look at concepts such as memory management, message passing, and in particular scheduling.

An Erlang process is very similar to an OS process. It has its own address space, it can communicate with other processes through signals and messages, and the execution is controlled by a preemptive scheduler.

When you have a performance problem in an Erlang or Elixir system, the problem very often stems from a particular process or from an imbalance between processes. There are of course other common problems, such as bad algorithms or memory problems, which we will look at in other chapters. Still, being able to pinpoint the process that is causing the problem is always important; therefore we will look at the tools available in the Erlang RunTime System for process inspection.

We will introduce the tools throughout the chapter as we go through how a process and the scheduler work, and then we will bring all the tools together for an exercise at the end.

A process is an isolated entity where code execution occurs. A process protects your system from errors in your code by isolating the effect of the error to the process executing the faulty code.

The runtime comes with a number of tools for inspecting processes to help us find bottlenecks, problems and overuse of resources. These tools will help you identify and inspect problematic processes.

Let us dive right in and look at which processes we have in a running system. The easiest way to do that is to just start an Erlang shell and issue the shell command i().

In Elixir you can call the function in the shell_default module as :shell_default.i.

```
$ erl
Erlang/OTP 19 [erts-8.1] [source] [64-bit] [smp:4:4] [async-threads:10] [hipe] [kernel-poll:false]

Eshell V8.1  (abort with ^G)
1> i().
Pid                   Initial Call                         Heap     Reds Msgs
Registered            Current Function                    Stack
<0.0.0>               otp_ring0:start/2                     376      579    0
init                  init:loop/1                             2
<0.1.0>               erts_code_purger:start/0              233        4    0
erts_code_purger      erts_code_purger:loop/0                 3
<0.4.0>               erlang:apply/2                        987   100084    0
erl_prim_loader       erl_prim_loader:loop/3                  5
<0.30.0>              gen_event:init_it/6                   610      226    0
error_logger          gen_event:fetch_msg/5                   8
<0.31.0>              erlang:apply/2                       1598      416    0
application_controlle gen_server:loop/6                       7
<0.33.0>              application_master:init/4             233       64    0
                      application_master:main_loop/2          6
<0.34.0>              application_master:start_it/4         233       59    0
                      application_master:loop_it/4            5
<0.35.0>              supervisor:kernel/1                   610     1767    0
kernel_sup            gen_server:loop/6                       9
<0.36.0>              erlang:apply/2                       6772    73914    0
code_server           code_server:loop/1                      3
<0.38.0>              rpc:init/1                            233       21    0
rex                   gen_server:loop/6                       9
<0.39.0>              global:init/1                         233       44    0
global_name_server    gen_server:loop/6                       9
<0.40.0>              erlang:apply/2                        233       21    0
                      global:loop_the_locker/1                5
<0.41.0>              erlang:apply/2                        233        3    0
                      global:loop_the_registrar/0             2
<0.42.0>              inet_db:init/1                        233      209    0
inet_db               gen_server:loop/6                       9
<0.44.0>              global_group:init/1                   233       55    0
global_group          gen_server:loop/6                       9
<0.45.0>              file_server:init/1                    233       79    0
file_server_2         gen_server:loop/6                       9
<0.46.0>              supervisor_bridge:standard_error/     233       34    0
standard_error_sup    gen_server:loop/6                       9
<0.47.0>              erlang:apply/2                        233       10    0
standard_error        standard_error:server_loop/1            2
<0.48.0>              supervisor_bridge:user_sup/1          233       54    0
                      gen_server:loop/6                       9
<0.49.0>              user_drv:server/2                     987     1975    0
user_drv              user_drv:server_loop/6                  9
<0.50.0>              group:server/3                        233       40    0
user                  group:server_loop/3                     4
<0.51.0>              group:server/3                        987    12508    0
                      group:server_loop/3                     4
<0.52.0>              erlang:apply/2                       4185     9537    0
                      shell:shell_rep/4                      17
<0.53.0>              kernel_config:init/1                  233      255    0
                      gen_server:loop/6                       9
<0.54.0>              supervisor:kernel/1                   233       56    0
kernel_safe_sup       gen_server:loop/6                       9
<0.58.0>              erlang:apply/2                       2586    18849    0
                      c:pinfo/1                              50
Total                                                     23426   220863    0
                                                            222
ok
```

The i/0 function prints out a list of all processes in the system.

Each process gets two lines of information. The first two lines of the printout are the headers telling you what the information means. As you can see, you get the process ID (Pid) and the name of the process, if any, as well as information about the code the process was started with and is currently executing. You also get information about the heap and stack size and the number of reductions and messages in the process. In the rest of this chapter we will learn in detail what a stack, a heap, a reduction, and a message are. For now we can just assume that if there is a large number for the heap size, then the process uses a lot of memory, and if there is a large number for the reductions, then the process has executed a lot of code.
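The reduction count shown by i/0 is cumulative over the whole life of a process, so a large number may simply mean that the process is old. To find the processes that are busy right now, one option is to sample twice and compare. The following is a minimal sketch under that assumption; the module red_sample and its functions are hypothetical, and only erlang:processes/0, erlang:process_info/2, and timer:sleep/1 are standard.

```erlang
-module(red_sample).
-export([busiest/2]).

%% Hypothetical helper: sample the cumulative reduction counter of
%% every process twice, IntervalMs milliseconds apart, and return the
%% N processes that executed the most reductions in between, as a list
%% of {Pid, Delta} tuples, busiest first.
busiest(IntervalMs, N) ->
    Before = sample(),
    timer:sleep(IntervalMs),
    After = sample(),
    Deltas = [{Pid, R1 - R0}
              || {Pid, R1} <- After,
                 {ok, R0} <- [lookup(Pid, Before)]],
    lists:sublist(lists:reverse(lists:keysort(2, Deltas)), N).

%% One {Pid, Reductions} pair per live process. A process that exits
%% between erlang:processes/0 and process_info/2 yields undefined,
%% which does not match the generator pattern and is skipped.
sample() ->
    [{P, R} || P <- erlang:processes(),
               {reductions, R} <- [erlang:process_info(P, reductions)]].

%% Only processes present in both samples are compared.
lookup(Pid, Sample) ->
    case lists:keyfind(Pid, 1, Sample) of
        {Pid, R} -> {ok, R};
        false -> none
    end.
```

Calling red_sample:busiest(1000, 5) from the shell would list the five processes that did the most work during one second. Processes that start or exit during the interval are simply left out.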

We can further examine a process with the i/3 function. Let us take a look at the code_server process. We can see in the previous list that the process identifier (pid) of the code_server is <0.36.0>. By calling i/3 with the three numbers of the pid we get this information:

```
2> i(0,36,0).
[{registered_name,code_server},
 {current_function,{code_server,loop,1}},
 {initial_call,{erlang,apply,2}},
 {status,waiting},
 {message_queue_len,0},
 {messages,[]},
 {links,[<0.35.0>]},
 {dictionary,[]},
 {trap_exit,true},
 {error_handler,error_handler},
 {priority,normal},
 {group_leader,<0.33.0>},
 {total_heap_size,46422},
 {heap_size,46422},
 {stack_size,3},
 {reductions,93418},
 {garbage_collection,[{max_heap_size,#{error_logger => true,
                                       kill => true,
                                       size => 0}},
                      {min_bin_vheap_size,46422},
                      {min_heap_size,233},
                      {fullsweep_after,65535},
                      {minor_gcs,0}]},
 {suspending,[]}]
3>
```

We got a lot of information from this call, and in the rest of this chapter we will learn in detail what most of these items mean. The first line tells us that the process has been given the name code_server. Next we can see which function the process is currently executing or suspended in (current_function) and the name of the function that the process started executing in (initial_call).
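Since i/3 only prints, it can be handy to fetch a handful of the same items as data instead. A small sketch, assuming we only care about a few fields: c:pid/3 builds a pid from its three numbers, and erlang:process_info/2 accepts a list of items. The module pinfo is hypothetical.

```erlang
-module(pinfo).
-export([summary/3]).

%% Hypothetical helper: like the shell command i(X, Y, Z), but returns
%% only a few selected items as a list of {Item, Value} tuples instead
%% of printing them. Returns undefined if the process is not alive.
summary(X, Y, Z) ->
    Pid = c:pid(X, Y, Z),
    erlang:process_info(Pid, [registered_name, current_function,
                              initial_call, status, message_queue_len]).
```

For example, pinfo:summary(0, 36, 0) would return the first few items of the i(0,36,0) printout above, in the order they were requested.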

We can also see that the process is suspended waiting for messages ({status,waiting}) and that there are no messages in the mailbox ({message_queue_len,0}, {messages,[]}). We will look closer at how message passing works later in this chapter.

The fields priority, suspending, reductions, links, trap_exit, error_handler, and group_leader control the process execution, error handling, and IO. We will look into this a bit more when we introduce the Observer.

The last few fields (dictionary, total_heap_size, heap_size, stack_size, and garbage_collection) give us information about the process memory usage. We will look at the process memory areas in detail in chapter [CH-Memory].
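The heap and stack sizes reported by process_info/2 are given in words, while the memory item is given in bytes (according to the erlang module documentation it covers the call stack, heap, and internal structures). A sketch of a hypothetical helper, pmem:bytes/1, that converts the word counts using erlang:system_info(wordsize) so that all figures can be compared directly:

```erlang
-module(pmem).
-export([bytes/1]).

%% Hypothetical helper: report a process's memory figures in bytes.
%% heap_size, total_heap_size and stack_size are given in words by
%% process_info/2; memory is already in bytes.
bytes(Pid) ->
    WordSize = erlang:system_info(wordsize),
    case erlang:process_info(Pid, [heap_size, total_heap_size,
                                   stack_size, memory]) of
        undefined ->
            %% The process is not alive.
            undefined;
        [{heap_size, H}, {total_heap_size, T},
         {stack_size, S}, {memory, M}] ->
            #{heap => H * WordSize,
              total_heap => T * WordSize,
              stack => S * WordSize,
              memory => M}
    end.
```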

Another, even more intrusive way of getting information about processes is to use the process information given by the BREAK menu: ctrl+c p [enter]. Note that while you are in the BREAK state the whole node freezes.

The shell functions just print the information about the process, but you can actually get this information as data, so you can write your own tools for inspecting processes.

You can get a list of all processes with erlang:processes/0, and more information about a process with erlang:process_info/1. We can also use the function whereis/1 to get a pid from a name:

```
1> Ps = erlang:processes().
[<0.0.0>,<0.1.0>,<0.4.0>,<0.30.0>,<0.31.0>,<0.33.0>,
 <0.34.0>,<0.35.0>,<0.36.0>,<0.38.0>,<0.39.0>,<0.40.0>,
 <0.41.0>,<0.42.0>,<0.44.0>,<0.45.0>,<0.46.0>,<0.47.0>,
 <0.48.0>,<0.49.0>,<0.50.0>,<0.51.0>,<0.52.0>,<0.53.0>,
 <0.54.0>,<0.60.0>]
2> CodeServerPid = whereis(code_server).
<0.36.0>
3> erlang:process_info(CodeServerPid).
[{registered_name,code_server},
 {current_function,{code_server,loop,1}},
 {initial_call,{erlang,apply,2}},
 {status,waiting},
 {message_queue_len,0},
 {messages,[]},
 {links,[<0.35.0>]},
 {dictionary,[]},
 {trap_exit,true},
 {error_handler,error_handler},
 {priority,normal},
 {group_leader,<0.33.0>},
 {total_heap_size,24503},
 {heap_size,6772},
 {stack_size,3},
 {reductions,74260},
 {garbage_collection,[{max_heap_size,#{error_logger => true,
                                       kill => true,
                                       size => 0}},
                      {min_bin_vheap_size,46422},
                      {min_heap_size,233},
                      {fullsweep_after,65535},
                      {minor_gcs,33}]},
 {suspending,[]}]
```

By getting process information as data we can write code to analyze or sort the data as we please. If we grab all processes in the system (with erlang:processes/0) and then get information about the heap size of each process (with erlang:process_info(P,total_heap_size)), we can then construct a list with pid and heap size and sort it on heap size:

```
1> lists:reverse(lists:keysort(2,[{P,element(2,
       erlang:process_info(P,total_heap_size))}
       || P <- erlang:processes()])).
[{<0.36.0>,24503},
 {<0.52.0>,21916},
 {<0.4.0>,12556},
 {<0.58.0>,4184},
 {<0.51.0>,4184},
 {<0.31.0>,3196},
 {<0.49.0>,2586},
 {<0.35.0>,1597},
 {<0.30.0>,986},
 {<0.0.0>,752},
 {<0.33.0>,609},
 {<0.54.0>,233},
 {<0.53.0>,233},
 {<0.50.0>,233},
 {<0.48.0>,233},
 {<0.47.0>,233},
 {<0.46.0>,233},
 {<0.45.0>,233},
 {<0.44.0>,233},
 {<0.42.0>,233},
 {<0.41.0>,233},
 {<0.40.0>,233},
 {<0.39.0>,233},
 {<0.38.0>,233},
 {<0.34.0>,233},
 {<0.1.0>,233}]
2>
```

You might notice that many processes have a heap size of 233; that is because 233 words is the default starting heap size of a process.

See the documentation of the module erlang for a full description of the information available with process_info.
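The sorting one-liner above assumes that every process is still alive when process_info/2 is called. If a process exits in between, process_info/2 returns undefined and element/2 fails with badarg. A slightly more defensive sketch (the module ptop is hypothetical) skips such processes and works for any integer-valued item, such as total_heap_size, reductions, or message_queue_len:

```erlang
-module(ptop).
-export([top/2]).

%% Hypothetical helper: the N processes with the largest value for
%% Item (for example total_heap_size, reductions or message_queue_len),
%% largest first. process_info/2 returns undefined for a process that
%% has exited; that does not match {_, V} and the process is skipped.
top(Item, N) ->
    Pairs = [{P, V} || P <- erlang:processes(),
                       {_, V} <- [erlang:process_info(P, Item)]],
    lists:sublist(lists:reverse(lists:keysort(2, Pairs)), N).
```

For example, ptop:top(message_queue_len, 10) would list the ten longest mailboxes in the system.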

Notice how the process_info/1 function only returns a subset of all the information available for the process and how the process_info/2 function can be used to fetch extra information. As an example, to extract the backtrace for the code_server process above, we could run:

```
3> process_info(whereis(code_server), backtrace).
{backtrace,<<"Program counter: 0x00000000161de900 (code_server:loop/1 + 152)\nCP: 0x0000000000000000 (invalid)\narity = 0\n\n0"...>>}
```

See the three dots at the end of the binary above? That means that the output has been truncated. A useful trick to see the whole value is to wrap the above function call using the rp/1 function:

```
4> rp(process_info(whereis(code_server), backtrace)).
```

An alternative is to use the io:put_chars/1 function, as follows:

```
5> {backtrace, Backtrace} = process_info(whereis(code_server), backtrace).
{backtrace,<<"Program counter: 0x00000000161de900 (code_server:loop/1 + 152)\nCP: 0x0000000000000000 (invalid)\narity = 0\n\n0"...>>}
6> io:put_chars(Backtrace).
```

Due to its verbosity, the output for commands 4> and 6> has not been included here, but feel free to try the above commands in your Erlang shell.

A third way of examining processes is with the Observer. The Observer is an extensive graphical interface for inspecting the Erlang RunTime System. We will use the Observer throughout this book to examine different aspects of the system.

The Observer can either be started from the OS shell and attach itself

to an node or directly from an Elixir or Erlang shell. For now we will

just start the Observer from the Elixir shell with :observer.start

or from the Erlang shell with:

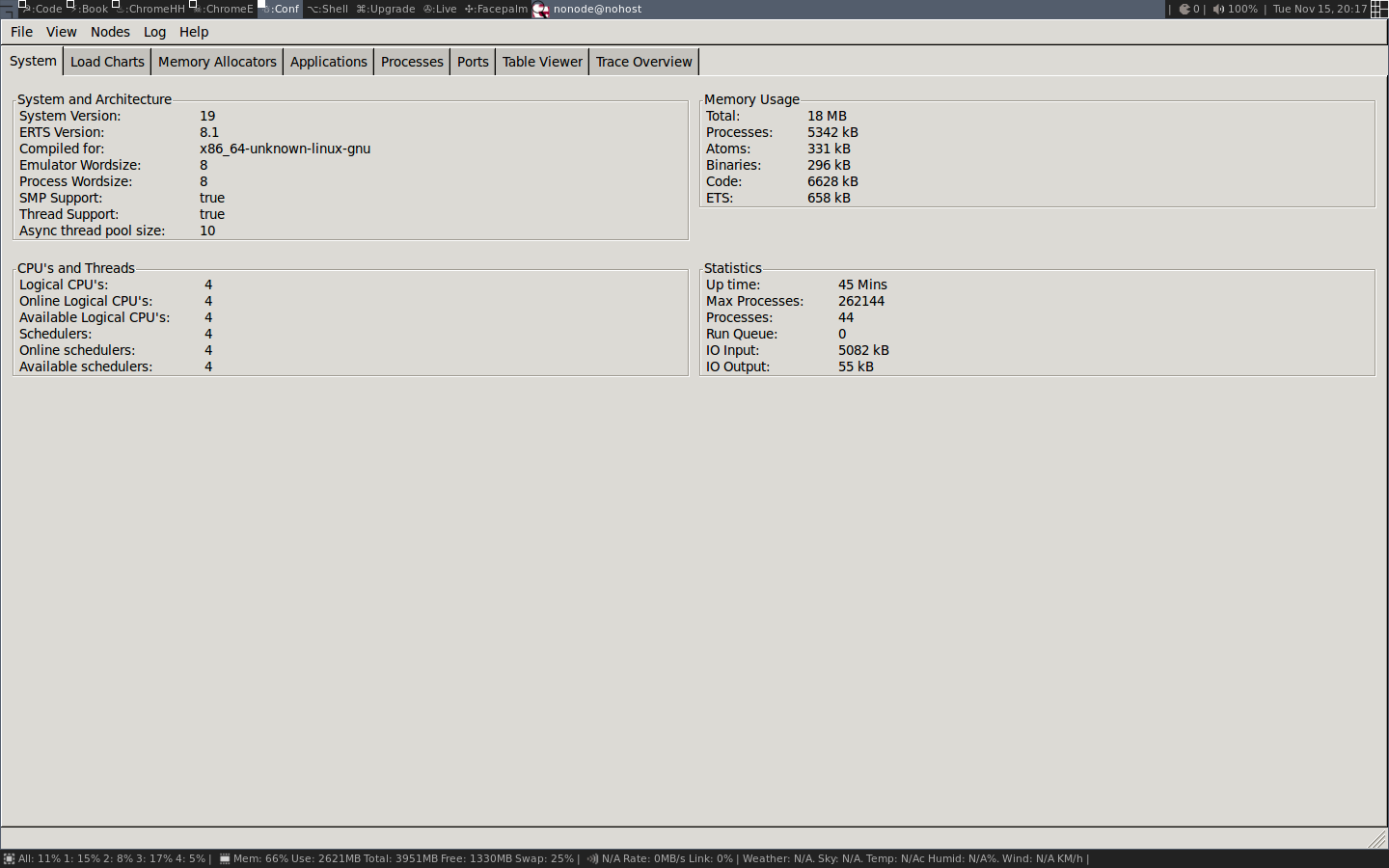

7> observer:start().When the Observer is started it will show you a system overview, see the following screen shot:

We will go over some of this information in detail later in

this and the next chapter. For now we will just use the Observer to look

at the running processes. First we take a look at the

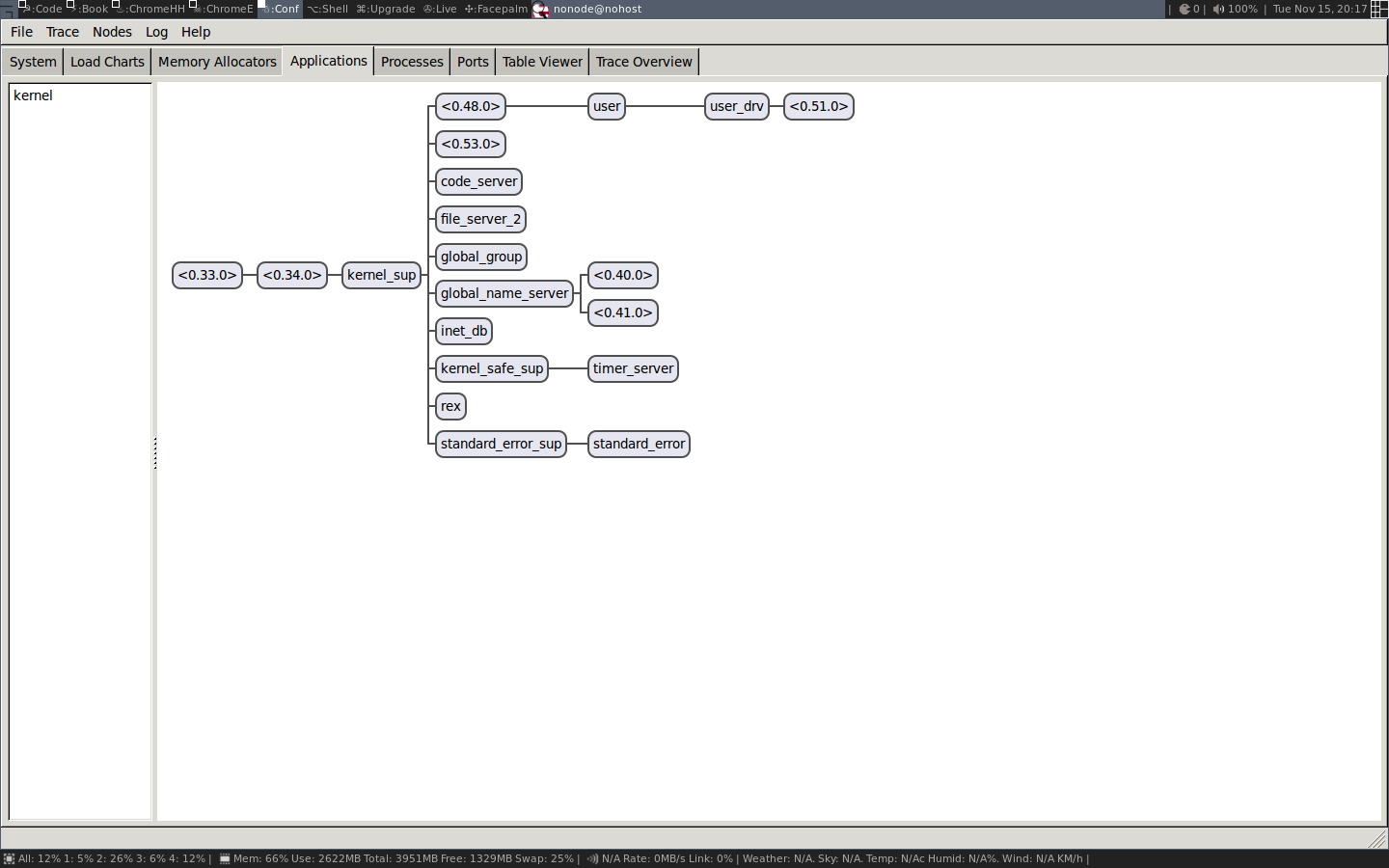

Applications tab which shows the supervision

tree of the running system:

Here we get a graphical view of how the processes are linked. This is a very nice way to get an overview of how a system is structured. You also get a nice feeling of processes as isolated entities floating in space connected to each other through links.

To actually get some useful information about the processes

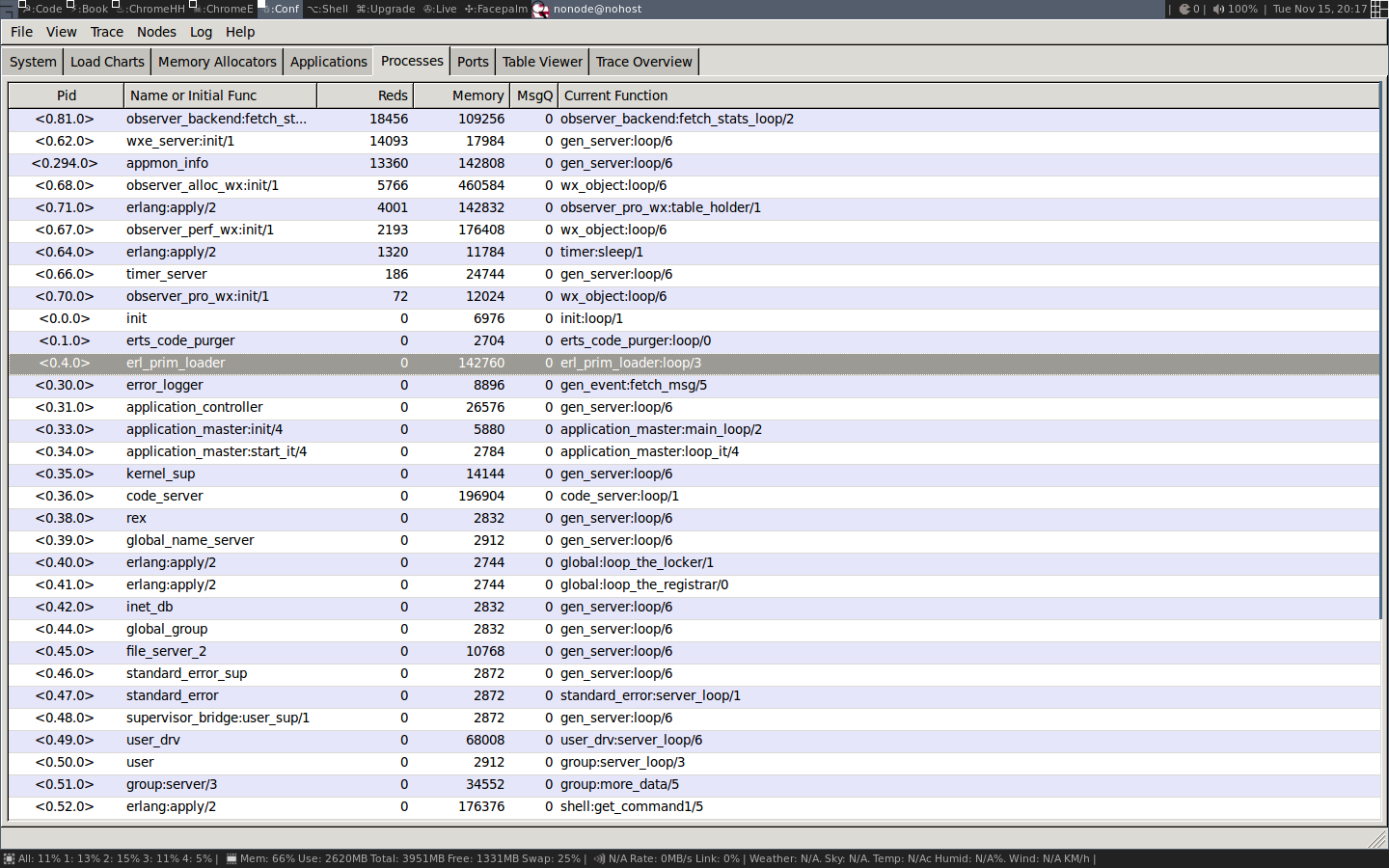

we switch to the Processes tab:

In this view we get basically the same information as with

i/0 in the shell. We see the pid, the registered name,

number of reductions, memory usage and number of messages

and the current function.

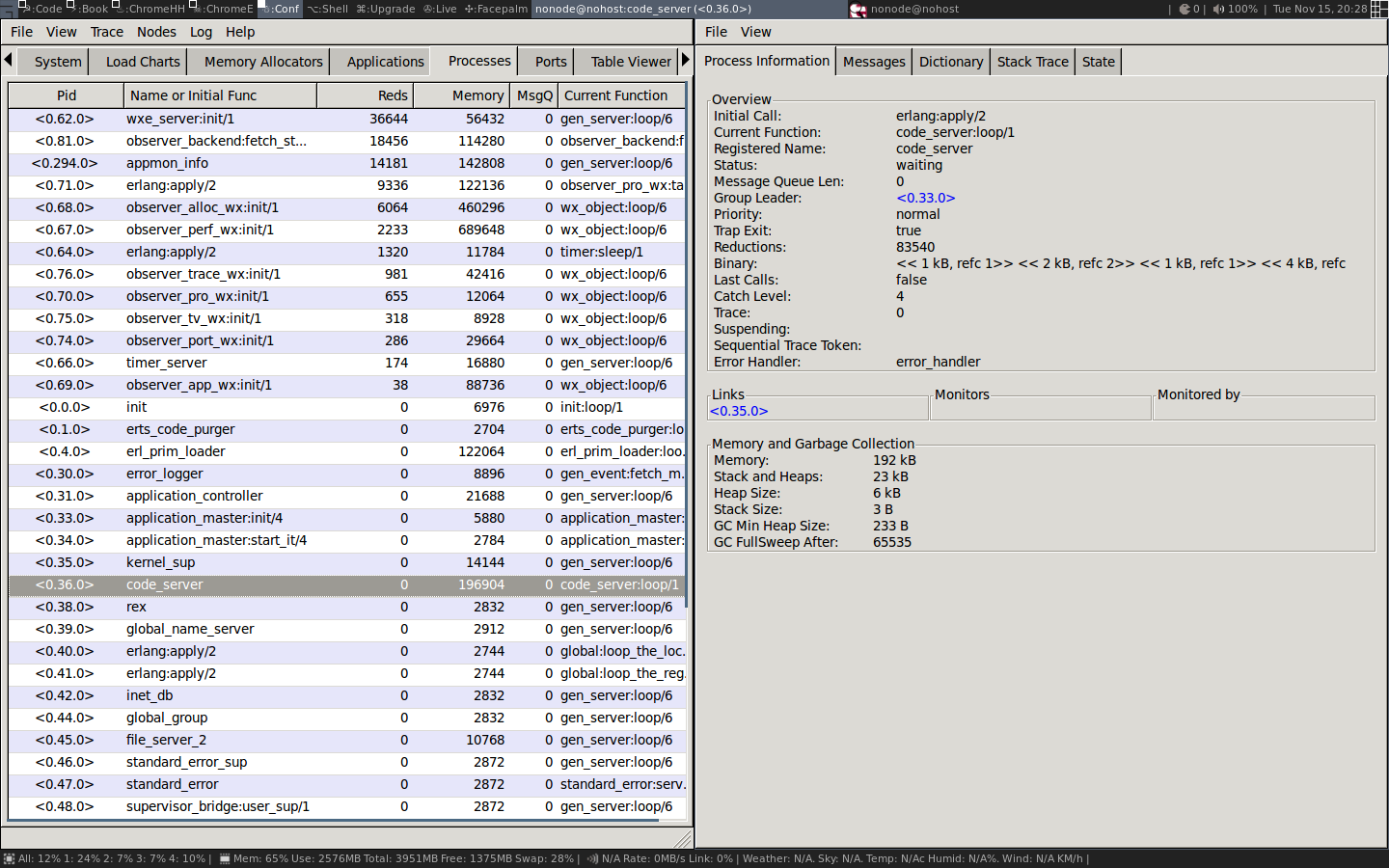

We can also look into a process by double clicking on its

row, for example on the code server, to get the kind of

information you can get with process_info/2:

We will not go through what all this information means right now, but if you keep on reading all will eventually be revealed.

If you are building your application with erlang.mk or rebar and you want to include the Observer application in your build, you might need to add the applications runtime_tools, wx, and observer to your list of applications in yourapp.app.src.
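As an illustration, assuming an application named yourapp, the relevant part of yourapp.app.src could look like the sketch below. Only the applications list matters here; the description, version, and the other keys are placeholders.

```erlang
%% yourapp.app.src (sketch; description, vsn and the other keys
%% are placeholders for your own values)
{application, yourapp,
 [{description, "An example application"},
  {vsn, "0.1.0"},
  {registered, []},
  {applications, [kernel,
                  stdlib,
                  %% Added so that the Observer can be used from this node:
                  runtime_tools,
                  wx,
                  observer]},
  {env, []},
  {modules, []}]}.
```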

Now that we have a basic understanding of what a process is and some tools to find and inspect processes in a system we are ready to dive deeper to learn how a process is implemented.

A process is basically four blocks of memory: a stack, a heap, a message area, and the Process Control Block (the PCB).

The stack is used for keeping track of program execution by storing return addresses, for passing arguments to functions, and for keeping local variables. Larger structures, such as lists and tuples, are stored on the heap.

The message area, also called the mailbox, is used to store messages sent to the process from other processes. The process control block is used to keep track of the state of the process.

See the following figure for an illustration of a process as memory:

```
+-------+  +-------+
|  PCB  |  | Stack |
+-------+  +-------+
+-------+  +-------+
| M-box |  | Heap  |
+-------+  +-------+
```

This picture of a process is very much simplified, and we will go through a number of iterations of more refined versions to get to a more accurate picture.

The stack, the heap, and the mailbox are all dynamically allocated and can grow and shrink as needed. We will see exactly how this works in later chapters. The PCB, on the other hand, is statically allocated and contains a number of fields that control the process.
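A quick way to watch the stack grow is a body-recursive function that asks for its own stack_size (reported in words by process_info/2) at the bottom of the recursion. The sketch below (the module stack_demo is hypothetical) relies on the assumption that each non-tail call keeps at least one word, the return address, on the stack:

```erlang
-module(stack_demo).
-export([stack_at_depth/1]).

%% Recurse Depth times without tail calls, then report the stack size
%% (in words) of the current process from the deepest call, together
%% with the number of levels we went through.
stack_at_depth(0) ->
    {stack_size, Words} = erlang:process_info(self(), stack_size),
    {Words, 0};
stack_at_depth(Depth) when Depth > 0 ->
    %% The tuple is built after the call returns, so this is not a
    %% tail call and every level keeps a frame on the stack.
    {Words, Levels} = stack_at_depth(Depth - 1),
    {Words, Levels + 1}.
```

stack_demo:stack_at_depth(1000) should report a noticeably larger stack than stack_demo:stack_at_depth(0); the exact numbers depend on the OTP version and the compiler.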

We can actually inspect some of these memory areas by using HiPE’s Built In Functions (HiPE BIFs) for introspection. With these BIFs we can print out the memory content of stacks, heaps, and the PCB. The raw data is printed and in most cases a human readable version is pretty printed alongside the data. To really understand everything that we see when we inspect the memory, we will need to know more about the Erlang tagging scheme (which we will go through in [CH-TypeSystem]) and about the execution model and error handling (which we will go through in [CH-BEAM]), but using these tools will give us a nice view of how a process really is just memory.

The HiPE BIFs are not an official part of Erlang/OTP. They are not supported by the OTP team. They might be removed or changed at any time, so don’t base your mission critical services on them.

These BIFs examine the internals of ERTS in a way that might not be safe. The BIFs for introspection often just print to standard out and you might be surprised where that output ends up.

These BIFs can lock up a scheduler thread for a long time without using any reductions (we will look at what that means in the next chapter). Printing the heap of a very large process for example can take a long time.

These BIFs are only meant to be used for debugging and you use them at your own risk. You should probably not run them on a live system.

Many of the HiPE BIFs were written by the author in the mid nineties (before 64-bit Erlang existed) and the printouts on a 64-bit machine might be a bit off. There are new versions of these BIFs that do a better job; hopefully they will be included in ERTS by the time this book is printed. Otherwise you can build your own version with the patch provided in the code section and the instructions in [AP-BuildingERTS].

We can see the contents of the stack of a process with hipe_bifs:show_estack/1:

```
1> hipe_bifs:show_estack(self()).
|                BEAM STACK               |
|       Address      |      Contents      |
|--------------------|--------------------| BEAM ACTIVATION RECORD
| 0x00007f9cc3238310 | 0x00007f9cc2ea6fe8 | BEAM PC shell:exprs/7 + 0x4e
| 0x00007f9cc3238318 | 0xfffffffffffffffb | []
| 0x00007f9cc3238320 | 0x000000000000644b | none
|--------------------|--------------------| BEAM ACTIVATION RECORD
| 0x00007f9cc3238328 | 0x00007f9cc2ea6708 | BEAM PC shell:eval_exprs/7 + 0xf
| 0x00007f9cc3238330 | 0xfffffffffffffffb | []
| 0x00007f9cc3238338 | 0xfffffffffffffffb | []
| 0x00007f9cc3238340 | 0x000000000004f3cb | cmd
| 0x00007f9cc3238348 | 0xfffffffffffffffb | []
| 0x00007f9cc3238350 | 0x00007f9cc3237102 | {value,#Fun<shell.5.104321512>}
| 0x00007f9cc3238358 | 0x00007f9cc323711a | {eval,#Fun<shell.21.104321512>}
| 0x00007f9cc3238360 | 0x00000000000200ff | 8207
| 0x00007f9cc3238368 | 0xfffffffffffffffb | []
| 0x00007f9cc3238370 | 0xfffffffffffffffb | []
| 0x00007f9cc3238378 | 0xfffffffffffffffb | []
|--------------------|--------------------| BEAM ACTIVATION RECORD
| 0x00007f9cc3238380 | 0x00007f9cc2ea6300 | BEAM PC shell:eval_loop/3 + 0x47
| 0x00007f9cc3238388 | 0xfffffffffffffffb | []
| 0x00007f9cc3238390 | 0xfffffffffffffffb | []
| 0x00007f9cc3238398 | 0xfffffffffffffffb | []
| 0x00007f9cc32383a0 | 0xfffffffffffffffb | []
| 0x00007f9cc32383a8 | 0x000001a000000343 | <0.52.0>
|....................|....................| BEAM CATCH FRAME
| 0x00007f9cc32383b0 | 0x0000000000005a9b | CATCH 0x00007f9cc2ea67d8
|                    |                    |  (BEAM shell:eval_exprs/7 + 0x29)
|********************|********************|
|--------------------|--------------------| BEAM ACTIVATION RECORD
| 0x00007f9cc32383b8 | 0x000000000093aeb8 | BEAM PC normal-process-exit
| 0x00007f9cc32383c0 | 0x00000000000200ff | 8207
| 0x00007f9cc32383c8 | 0x000001a000000343 | <0.52.0>
|--------------------|--------------------|
true
2>
```

We will look closer at the values on the stack and the heap in [CH-TypeSystem].

The content of the heap is printed by hipe_bifs:show_heap/1. Since we do not want to list a large heap here, we’ll just spawn a new process that does nothing and show that heap:

```
2> hipe_bifs:show_heap(spawn(fun () -> ok end)).
From: 0x00007f7f33ec9588 to 0x00007f7f33ec9848
|                 H E A P                 |
|       Address      |      Contents      |
|--------------------|--------------------|
| 0x00007f7f33ec9588 | 0x00007f7f33ec959a | #Fun<erl_eval.20.52032458>
| 0x00007f7f33ec9590 | 0x00007f7f33ec9839 | [[]]
| 0x00007f7f33ec9598 | 0x0000000000000154 | Thing Arity(5) Tag(20)
| 0x00007f7f33ec95a0 | 0x00007f7f3d3833d0 | THING
| 0x00007f7f33ec95a8 | 0x0000000000000000 | THING
| 0x00007f7f33ec95b0 | 0x0000000000600324 | THING
| 0x00007f7f33ec95b8 | 0x0000000000000000 | THING
| 0x00007f7f33ec95c0 | 0x0000000000000001 | THING
| 0x00007f7f33ec95c8 | 0x000001d0000003a3 | <0.58.0>
| 0x00007f7f33ec95d0 | 0x00007f7f33ec95da | {[],{eval...
| 0x00007f7f33ec95d8 | 0x0000000000000100 | Arity(4)
| 0x00007f7f33ec95e0 | 0xfffffffffffffffb | []
| 0x00007f7f33ec95e8 | 0x00007f7f33ec9602 | {eval,#Fun<shell.21.104321512>}
| 0x00007f7f33ec95f0 | 0x00007f7f33ec961a | {value,#Fun<shell.5.104321512>}...
| 0x00007f7f33ec95f8 | 0x00007f7f33ec9631 | [{clause...
...
| 0x00007f7f33ec97d0 | 0x00007f7f33ec97fa | #Fun<shell.5.104321512>
| 0x00007f7f33ec97d8 | 0x00000000000000c0 | Arity(3)
| 0x00007f7f33ec97e0 | 0x0000000000000e4b | atom
| 0x00007f7f33ec97e8 | 0x000000000000001f | 1
| 0x00007f7f33ec97f0 | 0x0000000000006d0b | ok
| 0x00007f7f33ec97f8 | 0x0000000000000154 | Thing Arity(5) Tag(20)
| 0x00007f7f33ec9800 | 0x00007f7f33bde0c8 | THING
| 0x00007f7f33ec9808 | 0x00007f7f33ec9780 | THING
| 0x00007f7f33ec9810 | 0x000000000060030c | THING
| 0x00007f7f33ec9818 | 0x0000000000000002 | THING
| 0x00007f7f33ec9820 | 0x0000000000000001 | THING
| 0x00007f7f33ec9828 | 0x000001d0000003a3 | <0.58.0>
| 0x00007f7f33ec9830 | 0x000001a000000343 | <0.52.0>
| 0x00007f7f33ec9838 | 0xfffffffffffffffb | []
| 0x00007f7f33ec9840 | 0xfffffffffffffffb | []
|--------------------|--------------------|
true
3>
```

We can also print the content of some of the fields in the PCB with hipe_bifs:show_pcb/1:

```
3> hipe_bifs:show_pcb(self()).
P: 0x00007f7f3cbc0400
---------------------------------------------------------------
Offset| Name        | Value              | *Value             |
    0 | id          | 0x000001d0000003a3 |                    |
   72 | htop        | 0x00007f7f33f15298 |                    |
   96 | hend        | 0x00007f7f33f16540 |                    |
   88 | heap        | 0x00007f7f33f11470 |                    |
  104 | heap_sz     | 0x0000000000000a1a |                    |
   80 | stop        | 0x00007f7f33f16480 |                    |
  592 | gen_gcs     | 0x0000000000000012 |                    |
  594 | max_gen_gcs | 0x000000000000ffff |                    |
  552 | high_water  | 0x00007f7f33f11c50 |                    |
  560 | old_hend    | 0x00007f7f33e90648 |                    |
  568 | old_htop    | 0x00007f7f33e8f8e8 |                    |
  576 | old_head    | 0x00007f7f33e8e770 |                    |
  112 | min_heap_.. | 0x00000000000000e9 |                    |
  328 | rcount      | 0x0000000000000000 |                    |
  336 | reds        | 0x0000000000002270 |                    |
   16 | tracer      | 0xfffffffffffffffb |                    |
   24 | trace_fla.. | 0x0000000000000000 |                    |
  344 | group_lea.. | 0x0000019800000333 |                    |
  352 | flags       | 0x0000000000002000 |                    |
  360 | fvalue      | 0xfffffffffffffffb |                    |
  368 | freason     | 0x0000000000000000 |                    |
  320 | fcalls      | 0x00000000000005a2 |                    |
  384 | next        | 0x0000000000000000 |                    |
   48 | reg         | 0x0000000000000000 |                    |
   56 | nlinks      | 0x00007f7f3cbc0750 |                    |
  616 | mbuf        | 0x0000000000000000 |                    |
  640 | mbuf_sz     | 0x0000000000000000 |                    |
  464 | dictionary  | 0x0000000000000000 |                    |
  472 | seq..clock  | 0x0000000000000000 |                    |
  480 | seq..astcnt | 0x0000000000000000 |                    |
  488 | seq..token  | 0xfffffffffffffffb |                    |
  496 | intial[0]   | 0x000000000000320b |                    |
  504 | intial[1]   | 0x0000000000000c8b |                    |
  512 | intial[2]   | 0x0000000000000002 |                    |
  520 | current     | 0x00007f7f3be87c20 | 0x000000000000ed8b |
  296 | cp          | 0x00007f7f3d3a5100 | 0x0000000000440848 |
  304 | i           | 0x00007f7f3be87c38 | 0x000000000044353a |
  312 | catches     | 0x0000000000000001 |                    |
  224 | arity       | 0x0000000000000000 |                    |
  232 | arg_reg     | 0x00007f7f3cbc04f8 | 0x000000000000320b |
  240 | max_arg_reg | 0x0000000000000006 |                    |
  248 | def..reg[0] | 0x000000000000320b |                    |
  256 | def..reg[1] | 0x0000000000000c8b |                    |
  264 | def..reg[2] | 0x00007f7f33ec9589 |                    |
  272 | def..reg[3] | 0x0000000000000000 |                    |
  280 | def..reg[4] | 0x0000000000000000 |                    |
  288 | def..reg[5] | 0x00000000000007d0 |                    |
  136 | nsp         | 0x0000000000000000 |                    |
  144 | nstack      | 0x0000000000000000 |                    |
  152 | nstend      | 0x0000000000000000 |                    |
  160 | ncallee     | 0x0000000000000000 |                    |
   56 | ncsp        | 0x0000000000000000 |                    |
   64 | narity      | 0x0000000000000000 |                    |
---------------------------------------------------------------
true
4>
```

Now, armed with these inspection tools, we are ready to look at what these fields in the PCB mean.

The Process Control Block contains all the fields that control the behaviour and current state of a process. In this section and the rest of the chapter we will go through the most important fields. We will leave out some fields that have to do with execution and tracing from this chapter, instead we will cover those in [CH-BEAM].

If you want to dig even deeper than we will go in this chapter, you can look at the C source code. The PCB is implemented as a C struct called process in the file erl_process.h.

The field id contains the process ID (or PID).

```
    0 | id          | 0x000001d0000003a3 |                    |
```

The process ID is an Erlang term and hence tagged (see [CH-TypeSystem]). This means that the 4 least significant bits are a tag (0011). In the code section there is a module for inspecting Erlang terms (see show.erl) which we will cover in the chapter on types. We can use it now to examine the type of a tagged word, though.

```
4> show:tag_to_type(16#0000001d0000003a3).
pid
5>
```

The fields htop and stop are pointers to the top of the heap and the stack; that is, they point to the next free slots on the heap or stack. The fields heap (start) and hend point to the start and the end of the whole heap, and heap_sz gives the size of the heap in words. That is, hend - heap = heap_sz * 8 on a 64-bit machine and hend - heap = heap_sz * 4 on a 32-bit machine.
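The numbers in the hipe_bifs:show_pcb/1 printout above can be checked by hand. This sketch (the module pcb_math is hypothetical) takes the id, heap, hend, and heap_sz values from that printout and, assuming a 64-bit machine with 8-byte words, checks both the 4-bit tag of the pid and the relation hend - heap = heap_sz * 8:

```erlang
-module(pcb_math).
-export([tag/1, heap_bytes/2, check/0]).

%% The four least significant bits of a tagged word.
tag(Word) ->
    Word band 2#1111.

%% Distance in bytes between two heap pointers.
heap_bytes(Heap, HEnd) ->
    HEnd - Heap.

%% Values copied from the hipe_bifs:show_pcb/1 printout above.
check() ->
    Id       = 16#000001d0000003a3,
    Heap     = 16#00007f7f33f11470,
    HEnd     = 16#00007f7f33f16540,
    HeapSz   = 16#0000000000000a1a,   % 2586 words
    WordSize = 8,                     % assuming a 64-bit machine
    {tag(Id), heap_bytes(Heap, HEnd) =:= HeapSz * WordSize}.
```

pcb_math:check() returns {3, true}: the tag is 2#0011, and 0x7f7f33f16540 - 0x7f7f33f11470 = 20688 bytes, which is exactly 2586 words (0xa1a) of 8 bytes each.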

The field min_heap_size is the size, in words, that the heap starts with and below which it will not shrink; the default value is 233.
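The minimum heap size can also be raised for a single process with the spawn_opt/2 option {min_heap_size, Words}. In this sketch (the module minheap_demo is hypothetical) we assume that the runtime may round the requested value up to one of its internal heap sizes, so we only expect the reported values to be at least as large as the request:

```erlang
-module(minheap_demo).
-export([heap_with_min/1]).

%% Spawn an idle process with a larger minimum heap and return
%% {Requested, ActualHeapSize, MinHeapSizeSetting}, all in words.
heap_with_min(Words) ->
    Pid = spawn_opt(fun() -> receive stop -> ok end end,
                    [{min_heap_size, Words}]),
    {heap_size, Actual} = erlang:process_info(Pid, heap_size),
    {garbage_collection, GC} = erlang:process_info(Pid, garbage_collection),
    {min_heap_size, Min} = lists:keyfind(min_heap_size, 1, GC),
    Pid ! stop,
    {Words, Actual, Min}.
```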

We can now refine the picture of the process heap with the fields from the PCB that control the shape of the heap:

```
hend ->  +----+    -
         |    |    ^
         |    |    |             -
htop ->  |    |    | heap_sz*8   ^
         |....|    | hend-heap   | min_heap_size
         |....|    v             v
heap ->  +----+    -             -
             The Heap
```

But wait, how come we have a heap start and a heap end, but no start and stop for the stack? That is because the BEAM uses a trick to save space and pointers by allocating the heap and the stack together. It is time for our first revision of our process as memory picture. The heap and the stack are actually just one memory area:

```
+-------+  +-------+
|  PCB  |  | Stack |
+-------+  +-------+
           | free  |
+-------+  +-------+
| M-box |  | Heap  |
+-------+  +-------+
```

The stack grows towards lower memory addresses and the heap towards higher memory, so we can also refine the picture of the heap by adding the stack top pointer to the picture:

```
hend ->  +----+    -
         |....|    ^
stop ->  |    |    |
         |    |    |
         |    |    |          -
htop ->  |    |    | heap_sz  ^
         |....|    |          | min_heap_size
         |....|    v          v
heap ->  +----+    -          -
             The Heap
```

If the pointers htop and stop were to meet, the process would run out of free memory and would have to do a garbage collection to free up memory.

The heap memory management scheme is a per-process, copying, generational garbage collector. When there is no more space on the heap (or the stack, since they share the allocated memory block), the garbage collector kicks in to free up memory.

The GC allocates a new memory area called the to space. Then it goes through the stack to find all live roots and follows each root and copies the data on the heap to the new heap. Finally it also copies the stack to the new heap and frees up the old memory area.
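We can trigger a collection ourselves with erlang:garbage_collect/1 and compare heap sizes before and after. In this sketch (the module gc_demo is hypothetical) a process builds a list that becomes garbage immediately and then waits. The exact sizes depend on the OTP version and on whether the runtime had already collected on its own, so no particular numbers are claimed:

```erlang
-module(gc_demo).
-export([run/0]).

%% Returns {TotalHeapBefore, TotalHeapAfter} in words for a process
%% that allocated a list and dropped it before a forced collection.
run() ->
    Parent = self(),
    Pid = spawn(fun() ->
                        %% Allocate 100000 list cells on our own heap;
                        %% they are garbage as soon as length/1 returns.
                        _ = length(lists:seq(1, 100000)),
                        Parent ! {self(), ready},
                        receive stop -> ok end
                end),
    receive {Pid, ready} -> ok end,
    {total_heap_size, Before} = erlang:process_info(Pid, total_heap_size),
    %% Returns true when the (alive) process has been collected.
    true = erlang:garbage_collect(Pid),
    {total_heap_size, After} = erlang:process_info(Pid, total_heap_size),
    Pid ! stop,
    {Before, After}.
```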

The GC is controlled by these fields in the PCB:

```c
Eterm *high_water;
Eterm *old_hend;     /* Heap pointers for generational GC. */
Eterm *old_htop;
Eterm *old_heap;
Uint max_heap_size;  /* Maximum size of heap (in words). */
Uint16 gen_gcs;      /* Number of (minor) generational GCs. */
Uint16 max_gen_gcs;  /* Max minor gen GCs before fullsweep. */
```

Since the garbage collector is generational, it uses a heuristic to look only at new data most of the time. That is, in what is called a minor collection, the GC only looks at the top part of the stack and moves new data to the new heap. Old data, that is, data allocated below the high_water mark (see the figure below) on the heap, is moved to a special area called the old heap.

Most of the time, then, there is another heap area for each process: the old heap, handled by the fields old_heap, old_htop, and old_hend in the PCB. This almost brings us back to our original picture of a process as four memory areas:

```
+-------+               +-------+
|  PCB  |               | Stack |  +-------+ - old_hend
+-------+               +-------+  +       + - old_htop
                        | free  |  +-------+
+-------+ high_water -> +-------+  |  Old  |
| M-box |               | Heap  |  | Heap  |
+-------+               +-------+  +-------+ - old_heap
```

When a process starts there is no old heap, but as soon as young data has matured to old data and there is a garbage collection, the old heap is allocated. The old heap is garbage collected when there is a major collection, also called a full sweep. See [CH-Memory] for more details of how garbage collection works. In that chapter we will also look at how to track down and fix memory related problems.
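The max_gen_gcs and gen_gcs fields are what process_info/2 reports as fullsweep_after and minor_gcs in the garbage_collection item we saw earlier ({fullsweep_after,65535} matches max_gen_gcs = 0xffff in the PCB printout). A sketch (the module sweep_demo is hypothetical) that sets fullsweep_after for a new process with spawn_opt/2 and reads both values back:

```erlang
-module(sweep_demo).
-export([gc_settings/1]).

%% Spawn an idle process with the given fullsweep_after value and
%% return {FullsweepAfter, MinorGCs} as reported by process_info/2.
gc_settings(FullsweepAfter) ->
    Pid = spawn_opt(fun() -> receive stop -> ok end end,
                    [{fullsweep_after, FullsweepAfter}]),
    {garbage_collection, GC} = erlang:process_info(Pid, garbage_collection),
    {fullsweep_after, FA} = lists:keyfind(fullsweep_after, 1, GC),
    {minor_gcs, Minor} = lists:keyfind(minor_gcs, 1, GC),
    Pid ! stop,
    {FA, Minor}.
```

Setting fullsweep_after to 0 makes every collection a full sweep; the erlang module documentation mentions this as a way to get rid of binaries that are no longer used as soon as possible.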

Process communication is done through message passing. A process send is implemented so that a sending process copies the message from its own heap to the mailbox of the receiving process.
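Since the message ends up in the receiver's mailbox, a message that the receiver never matches simply stays there. A small sketch (the module mbox_demo is hypothetical) that sends N such messages and then reads message_queue_len:

```erlang
-module(mbox_demo).
-export([queue_after/1]).

%% Send N messages that the receiver never matches, then report its
%% message_queue_len. The receiver only accepts the atom stop.
queue_after(N) ->
    Pid = spawn(fun() -> receive stop -> ok end end),
    lists:foreach(fun(I) -> Pid ! {msg, I} end, lists:seq(1, N)),
    {message_queue_len, Len} = erlang:process_info(Pid, message_queue_len),
    Pid ! stop,
    Len.
```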

In the early days of Erlang, concurrency was implemented through multitasking in the scheduler. We will talk more about concurrency in the section about the scheduler later in this chapter; for now it is worth noting that in the first version of Erlang there was no parallelism and there could only be one process running at a time. In that version the sending process could write data directly on the receiving process' heap.

When multicore systems were introduced and the Erlang implementation was extended with several schedulers running processes in parallel, it was no longer safe to write directly on another process' heap without taking the main lock of the receiver. At this time the concept of m-bufs (also called heap fragments) was introduced. An m-buf is a memory area outside of a process heap where other processes can safely write data. If a sending process cannot get the lock, it writes to the m-buf instead. When all data of a message has been copied to the m-buf, the message is linked to the process through the mailbox. The linking (LINK_MESSAGE in erl_message.h) appends the message to the receiver’s message queue.

The garbage collector would then copy the messages onto the process' heap. To reduce the pressure on the GC the mailbox is divided into two lists, one containing seen messages and one containing new messages. The GC does not have to look at the new messages since we know they will survive (they are still in the mailbox) and that way we can avoid some copying.

In Erlang/OTP 19 a new per-process setting was introduced, message_queue_data, which can take the values on_heap or off_heap. When set to on_heap, the sending process will first try to take the main lock of the receiver and, if it succeeds, the message will be copied directly onto the receiver’s heap. This can only be done if the receiver is suspended and if no other process has grabbed the lock to send to the same process. If the sender cannot obtain the lock, it will allocate a heap fragment and copy the message there instead.

If the flag is set to off_heap, the sender will not try to get the lock and instead writes directly to a heap fragment. This reduces lock contention, but allocating a heap fragment is more expensive than writing directly to the already allocated process heap, and it can lead to larger memory usage: there might be a large, empty heap allocated while new messages are still written to new fragments.

With on_heap allocation all the messages, both directly allocated on the heap and messages in heap fragments, will be copied by the GC. If the message queue is large and many messages are not handled and therefore still are live, they will be promoted to the old heap and the size of the process heap will increase, leading to higher memory usage.
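The setting can be chosen when a process is spawned, with the spawn_opt/2 option {message_queue_data, off_heap}, or changed by the process itself with process_flag/2, and it can be read back with process_info/2. A sketch (the module mqd_demo is hypothetical), which needs Erlang/OTP 19 or later:

```erlang
-module(mqd_demo).
-export([spawned_off_heap/0, switch_in_new_process/0]).

%% Spawn an idle process whose message queue is kept off heap and
%% read the setting back from the outside with process_info/2.
spawned_off_heap() ->
    Pid = spawn_opt(fun() -> receive stop -> ok end end,
                    [{message_queue_data, off_heap}]),
    Info = erlang:process_info(Pid, message_queue_data),
    Pid ! stop,
    Info.

%% Let a fresh process change its own setting with process_flag/2,
%% which returns the previous value, and report {Previous, Current}.
%% Using a new process leaves the caller's setting untouched.
switch_in_new_process() ->
    Parent = self(),
    Pid = spawn(fun() ->
                        Prev = process_flag(message_queue_data, off_heap),
                        {message_queue_data, Now} =
                            erlang:process_info(self(), message_queue_data),
                        Parent ! {self(), Prev, Now}
                end),
    receive
        {Pid, Old, New} -> {Old, New}
    end.
```

In a node started with default settings, mqd_demo:switch_in_new_process() should return {on_heap, off_heap}, since on_heap is the default.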

All messages are added to a linked list (the mailbox) when the message has been copied to the receiving process. If the message is copied to the heap of the receiving process, the message is linked into the internal message queue (or seen messages) and examined by the GC.

In the off_heap allocation scheme, new messages are placed in the "external" message in queue and ignored by the GC.

We can now revise our picture of the process as four memory areas once more. Now the process is made up of five memory areas (two mailboxes) and a varying number of heap fragments (m-bufs):

```
+-------+             +-------+
|  PCB  |             | Stack |
+-------+             +-------+
                      | free  |
+-------+  +-------+  +-------+  +-------+
| M-box |  | M-box |  | Heap  |  |  Old  |
| intern|  |  inq  |  |       |  | Heap  |
+-------+  +-------+  +-------+  +-------+

+-------+  +-------+  +-------+  +-------+
| m-buf |  | m-buf |  | m-buf |  | m-buf |
+-------+  +-------+  +-------+  +-------+
```

Each mailbox consists of a length and two pointers, stored in the fields msg.len, msg.first, and msg.last for the internal queue and msg_inq.len, msg_inq.first, and msg_inq.last for the external in queue. There is also a pointer to the next message to look at (msg.save) to implement selective receive.

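Let us use our introspection tools to see how this works in more detail. We start by setting up a process with a message in the mailbox and then take a look at the PCB. (The hipe_bifs:show_pcb/1 BIF used here is only available in an emulator built with HiPE, which was removed in OTP 24.)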

4> P = spawn(fun() -> receive stop -> ok end end).
<0.63.0>
5> P ! start.
start
6> hipe_bifs:show_pcb(P).
...
408 | msg.first     | 0x00007fd40962d880 | |
416 | msg.last      | 0x00007fd40962d880 | |
424 | msg.save      | 0x00007fd40962d880 | |
432 | msg.len       | 0x0000000000000001 | |
696 | msg_inq.first | 0x0000000000000000 | |
704 | msg_inq.last  | 0x00007fd40a306238 | |
712 | msg_inq.len   | 0x0000000000000000 | |
616 | mbuf          | 0x0000000000000000 | |
640 | mbuf_sz       | 0x0000000000000000 | |
...

From this we can see that there is one message in the message queue and that the first, last and save pointers all point to this message.
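hipe_bifs:show_pcb/1 needs a HiPE-enabled emulator, but erlang:process_info/2 gives a higher-level view of the same mailbox on any system. A minimal sketch (hypothetical module name mbox_peek) that repeats the experiment above: the process only accepts stop, so the start message cannot be matched and stays queued.

```erlang
-module(mbox_peek).
-export([demo/0]).

%% A process that only accepts 'stop' is sent 'start', which it
%% cannot match, so 'start' remains in its mailbox.
demo() ->
    P = spawn(fun() -> receive stop -> ok end end),
    P ! start,
    %% With a list of items, process_info/2 returns a list of
    %% {Item, Value} pairs in the order requested.
    Info = process_info(P, [message_queue_len, messages]),
    P ! stop,
    Info.
```

The expected result is [{message_queue_len,1},{messages,[start]}], which matches msg.len being 1 in the PCB dump.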

As mentioned, we can force the message to end up in the in queue by setting the message_queue_data flag to off_heap. We can try this with the following program:

link:../code/processes_chapter/src/msg.erl[role=include]

With this program we can try sending a message on heap and off heap and look at the PCB after each send. With on heap we get the same result as in the previous experiment:

5> msg:send_on_heap().
...
408 | msg.first     | 0x00007fd4096283c0 | |
416 | msg.last      | 0x00007fd4096283c0 | |
424 | msg.save      | 0x00007fd40a3c1048 | |
432 | msg.len       | 0x0000000000000001 | |
696 | msg_inq.first | 0x0000000000000000 | |
704 | msg_inq.last  | 0x00007fd40a3c1168 | |
712 | msg_inq.len   | 0x0000000000000000 | |
616 | mbuf          | 0x0000000000000000 | |
640 | mbuf_sz       | 0x0000000000000000 | |
...

If we try sending to a process with the flag set to off_heap, the message ends up in the in queue instead:

6> msg:send_off_heap().
...
408 | msg.first     | 0x0000000000000000 | |
416 | msg.last      | 0x00007fd40a3c0618 | |
424 | msg.save      | 0x00007fd40a3c0618 | |
432 | msg.len       | 0x0000000000000000 | |
696 | msg_inq.first | 0x00007fd3b19f1830 | |
704 | msg_inq.last  | 0x00007fd3b19f1830 | |
712 | msg_inq.len   | 0x0000000000000001 | |
616 | mbuf          | 0x0000000000000000 | |
640 | mbuf_sz       | 0x0000000000000000 | |
...

We will ignore the distribution case for now; that is, we will not consider messages sent between Erlang nodes. Imagine two processes, P1 and P2. Process P1 wants to send a message (Msg) to process P2, as illustrated by this figure:

P1
+---------------------------------+
|  +-------+  +-------+ +-------+ |
|  |  PCB  |  | Stack | |  Old  | |
|  +-------+  +-------+ |  Heap | |
|             | free  | +-------+ |
|             |       |           |
|  +-------+  +-------+ +-------+ |
|  | M-box |  | Heap  | | M-box | |
|  | inq   |  | [Msg] | | intern| |
|  +-------+  +-------+ +-------+ |
+---------------------------------+
                  |
                  | P2 ! Msg
                  v
P2
+---------------------------------+
|  +-------+  +-------+ +-------+ |
|  |  PCB  |  | Stack | |  Old  | |
|  +-------+  +-------+ |  Heap | |
|             | free  | +-------+ |
|             |       |           |
|  +-------+  +-------+ +-------+ |
|  | M-box |  | Heap  | | M-box | |
|  | inq   |  |       | | intern| |
|  +-------+  +-------+ +-------+ |
+---------------------------------+

Process P1 will then take the following steps:

- Calculate the size of Msg.
- Allocate space for the message (on or off P2's heap, as described before).
- Copy Msg from P1's heap to the allocated space.
- Allocate and fill in an ErlMessage struct wrapping up the message.
- Link in the ErlMessage either in the ErlMsgQueue or in the ErlMsgInQueue.
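Step 1 has to walk the whole term, and the copy in step 3 does not preserve sharing between subterms by default. The internal, undocumented erts_debug module makes the difference visible: erts_debug:size/1 counts a shared subterm once, while erts_debug:flat_size/1 counts every reference separately, which is roughly what the copy will occupy. A minimal sketch (hypothetical module msg_size); the word counts assume that small integers are immediates and that a cons cell takes two words.

```erlang
-module(msg_size).
-export([sizes/0]).

%% Compare the size of a term with a shared subterm, counted with and
%% without sharing. Sizes are in machine words.
sizes() ->
    L = lists:seq(1, 10),   % 10 cons cells of 2 words each = 20 words
    T = {L, L},             % tuple: 1 header word + 2 element words
    Shared = erts_debug:size(T),      % L counted once:  3 + 20
    Flat = erts_debug:flat_size(T),   % L counted twice: 3 + 2 * 20
    {Shared, Flat}.
```

Sending T to another process would therefore copy about 43 words rather than 23.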

If process P2 is suspended, no other process is trying to send a message to P2, there is space on the heap, and the allocation strategy is on_heap, the message will end up directly on the heap:

P1
+---------------------------------+
|  +-------+  +-------+ +-------+ |
|  |  PCB  |  | Stack | |  Old  | |
|  +-------+  +-------+ |  Heap | |
|             | free  | +-------+ |
|             |       |           |
|  +-------+  +-------+ +-------+ |
|  | M-box |  | Heap  | | M-box | |
|  | inq   |  | [Msg] | | intern| |
|  +-------+  +-------+ +-------+ |
+---------------------------------+
                  |
                  | P2 ! Msg
                  v
P2
+---------------------------------+
|  +-------+  +-------+ +-------+ |
|  |  PCB  |  | Stack | |  Old  | |
|  +-------+  +-------+ |  Heap | |
|             | free  | +-------+ |
|             |       |           |
|  +-------+  +-------+ +-------+ |
|  | M-box |  | Heap  | | M-box | |
|  | inq   |  | [Msg] | | intern| |
|  |       |  |       | |       | |
|  |       |  |   ^   | | first | |
|  +-------+  +---|---+ +---|---+ |
|                 |         v     |
|                 |     +-------+ |
|                 |     |next []| |
|                 |     | m  *  | |
|                 |     +----|--+ |
|                 |          |    |
|                 +----------+    |
+---------------------------------+

If P1 cannot get the main lock of P2, or there is not enough space on P2's heap, and the allocation strategy is on_heap, the message will end up in an m-buf but be linked from the internal mailbox:

P1
+---------------------------------+
|  +-------+  +-------+ +-------+ |
|  |  PCB  |  | Stack | |  Old  | |
|  +-------+  +-------+ |  Heap | |
|             | free  | +-------+ |
|             |       |           |
|  +-------+  +-------+ +-------+ |
|  | M-box |  | Heap  | | M-box | |
|  | inq   |  | [Msg] | | intern| |
|  +-------+  +-------+ +-------+ |
+---------------------------------+
                  |
                  | P2 ! Msg
                  v
P2
+---------------------------------+
|  +-------+  +-------+ +-------+ |
|  |  PCB  |  | Stack | |  Old  | |
|  +-------+  +-------+ |  Heap | |
|             | free  | +-------+ |
|             |       |           |
|  +-------+  +-------+ +-------+ |
|  | M-box |  | Heap  | | M-box | |
|  | inq   |  |       | | intern| |
|  |       |  |       | |       | |
|  |       |  |       | | first | |
|  +-------+  +-------+ +---|---+ |
|             m-buf         v     |
|             +-------+ +-------+ |
|          +->| [Msg] | |next []| |
|          |  |       | | m  *  | |
|          |  +-------+ +----|--+ |
|          |                 |    |
|          +-----------------+    |
+---------------------------------+

After a GC the message will be moved into the heap.

If the allocation strategy is off_heap, the message will end up in an m-buf and be linked from the external mailbox:

P1
+---------------------------------+
|  +-------+  +-------+ +-------+ |
|  |  PCB  |  | Stack | |  Old  | |
|  +-------+  +-------+ |  Heap | |
|             | free  | +-------+ |
|             |       |           |
|  +-------+  +-------+ +-------+ |
|  | M-box |  | Heap  | | M-box | |
|  | inq   |  | [Msg] | | intern| |
|  +-------+  +-------+ +-------+ |
+---------------------------------+
                  |
                  | P2 ! Msg
                  v
P2
+---------------------------------+
|  +-------+  +-------+ +-------+ |
|  |  PCB  |  | Stack | |  Old  | |
|  +-------+  +-------+ |  Heap | |
|             | free  | +-------+ |
|             |       |           |
|  +-------+  +-------+ +-------+ |
|  | M-box |  | Heap  | | M-box | |
|  | inq   |  |       | | intern| |
|  |       |  |       | |       | |
|  | first |  |       | | first | |
|  +---|---+  +-------+ +-------+ |
|      v                m-buf     |
|  +--------+           +-----+   |
|  |next [] |           |     |   |
|  |        |           |     |   |
|  | m  *-------------->|[Msg]|   |
|  |        |           |     |   |
|  +--------+           +-----+   |
+---------------------------------+

After a GC the message will still be in the m-buf. Not until the message is received, and reachable from some other object on the heap or from the stack, will it be copied to the process heap during a GC.

Erlang supports selective receive, which means that a message that doesn't match can be left in the mailbox for a later receive. A process can also be suspended with messages in its mailbox when none of them match. The msg.save field contains a pointer to a pointer to the next message to look at.
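msg.save itself is not visible from Erlang, but its effect is. In this minimal sketch (hypothetical module name selective_demo) a fresh process sends itself two messages and receives only the second one; the first does not match, is skipped, and stays in the mailbox for a later receive.

```erlang
-module(selective_demo).
-export([demo/0]).

%% Run the experiment in a fresh process so that no unrelated
%% messages are in the mailbox, and return what it observed.
demo() ->
    Parent = self(),
    Pid = spawn(fun() -> Parent ! {self(), run()} end),
    receive {Pid, Result} -> Result end.

run() ->
    self() ! first,
    self() ! second,
    %% 'first' does not match this pattern, so receive skips past it
    %% and takes 'second'; 'first' is left in the mailbox.
    receive second -> ok end,
    {message_queue_len, Len} = process_info(self(), message_queue_len),
    {messages, Left} = process_info(self(), messages),
    {Len, Left}.
```

selective_demo:demo() should return {1,[first]}.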

In later chapters we will cover the details of m-bufs and how the garbage collector handles mailboxes, as well as how receive is implemented in the BEAM.

With the message_queue_data flag, introduced in Erlang 19, you can trade memory for execution time in a new way. If the receiving process is overloaded and holding on to the main lock, it might be a good strategy to use off_heap allocation so that the sending process can quickly dump the message in an m-buf.

If two processes have a nicely balanced producer-consumer behavior, where there is no real contention for the process lock, then allocation directly on the receiver's heap will be faster and use less memory.

If the receiver is backed up and is receiving more messages than it has time to handle, it might actually start using more memory, as messages are copied to the heap and migrated to the old heap. Since unseen messages are considered live, the heap will need to grow and use more memory.

In order to find out which allocation strategy is best for your system, you will need to benchmark and measure the behavior. The first and easiest test is probably to change the default allocation strategy at system start. The emulator flag +hmqd sets the default strategy to either off_heap or on_heap; if you start Erlang without this flag, the default is on_heap. By setting up your benchmark so that Erlang is started with +hmqd off_heap, you can test whether the system behaves better or worse when all processes use off-heap allocation. Then you might want to find bottleneck processes and test switching allocation strategies for those processes only.
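process_flag/2 only changes the flags of the calling process, so switching a single bottleneck process to off_heap has to be done from inside that process, for example early in its initialization code. A minimal sketch (hypothetical module mqd_switch), assuming the node runs with the default on_heap setting:

```erlang
-module(mqd_switch).
-export([demo/0]).

%% Spawn a process that switches its own message queue data setting
%% and reports the old and new values back to the caller.
demo() ->
    Parent = self(),
    Pid = spawn(fun() ->
                    %% process_flag/2 returns the previous value.
                    OldFlag = process_flag(message_queue_data, off_heap),
                    {message_queue_data, NewFlag} =
                        process_info(self(), message_queue_data),
                    Parent ! {self(), OldFlag, NewFlag}
                end),
    receive
        {Pid, Old, New} -> {Old, New}
    end.
```

With the default setting, mqd_switch:demo() should return {on_heap,off_heap}; on a node started with +hmqd off_heap both values would be off_heap.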

There is actually one more memory area in a process where Erlang terms can be stored, the Process Dictionary.

The Process Dictionary (PD) is a process-local key-value store. One advantage of this is that all keys and values are stored on the heap, so there is no copying as with send or an ETS table.
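The dictionary is accessed through BIFs such as put/2, get/1 and erase/1, all of which operate on the calling process only. A minimal sketch (hypothetical module pd_demo); the key is namespaced to make clashes with other users of the dictionary unlikely.

```erlang
-module(pd_demo).
-export([demo/0]).

%% Store, update, read and remove a key in the calling process's
%% dictionary.
demo() ->
    Key = {pd_demo, counter},
    undefined = put(Key, 1),   % put/2 returns the previous value
    1 = put(Key, 2),           % overwrite; the old value comes back
    Current = get(Key),
    Erased = erase(Key),       % erase/1 also returns the old value
    {Current, Erased, get(Key)}.
```

pd_demo:demo() returns {2,2,undefined}: get/1 returns undefined for a key that is not present.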

We can now update our view of a process with yet another memory area, PD, the process dictionary:

+-------+  +-------+  +-------+
|  PCB  |  | Stack |  |  PD   |
+-------+  +-------+  +-------+
           | free  |
+-------+  +-------+  +-------+ +-------+
| M-box |  | M-box |  | Heap  | |  Old  |
| intern|  | inq   |  |       | |  Heap |
+-------+  +-------+  +-------+ +-------+
+-------+  +-------+  +-------+ +-------+
| m-buf |  | m-buf |  | m-buf | | m-buf |
+-------+  +-------+  +-------+ +-------+

With such a small array, you are bound to get some collisions before the area grows. Each hash value points to a bucket with key-value pairs. The bucket is actually an Erlang list on the heap, and each entry in the list is a two-tuple ({Key, Value}), also stored on the heap.

Putting an element in the PD is not completely free: it will result in an extra tuple and a cons, and might trigger a garbage collection. Updating a key in the dictionary causes the whole bucket the key is in (the whole list) to be reallocated, to make sure we don't get pointers from the old heap to the new heap. (In [CH-Memory] we will see the details of how garbage collection works.)

In this chapter we have looked at how a process is implemented. In particular, we looked at how the memory of a process is organized, how message passing works, and the information in the PCB. We also looked at a number of tools for process introspection, such as erlang:process_info and the hipe_bifs:show_* BIFs.

Use the functions erlang:processes/0 and erlang:process_info/1,2 to inspect the processes in the system. Here are some functions to try:

1> Ps = erlang:processes().
[<0.0.0>,<0.3.0>,<0.6.0>,<0.7.0>,<0.9.0>,<0.10.0>,<0.11.0>,
 <0.12.0>,<0.13.0>,<0.14.0>,<0.15.0>,<0.16.0>,<0.17.0>,
 <0.19.0>,<0.20.0>,<0.21.0>,<0.22.0>,<0.23.0>,<0.24.0>,
 <0.25.0>,<0.26.0>,<0.27.0>,<0.28.0>,<0.29.0>,<0.33.0>]
2> P = self().
<0.33.0>
3> erlang:process_info(P).
[{current_function,{erl_eval,do_apply,6}},
 {initial_call,{erlang,apply,2}},
 {status,running},
 {message_queue_len,0},
 {messages,[]},
 {links,[<0.27.0>]},
 {dictionary,[]},
 {trap_exit,false},
 {error_handler,error_handler},
 {priority,normal},
 {group_leader,<0.26.0>},
 {total_heap_size,17730},
 {heap_size,6772},
 {stack_size,24},
 {reductions,25944},
 {garbage_collection,[{min_bin_vheap_size,46422},
                      {min_heap_size,233},
                      {fullsweep_after,65535},
                      {minor_gcs,1}]},
 {suspending,[]}]
4> lists:keysort(2,[{P,element(2,erlang:process_info(P,
       total_heap_size))} || P <- Ps]).
[{<0.10.0>,233},
 {<0.13.0>,233},
 {<0.14.0>,233},
 {<0.15.0>,233},
 {<0.16.0>,233},
 {<0.17.0>,233},
 {<0.19.0>,233},
 {<0.20.0>,233},
 {<0.21.0>,233},
 {<0.22.0>,233},
 {<0.23.0>,233},
 {<0.25.0>,233},
 {<0.28.0>,233},
 {<0.29.0>,233},
 {<0.6.0>,752},
 {<0.9.0>,752},
 {<0.11.0>,1363},
 {<0.7.0>,1597},
 {<0.0.0>,1974},
 {<0.24.0>,2585},
 {<0.26.0>,6771},
 {<0.12.0>,13544},
 {<0.33.0>,13544},
 {<0.3.0>,15143},
 {<0.27.0>,32875}]
9>
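The keysort in step 4 can be turned into a small reusable helper. The sketch below (hypothetical module proc_top) returns the N processes with the largest value for a given process_info/2 item, largest first. It also skips processes that exit between the calls to erlang:processes/0 and erlang:process_info/2: for those, process_info/2 returns undefined, which would make element/2 in the one-liner above fail.

```erlang
-module(proc_top).
-export([top/2]).

%% Return the N processes with the largest value for Item, for example
%% total_heap_size, message_queue_len or reductions, largest first.
top(Item, N) ->
    Values = [{Pid, Value}
              || Pid <- erlang:processes(),
                 %% A dead process yields 'undefined', which does not
                 %% match this pattern, so the generator skips it.
                 {_, Value} <- [erlang:process_info(Pid, Item)]],
    lists:sublist(lists:reverse(lists:keysort(2, Values)), N).
```

For example, proc_top:top(message_queue_len, 5) lists the five processes with the longest mailboxes, which are natural candidates for the off_heap experiments described earlier.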