Note: This is not a comprehensive list of architectures used in NLP. There may be other approaches; I cover the most commonly used ones. Please feel free to provide feedback or suggest other methods.

Recurrent networks (RNN, LSTM, GRU) have proven to be among the most important building blocks in NLP applications because their architecture is designed for sequences. Many problems require remembering earlier parts of a sequence; for example, to predict the emotion in a scene, the previous scenes need to be remembered.

My focus here is on how to use RNNs and their variants in PyTorch, and on understanding the inputs and outputs of single-layer, multi-layer, uni-directional and bi-directional RNNs and their variants.

Please go through the following resources for better conceptual understanding:

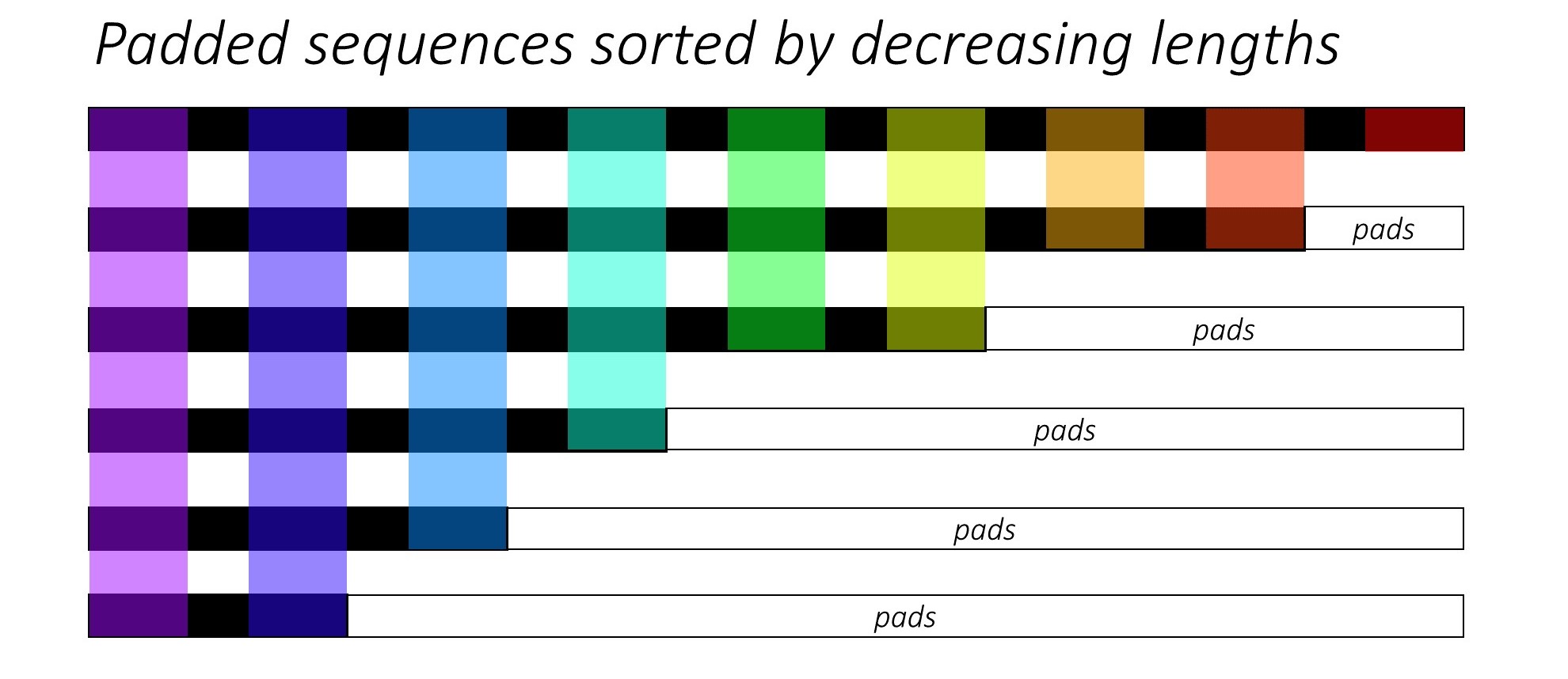

When training an RNN (LSTM, GRU or vanilla RNN), it is difficult to batch variable-length sequences. For example, if the lengths of the sequences in a batch of size 6 are [6, 2, 9, 4, 8, 3], you will pad all the sequences, which results in 6 sequences of length 9. You would end up doing 54 computations (6x9), but you needed to do only 32 (the sum of the actual lengths). Moreover, if you wanted to do something fancier like using a bidirectional RNN, it would be harder to do batch computations just by padding, and you might end up doing more computations than required.

Instead, PyTorch allows us to pack the sequences. Internally, a packed sequence is essentially a tuple of two tensors. One contains the elements of the sequences, interleaved by time step, and the other contains the batch size at each time step. This helps in recovering the actual sequences as well as telling the RNN what the batch size is at each time step. The packed sequence can be passed to the RNN, which will internally optimize the computations.
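To make the packed layout concrete, here is a small pure-Python sketch of the idea. It is only an illustration: the `pack` helper and the toy batch are my own assumptions, and in PyTorch the actual packing is done by `torch.nn.utils.rnn.pack_padded_sequence`.

```python
def pack(sequences):
    """Return (data, batch_sizes) for a list of variable-length sequences."""
    # Sort longest-first so the sequences still active at a step form a prefix.
    ordered = sorted(sequences, key=len, reverse=True)
    data, batch_sizes = [], []
    for t in range(len(ordered[0])):
        # Only sequences that still have an element at time step t take part.
        step = [seq[t] for seq in ordered if len(seq) > t]
        data.extend(step)               # elements interleaved by time step
        batch_sizes.append(len(step))   # batch size at this time step
    return data, batch_sizes


lengths = [6, 2, 9, 4, 8, 3]
# Toy batch: element t of sequence i is just the pair (i, t).
batch = [[(i, t) for t in range(n)] for i, n in enumerate(lengths)]

data, batch_sizes = pack(batch)
padded_steps = len(batch) * max(lengths)

print(batch_sizes)   # [6, 6, 5, 4, 3, 3, 2, 2, 1]
print(len(data))     # 32 real elements
print(padded_steps)  # 54 elements after padding
```

The batch sizes shrink as the shorter sequences run out, so the RNN only processes the 32 real elements instead of the 54 padded ones.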

Resources:

The attention mechanism was born to help memorize long source sentences in neural machine translation (NMT). Rather than building a single context vector out of the encoder's last hidden state, attention is used to focus more on the relevant parts of the input while decoding a sentence. There are various types of attention mechanisms. Here I will point out the most used ones.

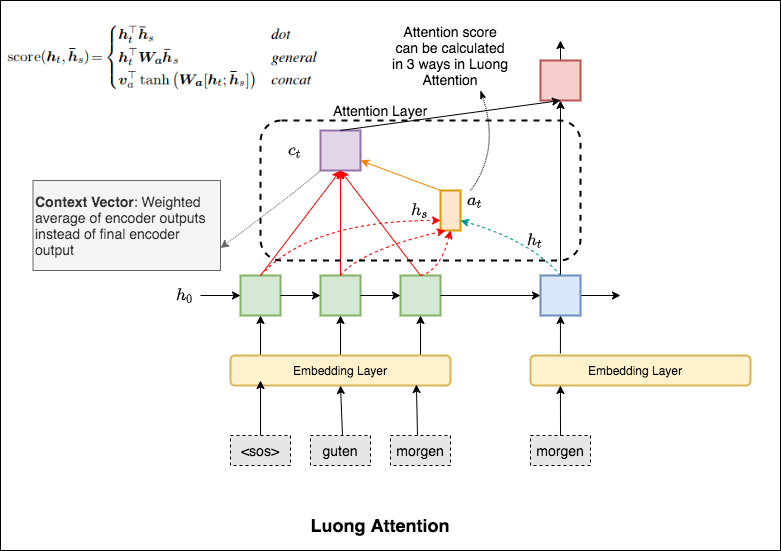

The context vector is created from the encoder outputs and the current output of the decoder RNN.

The attention score can be calculated in three ways: dot, general and concat.
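As a rough sketch, the three score functions can be written as below. This is plain Python on toy vectors rather than the PyTorch implementation; the helper names, the weight matrix `W` and the vector `v` are assumptions for illustration (in a real model they are learned parameters).

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(M, v):
    return [dot(row, v) for row in M]


def score_dot(h_t, h_s):
    # dot: h_t . h_s
    return dot(h_t, h_s)


def score_general(h_t, h_s, W):
    # general: h_t^T W h_s
    return dot(h_t, matvec(W, h_s))


def score_concat(h_t, h_s, W, v):
    # concat: v^T tanh(W [h_t; h_s])
    hidden = [math.tanh(x) for x in matvec(W, h_t + h_s)]
    return dot(v, hidden)


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


# Toy values: three encoder outputs and the current decoder output.
encoder_outputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_output = [2.0, 0.0]

scores = [score_dot(decoder_output, h_s) for h_s in encoder_outputs]
weights = softmax(scores)
# Context vector: attention-weighted sum of the encoder outputs.
context = [sum(w * h[i] for w, h in zip(weights, encoder_outputs)) for i in range(2)]
```

Whichever score function is used, the scores are normalized with a softmax and the context vector is the weighted sum of the encoder outputs.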

Resources:

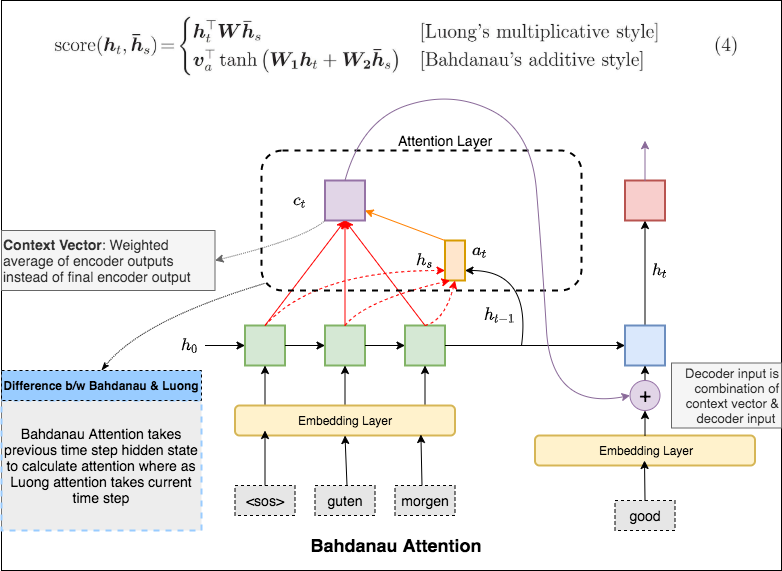

The context vector is created from the encoder outputs and the previous hidden state of the decoder RNN. The context vector is combined with the decoder input embedding and fed as input to the decoder RNN.

Bahdanau attention is also called additive attention.
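The name "additive" comes from the form of the score, which can be written roughly as follows. The symbols are my own notation: $s_{t-1}$ is the previous decoder hidden state, $h_i$ is the $i$-th encoder output, and $W_a$, $U_a$, $v_a$ are learned parameters.

$$
e_{t,i} = v_a^\top \tanh\left(W_a s_{t-1} + U_a h_i\right), \qquad
\alpha_{t,i} = \frac{\exp(e_{t,i})}{\sum_{j} \exp(e_{t,j})}, \qquad
c_t = \sum_{i} \alpha_{t,i} h_i
$$

Here $c_t$ is the context vector that is combined with the decoder input embedding at step $t$.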

Resources:

- Floyd Hub article on attention mechanisms

- Lilian Blog on attention mechanisms

- Bahdanau Attention Paper

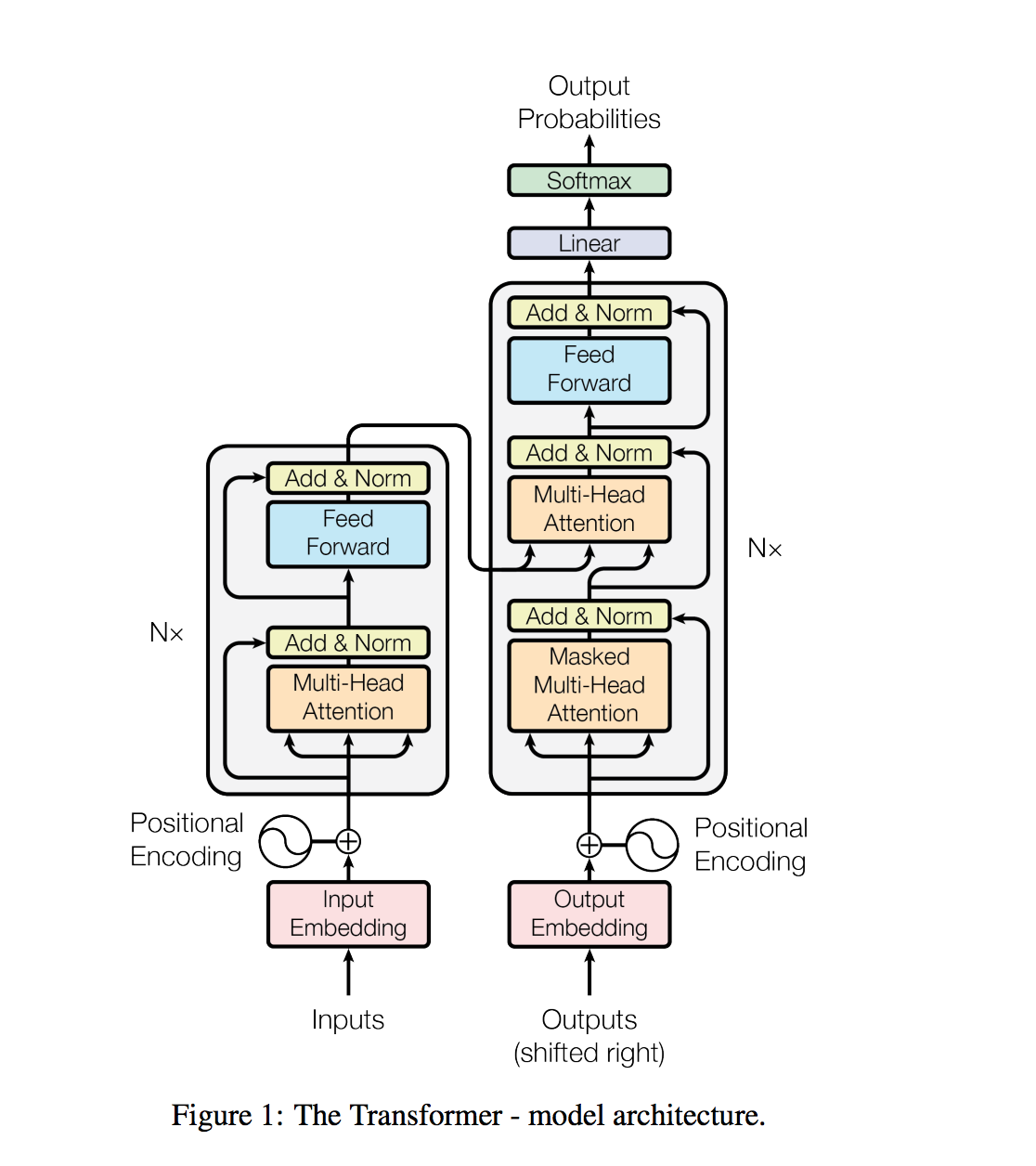

Attention mechanisms have become an integral part of compelling sequence modeling and transduction models in various tasks, allowing modeling of dependencies without regard to their distance in the input or output sequences. In most cases, however, such attention mechanisms are used in conjunction with a recurrent network.

The Transformer is a model architecture that eschews recurrence and instead relies entirely on an attention mechanism to draw global dependencies between input and output.
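The core operation is scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V. Below is a minimal pure-Python sketch on toy vectors, without the learned projections, multiple heads or masking of the full model; the function names are my own.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted sum of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


# Self-attention: queries, keys and values all come from the same sequence.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = scaled_dot_product_attention(x, x, x)
```

Every position attends to every other position in a single step, which is how global dependencies are captured without recurrence.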

Resources:

The GPT-2 paper states that:

Natural language processing tasks, such as question answering, machine translation, reading comprehension, and summarization, are typically approached with supervised learning on task-specific datasets. We demonstrate that language models begin to learn these tasks without any explicit supervision when trained on a new dataset of millions of webpages called WebText. Our largest model, GPT-2, is a 1.5B parameter Transformer that achieves state of the art results on 7 out of 8 tested language modeling datasets in a zero-shot setting but still underfits WebText. Samples from the model reflect these improvements and contain coherent paragraphs of text. These findings suggest a promising path towards building language processing systems which learn to perform tasks from their naturally occurring demonstrations.

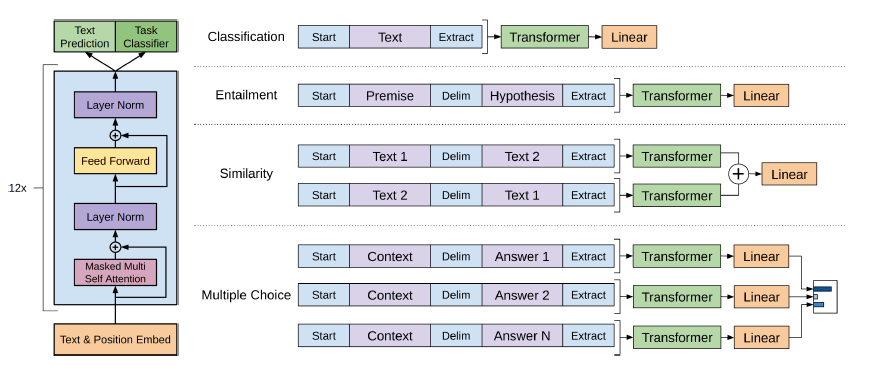

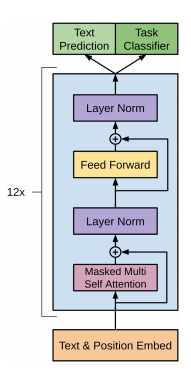

The smallest GPT-2 model uses a 12-layer decoder-only Transformer architecture.
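"Decoder-only" means that each position can attend only to itself and to earlier positions. A minimal sketch of this causal masking is shown below; the function name and the toy scores are assumptions for illustration and are not taken from the GPT-2 code.

```python
import math


def causal_attention_weights(scores):
    """Row i of `scores` holds query i's scores against every key position."""
    weights = []
    for i, row in enumerate(scores):
        # Position i may only look at positions 0..i; later ones are masked out.
        visible = row[: i + 1]
        m = max(visible)
        exps = [math.exp(s - m) for s in visible]
        total = sum(exps)
        weights.append([e / total for e in exps] + [0.0] * (len(row) - i - 1))
    return weights


# With equal scores, each row spreads its weight uniformly over the visible prefix.
scores = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
weights = causal_attention_weights(scores)
```

The resulting weight matrix is lower triangular, so the prediction for a token never depends on tokens that come after it.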

There are different size variants of GPT-2.

I merely replicated the code from the Annotated GPT-2 post to understand the architecture.

Resources:

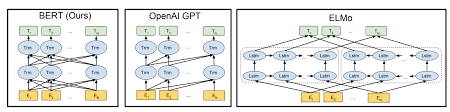

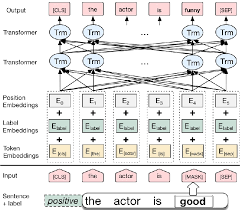

At the end of 2018, researchers at Google AI Language open-sourced a new technique for Natural Language Processing (NLP) called BERT (Bidirectional Encoder Representations from Transformers), a major breakthrough that took the deep learning community by storm because of its strong performance.

Main takeaways:

- Language modeling is an effective task for using unlabeled data to pretrain neural networks in NLP.

- Traditional language models take the previous n tokens and predict the next one. In contrast, BERT trains a language model that takes both the previous and next tokens into account when predicting.

- BERT is also trained on a next sentence prediction task to better handle tasks that require reasoning about the relationship between two sentences (e.g. whether two questions are similar).

- BERT uses the Transformer architecture for encoding sentences.

- BERT performs better when given more parameters, even on small datasets.
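To use both the previous and next tokens, BERT is trained with masked language modeling: some input tokens are hidden and the model has to recover them from the surrounding context. The sketch below follows the masking recipe described in the BERT paper (about 15% of tokens are selected; of those, 80% become `[MASK]`, 10% become a random token and 10% stay unchanged). The function name and the toy sentence are assumptions, and `mask_prob` is raised here only so that the short example masks something.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)  # the model must recover the original token
            roll = rng.random()
            if roll < 0.8:
                inputs.append(MASK)               # 80%: replace with [MASK]
            elif roll < 0.9:
                inputs.append(rng.choice(vocab))  # 10%: replace with a random token
            else:
                inputs.append(tok)                # 10%: keep the token unchanged
        else:
            labels.append(None)  # position not used in the loss
            inputs.append(tok)
    return inputs, labels


tokens = "the quick brown fox jumps over the lazy dog".split()
inputs, labels = mask_tokens(tokens, vocab=sorted(set(tokens)), mask_prob=0.3)
print(inputs)
print(labels)
```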

Similar to GPT-2, BERT is also available in different sizes.

There are many good resources available online for understanding the BERT architecture, and I can't explain it any better than they do. So here I try to implement a basic version of BERT. Refer to the following resources for a better understanding of BERT.

Resources:

- BERT Explained - TowardsML

- Demystifying BERT - Analytics Vidhya

- BERT paper dissected - ML Explained

- Visual guide to BERT by Jay Alammar

- BERT paper

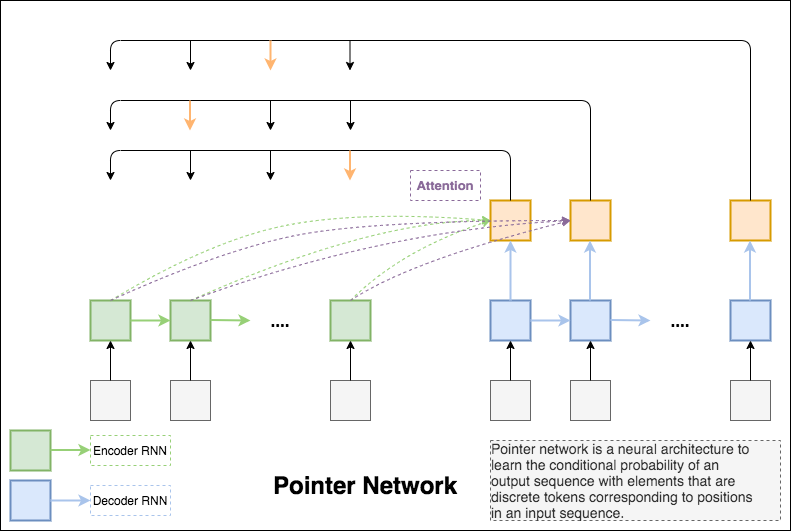

Pointer networks are sequence-to-sequence models where the output is discrete tokens corresponding to positions in an input sequence. The main differences between pointer networks and standard seq2seq models are:

- The output of pointer networks is discrete and corresponds to positions in the input sequence.

- The number of target classes in each step of the output depends on the length of the input, which is variable.

Pointer networks are suitable for problems like sorting, word ordering, or combinatorial problems such as finding convex hulls and the traveling salesperson problem. One common characteristic of all these problems is that the size of the target dictionary varies depending on the input length.

A pointer network solves the problem of variable-size output dictionaries using a mechanism of neural attention. It differs from previous attention approaches in that, instead of using attention to blend the hidden units of an encoder into a context vector at each decoder step, it uses attention as a pointer to select a member of the input sequence as the output.
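The "attention as a pointer" idea can be sketched in a few lines. For brevity this toy version scores positions with a plain dot product, which is a simplification on my part (the Pointer Networks paper uses an additive score), and all names and values are hypothetical.

```python
import math


def pointer_distribution(decoder_state, encoder_states):
    # One score per input position; the softmax over them is the output distribution.
    scores = [sum(d * e for d, e in zip(decoder_state, enc)) for enc in encoder_states]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def point(decoder_state, encoder_states):
    probs = pointer_distribution(decoder_state, encoder_states)
    # The output is an index into the input, not a token from a fixed vocabulary.
    return max(range(len(probs)), key=probs.__getitem__)


encoder_states = [[0.1, 0.9], [0.8, 0.2], [0.5, 0.5], [0.3, 0.1]]
decoder_state = [1.0, 0.0]

probs = pointer_distribution(decoder_state, encoder_states)
index = point(decoder_state, encoder_states)
print(index)  # 1
```

The distribution always has exactly as many entries as there are input elements, which is how the variable-size output dictionary is handled.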

Resources:

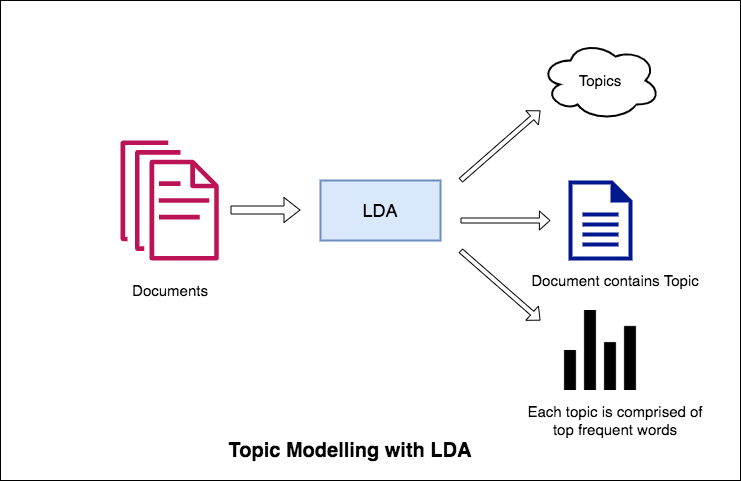

One of the primary applications of natural language processing is to automatically extract the topics people are discussing from large volumes of text. Examples of such text include social media feeds, customer reviews of hotels and movies, user feedback, news stories, and e-mails of customer complaints.

Knowing what people are talking about and understanding their problems and opinions is highly valuable to businesses, administrators and political campaigns. And it’s really hard to manually read through such large volumes and compile the topics.

What is needed is an automated algorithm that can read through the text documents and output the topics discussed.

In this notebook, we will take a real example of the 20 Newsgroups dataset and use LDA to extract the naturally discussed topics.

LDA’s approach to topic modeling is to consider each document as a collection of topics in a certain proportion, and each topic as a collection of keywords, again in a certain proportion.

Once you provide the algorithm with the number of topics, all it does is rearrange the topic distribution within the documents and the keyword distribution within the topics to obtain a good composition of the topic-keyword distribution.
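The two levels of proportions can be illustrated with a toy example. The topics, words and probabilities below are made up, and the sketch only shows the structure of the model (how a word's probability in a document follows from the two distributions), not how LDA fits them.

```python
# Hypothetical topic -> keyword proportions (each row sums to 1).
topics = {
    "sports": {"game": 0.5, "team": 0.4, "vote": 0.1},
    "politics": {"game": 0.1, "team": 0.1, "vote": 0.8},
}

# Hypothetical document -> topic proportions (sums to 1).
doc_topics = {"sports": 0.7, "politics": 0.3}


def word_probability(word, doc_topics, topics):
    # p(word | doc) = sum over topics of p(topic | doc) * p(word | topic)
    return sum(p_topic * topics[topic].get(word, 0.0) for topic, p_topic in doc_topics.items())


probs = {w: word_probability(w, doc_topics, topics) for w in ["game", "team", "vote"]}
print(probs)  # roughly {'game': 0.38, 'team': 0.31, 'vote': 0.31}
```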

Resources:

- Youtube video on LDA

- LDA Tutorial

- Towardsdatascience blog on LDA

- Analyticsvidhya blog on LDA

- Machinelearningplus blog on LDA

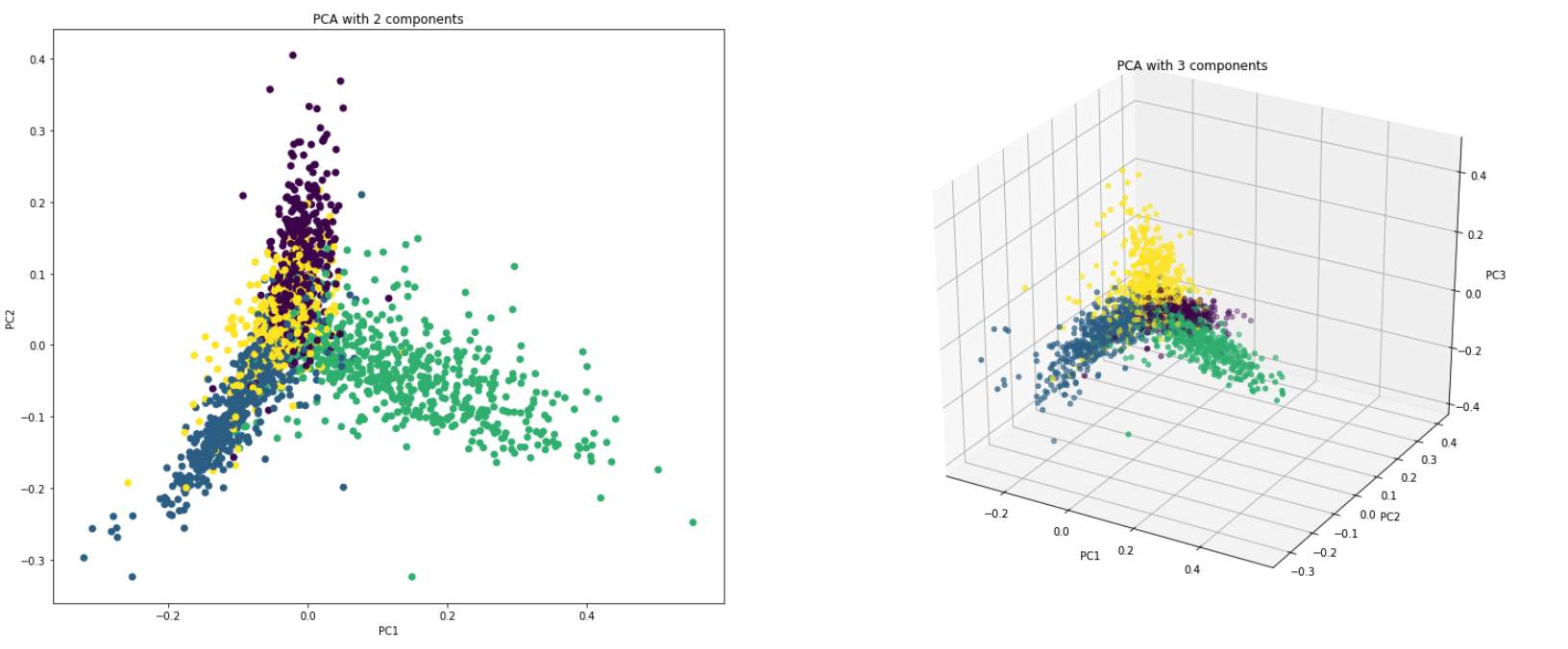

PCA is fundamentally a dimensionality reduction technique that transforms the columns of a dataset into a new set of features. It does this by finding a new set of directions (like X and Y axes) that explain the maximum variability in the data. The axes of this new coordinate system are called Principal Components (PCs).

Practically PCA is used for two reasons:

- Dimensionality Reduction: The information distributed across a large number of columns is transformed into principal components (PCs) such that the first few PCs can explain a sizeable chunk of the total information (variance). These PCs can be used as explanatory variables in Machine Learning models.

- Visualize Data: Visualising the separation of classes (or clusters) is hard for data with more than 3 dimensions (features). With just the first two PCs, it’s usually possible to see a clear separation.
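For intuition, here is a minimal sketch of PCA on 2-D data in plain Python, using the closed-form eigen-decomposition of a 2x2 covariance matrix. The function name and the toy points are assumptions; for real data one would normally use a library implementation instead.

```python
import math


def pca_2d(points):
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    centered = [(x - mean_x, y - mean_y) for x, y in points]

    # Entries of the sample covariance matrix [[sxx, sxy], [sxy, syy]].
    sxx = sum(x * x for x, _ in centered) / (n - 1)
    syy = sum(y * y for _, y in centered) / (n - 1)
    sxy = sum(x * y for x, y in centered) / (n - 1)

    # Eigenvalues of a symmetric 2x2 matrix = variance along each PC.
    half_trace = (sxx + syy) / 2
    root = math.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)
    eigenvalues = (half_trace + root, half_trace - root)

    # Direction of the first principal component (largest variance).
    angle = 0.5 * math.atan2(2 * sxy, sxx - syy)
    pc1 = (math.cos(angle), math.sin(angle))

    explained = eigenvalues[0] / (eigenvalues[0] + eigenvalues[1])
    projected = [x * pc1[0] + y * pc1[1] for x, y in centered]
    return pc1, explained, projected


# Toy data lying close to the line y = x.
points = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.8)]
pc1, explained, projected = pca_2d(points)
print(pc1, explained)
```

Because the points are nearly collinear, the first PC alone explains almost all of the variance, so the 2-D data can be reduced to the 1-D `projected` values with little loss.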

Use the following resources to understand how PCA works:

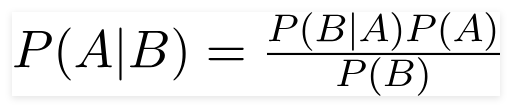

A Naive Bayes classifier is a probabilistic machine learning model that’s used for classification tasks. The crux of the classifier is Bayes' theorem.

Using Bayes' theorem, we can find the probability of A happening, given that B has occurred. Here, B is the evidence and A is the hypothesis. The assumption made here is that the predictors/features are independent. That is, the presence of one particular feature does not affect the others. Hence it is called naive.

Types of Naive Bayes Classifier:

Multinomial Naive Bayes:

This is mostly used when the variables are discrete (like words). The features/predictors used by the classifier are the frequency of the words present in the document.

Gaussian Naive Bayes:

When the predictors take continuous rather than discrete values, we assume that these values are sampled from a Gaussian distribution.

Bernoulli Naive Bayes:

This is similar to multinomial Naive Bayes, but the predictors are boolean variables. The parameters that we use to predict the class variable take only the values yes or no, for example whether a word occurs in the text or not.
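As an illustration of the multinomial variant, here is a minimal sketch in plain Python with add-one (Laplace) smoothing. The helper names and the tiny training set are made up for the example; this is not the notebook's implementation.

```python
import math
from collections import Counter, defaultdict


def train(docs):
    """docs: list of (list_of_words, label) pairs."""
    class_counts = Counter(label for _, label in docs)
    word_counts = defaultdict(Counter)
    for words, label in docs:
        word_counts[label].update(words)  # word frequencies per class
    vocab = {w for words, _ in docs for w in words}
    return class_counts, word_counts, vocab


def predict(words, model):
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in class_counts.items():
        # log prior + sum of log likelihoods (the "naive" independence assumption)
        score = math.log(count / total_docs)
        total_words = sum(word_counts[label].values())
        for w in words:
            if w not in vocab:
                continue  # ignore words never seen in training
            score += math.log((word_counts[label][w] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


docs = [
    ("the team won the game".split(), "sports"),
    ("great goal by the team".split(), "sports"),
    ("the senate passed the vote".split(), "politics"),
    ("election vote results".split(), "politics"),
]
model = train(docs)
print(predict("the team scored a goal".split(), model))  # sports
print(predict("vote results".split(), model))            # politics
```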

Naive Bayes classification is explored using the 20 Newsgroups dataset.

Resources:

- Multinomial Naive Bayes video by StatQuest

- Gaussian Naive Bayes video by StatQuest

- Machinelearningplus blog on Naive Bayes

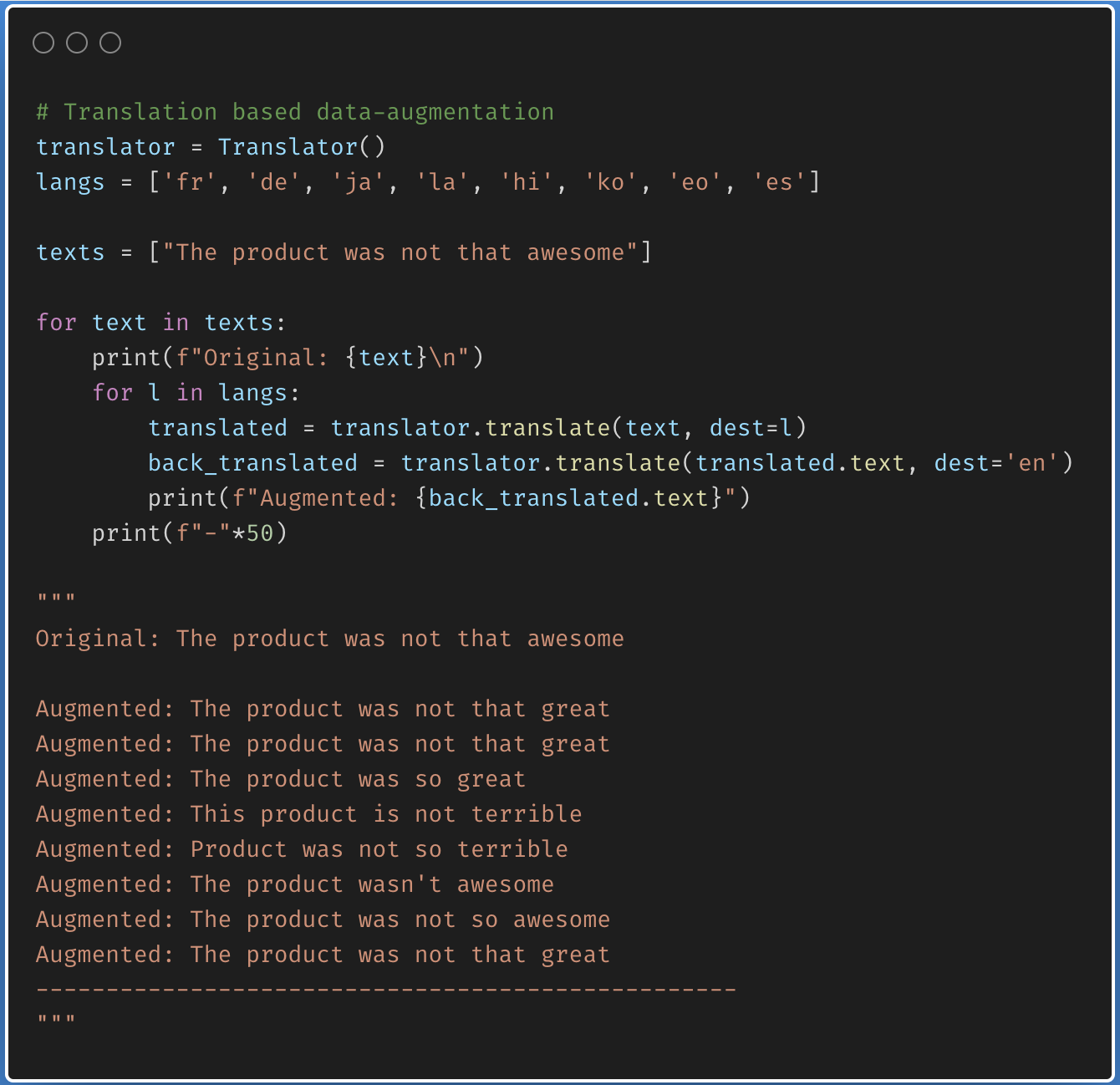

In computer vision, image data augmentation is standard practice. This is because trivial operations on images, like rotating an image a few degrees or converting it to grayscale, do not change its semantics. In natural language processing (NLP), by contrast, augmenting text is hard due to the high complexity of language.

Data Augmentation using the following techniques is explored:

- Synonym-based Substitution

- Antonym-based Substitution

- Back Translation

- Text Surface Transformation

- Random Noise Injection

- Word Embedding based Substitution

- Contextual Word Embeddings (BERT family) based Substitution
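Two of the simpler techniques can be sketched in a few lines of plain Python. The synonym table, the function names and the choice of a random word swap as the noise are all assumptions for illustration; a real pipeline would draw synonyms from a lexical resource or an embedding model.

```python
import random

# Hypothetical, hand-written synonym table.
SYNONYMS = {"quick": ["fast", "speedy"], "happy": ["glad", "joyful"]}


def synonym_substitute(tokens, synonyms, rng):
    """Synonym-based substitution: replace known words with a random synonym."""
    return [rng.choice(synonyms[t]) if t in synonyms else t for t in tokens]


def random_swap(tokens, rng):
    """Random noise injection: swap two randomly chosen positions."""
    tokens = list(tokens)
    if len(tokens) >= 2:
        i, j = rng.sample(range(len(tokens)), 2)
        tokens[i], tokens[j] = tokens[j], tokens[i]
    return tokens


rng = random.Random(0)
sentence = "the quick dog looks happy".split()

substituted = synonym_substitute(sentence, SYNONYMS, rng)
swapped = random_swap(sentence, rng)
print(" ".join(substituted))
print(" ".join(swapped))
```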

Resources: