The community continues to benefit from Kubernetes the Hard Way by Kelsey Hightower, which shows step by step how the components work together and how to configure them in a reasonably secure manner. This guide uses a similar but slightly different approach. It demonstrates how the security-related settings inside Kubernetes actually work from the ground up, one change at a time, validated by real attacks where possible.

By following this guide, you will start by configuring one of the least secure clusters possible. Each step follows the pattern of a) educate, b) attack, c) harden, and d) verify, in order of security importance and maturity. By the end of the guide, you will have hacked your cluster several times over. You will also understand the configuration changes needed to prevent each of those attacks.

The cluster built in this tutorial is not production ready (especially at the beginning), but the concepts you learn apply directly to your production clusters.

The target audience for this tutorial is someone who has a working knowledge of running a Kubernetes cluster (or has completed the Kubernetes the Hard Way tutorial) and wants to understand in depth how each security-related setting works.

- AWS EC2

- Ubuntu 16.04 LTS (search for `16.04 LTS hvm:ebs-ssd` when choosing the AMI)

- Docker 1.13.x

- CNI Container Networking 0.6.0

- etcd 3.2.11

- Kubernetes 1.9.2

- AWS account credentials with permissions to:

  - Create/delete VPCs (subnets, route tables, internet gateways)

  - Create/delete CloudFormation stacks

  - Create/delete EC2 resources (security groups, key pairs, instances)

- AWS CLI tools configured to use that account

- bash

- git

- dig

- kubectl (v1.9.2)

- cfssl

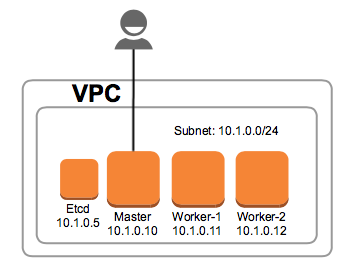

- Etcd - t2.micro (10.1.0.5)

- Controller - t2.small (10.1.0.10)

- Worker1 - t2.small (10.1.0.11)

- Worker2 - t2.small (10.1.0.12)

AWS costs in us-east-1 are just under $2/day.
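To sanity-check that figure, take each instance's hourly rate, add the rates together, and multiply by 24. The sketch below assumes historical on-demand Linux prices of $0.0116/hour for `t2.micro` and $0.023/hour for `t2.small`. These rates are assumptions, so check current AWS pricing. EBS storage and data transfer are not included.

```python
# Assumed on-demand Linux prices in us-east-1 (USD/hour); illustrative only,
# actual AWS prices change over time.
HOURLY_RATE_USD = {
    "t2.micro": 0.0116,
    "t2.small": 0.023,
}

# Instance type of each node in this guide's architecture.
NODES = {
    "etcd": "t2.micro",
    "controller": "t2.small",
    "worker1": "t2.small",
    "worker2": "t2.small",
}


def daily_compute_cost(nodes, rates, hours=24):
    """Sum every node's hourly rate and multiply by the number of hours."""
    return sum(rates[itype] for itype in nodes.values()) * hours


if __name__ == "__main__":
    cost = daily_compute_cost(NODES, HOURLY_RATE_USD)
    print(f"Estimated EC2 compute cost: ${cost:.2f}/day")  # prints $1.93/day
```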

To keep things simple, this guide is based on a single VPC, single availability zone, single subnet architecture where all nodes have static private IPs, are assigned public IPs to enable direct SSH access, and share a security group that allows each node to have full network access to each other.

These steps will guide you through creating the VPC, subnet, instances, and basic cluster configuration without any hardening measures in place. Pay special attention to the configuration of the security group to ensure only you have access to these systems!
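A common mistake is to open the security group to `0.0.0.0/0`. The minimal sketch below turns your own public IP into a single-host CIDR and flags rules that admit more than one address. The helper names are hypothetical, and `203.0.113.7` is a documentation-range example address. Substitute the public IP you actually connect from.

```python
import ipaddress


def admin_ingress_cidr(public_ip: str) -> str:
    """Return a single-host CIDR (/32 for IPv4, /128 for IPv6) for one admin IP."""
    addr = ipaddress.ip_address(public_ip)
    return str(ipaddress.ip_network(f"{addr}/{addr.max_prefixlen}"))


def is_overly_broad(cidr: str, max_hosts: int = 1) -> bool:
    """Flag ingress CIDRs (e.g. 0.0.0.0/0) that admit more than max_hosts addresses."""
    return ipaddress.ip_network(cidr, strict=False).num_addresses > max_hosts


if __name__ == "__main__":
    print(admin_ingress_cidr("203.0.113.7"))  # 203.0.113.7/32
    print(is_overly_broad("0.0.0.0/0"))       # True
```

Use the resulting CIDR as the source of each ingress rule, for example with `aws ec2 authorize-security-group-ingress --cidr`.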

- Create the VPC

- Launch and configure the `etcd` instance

- Launch and configure the `controller` instance

- Launch and configure the `worker-1` and `worker-2` instances

- Create the local `kubeconfig` file
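For reference, the local `kubeconfig` file has a small, fixed structure: one cluster, one user, and one context that binds them together. The sketch below generates a minimal version of that file as JSON. The server address, port, and the cluster and user names are hypothetical placeholders. The empty user entry reflects the assumption that the Level 0 API accepts unauthenticated requests.

```python
import json


def build_kubeconfig(server: str, cluster: str = "hardening", user: str = "admin") -> dict:
    """Return a minimal kubeconfig with one cluster, one user, and one context.

    The user entry carries no credentials; later hardening levels would add
    client certificates or tokens here.
    """
    context = f"{user}@{cluster}"
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "clusters": [{"name": cluster, "cluster": {"server": server}}],
        "users": [{"name": user, "user": {}}],
        "contexts": [{"name": context, "context": {"cluster": cluster, "user": user}}],
        "current-context": context,
    }


if __name__ == "__main__":
    # Hypothetical address; substitute your controller's public IP and API port.
    print(json.dumps(build_kubeconfig("http://CONTROLLER_PUBLIC_IP:8080"), indent=2))
```

Redirect the output to a file and pass it to `kubectl --kubeconfig=<file> get nodes`. JSON is valid YAML, so kubectl can parse it.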

The following items are to be deployed to fulfill basic Kubernetes cluster functionality. The steps purposefully omit any security-related configuration/hardening.

At this most basic level, "Level 0", the current configuration offers very little (if any) protection from attacks that can take complete control of the cluster and its nodes.

- Enumerate exposed ports on the nodes and identify their corresponding services

- Probing Etcd to compromise the data store

- Probing the Controller to access the API and other control plane services

- Probing the Worker to access the Kubelet and other worker services
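The enumeration step comes down to trying TCP connections against the ports that Kubernetes components listen on by default. The sketch below is a minimal connect scan. The port-to-service map assumes common Kubernetes 1.9-era defaults, and your component flags may differ. The private IPs are only reachable from inside the VPC. From your workstation, scan the nodes' public IPs instead.

```python
import socket

# Assumed default ports for Kubernetes 1.9-era components; flags may change them.
DEFAULT_PORTS = {
    2379: "etcd client",
    2380: "etcd peer",
    4194: "cAdvisor",
    6443: "kube-apiserver (secure)",
    8080: "kube-apiserver (insecure)",
    10250: "kubelet API",
    10251: "kube-scheduler",
    10252: "kube-controller-manager",
    10255: "kubelet read-only",
}


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def scan(host: str, ports=DEFAULT_PORTS, timeout: float = 1.0) -> dict:
    """Map each open port on host to its assumed service name."""
    return {p: name for p, name in ports.items() if port_is_open(host, p, timeout)}
```

For example, `scan("10.1.0.5")` run from inside the VPC would report which of the listed ports the etcd node exposes. An open port only shows that something is listening. Probing each service is what confirms its identity.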

Ouch! The "Level 0" configuration offers no resistance to remote attacks. Let's take the most basic steps to make the "Level 0" attacks far less straightforward.

- Improve the security group configuration

- Enable TLS on the externally exposed Kubernetes API
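One way to verify the TLS change is to check whether the externally exposed API port completes a TLS handshake. The sketch below disables certificate verification on purpose. It only answers "does this port speak TLS?", not "is this certificate trustworthy?". The helper name is hypothetical. Port 6443 is assumed as the secure port, so use whatever your API server is configured with.

```python
import socket
import ssl


def speaks_tls(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if host:port completes a TLS handshake within timeout.

    Verification is deliberately off: this detects TLS, it does not
    establish trust in the server's certificate.
    """
    ctx = ssl.create_default_context()
    ctx.check_hostname = False  # must be disabled before CERT_NONE
    ctx.verify_mode = ssl.CERT_NONE
    try:
        with socket.create_connection((host, port), timeout=timeout) as raw:
            with ctx.wrap_socket(raw, server_hostname=host):
                return True
    except OSError:  # ssl.SSLError and socket timeouts are OSError subclasses
        return False
```

After hardening, the secure port should return `True`. The old plaintext API port should no longer be reachable from outside the security group.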

With that modest amount of hardening, it's time to have this cluster perform some work. To do that, we'll want to install Helm and its in-cluster helper, "Tiller". With that in place, we'll deploy two sample applications via Helm Charts.

- Install Helm/Tiller

- Install the Vulnapp

- Install the Azure Vote App

At this point, authorized users can purposefully (or accidentally) trigger some fundamental resource exhaustion problems. Without any boundaries in place, deploying too many pods, or pods that consume too many CPU/RAM shares, can cause serious cluster availability (Denial of Service) issues. Once the cluster is "full", new pods will not be scheduled.

- Launch too many pods

- Launch pods that consume too many CPU/RAM shares
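Both attacks work because of how the scheduler decides whether a pod fits on a node. The pod count must stay under the kubelet's `--max-pods` limit (default 110). The summed resource requests must also stay within the node's allocatable CPU and memory. The sketch below is a deliberately simplified model of that fit check, not the real scheduler. The allocatable values in the test are assumed figures for a `t2.small`. In this model, pods with no requests count as zero, so only the pod limit stops a flood of them.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Pod:
    cpu_m: int   # CPU request in millicores
    mem_mi: int  # memory request in MiB


@dataclass
class Node:
    cpu_m: int            # allocatable CPU in millicores
    mem_mi: int           # allocatable memory in MiB
    max_pods: int = 110   # kubelet --max-pods default
    pods: List[Pod] = field(default_factory=list)

    def fits(self, pod: Pod) -> bool:
        """Simplified fit check: pod count first, then summed requests."""
        if len(self.pods) >= self.max_pods:
            return False
        used_cpu = sum(p.cpu_m for p in self.pods)
        used_mem = sum(p.mem_mi for p in self.pods)
        return used_cpu + pod.cpu_m <= self.cpu_m and used_mem + pod.mem_mi <= self.mem_mi


def schedule(nodes: List[Node], pod: Pod) -> bool:
    """Place pod on the first node where it fits; False means it stays Pending."""
    for node in nodes:
        if node.fits(pod):
            node.pods.append(pod)
            return True
    return False
```

For example, two workers with an assumed 1000m of allocatable CPU each can each hold one greedy 900m pod. After that, even a modest 200m pod stays Pending.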

Separate namespaces with corresponding resource quotas provide the proper boundaries around workloads and their resources, and can prevent the "Level 1" issues.

- Separate workloads using Namespaces

- Set specific Request/Limits on Pods

- Enforce Namespace Resource Quotas
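In Kubernetes, the `ResourceQuota` admission controller in the API server enforces quotas when a pod is created. The sketch below mirrors the core rules in simplified form. Each pod counts as one against a `pods` quota. A pod that omits a request for a quota-constrained compute resource is rejected. No admission may push usage past a hard limit. The keys follow `ResourceQuota` naming (`pods`, `requests.cpu`, `requests.memory`). The units (millicores, MiB) and the function itself are assumptions for illustration.

```python
from typing import Dict

# Resource name -> amount, e.g. {"requests.cpu": 500, "requests.memory": 256}
# (CPU in millicores, memory in MiB; units assumed for illustration).
Resources = Dict[str, int]


def admit(hard: Resources, used: Resources, pod: Resources) -> bool:
    """Simplified ResourceQuota admission check for one namespace."""
    for name, limit in hard.items():
        # A pod counts as 1 against "pods"; other keys come from the pod spec.
        requested = 1 if name == "pods" else pod.get(name)
        if requested is None:
            return False  # quota-constrained resource must be specified
        if used.get(name, 0) + requested > limit:
            return False
    return True
```

Because pods must declare requests once a compute quota exists, this step pairs naturally with setting specific requests and limits on every pod.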

- Discuss multi-etcd, multi-controller
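A useful starting point for the multi-etcd discussion is the Raft majority arithmetic etcd relies on. A cluster of `n` members needs `n // 2 + 1` of them to agree, so it tolerates `n - (n // 2 + 1)` failures. The single etcd node in this guide therefore tolerates zero failures. The sketch below also shows why an even member count adds no fault tolerance over the next smaller odd count.

```python
def quorum(members: int) -> int:
    """Members that must agree for a Raft-based etcd cluster to make progress."""
    return members // 2 + 1


def failure_tolerance(members: int) -> int:
    """Members that can fail while the cluster still keeps a quorum."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in range(1, 8):
        print(f"members={n} quorum={quorum(n)} tolerates={failure_tolerance(n)}")
```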

- Malicious Image, Compromised Container, Multi-tenant Misuse

- Service Account Tokens

- Dashboard Access

- Direct Etcd Access

- Tiller Access

- Kubelet Exploit

- Application Tampering

- Metrics Scraping

- Metadata API

- Outbound Scanning/pivoting
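Several of these attacks start from the same foothold: code running inside a compromised pod. That code can read the automatically mounted service account token and the `KUBERNETES_SERVICE_HOST`/`KUBERNETES_SERVICE_PORT` environment variables. It can also reach the AWS instance metadata API at `169.254.169.254`. The sketch below assumes the default token mount path. Helper names are hypothetical, and `/api/v1/namespaces/default/pods` is just an example request. An `HTTPError` 403 from the API server would indicate that authorization is blocking the token.

```python
import os
import ssl
import urllib.request
from typing import Optional

# Default in-pod mount for service account credentials (assumed mounted).
SA_DIR = "/var/run/secrets/kubernetes.io/serviceaccount"
# AWS instance metadata path listing the node's IAM role name(s).
METADATA_CREDS_URL = "http://169.254.169.254/latest/meta-data/iam/security-credentials/"


def read_token(path: str = os.path.join(SA_DIR, "token")) -> Optional[str]:
    """Return the pod's service account token, or None if it is not mounted."""
    try:
        with open(path) as f:
            return f.read().strip()
    except OSError:
        return None


def api_request(token: str, path: str = "/api/v1/namespaces/default/pods") -> urllib.request.Request:
    """Build a bearer-token request to the in-cluster API server."""
    host = os.environ.get("KUBERNETES_SERVICE_HOST", "kubernetes.default.svc")
    port = os.environ.get("KUBERNETES_SERVICE_PORT", "443")
    return urllib.request.Request(
        f"https://{host}:{port}{path}",
        headers={"Authorization": f"Bearer {token}"},
    )


def probe(timeout: float = 5.0) -> None:
    """Run from inside a pod: call the API with the token, then the metadata API."""
    token = read_token()
    if token:
        ctx = ssl.create_default_context(cafile=os.path.join(SA_DIR, "ca.crt"))
        with urllib.request.urlopen(api_request(token), context=ctx, timeout=timeout) as resp:
            print("API server status:", resp.status)
    with urllib.request.urlopen(METADATA_CREDS_URL, timeout=timeout) as resp:
        print("IAM role(s):", resp.read().decode())
```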

- RBAC

- Etcd TLS

- New Dashboard

- Separate Kubeconfigs per user

- Tiller TLS

- Kubelet Authn/z

- Network Policy/CNI

- Admission Controllers

- Logging?
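As one example of the Network Policy hardening step, the sketch below generates two `networking.k8s.io/v1` `NetworkPolicy` objects. The first denies all ingress to every pod in a namespace. The second allows egress everywhere except the AWS metadata API, which addresses the Metadata API attack above. The policy names and the `default` namespace are hypothetical. These policies only take effect with a CNI plugin that enforces NetworkPolicy, including egress rules.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """NetworkPolicy that selects every pod in namespace and allows no ingress."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def block_metadata_egress(namespace: str) -> dict:
    """NetworkPolicy that allows egress anywhere except the AWS metadata API."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "block-metadata-egress", "namespace": namespace},
        "spec": {
            "podSelector": {},
            "policyTypes": ["Egress"],
            "egress": [{"to": [{"ipBlock": {
                "cidr": "0.0.0.0/0",
                "except": ["169.254.169.254/32"],
            }}]}],
        },
    }


if __name__ == "__main__":
    # Pipe into `kubectl apply -f -`; kubectl accepts JSON manifests.
    items = [default_deny_ingress("default"), block_metadata_egress("default")]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```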

- Malicious Image, Compromised Container, Multi-tenant Misuse

- Escape the container

- Advanced admission controllers

- Restrict images/sources

- Network Egress filtering

- Vuln scan images

- Pod Security Policy

- Encrypted etcd

- Sysdig Falco
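Restricting image sources, whether through an admission webhook or another admission controller, comes down to extracting the registry from each image reference and comparing it to an allowlist. The sketch below follows Docker's convention: the first path component is treated as a registry only if it contains `.` or `:` or is `localhost`. Otherwise the image is assumed to come from Docker Hub. The function names and `registry.example.com` are hypothetical. A real policy would also handle digests, tags, and registry aliases more carefully.

```python
from typing import Iterable

DEFAULT_REGISTRY = "docker.io"


def registry_of(image: str) -> str:
    """Return the registry host of an image reference (Docker's convention)."""
    first, sep, _ = image.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first
    return DEFAULT_REGISTRY  # e.g. "nginx" or "library/nginx" -> Docker Hub


def image_allowed(image: str, allowed_registries: Iterable[str]) -> bool:
    """Admission-style decision: only images from allowed registries pass."""
    return registry_of(image) in set(allowed_registries)
```

With `["registry.example.com"]` as the allowlist, `registry.example.com/app:1.0` passes, while a bare `nginx` (implicitly Docker Hub) is rejected.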

- Kubernetes the Hard Way - Kelsey Hightower