Small C++ library to quickly use onnxruntime to deploy deep learning models

Thanks to cardboardcode, we have documentation for this small library. We hope both the library and its documentation are helpful for your work.

- Support for inference with multiple inputs and multiple outputs

- Examples for well-known models such as yolov3, mask-rcnn, the ultra-light-weight face detector, yolox, and PaddleSeg; support for more models may be considered on request

- Batch inference

- build onnxruntime from source with the following script

sudo bash ./scripts/install_onnx_runtime.shmake default

# build examples

make apps# after make apps

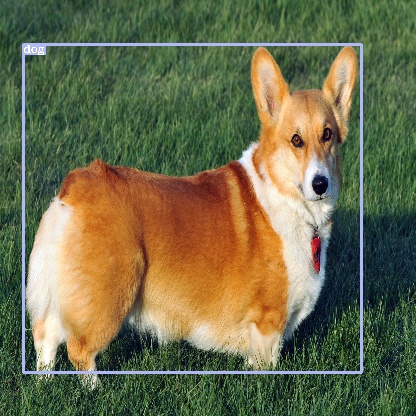

./build/examples/TestImageClassification ./data/squeezenet1.1.onnx ./data/images/dog.jpgthe following result can be obtained

```text
264 : Cardigan, Cardigan Welsh corgi : 0.391365
263 : Pembroke, Pembroke Welsh corgi : 0.376214
227 : kelpie : 0.0314975
158 : toy terrier : 0.0223435
230 : Shetland sheepdog, Shetland sheep dog, Shetland : 0.020529
```
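Output in the form `class index : label : probability`, sorted by descending probability, is typically obtained by normalizing the model's per-class scores with a softmax and keeping the top k entries. The sketch below shows that step in isolation. It assumes the model emits one raw score (logit) per ImageNet class that still needs normalizing; whether TestImageClassification or the model itself applies the softmax is not stated here, and `softmax` and `topK` are hypothetical helper names, not part of this library's API.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <numeric>
#include <utility>
#include <vector>

// Numerically stable softmax: subtracting the largest score before exp()
// avoids overflow without changing the result.
// Assumption: `scores` are raw, unnormalized per-class scores (logits).
std::vector<float> softmax(const std::vector<float>& scores) {
    assert(!scores.empty());
    const float maxScore = *std::max_element(scores.begin(), scores.end());
    std::vector<float> probs(scores.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < scores.size(); ++i) {
        probs[i] = std::exp(scores[i] - maxScore);
        sum += probs[i];
    }
    for (float& p : probs) {
        p /= sum;
    }
    return probs;
}

// Returns the k (class index, probability) pairs with the highest
// probabilities, sorted in descending order of probability.
std::vector<std::pair<std::size_t, float>> topK(const std::vector<float>& probs, std::size_t k) {
    assert(k <= probs.size());
    std::vector<std::size_t> indices(probs.size());
    std::iota(indices.begin(), indices.end(), std::size_t{0});
    const auto middle = indices.begin() + static_cast<std::ptrdiff_t>(k);
    // Only the first k indices need to be fully sorted.
    std::partial_sort(indices.begin(), middle, indices.end(),
                      [&probs](std::size_t a, std::size_t b) { return probs[a] > probs[b]; });
    std::vector<std::pair<std::size_t, float>> result;
    result.reserve(k);
    for (std::size_t i = 0; i < k; ++i) {
        result.emplace_back(indices[i], probs[indices[i]]);
    }
    return result;
}
```

Under these assumptions, `topK(softmax(scores), 5)` would return five `(class index, probability)` pairs; each index is then looked up in an ImageNet label list to get the text label shown above.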

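- Download the tiny-yolov2 model from the ONNX Model Zoo: HERE

The shape of the output is

`OUTPUT_FEATUREMAP_SIZE x OUTPUT_FEATUREMAP_SIZE x (NUM_ANCHORS * (NUM_CLASSES + 4 + 1))`

where OUTPUT_FEATUREMAP_SIZE = 13, NUM_ANCHORS = 5, and NUM_CLASSES = 20 for the tiny-yolov2 model from the ONNX Model Zoo. Each anchor carries 4 box coordinates, 1 objectness score, and 20 class scores, so the output holds 13 x 13 x (5 x 25) = 13 x 13 x 125 values.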
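To read individual values out of this output, it helps to compute their flat offset in the output buffer. The sketch below assumes a channel-first (NCHW, batch size 1) layout of shape 1 x 125 x 13 x 13 and, within each anchor's 25 channels, the order 4 box values, 1 objectness score, then 20 class scores; both are assumptions to check against the model. It follows the YOLOv2 convention of a sigmoid on the objectness score and a softmax over the class scores. `flatOffset` and `classConfidence` are hypothetical names, not part of this library.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

// Constants taken from the numbers quoted above for tiny-yolov2.
constexpr std::size_t kGridSize = 13;    // OUTPUT_FEATUREMAP_SIZE
constexpr std::size_t kNumAnchors = 5;   // NUM_ANCHORS
constexpr std::size_t kNumClasses = 20;  // NUM_CLASSES
// 4 box values + 1 objectness score + class scores per anchor (assumed order).
constexpr std::size_t kValuesPerAnchor = 4 + 1 + kNumClasses;       // 25
constexpr std::size_t kNumChannels = kNumAnchors * kValuesPerAnchor;  // 125

// Flat offset of one value in an assumed NCHW buffer of shape
// [kNumChannels x kGridSize x kGridSize]. valueIdx is 0..3 for the box,
// 4 for objectness, and 5..24 for the class scores.
std::size_t flatOffset(std::size_t anchor, std::size_t valueIdx, std::size_t row, std::size_t col) {
    assert(anchor < kNumAnchors && valueIdx < kValuesPerAnchor);
    assert(row < kGridSize && col < kGridSize);
    const std::size_t channel = anchor * kValuesPerAnchor + valueIdx;
    return (channel * kGridSize + row) * kGridSize + col;
}

float sigmoid(float x) { return 1.0f / (1.0f + std::exp(-x)); }

// Confidence that grid cell (row, col), anchor `anchor`, contains class `cls`:
// sigmoid(objectness) * softmax(class scores)[cls].
float classConfidence(const float* output, std::size_t anchor, std::size_t row, std::size_t col,
                      std::size_t cls) {
    assert(cls < kNumClasses);
    // Stable softmax over the 20 class scores of this anchor.
    float maxScore = output[flatOffset(anchor, 5, row, col)];
    for (std::size_t c = 1; c < kNumClasses; ++c) {
        maxScore = std::fmax(maxScore, output[flatOffset(anchor, 5 + c, row, col)]);
    }
    float sum = 0.0f;
    for (std::size_t c = 0; c < kNumClasses; ++c) {
        sum += std::exp(output[flatOffset(anchor, 5 + c, row, col)] - maxScore);
    }
    const float classProb = std::exp(output[flatOffset(anchor, 5 + cls, row, col)] - maxScore) / sum;
    return sigmoid(output[flatOffset(anchor, 4, row, col)]) * classProb;
}
```

Looping `classConfidence` over all 13 x 13 cells, 5 anchors, and 20 classes and keeping the entries above a threshold would give candidate detections; box decoding and non-maximum suppression would follow and are omitted here.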

- Test the tiny-yolov2 inference app:

```bash
# after make apps
./build/examples/tiny_yolo_v2 [path/to/tiny_yolov2/onnx/model] ./data/images/dog.jpg
```

- Test result

- Download the mask-rcnn model from the ONNX Model Zoo: HERE

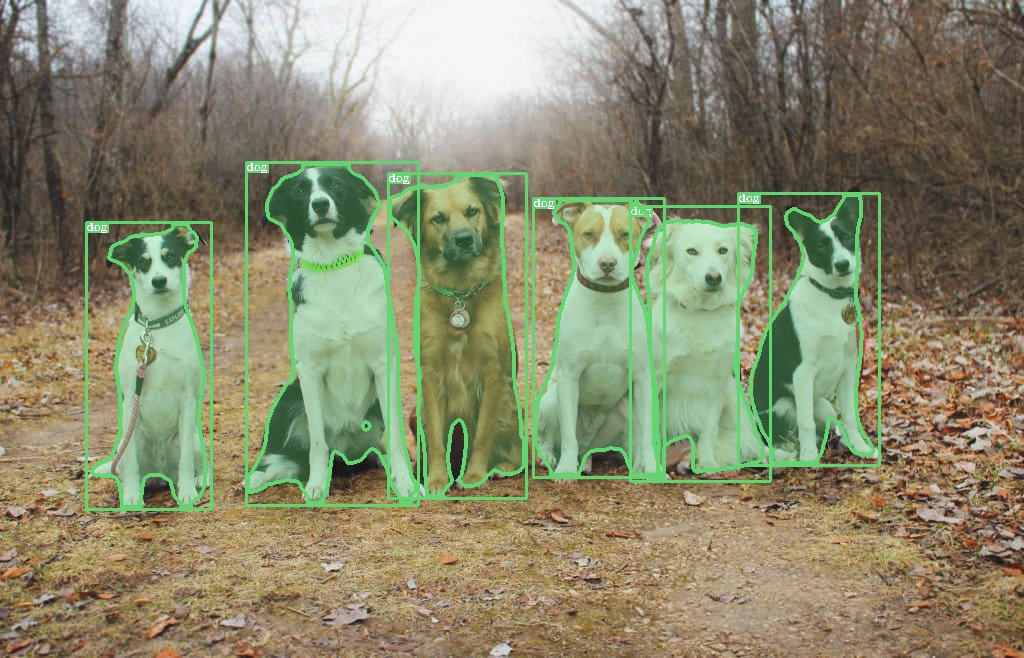

As stated at the URL above, the model has four outputs: boxes (nboxes x 4), labels (nboxes), scores (nboxes), and masks (nboxes x 1 x 28 x 28).
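Each 28 x 28 mask is predicted relative to its own detection box, so before drawing it is usually resized to the box size and binarized. The sketch below does this for a single mask using nearest-neighbor sampling and a 0.5 threshold; both choices are assumptions (bilinear resizing gives smoother edges), and `pasteMask` is a hypothetical helper, not this library's API.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Resizes one 28x28 soft mask (values assumed in [0, 1]) to a
// boxWidth x boxHeight binary mask with nearest-neighbor sampling,
// then thresholds it. Output is row-major: out[y * boxWidth + x].
std::vector<bool> pasteMask(const std::vector<float>& mask28, std::size_t boxWidth,
                            std::size_t boxHeight, float threshold = 0.5f) {
    constexpr std::size_t kMaskSize = 28;
    assert(mask28.size() == kMaskSize * kMaskSize);
    assert(boxWidth > 0 && boxHeight > 0);
    std::vector<bool> out(boxWidth * boxHeight);
    for (std::size_t y = 0; y < boxHeight; ++y) {
        // Map the center of output row y back onto the 28-row mask grid.
        const std::size_t srcY = std::min(kMaskSize - 1, (2 * y + 1) * kMaskSize / (2 * boxHeight));
        for (std::size_t x = 0; x < boxWidth; ++x) {
            // Same mapping for columns.
            const std::size_t srcX = std::min(kMaskSize - 1, (2 * x + 1) * kMaskSize / (2 * boxWidth));
            out[y * boxWidth + x] = mask28[srcY * kMaskSize + srcX] > threshold;
        }
    }
    return out;
}
```

The resulting box-sized mask would then be placed at the box's top-left corner in the original image, using the matching row of the boxes output.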

- Test the mask-rcnn inference app:

```bash
# after make apps
./build/examples/mask_rcnn [path/to/mask_rcnn/onnx/model] ./data/images/dogs.jpg
```

- Test results:

- Download the yolov3 model from the ONNX Model Zoo: HERE

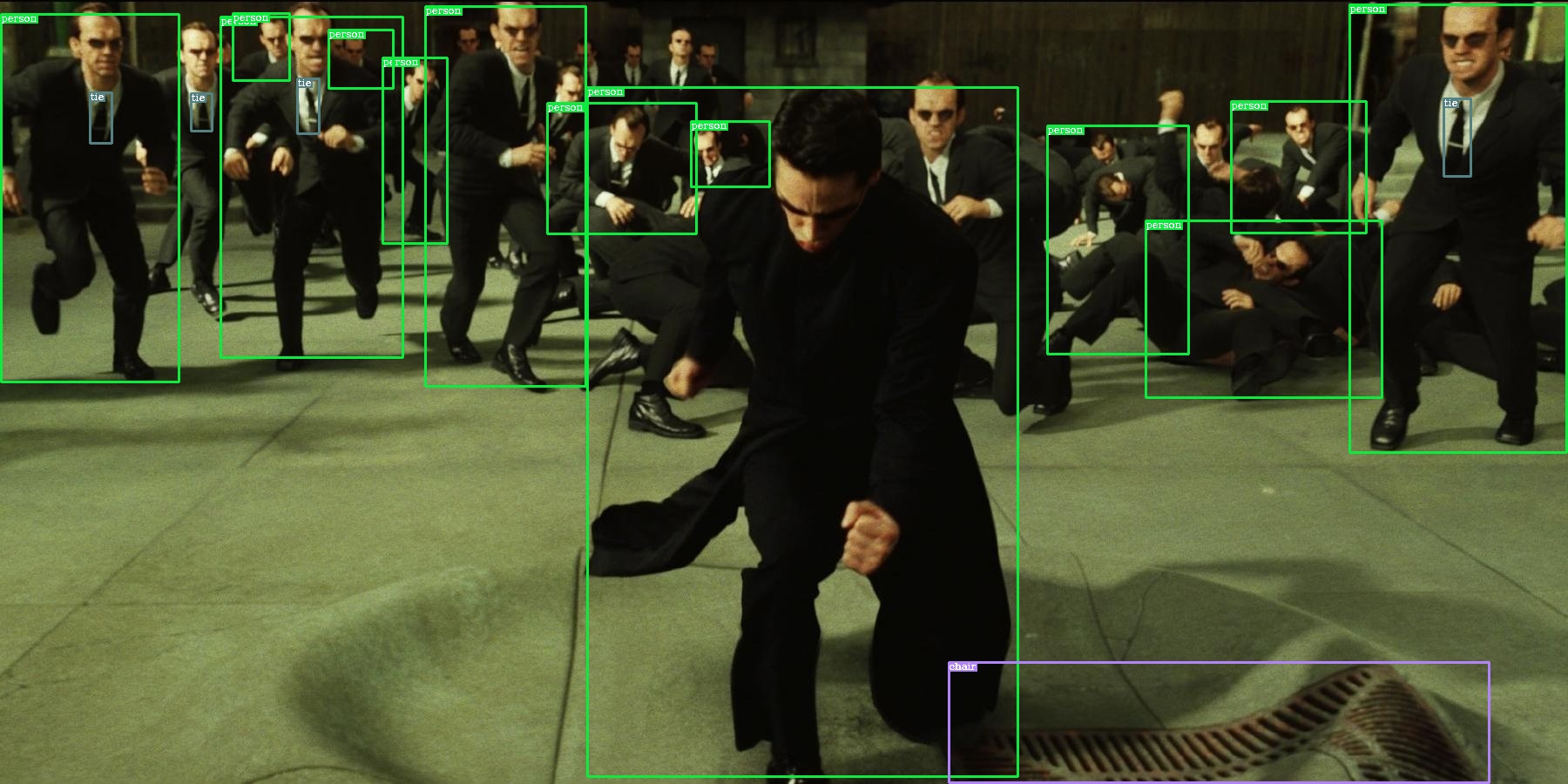

- Test the yolo-v3 inference app:

```bash
# after make apps
./build/examples/yolov3 [path/to/yolov3/onnx/model] ./data/images/no_way_home.jpg
```

- Test result

- App to run an ONNX model trained with the well-known lightweight Ultra-Light-Fast-Generic-Face-Detector-1MB

- Sample weights are saved at ./data/version-RFB-640.onnx

- Test the inference app:

```bash
# after make apps
./build/examples/ultra_light_face_detector ./data/version-RFB-640.onnx ./data/images/endgame.jpg
```

- Download the yolox ONNX model trained on the COCO dataset from HERE:

```bash
# this app tests the yolox_l model, but you can also try other yolox models.
wget https://github.com/Megvii-BaseDetection/YOLOX/releases/download/0.1.1rc0/yolox_l.onnx -O ./data/yolox_l.onnx
```

- Test the inference app:

```bash
# after make apps
./build/examples/yolox ./data/yolox_l.onnx ./data/images/matrix.jpg
```

- Download PaddleSeg's bisenetv2 model, trained on the Cityscapes dataset and converted to ONNX, from HERE and copy it to the ./data directory.

You can also convert your own PaddleSeg model with the following procedure:

- Export the PaddleSeg model.

- Convert the exported model to ONNX format with Paddle2ONNX.

- Test the inference app:

```bash
./build/examples/semantic_segmentation_paddleseg_bisenetv2 ./data/bisenetv2_cityscapes.onnx ./data/images/sample_city_scapes.png

./build/examples/semantic_segmentation_paddleseg_bisenetv2 ./data/bisenetv2_cityscapes.onnx ./data/images/odaiba.jpg
```

Test results:

- Cityscapes dataset's color legend

- Test result on a sample image from the Cityscapes dataset (this model is trained on the Cityscapes dataset)

- Test result on a new scene at Odaiba, Tokyo, Japan
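Colored segmentation results like these come from assigning each pixel a class ID and coloring it according to a legend such as the Cityscapes color legend. The sketch below shows both steps under stated assumptions: if the model outputs per-class scores in a channel-first layout (numClasses x height x width, batch size 1), a per-pixel argmax gives the label map. Depending on how the model was exported, the ONNX output may already be a label map, in which case only the palette lookup applies. `argmaxLabels`, `colorize`, and the palette argument are hypothetical, not this library's API.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Rgb = std::array<std::uint8_t, 3>;

// Per-pixel argmax over an assumed channel-first score tensor of shape
// [numClasses x height x width]. Returns a row-major label map.
std::vector<std::size_t> argmaxLabels(const std::vector<float>& scores, std::size_t numClasses,
                                      std::size_t height, std::size_t width) {
    const std::size_t planeSize = height * width;
    assert(numClasses > 0 && scores.size() == numClasses * planeSize);
    std::vector<std::size_t> labels(planeSize, 0);  // start with class 0 for every pixel
    for (std::size_t p = 0; p < planeSize; ++p) {
        float best = scores[p];
        for (std::size_t c = 1; c < numClasses; ++c) {
            const float s = scores[c * planeSize + p];
            if (s > best) {
                best = s;
                labels[p] = c;
            }
        }
    }
    return labels;
}

// Maps each class ID to its palette color (one color per class, e.g. a
// color legend like the one above).
std::vector<Rgb> colorize(const std::vector<std::size_t>& labels, const std::vector<Rgb>& palette) {
    std::vector<Rgb> image;
    image.reserve(labels.size());
    for (std::size_t label : labels) {
        assert(label < palette.size());
        image.push_back(palette[label]);
    }
    return image;
}
```

The palette passed to `colorize` would need one entry per class, in the same class order the model was trained with, for the colors to match the legend.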