The fairness R package provides tools to calculate algorithmic fairness metrics across sensitive groups from the predictions of a binary classification model. It also lets you visualize and compare other prediction metrics between those groups.

The package contains functions to compute the most commonly used algorithmic fairness metrics, such as:

- Demographic parity

- Proportional parity

- Equalized odds

- Predictive rate parity

In addition, the following comparisons are also implemented:

- False positive rate parity

- False negative rate parity

- Accuracy parity

- Negative predictive value parity

- Specificity parity

- ROC AUC comparison

- MCC comparison
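Several of the comparisons listed above can be read as "compute a confusion-matrix statistic within each group, then divide it by the same statistic in a base group". The equalized-odds output later in this README reports exactly such ratios. The minimal base-R sketch below illustrates that idea without calling the package. It assumes binary 0/1 labels and predictions; the helper `group_metrics()` and the toy data are hypothetical, and the package's own functions may define some metrics differently (demographic parity, for instance, may be based on counts rather than rates).

```r
# Hypothetical helper (not part of the fairness package): computes
# confusion-matrix metrics within each group and divides them by the
# values of a base group, so the base group always scores 1.
group_metrics <- function(actual, predicted, group, base) {
  stats <- sapply(split(seq_along(actual), group), function(idx) {
    a <- actual[idx]
    p <- predicted[idx]
    tp <- sum(a == 1 & p == 1)
    fp <- sum(a == 0 & p == 1)
    tn <- sum(a == 0 & p == 0)
    fn <- sum(a == 1 & p == 0)
    c(
      positive_rate = (tp + fp) / length(a),  # proportional parity
      sensitivity   = tp / (tp + fn),         # equalized odds
      precision     = tp / (tp + fp),         # predictive rate parity
      fpr           = fp / (fp + tn),         # false positive rate parity
      fnr           = fn / (fn + tp),         # false negative rate parity
      specificity   = tn / (tn + fp),         # specificity parity
      npv           = tn / (tn + fn),         # negative predictive value parity
      accuracy      = (tp + tn) / length(a)   # accuracy parity
    )
  })
  # stats has one row per metric and one column per group; dividing by
  # the base column rescales each metric relative to the base group
  stats / stats[, base]
}

# Toy data: two groups of four observations each
actual    <- c(1, 1, 0, 0, 1, 1, 0, 0)
predicted <- c(1, 0, 0, 1, 1, 1, 0, 0)
group     <- c("a", "a", "a", "a", "b", "b", "b", "b")
res <- group_metrics(actual, predicted, group, base = "a")
print(res)
```

Here group `b` gets a sensitivity ratio of 2 and a false positive rate ratio of 0 relative to group `a`. If a group has no actual positives (or no predicted positives), some of these rates become `NaN`, so real data may need a guard for empty cells.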

A comprehensive tutorial on using the package is provided in this blog post. We recommend reading it, as it describes the fairness package in more depth than this README. A shorter tutorial is available in the package vignette:

```r
vignette('fairness')
```

You can install the latest stable package version from CRAN by running:

```r
install.packages('fairness')
library(fairness)
```

You can also install the development version from GitHub:

```r
library(devtools)
devtools::install_github('kozodoi/fairness')
library(fairness)
```

Load the example COMPAS dataset included in the package:

```r
data('compas')
```

The data already contains all variables needed to compute every parity metric. If you build your own predictive model, append its predicted probabilities or 0/1 predictions as a column of your original dataset, or pass them as a vector to the corresponding metric function.
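As a sketch of the "append predictions to your dataset" step, the base-R example below fits a logistic regression on simulated data and stores its predicted probabilities and 0/1 predictions as new columns. The data frame `df`, its columns, and the choice of `glm()` are hypothetical stand-ins for your own data and model; any binary classifier that yields probabilities would do.

```r
# Hypothetical data: a sensitive attribute, one predictor and a binary outcome.
# Replace with your own dataset and model.
set.seed(42)
n <- 200
df <- data.frame(
  group = sample(c("g1", "g2"), n, replace = TRUE),
  x     = rnorm(n)
)
df$outcome <- rbinom(n, 1, plogis(1.5 * df$x))

# Logistic regression is used here only as an example classifier
model <- glm(outcome ~ x, data = df, family = binomial())

# Append predicted probabilities as a new column ...
df$probability <- predict(model, newdata = df, type = "response")
# ... or append hard 0/1 predictions obtained with a 0.5 cutoff
df$predicted <- as.integer(df$probability > 0.5)

head(df)
```

The name of the new probability column can then be passed as a string, the way `probs = 'probability'` is used in the `equal_odds()` call for the COMPAS data.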

```r
equal_odds(data         = compas,
           outcome      = 'Two_yr_Recidivism',
           group        = 'ethnicity',
           probs        = 'probability',
           preds_levels = c('no', 'yes'),
           cutoff       = 0.5,
           base         = 'Caucasian')
```

Metrics for equalized odds:

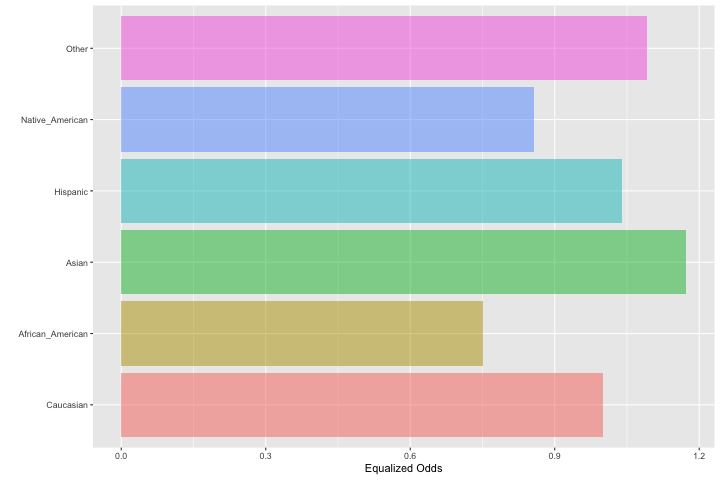

```
#>                Caucasian African_American     Asian Hispanic
#> Sensitivity    0.7782982        0.5845443 0.9130435 0.809375
#> Equalized odds 1.0000000        0.7510544 1.1731281 1.039929
#>                Native_American     Other
#> Sensitivity          0.6666667 0.8493151
#> Equalized odds       0.8565697 1.0912463
```
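The equalized-odds row in this output is each group's sensitivity divided by the sensitivity of the base group (`Caucasian`); for example, 0.5845443 / 0.7782982 ≈ 0.751 for `African_American`. The minimal base-R sketch below reproduces that computation on toy data. It assumes the positive class is coded 1 and that probabilities strictly above `cutoff` count as positive predictions; the helper `equal_odds_sketch()` is hypothetical and is not the package's implementation.

```r
# Hypothetical helper (not the package's implementation): thresholds
# predicted probabilities and compares sensitivity against a base group.
equal_odds_sketch <- function(actual, probs, group, cutoff = 0.5, base) {
  preds <- as.integer(probs > cutoff)  # assumed rule: strictly above cutoff
  # sensitivity (true positive rate) among the actual positives of each group
  sens <- sapply(split(seq_along(actual), group), function(idx) {
    pos <- idx[actual[idx] == 1]
    mean(preds[pos] == 1)
  })
  rbind(Sensitivity = sens, `Equalized odds` = sens / sens[base])
}

# Toy data: five observations per group
actual <- c(1, 1, 1, 1, 0, 1, 1, 0, 0, 0)
probs  <- c(0.9, 0.8, 0.7, 0.2, 0.6, 0.9, 0.3, 0.4, 0.1, 0.8)
group  <- rep(c("A", "B"), each = 5)
res <- equal_odds_sketch(actual, probs, group, cutoff = 0.5, base = "A")
print(res)
```

Group `A` detects 3 of its 4 positives (sensitivity 0.75) and group `B` detects 1 of 2 (0.5), so `B` scores 0.5 / 0.75 ≈ 0.667 relative to the base group.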

Bar chart for the equalized odds metric:

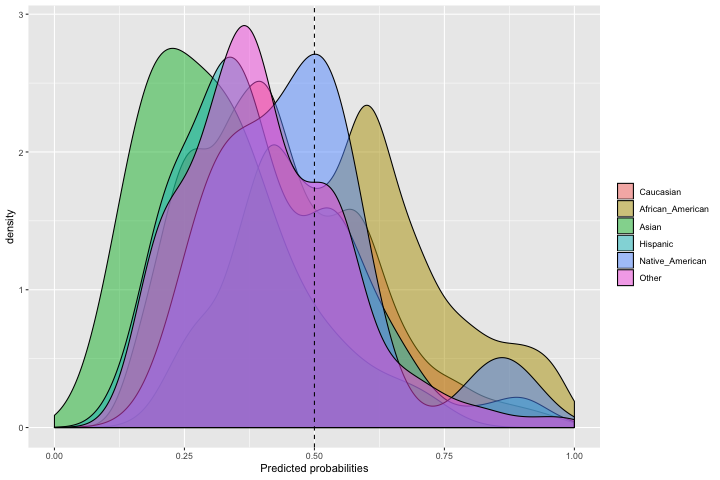

Predicted probability plot for all subgroups:

To cite this package in scientific publications, run the following command to generate a reference as plain text or as a BibTeX entry:

```r
citation('fairness')
```

Nikita Kozodoi and Tibor V. Varga (2020). fairness: Algorithmic Fairness Metrics. R package version 1.1.1.

Installation requires R 3.6+ along with the package's dependencies.


If you need help or advice on fairness metrics, or want to report an issue, please open an issue with a reproducible example on the package's GitHub page.