"If you can’t measure it, you can’t improve it." -- Peter Drucker

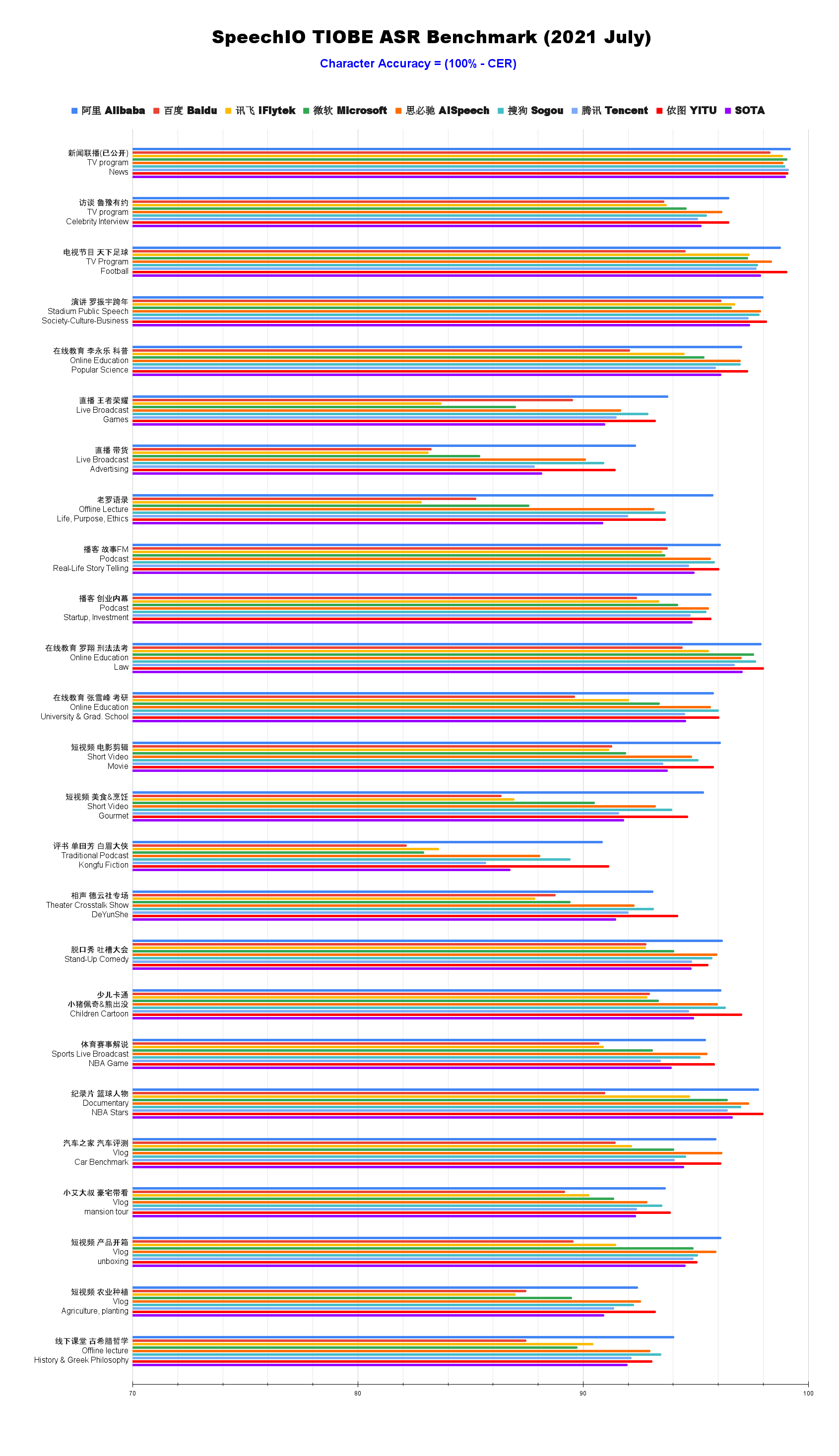

In the field of Automatic Speech Recognition (ASR) today, people often claim that their systems are state of the art (SOTA), whether in research papers or in industry PR articles. Such SOTA claims are less informative than one might expect, because:

- For industry, there is no objective and quantitative benchmark of how these commercial APIs perform in real-life scenarios, at least not in the public domain.

- For academia, it is becoming harder to compare ASR models due to the fragmentation of research toolkits and ecosystems.

- How are academic SOTA and industrial SOTA related?

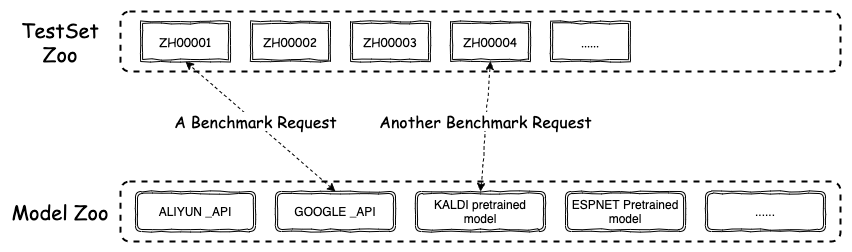

As the figure above shows, the SpeechIO leaderboard serves as an ASR benchmarking platform by providing three components:


- TestSet Zoo: a collection of test sets covering a wide range of speech recognition scenarios

- Model Zoo: a collection of models, including commercial APIs and open-sourced pretrained models

- An open benchmarking pipeline, which:

  - defines the simplest possible specification for the recognition interface, the format of input test sets, and the format of output recognition results;

  - automatically takes care of everything else (data preparation -> recognition invocation -> text post-processing -> WER/CER/SER evaluation), as long as model submitters conform to this specification.
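The last two pipeline stages, text post-processing and WER/CER/SER evaluation, can be illustrated with a minimal sketch. This is not SpeechIO's actual scoring code: the normalization rules (NFKC full-width folding, upper-casing, stripping Unicode punctuation) are assumptions, and `normalize`, `edit_distance`, and `score` are hypothetical names. CER is computed over non-space characters, WER over whitespace-separated tokens, and SER as the fraction of utterances with at least one character error.

```python
import unicodedata
from typing import Dict, List, Sequence


def normalize(text: str) -> str:
    """Assumed post-processing: NFKC folding (full-width -> half-width),
    upper-casing, and removal of Unicode punctuation (categories P*)."""
    text = unicodedata.normalize("NFKC", text).upper()
    return "".join(ch for ch in text if not unicodedata.category(ch).startswith("P"))


def edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Levenshtein distance: minimal substitutions + deletions + insertions."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cur[j] = min(
                prev[j] + 1,             # deletion of r
                cur[j - 1] + 1,          # insertion of h
                prev[j - 1] + (r != h),  # substitution, or match if equal
            )
        prev = cur
    return prev[-1]


def score(refs: Dict[str, str], hyps: Dict[str, str]) -> Dict[str, float]:
    """Corpus-level CER/WER/SER; a missing hypothesis is scored as empty."""
    char_err = char_total = word_err = word_total = sent_err = 0
    for utt_id, ref_text in refs.items():
        ref = normalize(ref_text)
        hyp = normalize(hyps.get(utt_id, ""))
        # CER ignores whitespace, which suits unsegmented Chinese text.
        ref_chars: List[str] = [c for c in ref if not c.isspace()]
        hyp_chars: List[str] = [c for c in hyp if not c.isspace()]
        ce = edit_distance(ref_chars, hyp_chars)
        char_err += ce
        char_total += len(ref_chars)
        # WER uses whitespace tokens; Chinese would need a word segmenter first.
        ref_words, hyp_words = ref.split(), hyp.split()
        word_err += edit_distance(ref_words, hyp_words)
        word_total += len(ref_words)
        # An utterance counts as a sentence error if any character is wrong.
        sent_err += int(ce > 0)
    return {
        "CER": char_err / max(char_total, 1),
        "WER": word_err / max(word_total, 1),
        "SER": sent_err / max(len(refs), 1),
    }
```

Errors and reference lengths are summed over the whole test set before dividing, so long utterances weigh more than short ones; averaging per-utterance rates instead would give a different number.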

With the SpeechIO leaderboard, anyone can benchmark, reproduce, and compare performance across arbitrary combinations of the test-set zoo and the model zoo by simply filling in a request form (see the example form).

Collected from various open-sourced datasets.

List of Open-Sourced Test Sets (ZH)

| 编号 TEST_SET_ID | 说明 Description |
|---|---|
| AISHELL-1_TEST | Test set of AISHELL-1 |
| AISHELL-2_IOS_TEST | Test set of AISHELL-2 (iOS channel) |
| AISHELL-2_ANDROID_TEST | Test set of AISHELL-2 (Android channel) |
| AISHELL-2_MIC_TEST | Test set of AISHELL-2 (Microphone channel) |

Carefully curated by the SpeechIO authors: crawled from publicly available sources (YouTube, TV programs, podcasts, etc.), covering various well-known acoustic scenarios (AM) and content domains (LM & vocabulary), and labeled by professional annotators.

List of SpeechIO Test Sets (ZH)

| 编号 TEST_SET_ID | 名称 Name | 场景 Scenario | 内容领域 Topic Domain | 时长 Duration (hours) | 难度(1-5) Difficulty |
|---|---|---|---|---|---|
| SPEECHIO_ASR_ZH00000 | 接入调试集 For leaderboard submitter debugging | 视频会议、论坛演讲 Video Conference & Forum Speech | 经济、货币、金融 Economy & Currency & Finance | 1.0 | ★★☆ |
| SPEECHIO_ASR_ZH00001 | 新闻联播 | 新闻播报 TV News | 时政 News & Politics | 9 | ★ |
| SPEECHIO_ASR_ZH00002 | 鲁豫有约 | 访谈电视节目 TV Interview | 名人工作/生活 Celebrity & Film & Music & Daily Life | 3 | ★★☆ |
| SPEECHIO_ASR_ZH00003 | 天下足球 | 专题电视节目 TV Program | 足球 Sports & Football & World Cup | 2.7 | ★★☆ |
| SPEECHIO_ASR_ZH00004 | 罗振宇跨年演讲 | 会场演讲 Stadium Public Speech | 社会、人文、商业 Society & Culture & Business Trends | 2.7 | ★★ |
| SPEECHIO_ASR_ZH00005 | 李永乐老师在线讲堂 | 在线教育 Online Education | 科普 Popular Science | 4.4 | ★★★ |
| SPEECHIO_ASR_ZH00006 | 张大仙 & 骚白 王者荣耀直播 | 直播 Live Broadcasting | 游戏 Game | 1.6 | ★★★☆ |
| SPEECHIO_ASR_ZH00007 | 李佳琪 & 薇娅 直播带货 | 直播 Live Broadcasting | 电商、美妆 Makeup & Online Shopping/Advertising | 0.9 | ★★★★☆ |
| SPEECHIO_ASR_ZH00008 | 老罗语录 | 线下培训 Offline Lecture | 段子、做人 Life & Purpose & Ethics | 1.3 | ★★★★☆ |
| SPEECHIO_ASR_ZH00009 | 故事FM | 播客 Podcast | 人生故事、见闻 Ordinary Life Storytelling | 4.5 | ★★☆ |
| SPEECHIO_ASR_ZH00010 | 创业内幕 | 播客 Podcast | 创业、产品、投资 Startup & Entrepreneur & Product & Investment | 4.2 | ★★☆ |
| SPEECHIO_ASR_ZH00011 | 罗翔 刑法法考培训讲座 | 在线教育 Online Education | 法律 法考 Law & Lawyer Qualification Exams | 3.4 | ★★☆ |
| SPEECHIO_ASR_ZH00012 | 张雪峰 考研线上小讲堂 | 在线教育 Online Education | 考研 高校报考 University & Graduate School Entrance Exams | 3.4 | ★★★☆ |
| SPEECHIO_ASR_ZH00013 | 谷阿莫&牛叔说电影 | 短视频 VLog | 电影剪辑 Movie Cuts | 1.8 | ★★★ |
| SPEECHIO_ASR_ZH00014 | 贫穷料理 & 琼斯爱生活 | 短视频 VLog | 美食、烹饪 Food & Cooking & Gourmet | 1 | ★★★☆ |
| SPEECHIO_ASR_ZH00015 | 单田芳 白眉大侠 | 评书 Traditional Storytelling (Pingshu) | 江湖、武侠 Kung Fu Fiction | 2.2 | ★★☆ |
| SPEECHIO_ASR_ZH00016 | 德云社相声演出 | 剧场相声 Theater Crosstalk Show | 包袱段子 Funny Stories | 1 | ★★★ |
| SPEECHIO_ASR_ZH00017 | 吐槽大会 | 脱口秀电视节目 Stand-up Comedy | 明星糗事 Celebrity Jokes | 1.8 | ★★☆ |
| SPEECHIO_ASR_ZH00018 | 小猪佩奇 & 熊出没 | 少儿动画 Children's Cartoon | 童话故事、日常 Fairy Tales & Daily Life | 0.9 | ★☆ |
| SPEECHIO_ASR_ZH00019 | CCTV5 NBA 比赛转播 | 体育赛事解说 Live Sports Commentary | 篮球、NBA Basketball & NBA Games | 0.7 | ★★★ |
| SPEECHIO_ASR_ZH00020 | 篮球人物 | 纪录片 Documentary | 篮球明星、成长 NBA Superstars' Life & History | 2.2 | ★★ |
| SPEECHIO_ASR_ZH00021 | 汽车之家 车辆评测 | 短视频 VLog | 汽车测评 Car Reviews & Road Driving Tests | 1.7 | ★★★☆ |
| SPEECHIO_ASR_ZH00022 | 小艾大叔 豪宅带看 | 短视频 VLog | 房地产、豪宅 Real Estate & Mansion Tours | 1.7 | ★★★ |
| SPEECHIO_ASR_ZH00023 | 无聊开箱 & Zealer评测 | 短视频 VLog | 产品开箱评测 Product Unboxing & Reviews | 2 | ★★★ |
| SPEECHIO_ASR_ZH00024 | 付老师种植技术 | 短视频 VLog | 农业、种植 Agriculture & Planting | 2.7 | ★★★☆ |
| SPEECHIO_ASR_ZH00025 | 石国鹏讲古希腊哲学 | 线下培训 Offline Lecture | 历史、古希腊哲学 History & Greek Philosophy | 1.3 | ★★☆ |

Commercial APIs (ZH)

| 编号 MODEL_ID | 类型 Type | 模型作者/所有人 Model Author/Owner | 简介 Description | 链接 URL |
|---|---|---|---|---|
| aispeech_api | Cloud API | 思必驰 AISpeech | 思必驰开放平台 AISpeech Open Platform | https://cloud.aispeech.com |
| aliyun_api | Cloud API | 阿里巴巴 Alibaba | 阿里云 Alibaba Cloud | https://ai.aliyun.com/nls/asr |
| baidu_pro_api | Cloud API | 百度 Baidu | 百度智能云(极速版) Baidu AI Cloud (express edition) | https://cloud.baidu.com/product/speech/asr |
| | Cloud API | 讯飞 IFlyTek | 讯飞开放平台(听写服务) iFlytek Open Platform (dictation service) | https://www.xfyun.cn/services/voicedictation |
| microsoft_api | Cloud API | 微软 Microsoft | Azure | https://azure.microsoft.com/zh-cn/services/cognitive-services/speech-services/ |
| sogou_api | Cloud API | 搜狗 Sogou | AI开放平台 AI Open Platform | https://ai.sogou.com/product/one_recognition/ |
| tencent_api | Cloud API | 腾讯 Tencent | 腾讯云 Tencent Cloud | https://cloud.tencent.com/product/asr |
| yitu_api | Cloud API | 依图 YituTech | 依图语音开放平台 Yitu Speech Open Platform | https://speech.yitutech.com |

Open-Sourced Pretrained Models (ZH)

| 编号 MODEL_ID | 类型 Type | 模型作者/所有人 Model Author/Owner | 简介 Description | 链接 URL |
|---|---|---|---|---|
| speechio_kaldi_multicn | Pretrained ASR model | 那兴宇 Xingyu NA | Kaldi预训练模型 Kaldi pretrained ASR model | Based on the Kaldi recipe https://github.com/kaldi-asr/kaldi/tree/master/egs/multi_cn/s5 |

Follow the submission guideline in HOW_TO_SUBMIT.md to submit your own model and have it benchmarked.
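For a rough idea of what a model wrapper might look like, here is a hypothetical sketch of the recognition-invocation step. The real interface, input test-set format, and output format are defined in HOW_TO_SUBMIT.md. The tab-separated `utt_id<TAB>audio_path` input, the `utt_id<TAB>text` output, and the `Recognizer`, `read_wav_list`, and `run_recognition` names used here are assumptions for illustration only.

```python
import sys
from typing import Callable, Iterable, Iterator, Tuple

# Hypothetical recognizer interface: audio file path in, transcript out.
Recognizer = Callable[[str], str]


def read_wav_list(lines: Iterable[str]) -> Iterator[Tuple[str, str]]:
    """Parse assumed 'utt_id<TAB>audio_path' lines, skipping blank lines.
    A non-blank line without a tab raises ValueError."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        utt_id, audio_path = line.split("\t", 1)
        yield utt_id, audio_path


def run_recognition(lines: Iterable[str], recognize: Recognizer) -> Iterator[str]:
    """Yield one assumed 'utt_id<TAB>text' result line per input utterance."""
    for utt_id, audio_path in read_wav_list(lines):
        try:
            text = recognize(audio_path)
        except Exception as err:
            # Keep going; an empty hypothesis is later scored as deletions.
            print(f"{utt_id}: recognition failed: {err}", file=sys.stderr)
            text = ""
        # Collapse tabs/newlines so each result stays on a single line.
        yield f"{utt_id}\t{' '.join(text.split())}"
```

A submitter would pass their own model's transcription function as `recognize`, for example by iterating `run_recognition(open("wav.list", encoding="utf-8"), my_model.transcribe)` and writing each yielded line to a results file (`wav.list` and `my_model.transcribe` are placeholders). Failures are logged instead of aborting the run, so one bad audio file does not invalidate a whole test set.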

Email: [email protected]