mxnet version of Large-Margin Softmax Loss for Convolutional Neural Networks.

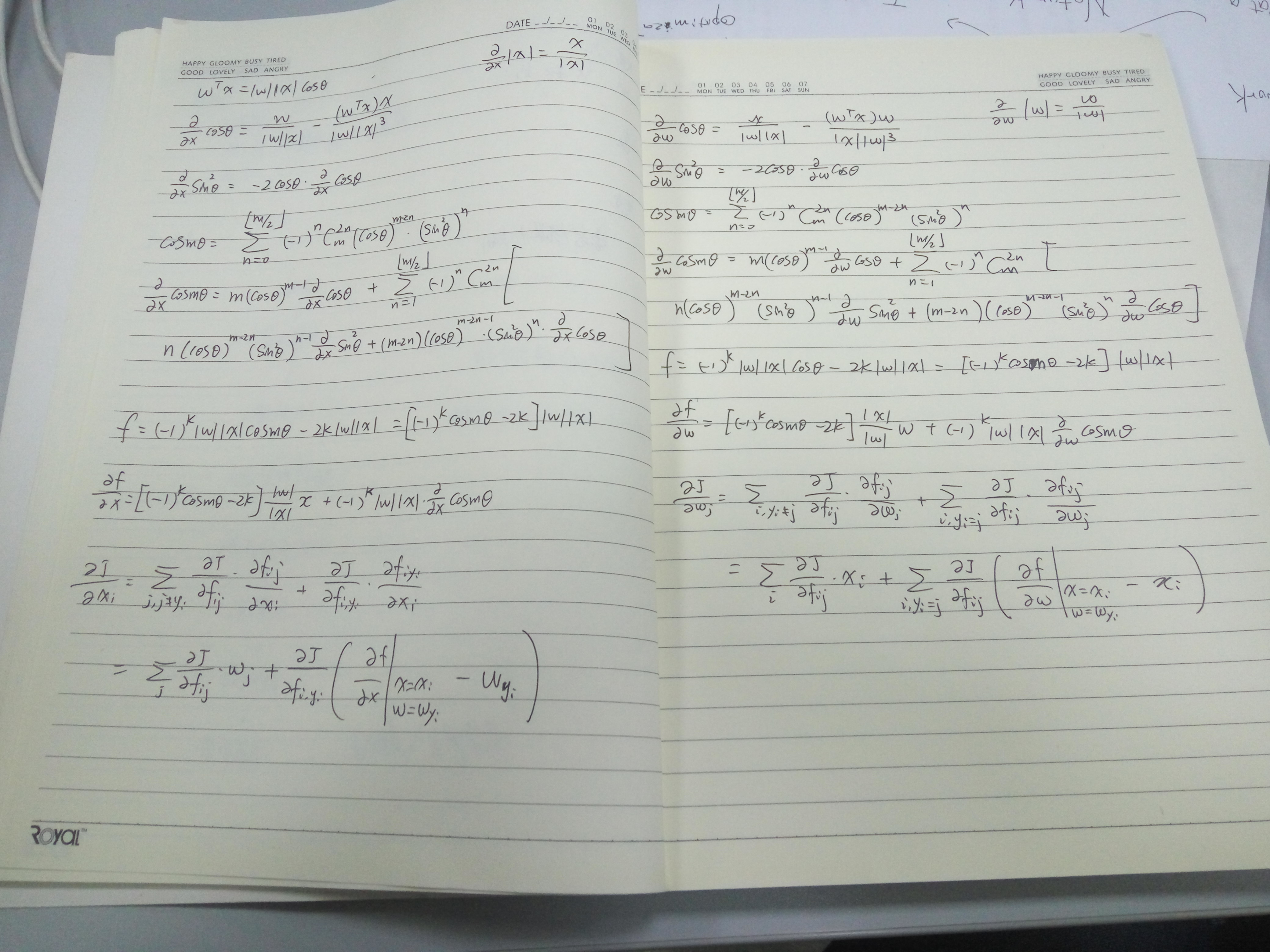

I put all formula I used to calculate the derivatives below. You can check it by yourself. If there's a mistake, please do tell me or open an issue.

The derivatives doesn't include lambda in the paper, but the code does. Instead of using lambda to weight the original f_i_yi = |w_yi||x_i|cos(t), the code uses beta because lambda is a keyword for Python, but beta plays exactly the same role as lambda.

Gradient check can be failed with data type float32 but ok with data type float64. So don't afraid to see gradient check failed.

I implement the operator both in Python and C++(CUDA). The performance below is training LeNet on a single GTX1070 with parameters margin = 4, beta = 1. Notice the C++ implement can only run on GPU context.

| Batch Size | traditional fully connected | lsoftmax in Python | lsoftmax in C++(CUDA) |

|---|---|---|---|

| 128 | ~45000 samples / sec | 2800 ~ 3300 samples / sec | ~40000 samples / sec |

| 256 | ~54000 samples / sec | 3500 ~ 4200 samples / sec | ~47000 samples / sec |