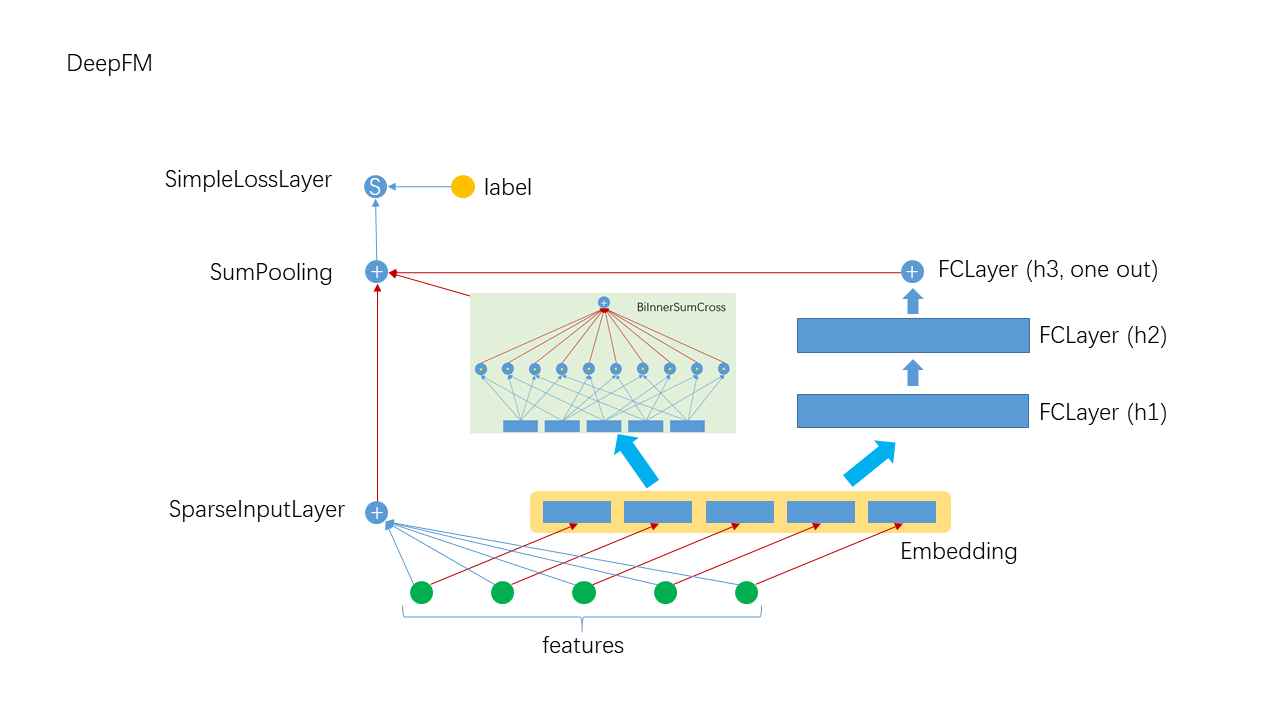

The DeepFM algorithm adds a depth layer to the FM (Factorization Machine). Compared with the PNN and NFM algorithms, it preserves the second-order implicit feature intersection of FM and uses the deep network to obtain high-order feature intersections. The structure is as follows:

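Unlike the traditional FM implementation, the combination of Embedding and BiInnerSumCross is used to implement the second-order implicit cross. The traditional FM quadratic cross term is:

$$\sum_{i}\sum_{j>i} \mathbf{v}_i^{T}\mathbf{v}_j \, x_i x_j$$

In the implementation, $\mathbf{v}_i$ is stored by Embedding. Calling Embedding's `calOutput` computes $x_i \mathbf{v}_i$ and outputs the results together, so the Embedding output of a sample is $(\mathbf{u}_1, \mathbf{u}_2, \ldots, \mathbf{u}_k)$, where $\mathbf{u}_i = x_i \mathbf{v}_i$.

The original quadratic term can then be re-expressed as:

$$\sum_{i}\sum_{j>i} \mathbf{u}_i^{T}\mathbf{u}_j = \frac{1}{2}\left[\Big(\sum_{i} \mathbf{u}_i\Big)^{T}\Big(\sum_{j} \mathbf{u}_j\Big) - \sum_{i} \mathbf{u}_i^{T}\mathbf{u}_i\right]$$

This is the forward computation of BiInnerSumCross, implemented in Scala as follows: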

```scala
// Running sum of one sample's embedding vectors, reused across rows
val sumVector = VFactory.denseDoubleVector(mat.getSubDim)
(0 until batchSize).foreach { row =>
  val partitions = mat.getRow(row).getPartitions
  partitions.foreach { vectorOuter =>
    data(row) -= vectorOuter.dot(vectorOuter) // subtract the squared norm of each embedding vector
    sumVector.iadd(vectorOuter)               // accumulate the sum of the embedding vectors
  }
  data(row) += sumVector.dot(sumVector)       // add the squared norm of the sum
  data(row) /= 2
  sumVector.clear()                           // reset for the next sample
}
```

The other layers used in the network are:

- SimpleInputLayer: Sparse data input layer, specially optimized for sparse high-dimensional data; essentially an FCLayer
- FCLayer: The most common layer in DNNs; a linear transformation followed by a transfer function
- SumPooling: Adds multiple inputs element-wise; all inputs must have the same shape
- SimpleLossLayer: Loss layer; different loss functions can be specified
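As a rough illustration of how these layers fit together, the sketch below uses plain Scala collections rather than the Angel API. All names (`DeepFMSketch`, `pairwiseCross`, `biInnerSumCross`, `fcLayer`) and all numeric values are hypothetical and chosen only for illustration. It checks that the sum-square form used by BiInnerSumCross matches the naive pairwise sum, then adds the wide, cross, and deep branches the way SumPooling would.

```scala
object DeepFMSketch {
  type Vec = Array[Double]

  def dot(a: Vec, b: Vec): Double = a.zip(b).map { case (x, y) => x * y }.sum

  // Naive second-order term: sum over all pairs i < j of the inner product of u_i and u_j.
  def pairwiseCross(us: Seq[Vec]): Double =
    (for (i <- us.indices; j <- (i + 1) until us.length) yield dot(us(i), us(j))).sum

  // Sum-square form: 0.5 * (squared norm of the sum - sum of squared norms).
  def biInnerSumCross(us: Seq[Vec]): Double = {
    val sumVector = us.reduce((a, b) => a.zip(b).map { case (x, y) => x + y })
    0.5 * (dot(sumVector, sumVector) - us.map(u => dot(u, u)).sum)
  }

  // One fully connected layer: linear transformation followed by a transfer function.
  def fcLayer(w: Array[Vec], b: Vec, f: Double => Double)(in: Vec): Vec =
    w.zip(b).map { case (row, bias) => f(dot(row, in) + bias) }

  val relu: Double => Double = x => math.max(0.0, x)

  def main(args: Array[String]): Unit = {
    // Toy embedding output for one sample: three fields, two factors each.
    val us: Seq[Vec] = Seq(Array(1.0, 2.0), Array(0.5, -1.0), Array(3.0, 0.0))
    val wide = 0.3 // toy output of the sparse linear (wide) branch

    val cross = biInnerSumCross(us)
    assert(math.abs(cross - pairwiseCross(us)) < 1e-9)

    // Deep branch: concatenated embeddings -> hidden layer (ReLU) -> scalar (identity).
    val concat: Vec = us.flatten.toArray
    val hidden = fcLayer(Array.fill(4)(Array.fill(6)(0.1)), Array.fill(4)(0.0), relu)(concat)
    val deep = fcLayer(Array(Array.fill(4)(0.5)), Array(0.0), identity)(hidden).head

    // SumPooling: element-wise sum of the three branch outputs (each of dimension 1).
    val logit = wide + cross + deep
    println(f"cross=$cross%.3f deep=$deep%.3f logit=$logit%.3f")
  }
}
```

The sum-square form needs one pass over the embedding vectors instead of a loop over all pairs, which is why the cross term can be computed in time linear in the number of vectors.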

The network is built with the following code:

```scala
override def buildNetwork(): Unit = {
  ensureJsonAst()

  // Wide part: sparse linear layer with a 1-dimensional output
  val wide = new SimpleInputLayer("input", 1, new Identity(),
    JsonUtils.getOptimizerByLayerType(jsonAst, "SparseInputLayer")
  )

  // Embedding shared by the FM cross term and the deep part
  val embeddingParams = JsonUtils.getLayerParamsByLayerType(jsonAst, "Embedding")
    .asInstanceOf[EmbeddingParams]
  val embedding = new Embedding("embedding", embeddingParams.outputDim,
    embeddingParams.numFactors, embeddingParams.optimizer.build()
  )

  // FM part: second-order cross computed from the embedding output
  val innerSumCross = new BiInnerSumCross("innerSumPooling", embedding)

  // Deep part: fully connected layers on top of the embedding
  val mlpLayer = JsonUtils.getFCLayer(jsonAst, embedding)

  // Sum the three branches and attach the loss
  val join = new SumPooling("sumPooling", 1, Array[Layer](wide, innerSumCross, mlpLayer))
  new SimpleLossLayer("simpleLossLayer", join, lossFunc)
}
```

DeepFM has many parameters, which are specified in a Json configuration file (for a complete description of the Json configuration file, please refer to the Json explanation). A typical example (see data) is:

```json
{
  "data": {
    "format": "dummy",
    "indexrange": 148,
    "numfield": 13,
    "validateratio": 0.1,
    "sampleratio": 0.2
  },
  "model": {
    "modeltype": "T_DOUBLE_SPARSE_LONGKEY",
    "modelsize": 148
  },
  "train": {
    "epoch": 10,
    "numupdateperepoch": 10,
    "lr": 0.5,
    "decayclass": "StandardDecay",
    "decaybeta": 0.01
  },
  "default_optimizer": "Momentum",
  "layers": [
    {
      "name": "wide",
      "type": "simpleinputlayer",
      "outputdim": 1,
      "transfunc": "identity"
    },
    {
      "name": "embedding",
      "type": "embedding",
      "numfactors": 8,
      "outputdim": 104,
      "optimizer": {
        "type": "momentum",
        "momentum": 0.9,
        "reg2": 0.01
      }
    },
    {
      "name": "fclayer",
      "type": "FCLayer",
      "outputdims": [
        100,
        100,
        1
      ],
      "transfuncs": [
        "relu",
        "relu",
        "identity"
      ],
      "inputlayer": "embedding"
    },
    {
      "name": "biinnersumcross",
      "type": "BiInnerSumCross",
      "inputlayer": "embedding",
      "outputdim": 1
    },
    {
      "name": "sumPooling",
      "type": "SumPooling",
      "outputdim": 1,
      "inputlayers": [
        "wide",
        "biinnersumcross",
        "fclayer"
      ]
    },
    {
      "name": "simplelosslayer",
      "type": "simplelosslayer",
      "lossfunc": "logloss",
      "inputlayer": "sumPooling"
    }
  ]
}
```

The job can then be submitted with a script such as:

```shell
runner="com.tencent.angel.ml.core.graphsubmit.GraphRunner"
modelClass="com.tencent.angel.ml.core.graphsubmit.AngelModel"

$ANGEL_HOME/bin/angel-submit \
    --angel.job.name DeepFM \
    --action.type train \
    --angel.app.submit.class $runner \
    --ml.model.class.name $modelClass \
    --angel.train.data.path $input_path \
    --angel.save.model.path $model_path \
    --angel.log.path $log_path \
    --angel.workergroup.number $workerNumber \
    --angel.worker.memory.gb $workerMemory \
    --angel.worker.task.number $taskNumber \
    --angel.ps.number $PSNumber \
    --angel.ps.memory.gb $PSMemory \
    --angel.output.path.deleteonexist true \
    --angel.task.data.storage.level $storageLevel \
    --angel.task.memorystorage.max.gb $taskMemory \
    --angel.worker.env "LD_PRELOAD=./libopenblas.so" \
    --angel.ml.conf $deepfm_json_path \
    --ml.optimizer.json.provider com.tencent.angel.ml.core.PSOptimizerProvider
```

For a deep learning model, the data, training, and network configuration must first be specified in the Json file. Resources such as the number and memory of workers and parameter servers (PS) depend on the specific dataset.
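Before submitting, it can help to sanity-check the numbers in the Json configuration. The sketch below is plain Scala with values copied by hand from the example configuration; `DeepFMConfigCheck`, `ExampleConf`, and `problems` are hypothetical names, not part of Angel. It assumes that the embedding `outputdim` is expected to equal `numfield` times `numfactors` (as it does in the example, 13 × 8 = 104), and it relies on the SumPooling requirement that all inputs share the same shape.

```scala
object DeepFMConfigCheck {
  // Values copied by hand from the example configuration (no Json parsing here).
  final case class ExampleConf(
      numField: Int,
      numFactors: Int,
      embeddingOutputDim: Int,
      fcOutputDims: Seq[Int],
      fcTransFuncs: Seq[String],
      sumPoolingOutputDim: Int)

  // Returns human-readable problems; an empty result means all checks passed.
  def problems(c: ExampleConf): Seq[String] = Seq(
    // Assumption: one block of numFactors values per field.
    (c.embeddingOutputDim != c.numField * c.numFactors) ->
      "embedding outputdim != numfield * numfactors",
    // Each fully connected layer needs exactly one transfer function.
    (c.fcOutputDims.length != c.fcTransFuncs.length) ->
      "outputdims and transfuncs differ in length",
    // SumPooling adds its inputs element-wise, so shapes must match.
    (c.fcOutputDims.lastOption != Some(c.sumPoolingOutputDim)) ->
      "last FC output dimension != SumPooling outputdim"
  ).collect { case (true, msg) => msg }

  def main(args: Array[String]): Unit = {
    val conf = ExampleConf(13, 8, 104, Seq(100, 100, 1), Seq("relu", "relu", "identity"), 1)
    val found = problems(conf)
    println(if (found.isEmpty) "config looks consistent" else found.mkString("; "))
  }
}
```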