- Docker

- Configuring InfluxDB

- Configuring Telegraf

- Configuring Graylog

- Configuring Grafana

- Configuration for the Suricata dashboard #Optional

- Troubleshooting

To simplify everything, we'll use a Docker Compose file.

You will need to have Docker and Docker Compose installed on a server that will host the dashboard.

After you've installed Docker and Docker Compose, download the docker-compose.yaml from this repo.

curl https://raw.githubusercontent.com/bsmithio/OPNsense-Dashboard/master/docker-compose.yaml -o docker-compose.yaml

It's important that you change the TZ environment variable to your timezone for everything to work properly.

I also recommend you change the passwords in this compose file.

After you've made the necessary changes, run docker-compose up -d in the same directory as your docker-compose.yaml.
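Before starting the containers, the timezone change mentioned above could be scripted roughly as follows. This is only a sketch: `set_compose_tz` is a hypothetical helper name, and it assumes the timezone appears in the compose file in the list form `- TZ=<zone>`, so check your own docker-compose.yaml first.

```shell
# Hypothetical helper: replace the value of the "TZ=<zone>" entry on each
# line of a compose file. Assumes the list-style form "- TZ=Some/Zone".
# A backup of the original is kept next to it as <file>.bak.
set_compose_tz() {
  compose_file="$1"
  new_tz="$2"
  sed -i.bak -E "s|(TZ=)[^[:space:]\"']*|\1${new_tz}|" "$compose_file"
}

# Example (run in the directory that holds docker-compose.yaml):
#   set_compose_tz docker-compose.yaml "America/New_York"
#   grep -n 'TZ=' docker-compose.yaml   # review the result before starting
```

Reviewing the `grep` output before running `docker-compose up -d` is a cheap way to confirm that only the intended lines changed.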

Once you have your docker containers running, follow the steps below.

After InfluxDB is started:

- Go to http://<docker_ip>:8086

- Set up your username, password, bucket and organization here.

- Navigate to Data -> Telegraf and create a configuration for a system. Name it and copy your API token; you will need this for your Telegraf configuration.

- Generate another API token for Grafana. Click on API tokens -> Generate API Token -> Read/Write Access -> Click on your bucket under Read -> and Save. Copy this somewhere as well, you'll need it for Grafana.

If you previously used the pkg install version of telegraf, follow these instructions.

Run sudo pkg remove telegraf to remove telegraf.

Delete the line that starts with telegraf in /usr/local/etc/sudoers.

Once those are done you can continue with the new configuration.

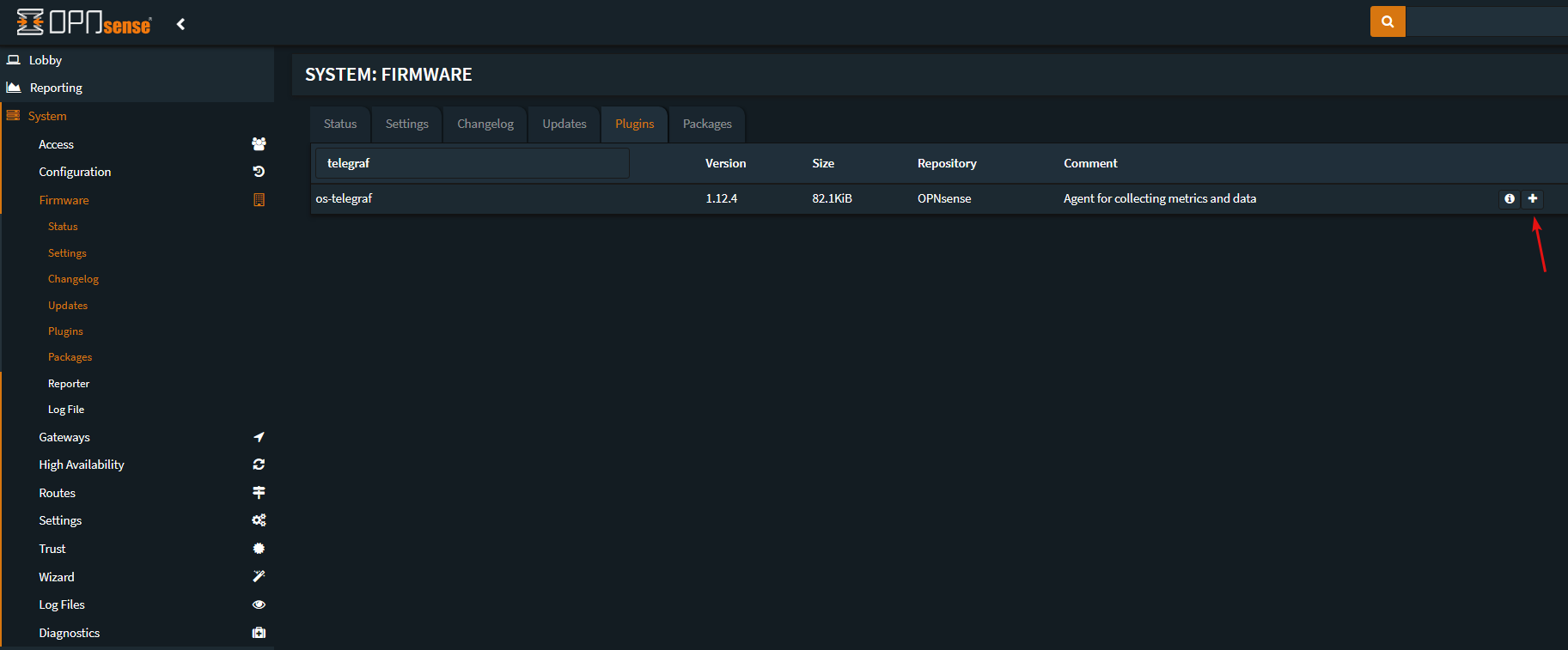

Install the Telegraf plugin on OPNsense. System -> Firmware -> Plugins -> Search for telegraf, and click the plus icon to install.

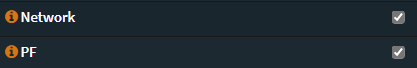

Navigate to Services -> Telegraf -> Input

Enable Network and PF Inputs.

Then click Save.

Now navigate to Services -> Telegraf -> Output

Enable Influx v2 Output and fill in the following:

Influx v2 Token: Your InfluxDB Token

Influx v2 URL: Your InfluxDB URL, this will be the IP address or hostname of your system that is running InfluxDB. E.g http://192.168.1.10:8086

Influx v2 Organization: Your InfluxDB Organization

Influx v2 Bucket: Your InfluxDB Bucket

Then click Save.
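If you want to confirm the token, organization, and bucket before relying on Telegraf, one option is to write a single throwaway point through the InfluxDB v2 HTTP API. The function names below are hypothetical, the measurement name `setup_check` is made up for this sketch, and the code assumes your organization and bucket names need no URL-encoding.

```shell
# Build the InfluxDB v2 write endpoint for a base URL, org, and bucket.
# Assumes org and bucket contain no characters that need URL-encoding.
influx_write_url() {
  printf '%s/api/v2/write?org=%s&bucket=%s&precision=s\n' "${1%/}" "$2" "$3"
}

# Send one throwaway point and print the HTTP status code.
# A 204 response indicates the write was accepted.
influx_write_test() {
  curl -s -o /dev/null -w '%{http_code}\n' \
    -X POST "$(influx_write_url "$1" "$2" "$3")" \
    -H "Authorization: Token $4" \
    --data-binary 'setup_check value=1'
}

# Example:
#   influx_write_test http://192.168.1.10:8086 my-org opnsense "$INFLUX_TOKEN"
```

A 401 or 404 response usually points at the token or at the organization and bucket names. Note that a successful test leaves a `setup_check` measurement in the bucket.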

You can use Ansible to automate a few sections. Install Ansible on your Linux server and use the files at https://github.com/bsmithio/OPNsense-Dashboard/tree/master/ansible. If you use this method you can skip these sections: Add telegraf to sudoers, Telegraf Plugins, and Configuration for the Suricata dashboard.

After that, we need to add telegraf to sudoers, using NOPASSWD and restricting telegraf to only the script it needs to run as root.

printf 'telegraf ALL=(root) NOPASSWD: /usr/local/bin/telegraf_pfifgw.php\n' | sudo tee -a /usr/local/etc/sudoers > /dev/null

You may also wish to disable sudo logging for telegraf_pfifgw.php, otherwise you'll see many sudo logs from telegraf running the script every 10 seconds.

printf 'Cmnd_Alias PFIFGW = /usr/local/bin/telegraf_pfifgw.php\n' | sudo tee -a /usr/local/etc/sudoers > /dev/null

printf 'Defaults!PFIFGW !log_allowed\n' | sudo tee -a /usr/local/etc/sudoers > /dev/null
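The `tee -a` commands above append a new line every time they are run, so repeating them leaves duplicate entries in sudoers. A minimal sketch of an idempotent alternative follows; `append_once` is a hypothetical helper, and it would need to run in a root shell to write to /usr/local/etc/sudoers.

```shell
# Append a line to a file only if that exact line is not already present.
append_once() {
  line="$1"
  target="$2"
  grep -qxF -- "$line" "$target" 2>/dev/null || printf '%s\n' "$line" >> "$target"
}

# Example, in a root shell:
#   append_once 'telegraf ALL=(root) NOPASSWD: /usr/local/bin/telegraf_pfifgw.php' /usr/local/etc/sudoers
#   visudo -c   # syntax-check sudoers after editing
```

Running `visudo -c` afterwards checks the sudoers syntax without opening an editor, which helps catch a malformed line before it locks you out of sudo.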

Add the custom.conf telegraf config to /usr/local/etc/telegraf.d

sudo mkdir /usr/local/etc/telegraf.d

sudo chown telegraf:telegraf /usr/local/etc/telegraf.d

sudo chmod 750 /usr/local/etc/telegraf.d

sudo curl https://raw.githubusercontent.com/bsmithio/OPNsense-Dashboard/master/config/custom.conf -o /usr/local/etc/telegraf.d/custom.conf

Plugins must be copied to your OPNsense system

Place telegraf_pfifgw.php and telegraf_temperature.sh in /usr/local/bin and chmod them to 755.

curl "https://raw.githubusercontent.com/bsmithio/OPNsense-Dashboard/master/plugins/telegraf_pfifgw.php" -o /usr/local/bin/telegraf_pfifgw.php

curl "https://raw.githubusercontent.com/bsmithio/OPNsense-Dashboard/master/plugins/telegraf_temperature.sh" -o /usr/local/bin/telegraf_temperature.sh

chmod 755 /usr/local/bin/telegraf_temperature.sh /usr/local/bin/telegraf_pfifgw.php

Test these out before starting the telegraf service by executing them

sudo telegraf_pfifgw.php

telegraf_temperature.sh

The temperature plugin may not work on every system. If you receive sysctl: unknown oid 'hw.acpi.thermal', comment out or remove that line from the plugin.

After this is done, navigate to Services -> Telegraf -> General -> Enable Telegraf Agent.

Lastly, check if Telegraf is running

sudo service telegraf status

To make the map work on Grafana, you must create a MaxMind account here https://www.maxmind.com/en/geolite2/signup. Then generate a license key by going to Account -> Manage License Keys -> Generate New License Key. Copy this key somewhere because you'll need it again soon.

Three GeoIP files are useful: the Country, City, and ASN databases. _Note: Due to recent changes by MaxMind, the City database does not give the same information as Country. Additional information about GeoIP can be found below._

You'll need to download the GeoIP database files to your Graylog container. Access your Graylog container's shell from your Docker host like so:

sudo docker exec -it graylog /bin/bash

Then download the database files, replacing <YOUR_LICENSE> with the key you generated above.

curl -flLs "https://download.maxmind.com/app/geoip_download?edition_id=GeoLite2-Country&license_key=<YOUR_LICENSE>&suffix=tar.gz" -o GeoLite2-Country.tar.gz \

&& tar -xzvf GeoLite2-Country.tar.gz \

&& mv GeoLite2-Country_*/GeoLite2-Country.mmdb /usr/share/graylog/data/data/

curl -flLs "https://download.maxmind.com/app/geoip_download?edition_id=GeoLite2-City&license_key=<YOUR_LICENSE>&suffix=tar.gz" -o GeoLite2-City.tar.gz \

&& tar -xzvf GeoLite2-City.tar.gz \

&& mv GeoLite2-City_*/GeoLite2-City.mmdb /usr/share/graylog/data/data/

curl -flLs "https://download.maxmind.com/app/geoip_download?edition_id=GeoLite2-ASN&license_key=<YOUR_LICENSE>&suffix=tar.gz" -o GeoLite2-ASN.tar.gz \

&& tar -xzvf GeoLite2-ASN.tar.gz \

&& mv GeoLite2-ASN_*/GeoLite2-ASN.mmdb /usr/share/graylog/data/data/
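Since the three downloads differ only in the edition name, they can be folded into a loop, which also avoids copy-paste slips between editions. The sketch below reuses the URL pattern and target directory from the commands above; `geoip_url` and `fetch_geoip` are hypothetical helper names.

```shell
# Print the download URL for one GeoLite2 edition (e.g. GeoLite2-ASN).
geoip_url() {
  printf 'https://download.maxmind.com/app/geoip_download?edition_id=%s&license_key=%s&suffix=tar.gz\n' "$1" "$2"
}

# Download, unpack, and install one edition into the Graylog data directory.
fetch_geoip() {
  edition="$1"
  license="$2"
  curl -fLs "$(geoip_url "$edition" "$license")" -o "${edition}.tar.gz" \
    && tar -xzf "${edition}.tar.gz" \
    && mv "${edition}"_*/"${edition}.mmdb" /usr/share/graylog/data/data/
}

# Example, inside the Graylog container:
#   for edition in GeoLite2-Country GeoLite2-City GeoLite2-ASN; do
#     fetch_geoip "$edition" "<YOUR_LICENSE>"
#   done
```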

- In a browser, log in at http://<docker_ip>:9000.

-

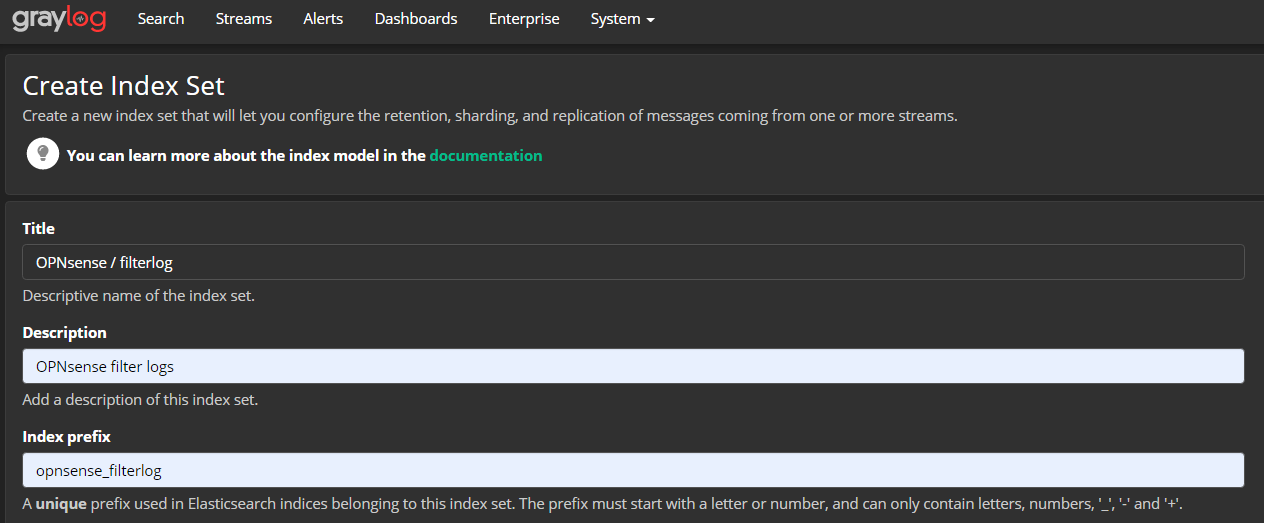

For Graylog, it's recommended to create an index set. To do so, navigate to System -> Indices. Create an index set with the name "OPNsense / filterlog" and set the index prefix to

opnsense_filterlog. -

Download content pack.

-

Install content pack on Graylog:

System -> Content Packs -> Upload, choose the pack,Upload, thenInstall. Two configurables are provided in theContent Pack.a.

Portfor receivingfilterlogb.

Nameof the syslog input -

Content Packshould install the following components:

inputstreamgrok extractorsonly v4 extractors are provided as I do not yet have v6 enabled- ✓ IPv4 UDP

- ✓ IPv4 TCP

- ✓ IPv4 ICMP

- ✗ IPv4 UDP

- ✗ IPv4 TCP

- ✗ IPv4 ICMP

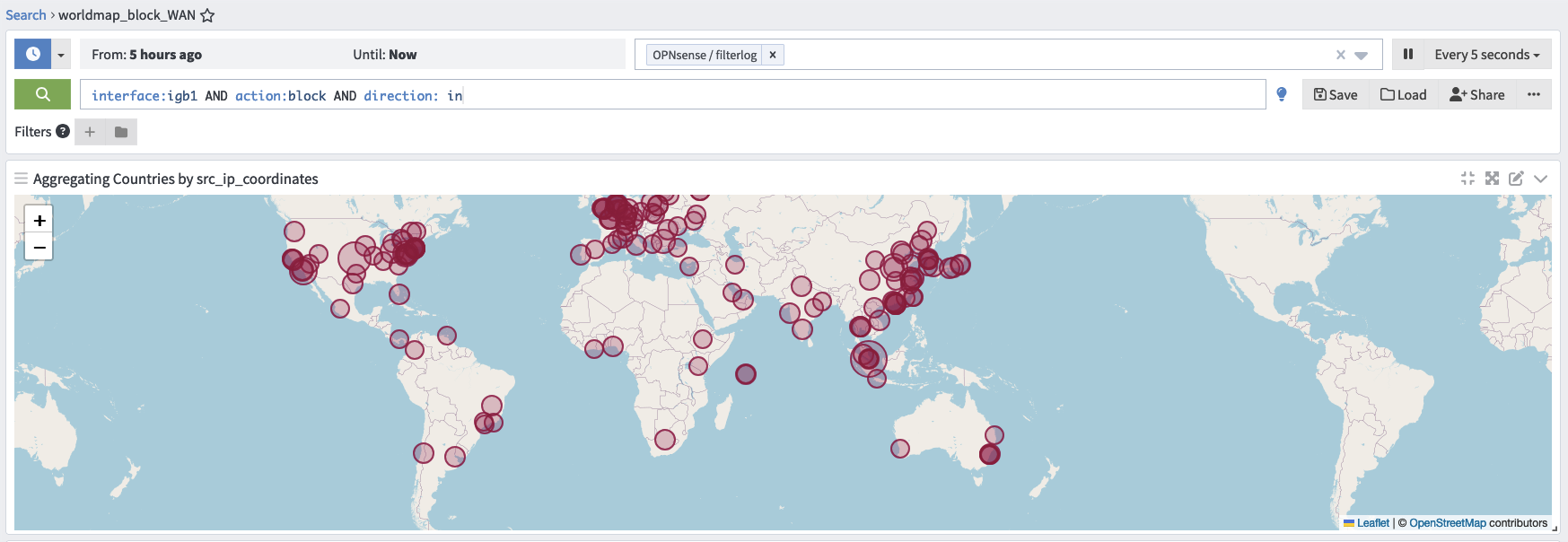

pipelinealong withruleslookup tablecachedata adaptersearchwith geomap display

-

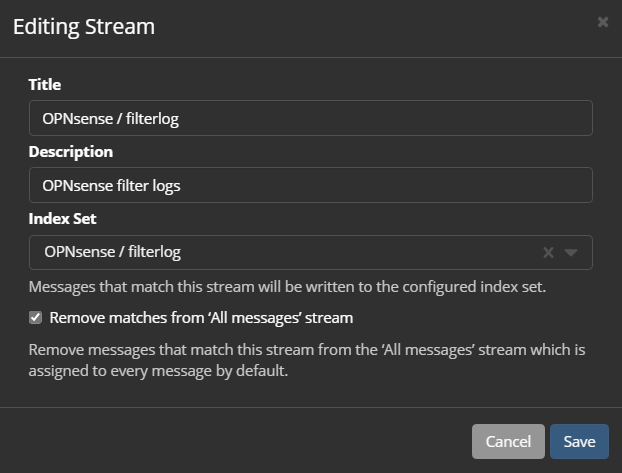

Add index set from [2] earlier to

OPNsense / filterlogstream. Navigate toStreams -> More Actions -> Edit Stream-> select your index set and save. -

Navigate to

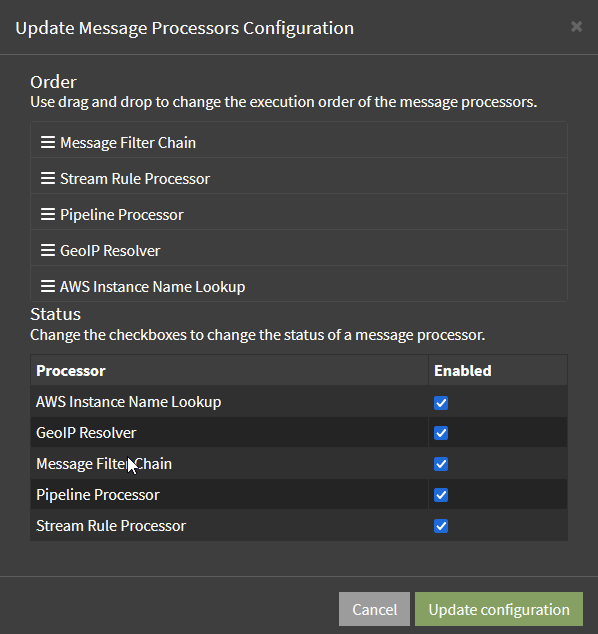

System -> Configurations -> Message Processors. The following order worked for Brian Smith:

But, I had to switch GeoIP Resolver and Pipeline Processor

Ensure that all of these are enabled, and click save.

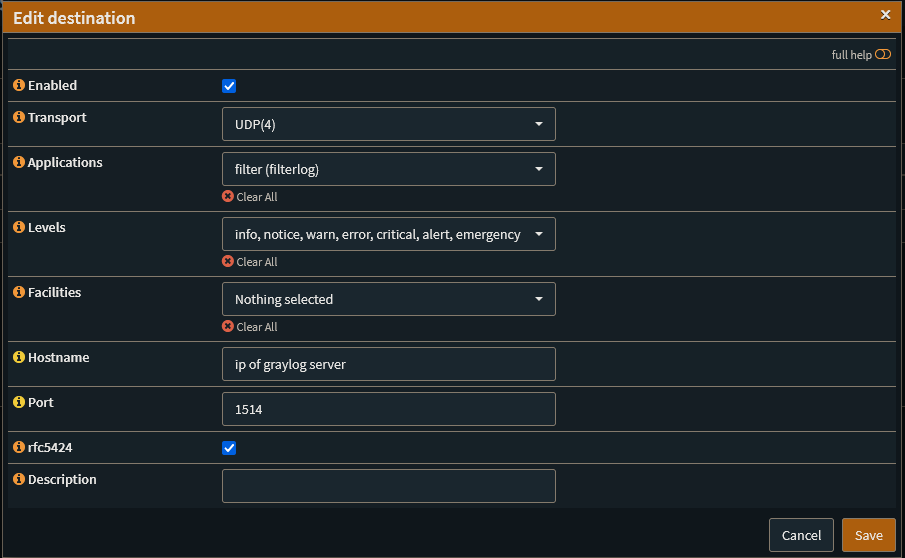

Once that is all done, log in to your OPNsense router and navigate to System -> Settings -> Logging / targets. Add a new target with the following options:

Add a description if you'd like, then click save.

Note: I use port 1514 for sending standard syslog UDP streams from many of my VMs/containers. To reduce clutter and processing overhead, I changed this port to 1515. This lets the OPNsense / filterlog regex and extractors work only on filterlog messages from OPNsense, not on regular syslogs from other entities.

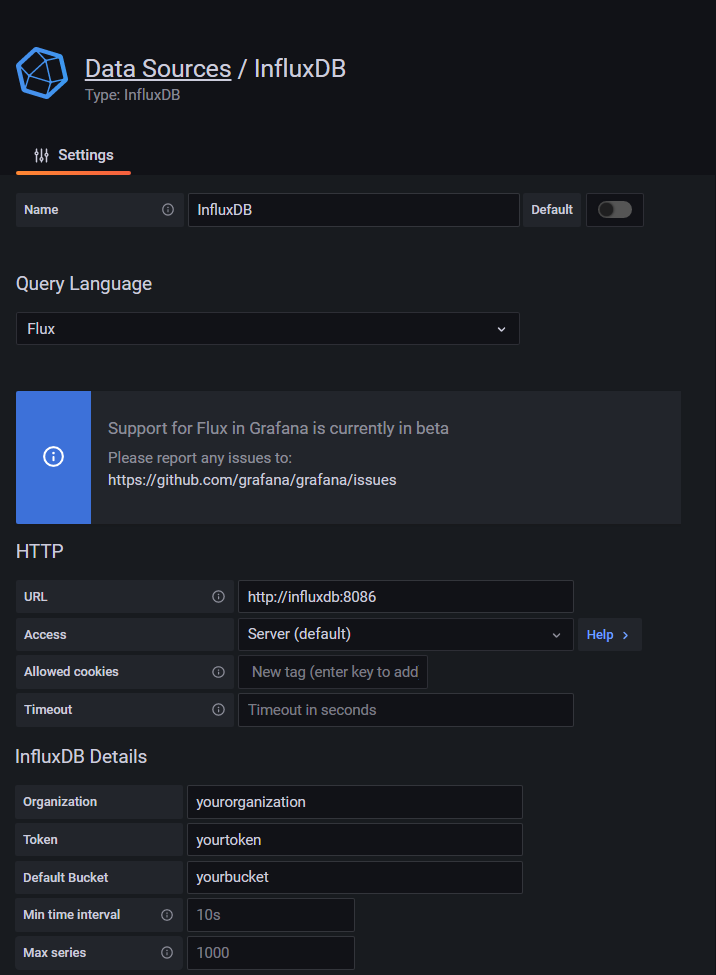

You will need to add the data sources on Grafana. Navigate to http://<docker_ip>:3000, log in, click on the cog wheel, and select Add a Data Source.

For InfluxDB, make the following configurations

Query Language: Flux

URL: http://influxdb:8086

Organization: Your InfluxDB Organization

Token: Your Grafana InfluxDB API Token

Default Bucket: Your opnsense bucket. This will be the bucket that the panel queries will use.

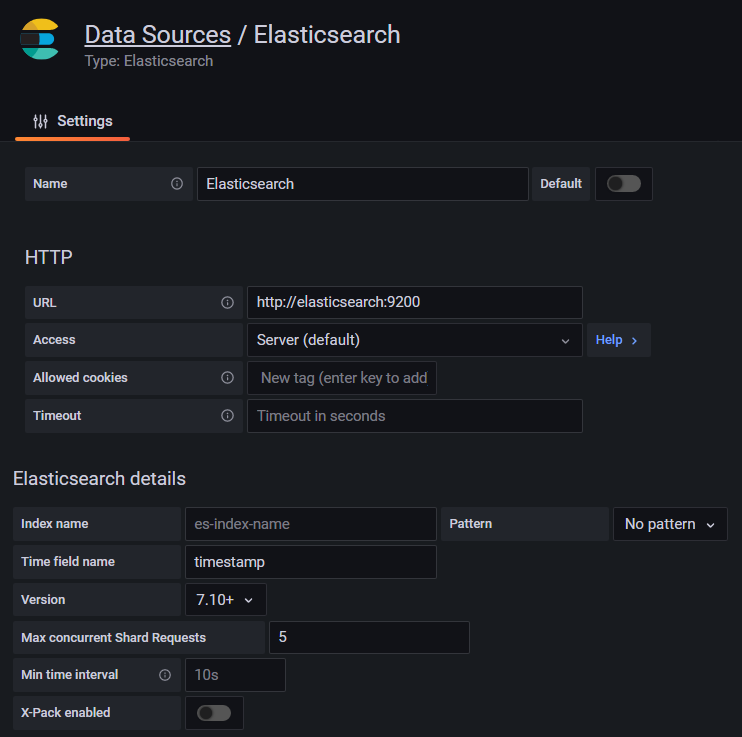

For ElasticSearch, make the following configurations

URL: http://elasticsearch:9200

Time field name: timestamp

Version: 7.10+

To import the dashboard, copy the JSON from OPNsense-Grafana-Dashboard.json

Navigate to Dashboards -> Browse -> Import, paste under Import via panel json.

Dashboard Settings -> Variables -> Click on a variable to edit

- WAN - It is currently set to igb1 under Custom options. Use a comma-separated list for multiple WAN interfaces by editing the `Custom options` field.

- LAN - $LAN uses a regex to remove any interfaces you don't want to be grouped as LAN. The filtering happens in the "Regex" field. I use a negative lookahead regex to match the interfaces I want excluded. It should be pretty easy to understand what you need to do here. I have excluded `igb1` (WAN). My traffic is on a `lagg0` interface, not `igb0`, therefore I have excluded `igb0` as well: `/^(?!enc0$|igb0$|igb1$)/`

- iface - $iface is the interface variable for the Firewall panels. Its value is populated using a Flux query.
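To preview which interface names the LAN regex above would keep, you can run an equivalent filter in a shell. This is only an approximation for experimenting: plain `grep -E` has no lookahead, so the sketch inverts an exact-match pattern instead, and `lan_interfaces` is a hypothetical helper name.

```shell
# Read interface names on stdin (one per line) and print those that are
# NOT exactly enc0, igb0, or igb1, mirroring /^(?!enc0$|igb0$|igb1$)/.
lan_interfaces() {
  grep -vE '^(enc0|igb0|igb1)$'
}

# Example:
#   printf 'igb0\nigb1\nlagg0\nenc0\n' | lan_interfaces
```

Because the match is anchored on both ends, a name such as igb10 is still kept, just as it is with the negative lookahead.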

Recommended time range should be less than 24 hours.

(NOT YET TESTED for new filterlog format)

This section assumes you have already configured Suricata.

Add suricata.conf to /usr/local/etc/telegraf.d

sudo curl 'https://raw.githubusercontent.com/bsmithio/OPNsense-Dashboard/master/config/suricata/suricata.conf' -o /usr/local/etc/telegraf.d/suricata.conf

Add custom.yaml to /usr/local/opnsense/service/templates/OPNsense/IDS

sudo curl 'https://raw.githubusercontent.com/bsmithio/OPNsense-Dashboard/master/config/suricata/custom.yaml' -o /usr/local/opnsense/service/templates/OPNsense/IDS/custom.yaml

Create the log file and give telegraf permissions to read it

sudo touch /tmp/eve.json

sudo chown :telegraf /tmp/eve.json

sudo chmod 640 /tmp/eve.json

Restart Suricata from Services -> Intrusion Detection -> Administration

Uncheck Enabled and click Apply.

Check Enabled and click Apply.

Restart telegraf by running

sudo service telegraf restart

To import the dashboard, copy the JSON from OPNsense-Grafana-Dashboard-Suricata.json and navigate to Dashboards -> Browse -> Import and paste under Import via panel json.

- You can run most plugins from a shell/SSH session to verify the output. (The environment variables may be different when telegraf is executing the plugin.)

- If you're copying from a Windows system, make sure the line endings are correct.

- The command below should display Unix line endings (\n or LF) as $ and Windows line endings (\r\n or CRLF) as ^M$.

cat -e /usr/local/bin/telegraf_pfifgw.php
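If `cat -e` does show ^M$ endings, the carriage returns can be stripped in place. The following is a rough sketch using only `tr`, `wc`, and `mktemp`; `has_cr` and `strip_cr` are hypothetical helper names, and the functions need write access to the file (root for files in /usr/local/bin).

```shell
# Succeeds (exit 0) if the file contains at least one carriage return.
# The substitutions are left unquoted so padding from wc is dropped.
has_cr() {
  [ $(tr -d '\r' < "$1" | wc -c) -ne $(wc -c < "$1") ]
}

# Strip carriage returns in place. Writing back with cat keeps the
# file's existing mode and ownership.
strip_cr() {
  tmp_file=$(mktemp) || return 1
  tr -d '\r' < "$1" > "$tmp_file" && cat "$tmp_file" > "$1"
  status=$?
  rm -f "$tmp_file"
  return "$status"
}

# Example, in a root shell:
#   has_cr /usr/local/bin/telegraf_pfifgw.php && strip_cr /usr/local/bin/telegraf_pfifgw.php
```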

If you get no useful output from running the plugin directly, try the following command before moving to the step below.

sudo su -m telegraf -c 'telegraf --test --config /usr/local/etc/telegraf.conf --config-directory /usr/local/etc/telegraf.d'

To troubleshoot plugins further, enable Debug Log and disable Quiet Log in the Telegraf GUI then click Save. Run the above command again.

sudo su -m telegraf -c 'telegraf --test --config /usr/local/etc/telegraf.conf --config-directory /usr/local/etc/telegraf.d'

When in doubt, run a few queries to see if the data you are looking for is being populated. I recommend doing this in Grafana's Explore tab.

import "influxdata/influxdb/schema"

schema.measurements(bucket: "opnsense")

from(bucket: "opnsense")

|> range(start: -24h)

|> filter(fn: (r) => r["_measurement"] == "system")

|> limit(n:10)

To delete a measurement from InfluxDB, you must access your InfluxDB instance's shell.

To do so run

sudo docker exec -it influxdb /bin/bash

on your docker host.

Then use the following

influx delete --bucket "$YourBucket" --predicate '_measurement="$Example"' -o $organization --start "1970-01-01T00:00:00Z" --stop "2050-12-31T23:59:00Z" --token "$YourAPIToken"

https://docs.influxdata.com/influxdb/cloud/query-data/flux/query-fields/

https://docs.influxdata.com/influxdb/cloud/query-data/flux/explore-schema/

If there is no data on the Suricata dashboard, verify if there are any alerts in /tmp/eve.json.
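A quick way to check is to count the alert events in the file. The sketch below assumes the log uses Suricata's usual EVE JSON layout, where each event is one line carrying an `event_type` field; `count_eve_alerts` is a hypothetical helper name.

```shell
# Print the number of lines in an EVE JSON log whose event_type is "alert".
# Defaults to /tmp/eve.json when no path is given.
count_eve_alerts() {
  grep -cE '"event_type"[[:space:]]*:[[:space:]]*"alert"' "${1:-/tmp/eve.json}"
}

# Example:
#   count_eve_alerts            # checks /tmp/eve.json
#   count_eve_alerts /path/to/other-eve.json
```

A count of 0 with a non-empty file suggests Suricata is logging but no rule has fired yet.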

If there is nothing in /tmp/eve.json, verify that /usr/local/opnsense/service/templates/OPNsense/IDS/custom.yaml and /usr/local/etc/suricata/custom.yaml are identical to the one in this repo.

If /usr/local/etc/suricata/custom.yaml is not identical, but /usr/local/opnsense/service/templates/OPNsense/IDS/custom.yaml is, you will need to reload Suricata from the GUI. To do so you would uncheck Enable in the Suricata GUI, click Apply, then check Enable, and click Apply again. You will need to wait for Suricata to reload. If you have a lot of rules this can take some time.

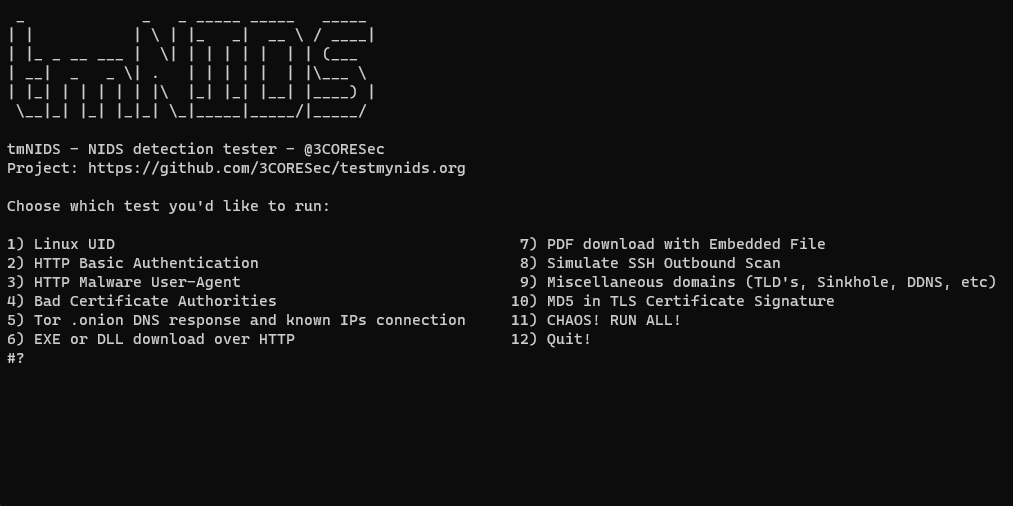

If you've verified and done all the steps above, and still see nothing, you could try using tmNIDS to generate alerts. You will need bash installed on your OPNsense system for this.

sudo pkg install bash

Once you have bash installed, you can use this one-liner to download and execute tmNIDS.

curl https://raw.githubusercontent.com/3CORESec/testmynids.org/master/tmNIDS -o /tmp/tmNIDS && chmod +x /tmp/tmNIDS && bash /tmp/tmNIDS

You can then run the tests through the CLI.

The Graylog GeoIP plugin gives the following lookup information (assuming the MaxMind databases are available):

{

"as_number": 15169,

"as_organization": "Google LLC"

}

{

"continent": { "code": "NA", "geoname_id": 6255149, "names": { "en": "North America" } },

"country": { "geoname_id": 6252001, "iso_code": "US", "names": { "en": "United States" } },

"registered_country": { "geoname_id": 6252001, "iso_code": "US", "names": { } },

"represented_country": { "geoname_id": null, "iso_code": "US", "names": { } },

"traits": {

"ip_address": "8.8.8.8",

"is_anonymous_proxy": false,

"is_legitimate_proxy": false,

"is_satellite_provider": false,

"isp": null,

"organization": null

}

}

{

"city": { "geoname_id": 5375480, "names": { "en": "Mountain View" } },

"location": {

"accuracy_radius": 1000,

"average_income": null,

"latitude": 37.386,

"longitude": -122.0838,

"metro_code": 807,

"population_density": null,

"time_zone": "America/Los_Angeles"

},

"postal": { "code": "94035" },

"subdivisions": [ { "geoname_id": 5332921, "iso_code": "CA", "names": { "en": "California" } } ]

}