NeurIPS 2024 [Paper] [Code] [Project Page] [🤗 Hugging Face Hub]

Authors: Heeseung Kim¹, Soonshin Seo², Kyeongseok Jeong², Ohsung Kwon², Soyoon Kim², Jungwhan Kim², Jaehong Lee², Eunwoo Song², Myungwoo Oh², Jung-Woo Ha², Sungroh Yoon¹†, Kang Min Yoo²†

¹Seoul National University, ²NAVER Cloud, †Corresponding Authors

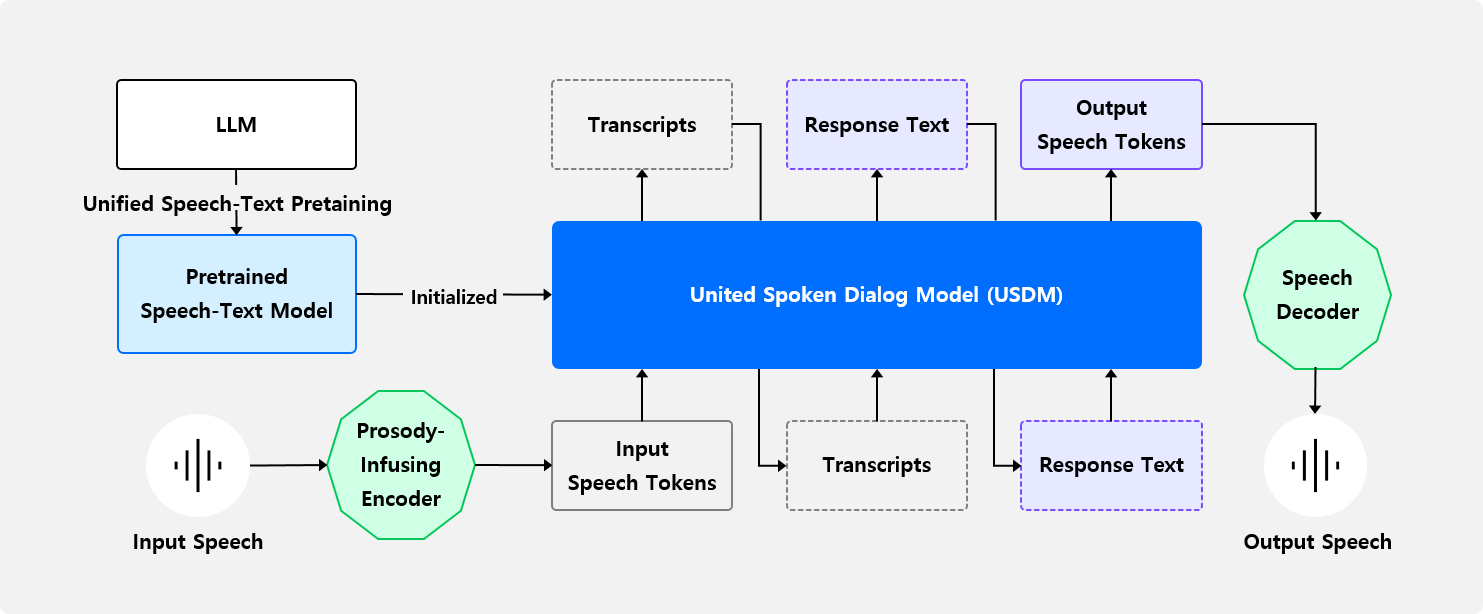

Recent work shows promising results in expanding the capabilities of large language models (LLMs) to directly understand and synthesize speech. However, an LLM-based strategy for modeling spoken dialogs remains elusive, calling for further investigation. This paper introduces an extensive speech-text LLM framework, the Unified Spoken Dialog Model (USDM), designed to generate coherent spoken responses with naturally occurring prosodic features relevant to the given input speech without relying on explicit automatic speech recognition (ASR) or text-to-speech (TTS) systems. We have verified the inclusion of prosody in speech tokens that predominantly contain semantic information and have used this foundation to construct a prosody-infused speech-text model. Additionally, we propose a generalized speech-text pretraining scheme that enhances the capture of cross-modal semantics. To construct USDM, we fine-tune our speech-text model on spoken dialog data using a multi-step spoken dialog template that stimulates the chain-of-reasoning capabilities exhibited by the underlying LLM. Automatic and human evaluations on the DailyTalk dataset demonstrate that our approach effectively generates natural-sounding spoken responses, surpassing previous and cascaded baselines. Our code and checkpoints are available at https://github.com/naver-ai/usdm.
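The multi-step template chains intermediate text steps between the input speech and the output speech. We read the paper as describing this order: input speech, its transcript, the text response, then the response speech. Treat that ordering as our interpretation. The bracketed section markers and the function names below are hypothetical; the released preprocessing code defines the actual template strings.

```python
from typing import Dict

# Hypothetical section markers; the released USDM template uses its own strings.
USER_SPEECH = "[USER SPEECH]"
USER_TEXT = "[USER TEXT]"
RESPONSE_TEXT = "[RESPONSE TEXT]"
RESPONSE_SPEECH = "[RESPONSE SPEECH]"
STEPS = [USER_TEXT, RESPONSE_TEXT, RESPONSE_SPEECH]


def build_training_example(user_units: str, user_text: str,
                           response_text: str, response_units: str) -> str:
    """Full fine-tuning sequence: speech in, then the chained intermediate steps."""
    return "\n".join([
        f"{USER_SPEECH} {user_units}",
        f"{USER_TEXT} {user_text}",
        f"{RESPONSE_TEXT} {response_text}",
        f"{RESPONSE_SPEECH} {response_units}",
    ])


def build_inference_prompt(user_units: str) -> str:
    """At inference only the user's speech is known; the model writes the rest."""
    return f"{USER_SPEECH} {user_units}\n{USER_TEXT}"


def split_continuation(continuation: str) -> Dict[str, str]:
    """Recover each step from the text generated after build_inference_prompt."""
    text = USER_TEXT + continuation
    parts: Dict[str, str] = {}
    for i, marker in enumerate(STEPS):
        start = text.find(marker)
        if start < 0:
            break  # generation stopped before reaching this step
        start += len(marker)
        end = text.find(STEPS[i + 1], start) if i + 1 < len(STEPS) else -1
        parts[marker] = (text[start:end] if end >= 0 else text[start:]).strip()
    return parts
```

In this sketch, only the `RESPONSE_SPEECH` part is passed on to the speech decoder. The transcript and text response act as intermediate reasoning steps.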

Our spoken dialog framework is structured in three parts:

- **Speech Tokenizer**
  - We use speech tokens (vocab size = 10,000) from SeamlessM4T. These tokens capture essential semantic and paralinguistic information, and the small vocabulary helps generalization. For details and audio samples, see our project page and paper.
- **Spoken Dialog Model (USDM)**
  - Our model converts user speech tokens into response tokens using a two-stage training approach:
    - Cross-modal training on a pre-trained LLM (Mistral-7B-v0.1) so that it handles both text and speech.
    - Fine-tuning for spoken dialog on DailyTalk.
  - The DailyTalk-trained model supports two speakers; for broader scenarios, consider datasets such as Fisher and Switchboard. For new tasks, load the pre-trained checkpoint and fine-tune it as needed.
- **Speech Decoder**
  - The decoder converts the model's output tokens into speech in two steps. A Voicebox-based model generates mel-spectrograms from the tokens, and BigVGAN synthesizes the waveform from those mel-spectrograms. We trained the Voicebox model ourselves; for BigVGAN we use the official checkpoint.
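For speech to enter a text LLM, each discrete unit ID must become a token that the LLM can read and emit. The sketch below assumes a hypothetical `<|unit_N|>` spelling for the 10,000 SeamlessM4T units. The helper names (`unit_to_token`, `text_to_units`, etc.) are illustrative and do not come from the USDM codebase.

```python
from typing import List

# Vocabulary size of the SeamlessM4T speech units described above.
VOCAB_SIZE = 10_000

# Hypothetical token spelling; the released USDM tokenizer may format unit tokens differently.
PREFIX, SUFFIX = "<|unit_", "|>"


def unit_to_token(unit: int) -> str:
    """Render one speech-unit ID as a text token, rejecting out-of-range IDs."""
    if not 0 <= unit < VOCAB_SIZE:
        raise ValueError(f"unit {unit} is outside [0, {VOCAB_SIZE})")
    return f"{PREFIX}{unit}{SUFFIX}"


def token_to_unit(token: str) -> int:
    """Parse a token produced by unit_to_token back into its unit ID."""
    if not (token.startswith(PREFIX) and token.endswith(SUFFIX)):
        raise ValueError(f"not a unit token: {token!r}")
    return int(token[len(PREFIX):-len(SUFFIX)])


def units_to_text(units: List[int]) -> str:
    """Serialize a unit sequence into one string the LLM can consume."""
    return "".join(unit_to_token(u) for u in units)


def text_to_units(text: str) -> List[int]:
    """Invert units_to_text by splitting on the closing delimiter."""
    return [token_to_unit(piece + SUFFIX) for piece in text.split(SUFFIX) if piece]


def all_unit_tokens() -> List[str]:
    """Every unit token, e.g. to register as new tokens in an LLM tokenizer."""
    return [unit_to_token(u) for u in range(VOCAB_SIZE)]
```

In Hugging Face transformers, a common way to make such strings atomic is to call `tokenizer.add_tokens(all_unit_tokens())` and then `model.resize_token_embeddings(len(tokenizer))`. Whether USDM extends Mistral's vocabulary exactly this way is an assumption.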

We provide the following resources:

- Interleaved sequence preprocessing method for pre-training

- Preprocessing method for spoken dialog fine-tuning

- Instructions and codes for pre-training and supervised fine-tuning

- Instructions and codes for token-Voicebox training
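Interleaved pre-training data mixes text and speech within a single sequence. The sketch below switches modality at word boundaries and rests on two assumptions. First, word-level timestamps come from a forced aligner; the acknowledgments list Montreal-Forced-Aligner for alignment search. Second, the unit rate is 50 Hz. The `[TEXT]`/`[SPEECH]` tags, the span-length scheme, and the function names are hypothetical; the released preprocessing code defines the actual format.

```python
import random
from typing import List, Sequence, Tuple

# Assumption: one speech unit per 20 ms frame (50 Hz).
FRAME_RATE_HZ = 50

# (word, start_sec, end_sec), e.g. from a forced aligner.
Word = Tuple[str, float, float]


def units_for_span(units: Sequence[int], start_sec: float, end_sec: float,
                   frame_rate: int = FRAME_RATE_HZ) -> List[int]:
    """Slice the frame-level unit sequence covering [start_sec, end_sec)."""
    lo = int(round(start_sec * frame_rate))
    hi = int(round(end_sec * frame_rate))
    return list(units[lo:hi])


def interleave(words: Sequence[Word], units: Sequence[int], span_lengths: Sequence[int],
               start_with_text: bool = True, frame_rate: int = FRAME_RATE_HZ) -> List[str]:
    """Alternate text and speech spans at word boundaries.

    span_lengths[k] is the number of words in the k-th span; words left over
    once span_lengths is exhausted are dropped.
    """
    pieces: List[str] = []
    i, use_text = 0, start_with_text
    for n in span_lengths:
        if n < 1:
            raise ValueError("span lengths must be positive")
        if i >= len(words):
            break
        span = words[i:i + n]
        if use_text:
            pieces.append("[TEXT] " + " ".join(w for w, _, _ in span))
        else:
            seg = units_for_span(units, span[0][1], span[-1][2], frame_rate)
            pieces.append("[SPEECH] " + "".join(f"<|unit_{u}|>" for u in seg))
        i += n
        use_text = not use_text
    return pieces


def random_span_lengths(num_words: int, max_span: int, seed: int) -> List[int]:
    """Draw span lengths in [1, max_span] until they cover num_words words."""
    rng = random.Random(seed)
    lengths: List[int] = []
    while sum(lengths) < num_words:
        lengths.append(rng.randint(1, max_span))
    return lengths
```

Because each switch happens at a word boundary, a text span and the following speech span never split a word between modalities.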

For additional information, please refer to our paper.

We provide pre-trained models through Hugging Face Hub. The speech tokenizer and vocoder are automatically downloaded via SeamlessM4T and Hugging Face Hub.

| Repo Name | Usage |
|---|---|
| naver-ai/xlsr-token-Voicebox | Token-Voicebox: Converts speech tokens to mel-spectrograms, supporting personalization via reference mel-spectrograms. |
| naver-ai/USTM | Unified Speech-Text Model (USTM): Further-trained Mistral-7B-v0.1 on speech-text data for customizable fine-tuning on new tasks. |
| naver-ai/USDM-DailyTalk | Unified Spoken Dialog Model (USDM-DailyTalk): Fine-tuned USTM on DailyTalk with a single-turn spoken dialog setup. |

We provide a Streamlit demo, as well as inference scripts based on the transformers and vLLM libraries. Follow these steps to set up the environment (tested on CUDA V12.4.131, Python 3.10.15, Conda 24.5.0):

```bash
# Step 1: Create and activate a new conda environment
conda create -n usdm python=3.10.15
conda activate usdm

# Step 2: Install common dependencies
conda install -c conda-forge libsndfile=1.0.31
pip install torch==2.2.1 torchvision==0.17.1 torchaudio==2.2.1 --index-url https://download.pytorch.org/whl/cu121
pip install fairseq2 --extra-index-url https://fair.pkg.atmeta.com/fairseq2/whl/pt2.2.1/cu121
pip install .
pip install flash-attn==2.6.3 --no-build-isolation
```

Our default generation strategy is set to greedy search. Feel free to experiment with different sampling strategies by modifying sampling parameters (`top_k`, `top_p`, `temperature`, `num_beams`, ...) to explore various output samples!
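Greedy search always takes the most likely next token, so a given input always produces the same response. Sampling parameters trade that determinism for variety. The toy sketch below applies temperature, top-k, and top-p filtering in the common order on a small dictionary of logits. It is a simplified illustration, not the transformers implementation, and it does not cover `num_beams` (beam search, which tracks several candidate sequences at once). In transformers' `generate()`, these parameters have the same names, and sampling additionally requires `do_sample=True`. How the USDM inference scripts expose these parameters is not described here.

```python
import math
import random
from typing import Dict, List, Optional, Tuple


def greedy(logits: Dict[str, float]) -> str:
    """Greedy search picks the highest-scoring token at every step."""
    return max(logits, key=logits.get)


def sample(logits: Dict[str, float], temperature: float = 1.0, top_k: int = 0,
           top_p: float = 1.0, rng: Optional[random.Random] = None) -> str:
    """Temperature, then top-k, then top-p (nucleus) filtering, then a random draw."""
    if temperature <= 0:
        raise ValueError("temperature must be positive; use greedy() instead")
    rng = rng or random.Random()
    scaled = {t: v / temperature for t, v in logits.items()}
    m = max(scaled.values())  # subtract the max for numerical stability
    exps = {t: math.exp(v - m) for t, v in scaled.items()}
    z = sum(exps.values())
    probs: List[Tuple[str, float]] = sorted(
        ((t, e / z) for t, e in exps.items()), key=lambda tp: tp[1], reverse=True)
    if top_k > 0:
        probs = probs[:top_k]  # keep the k most likely tokens
        total = sum(p for _, p in probs)
        probs = [(t, p / total) for t, p in probs]  # renormalize before top-p
    if top_p < 1.0:
        kept, cum = [], 0.0
        for t, p in probs:  # smallest prefix whose probability mass reaches top_p
            kept.append((t, p))
            cum += p
            if cum >= top_p:
                break
        probs = kept
    r = rng.random() * sum(p for _, p in probs)
    acc = 0.0
    for t, p in probs:
        acc += p
        if r < acc:
            return t
    return probs[-1][0]  # guard against floating-point round-off
```

Lower temperatures sharpen the distribution toward the greedy choice. Smaller `top_k` or `top_p` values remove unlikely tokens before the draw.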

Note

- The released checkpoint of USDM is trained on DailyTalk using a single-turn template. For testing, we recommend using samples from the speakers in DailyTalk as inputs. If you wish to work with different speakers for input and output, we suggest fine-tuning our provided pre-trained speech-text model on your specific dataset.

- When you run the code, the checkpoints (excluding the unit extractor) are automatically downloaded to `YOUR_MODEL_CACHE_DIR`. You can change `YOUR_MODEL_CACHE_DIR` to save checkpoints to your preferred location.

To start Streamlit, use the following command:

```bash
MODEL_CACHE_DIR=YOUR_MODEL_CACHE_DIR CUDA_VISIBLE_DEVICES=gpu_id streamlit run src/streamlit_demo.py --server.port YOUR_PORT
```

Access the demo at `localhost:YOUR_PORT`.

Note: USDM is trained on DailyTalk and may perform worse on voices outside this dataset.

```bash
# transformers-based (model.generate())
CUDA_VISIBLE_DEVICES=gpu_id python src/inference.py --input_path INPUT_WAV_PATH --output_path PATH_TO_SAVE_SPOKEN_RESPONSE --model_cache_dir YOUR_MODEL_CACHE_DIR

# vLLM-based
CUDA_VISIBLE_DEVICES=gpu_id python src/inference_vllm.py --input_path INPUT_WAV_PATH --output_path PATH_TO_SAVE_SPOKEN_RESPONSE --model_cache_dir YOUR_MODEL_CACHE_DIR
```

To generate a response that adapts to a reference speaker's voice, add the `--reference_path` argument:

```bash
# transformers-based (model.generate())
CUDA_VISIBLE_DEVICES=gpu_id python src/inference.py --input_path INPUT_WAV_PATH --reference_path REFERENCE_WAV_PATH --output_path PATH_TO_SAVE_SPOKEN_RESPONSE --model_cache_dir YOUR_MODEL_CACHE_DIR

# vLLM-based
CUDA_VISIBLE_DEVICES=gpu_id python src/inference_vllm.py --input_path INPUT_WAV_PATH --reference_path REFERENCE_WAV_PATH --output_path PATH_TO_SAVE_SPOKEN_RESPONSE --model_cache_dir YOUR_MODEL_CACHE_DIR
```

Note: To generate spoken responses in a desired speaker's voice, our model requires (1) reference audio from the target speaker and (2) speech tokens for the target speaker generated by USDM. The current single-turn USDM model, fine-tuned on DailyTalk, is optimized for two specific speakers and generates speech tokens for these speakers, which may limit its adaptability to other voices.

Potential Solution: To address this, USDM must be trained to robustly generate speech tokens for unseen speakers. This can be achieved by training USDM on a multi-turn, multi-speaker dataset (e.g., Fisher), enabling it to generate speech tokens for unseen speakers by learning to produce consistent speech tokens for the same speaker across multiple turns. For more detailed explanations and examples, please refer to the multi-turn Fisher examples on our project page and Section A.1 of our paper.
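To process several input files, you can use a small driver that calls the documented scripts once per file. This is a hypothetical helper: `build_command`, `plan_batch`, `run_batch`, and the `_response.wav` output naming are ours, not part of the repository. It uses only the flags shown above and should be run from the repository root so that the `src/` paths resolve.

```python
import os
import subprocess
import sys
from pathlib import Path
from typing import List, Optional


def build_command(input_wav: str, output_wav: str, model_cache_dir: str,
                  reference_wav: Optional[str] = None, use_vllm: bool = False,
                  python: str = sys.executable) -> List[str]:
    """Assemble one inference call using only the documented flags."""
    script = "src/inference_vllm.py" if use_vllm else "src/inference.py"
    cmd = [python, script,
           "--input_path", str(input_wav),
           "--output_path", str(output_wav),
           "--model_cache_dir", str(model_cache_dir)]
    if reference_wav is not None:
        cmd += ["--reference_path", str(reference_wav)]
    return cmd


def plan_batch(input_dir: str, output_dir: str, model_cache_dir: str,
               reference_wav: Optional[str] = None,
               use_vllm: bool = False) -> List[List[str]]:
    """One command per *.wav in input_dir; outputs are named <stem>_response.wav."""
    out = Path(output_dir)
    return [build_command(str(wav), str(out / f"{wav.stem}_response.wav"),
                          model_cache_dir, reference_wav, use_vllm)
            for wav in sorted(Path(input_dir).glob("*.wav"))]


def run_batch(commands: List[List[str]], gpu_id: str = "0") -> None:
    """Run the commands sequentially on one GPU, stopping at the first failure."""
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=gpu_id)
    for cmd in commands:
        subprocess.run(cmd, check=True, env=env)
```

Usage: `run_batch(plan_batch("inputs", "outputs", "YOUR_MODEL_CACHE_DIR"))`. Create `outputs/` beforehand if the script does not create it. This design is simple, but each call reloads the checkpoints. For large batches, looping inside the inference script would avoid that cost.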

```bibtex
@inproceedings{kim2024paralinguisticsaware,
  title={Paralinguistics-Aware Speech-Empowered Large Language Models for Natural Conversation},
  author={Heeseung Kim and Soonshin Seo and Kyeongseok Jeong and Ohsung Kwon and Soyoon Kim and Jungwhan Kim and Jaehong Lee and Eunwoo Song and Myungwoo Oh and Jung-Woo Ha and Sungroh Yoon and Kang Min Yoo},
  booktitle={The Thirty-eighth Annual Conference on Neural Information Processing Systems},
  year={2024},
  url={https://openreview.net/forum?id=NjewXJUDYq}
}
```

```
USDM
Copyright (c) 2024-present NAVER Cloud Corp.

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
```

This project builds on the following resources:

- Speech Token Extractor: SeamlessM4T
- Example dataset for code demonstration: DailyTalk, LibriTTS
- Pre-training
  - Pre-processing / Alignment Search: Montreal-Forced-Aligner
  - Pre-processing / Data Packing: Multipack Sampler
  - Custom Model / Preventing Cross-Contamination: Mistral-7B-v0.1 in Transformers, Flash-Attention
- Token Decoder
  - Token-Voicebox Code Structure, Model Architecture: Glow-TTS, Grad-TTS, Transformers, Matcha-TTS, xformers
  - Mel-spectrogram to Speech Decoder: BigVGAN
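The pre-training entries above mention data packing (Multipack Sampler) and preventing cross-contamination with Flash-Attention. The sketch below shows the underlying idea using plain first-fit-decreasing bin packing; Multipack Sampler's own algorithm may differ. Several samples are packed into one fixed-length sequence, and the start of each sample is recorded so that attention stays within each sample. Flash-Attention's variable-length kernel `flash_attn_varlen_func` accepts such cumulative boundaries through `cu_seqlens_q`/`cu_seqlens_k`. Whether USDM wires them up exactly this way is an assumption, and the helper names are hypothetical.

```python
from typing import List


def pack_first_fit_decreasing(lengths: List[int], max_len: int) -> List[List[int]]:
    """Group sample indices into bins whose total token count is at most max_len."""
    order = sorted(range(len(lengths)), key=lambda i: lengths[i], reverse=True)
    bins: List[List[int]] = []
    loads: List[int] = []
    for i in order:
        n = lengths[i]
        if n > max_len:
            raise ValueError(f"sample {i} has {n} tokens, more than max_len={max_len}")
        for b in range(len(bins)):
            if loads[b] + n <= max_len:  # first bin with enough room
                bins[b].append(i)
                loads[b] += n
                break
        else:  # no existing bin fits: open a new one
            bins.append([i])
            loads.append(n)
    return bins


def cu_seqlens(lengths: List[int], bin_: List[int]) -> List[int]:
    """Cumulative sample boundaries inside one packed bin, e.g. [0, 7, 10]."""
    out = [0]
    for i in bin_:
        out.append(out[-1] + lengths[i])
    return out


def position_ids(lengths: List[int], bin_: List[int]) -> List[int]:
    """Positions restart at 0 for every sample in the bin."""
    return [p for i in bin_ for p in range(lengths[i])]
```

Resetting the position IDs at each boundary keeps each packed sample's positional encoding identical to what it would see if trained unpacked.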