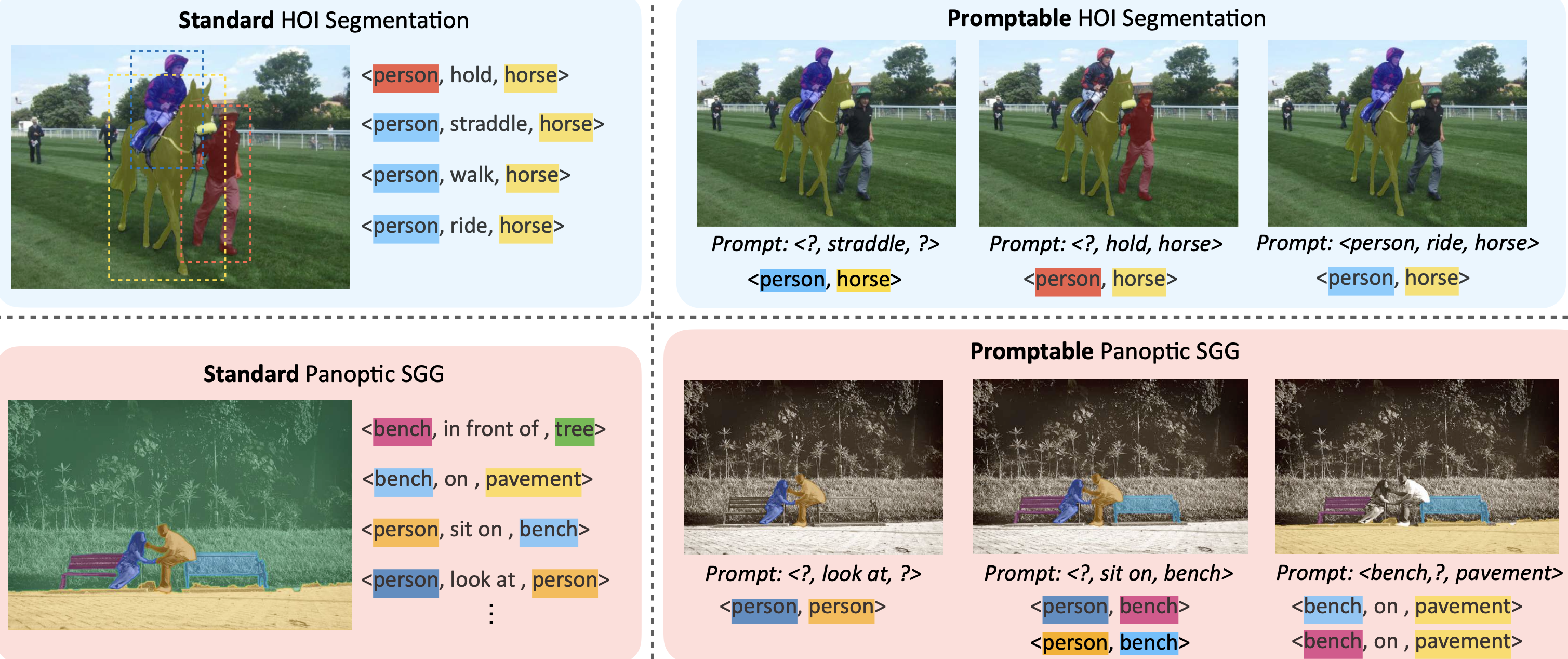

FleVRS: Towards Flexible Visual Relationship Segmentation

Fangrui Zhu, Jianwei Yang, Huaizu Jiang

@inproceedings{zhu2024towards,
  author = {Zhu, Fangrui and Yang, Jianwei and Jiang, Huaizu},
  title = {Towards Flexible Visual Relationship Segmentation},
  booktitle = {NeurIPS},
  year = {2024}
}

- Training and evaluation code.

- Preprocessed annotations on Huggingface.

- Model weights on Huggingface.

- Demo with SAM2.

Install the dependencies.

pip install -r requirements.txt

The HICO-DET dataset can be downloaded here. After downloading, unpack the tarball (hico_20160224_det.tar.gz) into the data directory.
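The unpacking step can also be scripted. The following is a minimal sketch using Python's standard `tarfile` module; the function name `unpack_hico` is hypothetical (not part of this repository), and the default paths simply reuse the names mentioned above.

```python
import tarfile
from pathlib import Path


def unpack_hico(tarball="hico_20160224_det.tar.gz", data_dir="data"):
    """Extract the tarball into data_dir and return the names of its top-level entries."""
    data_dir = Path(data_dir)
    data_dir.mkdir(parents=True, exist_ok=True)
    with tarfile.open(tarball, "r:gz") as tar:
        # Newer Python versions support extraction filters that reject unsafe member paths.
        kwargs = {"filter": "data"} if hasattr(tarfile, "data_filter") else {}
        tar.extractall(path=data_dir, **kwargs)
    return sorted(p.name for p in data_dir.iterdir())


# Example call (assumes the tarball sits in the current directory):
# unpack_hico("hico_20160224_det.tar.gz", "data")
```

Returning the top-level entries makes it easy to confirm that the extracted folder name matches the one expected in the layout.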

Please download the annotation files here and the mask version of the annotations here, and place them as follows.

    data
     └─ hico_20160224_det
         |─ images
         |   |─ train2015
         |   |─ test2015
         |─ annotations
         |   |─ trainval_hico.json
         |   |─ test_hico.json
         |   |─ test_hico_w_sam_mask_merged.pkl
         |   |─ corre_hico_filtered_nointer.npy
         |   |─ exclude_test_filename.pkl
         |   |─ trainval_hico_samL_mask_filt
         |   |   |─ HICO_train2015_00018979.pkl
         |   |   :
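After placing the files, it can help to confirm that an annotation file loads at all. The sketch below is a generic inspection helper, not part of this repository: `describe_annotation` is a hypothetical name, and it makes no assumption about the internal structure of the `.json` or `.pkl` files, reporting only the top-level type and length.

```python
import json
import pickle
from pathlib import Path


def describe_annotation(path):
    """Load a .json or .pkl annotation file and return a short summary string."""
    path = Path(path)
    if path.suffix == ".json":
        with path.open("r", encoding="utf-8") as f:
            obj = json.load(f)
    elif path.suffix == ".pkl":
        # Only unpickle files from a source you trust; pickle can execute code on load.
        with path.open("rb") as f:
            obj = pickle.load(f)
    else:
        raise ValueError(f"unsupported file type: {path.suffix}")
    size = len(obj) if hasattr(obj, "__len__") else "n/a"
    return f"{path.name}: type={type(obj).__name__}, len={size}"


# Example call (path follows the layout above):
# print(describe_annotation("data/hico_20160224_det/annotations/trainval_hico.json"))
```

The same helper works for the V-COCO annotation files, since it depends only on the file extension.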

For V-COCO, the train2014 and val2014 image folders come from the COCO 2014 dataset.

Please download corre_vcoco.npy, trainval_vcoco.json, and test_vcoco.json here, and put them under the annotations folder.

    data
     └─ VCOCO
         |─ images
         |   |─ train2014
         |   └─ val2014
         |       |─ COCO_val2014_000000000042.jpg
         |       :
         |─ annotations
         |   |─ corre_vcoco.npy
         |   |─ trainval_vcoco.json
         |   |─ test_vcoco.json
         |   |─ test_vcoco_w_saml_mask_merged.pkl
         |   |─ trainval_vcoco_w_saml_mask
         |   |   |─ COCO_train2014_000000309744.pkl
         |   |   :

Download psg.json here.

The following files are needed for PSG.

    data
    ├── coco
    │   ├── panoptic_train2017
    │   ├── panoptic_val2017
    │   ├── train2017
    │   └── val2017
    └── psg
        └── psg.json
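Before training, the three dataset layouts described above can be checked with a short script. This is a sketch, not a tool shipped with the repository: `EXPECTED` and `missing_paths` are hypothetical names, and the nesting of folders (for example, that the mask folders sit under `annotations`) is an assumption based on the listings above.

```python
from pathlib import Path

# Paths relative to the data root, transcribed from the listings above.
# The folder nesting is an assumption about the intended layout.
EXPECTED = {
    "hico": [
        "hico_20160224_det/images/train2015",
        "hico_20160224_det/images/test2015",
        "hico_20160224_det/annotations/trainval_hico.json",
        "hico_20160224_det/annotations/test_hico.json",
        "hico_20160224_det/annotations/test_hico_w_sam_mask_merged.pkl",
        "hico_20160224_det/annotations/corre_hico_filtered_nointer.npy",
        "hico_20160224_det/annotations/exclude_test_filename.pkl",
        "hico_20160224_det/annotations/trainval_hico_samL_mask_filt",
    ],
    "vcoco": [
        "VCOCO/images/train2014",
        "VCOCO/images/val2014",
        "VCOCO/annotations/corre_vcoco.npy",
        "VCOCO/annotations/trainval_vcoco.json",
        "VCOCO/annotations/test_vcoco.json",
        "VCOCO/annotations/test_vcoco_w_saml_mask_merged.pkl",
        "VCOCO/annotations/trainval_vcoco_w_saml_mask",
    ],
    "psg": [
        "coco/panoptic_train2017",
        "coco/panoptic_val2017",
        "coco/train2017",
        "coco/val2017",
        "psg/psg.json",
    ],
}


def missing_paths(data_root="data", datasets=("hico", "vcoco", "psg")):
    """Return the expected relative paths that do not exist under data_root."""
    root = Path(data_root)
    return [rel for name in datasets for rel in EXPECTED[name] if not (root / rel).exists()]


# Example call: an empty list means every listed file and folder was found.
# print(missing_paths("data", datasets=("psg",)))
```

Passing a subset of dataset names is useful when only one benchmark has been downloaded.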

Download the pretrained weights xdec_weights_focall.pth here. Create a folder params and put the file under it.
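Placing the weights can be done by hand or with a few lines of Python. In this sketch, `place_weights` is a hypothetical helper name; the folder name `params` and the file name `xdec_weights_focall.pth` are taken from the instruction above, and the download location is whatever path the file was saved to.

```python
import shutil
from pathlib import Path


def place_weights(downloaded, params_dir="params", name="xdec_weights_focall.pth"):
    """Move a downloaded checkpoint into params_dir and return its new path."""
    src = Path(downloaded)
    if not src.is_file():
        raise FileNotFoundError(f"checkpoint not found: {src}")
    dest_dir = Path(params_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)  # create params/ if it does not exist
    dest = dest_dir / name
    shutil.move(str(src), str(dest))
    return dest


# Example call (the source path is wherever the file was downloaded):
# place_weights("xdec_weights_focall.pth")
```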

For training, please check the scripts under configs/ for details.

sh configs/standard/train_standard_hico+vcoco+psg_focall.sh

sh configs/standard/train_focall_standard_hico.sh

sh configs/standard/train_standard_psg.sh

...

For evaluation, please check the scripts under configs/ for details.

sh configs/standard/test_hico.sh

...
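Since only a few script names are listed here, one way to see everything available is to enumerate the shell scripts under `configs/`. The helper below is a sketch with a hypothetical name (`list_scripts`); it assumes only that scripts end in `.sh` and begin with `train` or `test`, as the examples above do.

```python
from pathlib import Path


def list_scripts(configs_dir="configs", prefix="train"):
    """Return sorted paths (relative to configs_dir) of .sh files whose names start with prefix."""
    root = Path(configs_dir)
    return sorted(p.relative_to(root).as_posix() for p in root.rglob(f"{prefix}*.sh"))


# Example calls, run from the repository root:
# print(list_scripts("configs", "train"))
# print(list_scripts("configs", "test"))
```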

Our code is based on X-Decoder.

We also thank the authors of the following works:

HiLo, CDN, and Mask2Former

This code is distributed under the MIT License.

Note that our code depends on other libraries, including CLIP, Transformers, FocalNet, and PyTorch, and uses datasets; each of these has its own license, which must also be followed.