
- Prompt Engineering Starter Kit

- Overview

- Getting started

- Customizing the template

- Third-party tools and data sources

Begin by deploying your LLM of choice (e.g. Llama 2 13B chat) to an endpoint for inference in SambaStudio, either through the GUI or the CLI, as described in the SambaStudio endpoint documentation.

Integrate your LLM deployed on SambaStudio with this AI starter kit in the following steps:

- Clone the repo.

git clone https://github.com/sambanova/ai-starter-kit.git

- Update the API information for the SambaNova LLM. These values are configurable variables in the environment variables file in the root repo directory:

sn-ai-starter-kit/export.env. For example, an endpoint with the URL "https://api-stage.sambanova.net/api/predict/nlp/12345678-9abc-def0-1234-56789abcdef0/456789ab-cdef-0123-4567-89abcdef0123" would be entered in the config file (with no spaces) as:

BASE_URL="https://api-stage.sambanova.net"

PROJECT_ID="12345678-9abc-def0-1234-56789abcdef0"

ENDPOINT_ID="456789ab-cdef-0123-4567-89abcdef0123"

API_KEY="89abcdef-0123-4567-89ab-cdef01234567"
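The mapping from endpoint URL to config values can be sketched in Python. This is a minimal illustration, not part of the kit: `split_endpoint_url` is a hypothetical helper, and it assumes the URL always ends in `/<project id>/<endpoint id>` as in the example above. The API key is not part of the URL and still has to be copied from SambaStudio.

```python
from urllib.parse import urlsplit


def split_endpoint_url(url: str) -> dict:
    """Split an endpoint URL into the values expected in export.env.

    Hypothetical helper: assumes the path ends with
    /<project id>/<endpoint id>, as in the README example.
    """
    parts = urlsplit(url)
    # Drop empty segments produced by leading or trailing slashes.
    segments = [s for s in parts.path.split("/") if s]
    if not parts.scheme or not parts.netloc or len(segments) < 2:
        raise ValueError(f"unexpected endpoint URL: {url!r}")
    return {
        "BASE_URL": f"{parts.scheme}://{parts.netloc}",
        "PROJECT_ID": segments[-2],
        "ENDPOINT_ID": segments[-1],
    }


if __name__ == "__main__":
    example = (
        "https://api-stage.sambanova.net/api/predict/nlp/"
        "12345678-9abc-def0-1234-56789abcdef0/"
        "456789ab-cdef-0123-4567-89abcdef0123"
    )
    # Print the lines in the same NAME="value" form used by the env file.
    for name, value in split_endpoint_url(example).items():
        print(f'{name}="{value}"')
```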

- Install requirements. It is recommended to use a virtualenv or conda environment for installation, and to update pip.

cd ai-starter-kit/prompt-engineering

python3 -m venv prompt_engineering_env

source prompt_engineering_env/bin/activate

pip install -r requirements.txt

To run the demo, run the following command:

streamlit run streamlit/app.py

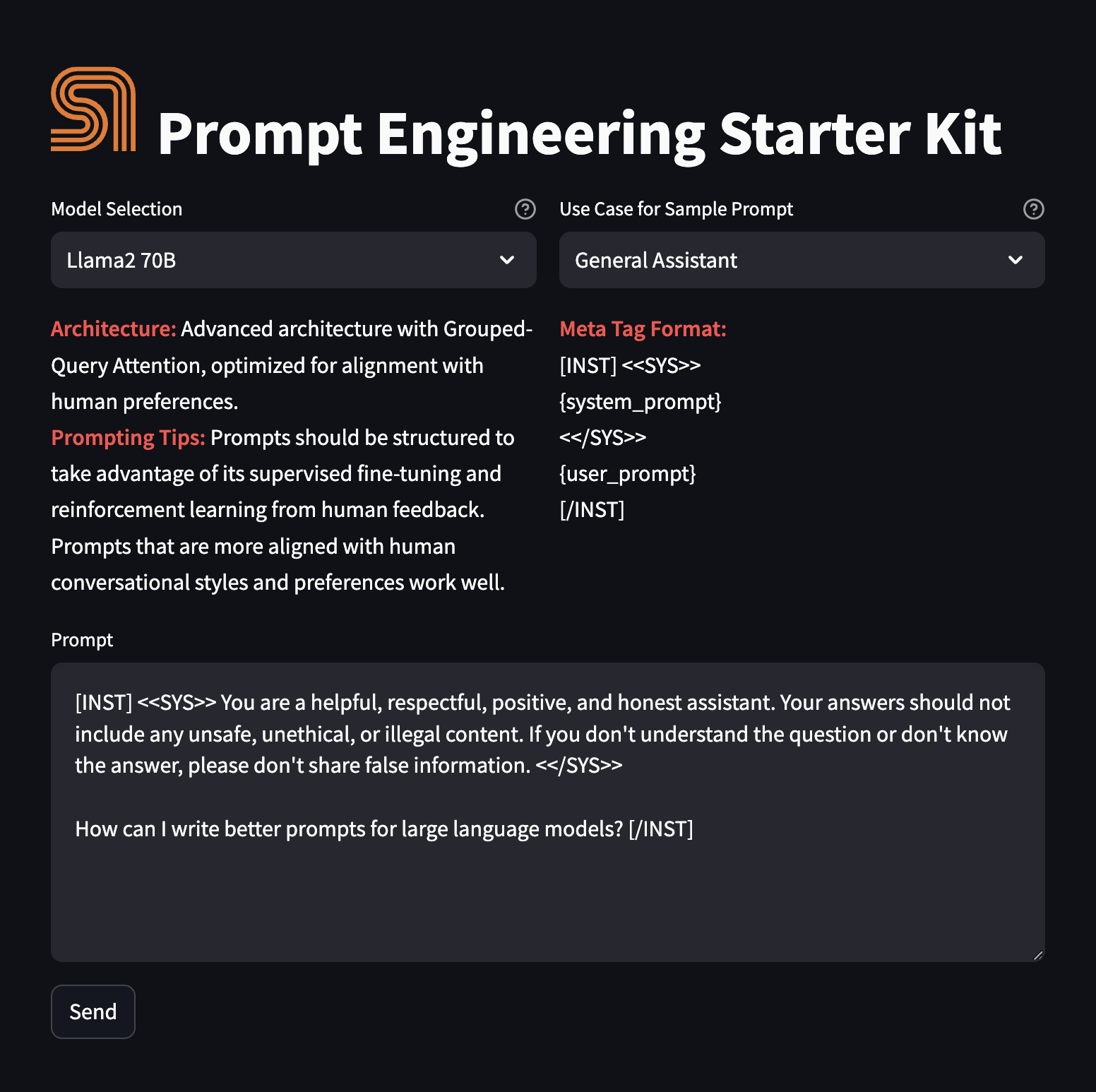

After deploying the starter kit you should see the following application user interface

1- Choose the LLM to use from the options available under Model Selection (currently, only Llama2 70B is available). Upon selection, you'll see a description of the architecture, along with prompting tips and the Meta tag format required to optimize the model's performance.

2- Select a template under Use Case for Sample Prompt. The available templates are:

- General Assistant: Provides comprehensive assistance on a wide range of topics, including answering questions, offering explanations, and giving advice. It's ideal for general knowledge, trivia, educational support, and everyday inquiries.

- Document Search: Specializes in locating and summarizing relevant information from large documents or databases. Useful for research, data analysis, and extracting key points from extensive text sources.

- Product Selection: Assists in choosing products by comparing features, prices, and reviews. Ideal for shopping decisions, product comparisons, and understanding the pros and cons of different items.

- Code Generation: Helps in writing, debugging, and explaining code. Useful for software development, learning programming languages, and automating simple tasks through scripting.

- Summarization: Outputs a summary based on a given context. Essential for condensing large volumes of text.

3- Review and edit the input to the model in the Prompt text input field.

4- Click the Send button to submit the prompt. The model will retrieve and display the response.
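To make the "Meta tag format" mentioned in step 1 concrete, here is a minimal sketch of the publicly documented Llama 2 chat tags (`[INST]`, `<<SYS>>`). `build_llama2_prompt` is a hypothetical helper, not a function from the kit, and the templates shipped with the app may differ in wording. Whether the leading `<s>` token should appear in the prompt text is also an assumption, since some serving stacks add it themselves.

```python
def build_llama2_prompt(user_message: str, system_prompt: str = "") -> str:
    """Wrap a message in Llama 2 chat tags.

    Hypothetical helper for illustration. The leading "<s>" is an
    assumption: some endpoints insert the BOS token on their own.
    """
    if system_prompt:
        # The system prompt goes inside <<SYS>> tags within the first [INST] turn.
        return (
            f"<s>[INST] <<SYS>>\n{system_prompt}\n<</SYS>>\n\n"
            f"{user_message} [/INST]"
        )
    return f"<s>[INST] {user_message} [/INST]"


if __name__ == "__main__":
    print(
        build_llama2_prompt(
            "Summarize the following text in two sentences: ...",
            system_prompt="You are a helpful assistant.",
        )
    )
```

Text pasted into the Prompt field would then follow the same shape, with the model's reply expected after the closing `[/INST]` tag.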

You can add more models to the kit:

- First, [set your model](#integrate-your-model) in SambaStudio.

- Include your model description in the model section of the config.json file.

- Populate key variables from your env file in streamlit/app.py.

- Define the method for calling the model; see the example call_sambanova_llama2_70b_api in streamlit/app.py.

- Include the method usage in the st.button(send) completion section of streamlit/app.py.
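One way to keep the last two steps manageable as models accumulate is a small lookup from model name to call method. The sketch below is an assumption about how this could be organized, not how streamlit/app.py is written: `MODEL_CALLERS`, `register_model`, `call_my_new_model`, and `run_selected_model` are all hypothetical names, and the call method is a stub that does not contact any endpoint.

```python
from typing import Callable, Dict

# Hypothetical registry: model name shown in the UI -> function that calls it.
MODEL_CALLERS: Dict[str, Callable[[str], str]] = {}


def register_model(name: str):
    """Decorator that records a call method under a model name."""
    def decorator(func: Callable[[str], str]) -> Callable[[str], str]:
        MODEL_CALLERS[name] = func
        return func
    return decorator


@register_model("My new model")
def call_my_new_model(prompt: str) -> str:
    # Stub: a real method would send the prompt to the deployed endpoint
    # using the variables populated from the env file.
    return f"(stub response to: {prompt})"


def run_selected_model(model_name: str, prompt: str) -> str:
    """Dispatch the prompt to the call method of the selected model."""
    try:
        caller = MODEL_CALLERS[model_name]
    except KeyError:
        raise ValueError(f"no call method registered for {model_name!r}") from None
    return caller(prompt)


if __name__ == "__main__":
    print(run_selected_model("My new model", "Hello"))
```

With this layout, the send-button handler would only need a single call such as `run_selected_model(selected_model, prompt)`, and adding a model means adding one decorated function.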

You can edit one of the existing templates using the create_prompt_yamls() method in streamlit/app.py. Executing the method then modifies the prompt yaml file in the prompts folder.

You can add more templates to the app by following the instructions in Edit a template and then including the template use case in the use_cases list of the config.yaml file.

For further examples, we encourage you to visit any of the following resources:

All the packages/tools are listed in the requirements.txt file in the project directory. Some of the main packages are listed below:

- streamlit (version 1.25.0)

- langchain (version 1.1.4)

- python-dotenv (version 1.0.0)

- Requests (version 2.31.0)

- sseclient (version 0.0.27)

- streamlit-extras (version 0.3.6)

- pydantic (version 1.10.14)

- pydantic_core (version 2.10.1)
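If the app fails to start after installation, comparing installed versions against the pinned ones can help. The following is a minimal sketch using only the standard library; `check_pins` is a hypothetical helper, and the pins in the usage example are copied from the list above rather than read from requirements.txt.

```python
from importlib.metadata import PackageNotFoundError, version


def check_pins(pins: dict) -> dict:
    """Compare installed package versions against expected ones.

    Returns a dict mapping each package name to "ok", "missing",
    or a short description of the mismatch.
    """
    report = {}
    for package, wanted in pins.items():
        try:
            installed = version(package)
        except PackageNotFoundError:
            report[package] = "missing"
            continue
        if installed == wanted:
            report[package] = "ok"
        else:
            report[package] = f"installed {installed}, expected {wanted}"
    return report


if __name__ == "__main__":
    # A few of the pins listed above.
    pins = {
        "streamlit": "1.25.0",
        "python-dotenv": "1.0.0",
        "requests": "2.31.0",
    }
    for package, status in check_pins(pins).items():
        print(f"{package}: {status}")
```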