This is a Helm chart for deploying Confluent Open Source on Kubernetes, which includes:

- Confluent Kafka

- Confluent Zookeeper

- Confluent Schema Registry

- Confluent Kafka REST Proxy

- Confluent Kafka Connect

Software versions:

- Kubernetes 1.9.2+

- Helm 2.8.2+

- Confluent Platform Open Source Docker Images 4.1.1
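A quick way to compare an installed version against the versions listed above, treating them as minimums. This is a minimal sketch: `version_ge` is a hypothetical helper name, the example version values are placeholders, and it assumes a `sort` that supports `-V` (GNU coreutils).

```shell
#!/usr/bin/env bash
# Sketch: compare version strings against the versions listed above.
# version_ge is a hypothetical helper, not part of Helm or kubectl.

# Returns 0 when version $1 is greater than or equal to version $2.
version_ge() {
  # sort -V orders version strings; if $2 sorts first (or ties), $1 >= $2.
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Placeholder values; substitute the versions reported by
# `kubectl version` and `helm version` on your machine.
K8S_VERSION="1.10.0"
HELM_VERSION="2.9.1"

if version_ge "$K8S_VERSION" "1.9.2"; then
  echo "Kubernetes $K8S_VERSION: ok"
else
  echo "Kubernetes $K8S_VERSION: older than 1.9.2"
fi

if version_ge "$HELM_VERSION" "2.8.2"; then
  echo "Helm $HELM_VERSION: ok"
else
  echo "Helm $HELM_VERSION: older than 2.8.2"
fi
```

A plain string comparison would rank 1.10.0 below 1.9.2, which is why the sketch relies on version-aware sorting.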

Options for creating a Kubernetes cluster:

- Minikube, https://github.com/kubernetes/minikube.

- Google Kubernetes Engine, https://cloud.google.com/kubernetes-engine/docs/quickstart.

Follow Helm's quickstart to install and deploy Helm to the k8s cluster.

Run helm ls to verify the local installation.

NOTE: For Helm versions prior to 2.9.1, you may see "connect: connection refused", and will need to fix up the deployment before proceeding.

# Fix up the Helm deployment, if needed:

kubectl delete --namespace kube-system svc tiller-deploy

kubectl delete --namespace kube-system deploy tiller-deploy

kubectl create serviceaccount --namespace kube-system tiller

kubectl create clusterrolebinding tiller-cluster-rule --clusterrole=cluster-admin --serviceaccount=kube-system:tiller

kubectl patch deploy --namespace kube-system tiller-deploy -p '{"spec":{"template":{"spec":{"serviceAccount":"tiller"}}}}'

helm init --service-account tiller --upgrade

git clone https://github.com/confluentinc/cp-helm-charts.git

- The steps below will install a 3-node cp-zookeeper ensemble, a 3-node cp-kafka cluster, 1 schema registry, 1 rest proxy, and 1 kafka connect in your k8s env.

helm install cp-helm-charts

- To install with a specific name, you can do:

helm install --name my-confluent-oss cp-helm-charts

- To install without rest proxy, schema registry and kafka connect:

helm install --set cp-schema-registry.enabled=false,cp-kafka-rest.enabled=false,cp-kafka-connect.enabled=false cp-helm-charts/

helm test <release name> will run the embedded test pod in each sub-chart to verify installations.
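The comma-separated `--set` value used to disable sub-charts can be built from a list of chart names, which is less error-prone than typing it by hand. This is only a sketch: `disable_flags` is a hypothetical helper, and the script prints the resulting command for review instead of running it.

```shell
#!/usr/bin/env bash
# Sketch: build the value for `helm install --set` that disables sub-charts.
# disable_flags is a hypothetical helper name used only for illustration.

disable_flags() {
  local out="" chart
  for chart in "$@"; do
    # Join entries with commas, the separator --set uses between pairs.
    out="${out:+$out,}${chart}.enabled=false"
  done
  printf '%s\n' "$out"
}

# Print (rather than run) the command so it can be checked first.
echo "helm install --set $(disable_flags cp-schema-registry cp-kafka-rest cp-kafka-connect) cp-helm-charts"
```

The sub-chart names passed to the helper are the ones used in the install command above.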

- Deploy a zookeeper client pod

kubectl apply -f cp-helm-charts/examples/zookeeper-client.yaml

- Log into the Pod

kubectl exec -it zookeeper-client -- /bin/bash

- Use zookeeper-shell to connect in the zookeeper-client Pod:

zookeeper-shell <zookeeper service>:<port>

- Explore with zookeeper commands, for example:

# Gives the list of active brokers

ls /brokers/ids

# Gives the list of topics

ls /brokers/topics

# Gives more detailed information of the broker id '0'

get /brokers/ids/0

- Deploy a kafka client pod

kubectl apply -f cp-helm-charts/examples/kafka-client.yaml

- Log into the Pod

kubectl exec -it kafka-client -- /bin/bash

- Explore with kafka commands:

## Setup

export RELEASE_NAME=<release name>

export ZOOKEEPERS=${RELEASE_NAME}-cp-zookeeper:2181

export KAFKAS=${RELEASE_NAME}-cp-kafka-headless:9092

## Create Topic

kafka-topics --zookeeper $ZOOKEEPERS --create --topic test-rep-one --partitions 6 --replication-factor 1

## Producer

kafka-run-class org.apache.kafka.tools.ProducerPerformance --print-metrics --topic test-rep-one --num-records 6000000 --throughput 100000 --record-size 100 --producer-props bootstrap.servers=$KAFKAS buffer.memory=67108864 batch.size=8196

## Consumer

kafka-consumer-perf-test --broker-list $KAFKAS --messages 6000000 --threads 1 --topic test-rep-one --print-metrics
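To get a feel for what the producer test parameters imply, the arithmetic can be worked out up front. The sketch below uses the values from the producer command; the function names are hypothetical. Because `--throughput` caps the send rate, the computed duration is a lower bound, not a prediction.

```shell
#!/usr/bin/env bash
# Sketch: rough sizing for the ProducerPerformance run above.
# Function names are hypothetical; values mirror the producer command.

# Total payload in bytes: records * record size.
perf_total_bytes() { echo $(( $1 * $2 )); }

# Minimum run time in seconds when throttled: records / records-per-second.
perf_min_seconds() { echo $(( $1 / $2 )); }

# Payload rate in bytes per second at the throttle: rate * record size.
perf_bytes_per_sec() { echo $(( $1 * $2 )); }

NUM_RECORDS=6000000
RECORD_SIZE=100
THROUGHPUT=100000

echo "total payload: $(perf_total_bytes "$NUM_RECORDS" "$RECORD_SIZE") bytes"
echo "minimum duration: $(perf_min_seconds "$NUM_RECORDS" "$THROUGHPUT") seconds"
echo "payload rate at cap: $(perf_bytes_per_sec "$THROUGHPUT" "$RECORD_SIZE") bytes/sec"
```

With these values the run sends 600000000 bytes of payload over at least 60 seconds, at no more than 10000000 bytes per second.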

helm ls # to find out the release name

helm delete <release name>

# delete all pvc created by this release

kubectl delete pvc --selector=release=<release name>
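Since both teardown commands take the release name, a small wrapper can refuse to proceed when the name is missing. This is a hedged sketch: `teardown_cmds` is a hypothetical helper that only prints the two commands shown above so they can be reviewed before being run.

```shell
#!/usr/bin/env bash
# Sketch: print the teardown commands for a release, refusing an empty name.
# teardown_cmds is a hypothetical helper; it does not touch the cluster.

teardown_cmds() {
  local release="$1"
  if [ -z "$release" ]; then
    echo "error: release name is required" >&2
    return 1
  fi
  printf 'helm delete %s\n' "$release"
  printf 'kubectl delete pvc --selector=release=%s\n' "$release"
}

# Example release name; use the one reported by `helm ls`.
teardown_cmds my-confluent-oss
```

Printing first makes it easy to confirm that the label selector targets the intended release before any volumes are deleted.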

NOTE: All scale in/out operations should be done offline, with no producer or consumer connections.

Install cp-helm-charts with the default 3-node zookeeper ensemble

helm install cp-helm-charts

Scale zookeeper nodes out to 5: change servers under cp-zookeeper to 5 in values.yaml

helm upgrade <release name> cp-helm-charts

Scale zookeeper nodes in to 3: change servers under cp-zookeeper to 3 in values.yaml

helm upgrade <release name> cp-helm-charts
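As an alternative to editing values.yaml, Helm's `--set` flag can override a single value at upgrade time. The sketch below assumes the key is `cp-zookeeper.servers`, inferred from "servers under cp-zookeeper"; check it against the chart's values.yaml. `scale_cmd` is a hypothetical helper that prints the command for review and does not run it.

```shell
#!/usr/bin/env bash
# Sketch: print a `helm upgrade` command that overrides one value with --set.
# scale_cmd is a hypothetical helper; the value key is an assumption taken
# from the values.yaml description above.

scale_cmd() {
  local release="$1" key="$2" count="$3"
  case "$count" in
    # Reject empty or non-numeric counts.
    ''|*[!0-9]*)
      echo "error: count must be a non-negative integer" >&2
      return 1
      ;;
  esac
  printf 'helm upgrade %s cp-helm-charts --set %s=%s\n' "$release" "$key" "$count"
}

# Example release name; use the one reported by `helm ls`.
scale_cmd my-confluent-oss cp-zookeeper.servers 5
```

Editing values.yaml keeps the change recorded in the file, while `--set` is handy for a one-off change.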

NOTE: Scaling in/out Kafka brokers without doing Partition Reassignment will cause data loss!!

Be sure to reassign partitions correctly before scaling the Kafka cluster in or out. Please refer to: https://kafka.apache.org/documentation/#basic_ops_cluster_expansion

Install cp-helm-charts with the default 3-broker kafka cluster

helm install cp-helm-charts

Scale kafka brokers out to 5: change brokers under cp-kafka to 5 in values.yaml

helm upgrade <release name> cp-helm-charts

Scale kafka brokers in to 3: change brokers under cp-kafka to 3 in values.yaml

helm upgrade <release name> cp-helm-charts
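Partition reassignment with Kafka's `kafka-reassign-partitions` tool starts from a JSON file listing the topics to move. The sketch below generates that file's content. `topics_to_move_json` is a hypothetical helper, the topic name comes from the example earlier in this README, and the broker list `0,1,2,3,4` is an assumption for a 5-broker cluster. The tool invocation is left commented out so the script runs without a cluster.

```shell
#!/usr/bin/env bash
# Sketch: generate the topics-to-move JSON for kafka-reassign-partitions.
# topics_to_move_json is a hypothetical helper name.

topics_to_move_json() {
  local first=1 topic
  printf '{"topics": ['
  for topic in "$@"; do
    # Separate entries with commas, but not before the first one.
    if [ "$first" -eq 1 ]; then first=0; else printf ', '; fi
    printf '{"topic": "%s"}' "$topic"
  done
  printf '], "version": 1}\n'
}

topics_to_move_json test-rep-one

# Inside the kafka-client pod, with ZOOKEEPERS set as in the setup step:
#   topics_to_move_json test-rep-one > topics-to-move.json
#   kafka-reassign-partitions --zookeeper "$ZOOKEEPERS" \
#     --topics-to-move-json-file topics-to-move.json \
#     --broker-list "0,1,2,3,4" --generate
# Broker ids 0-4 are an assumption; confirm them with `ls /brokers/ids`.
```

The `--generate` step only proposes a plan. Applying and verifying the plan are separate steps, described in the Kafka documentation linked above.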

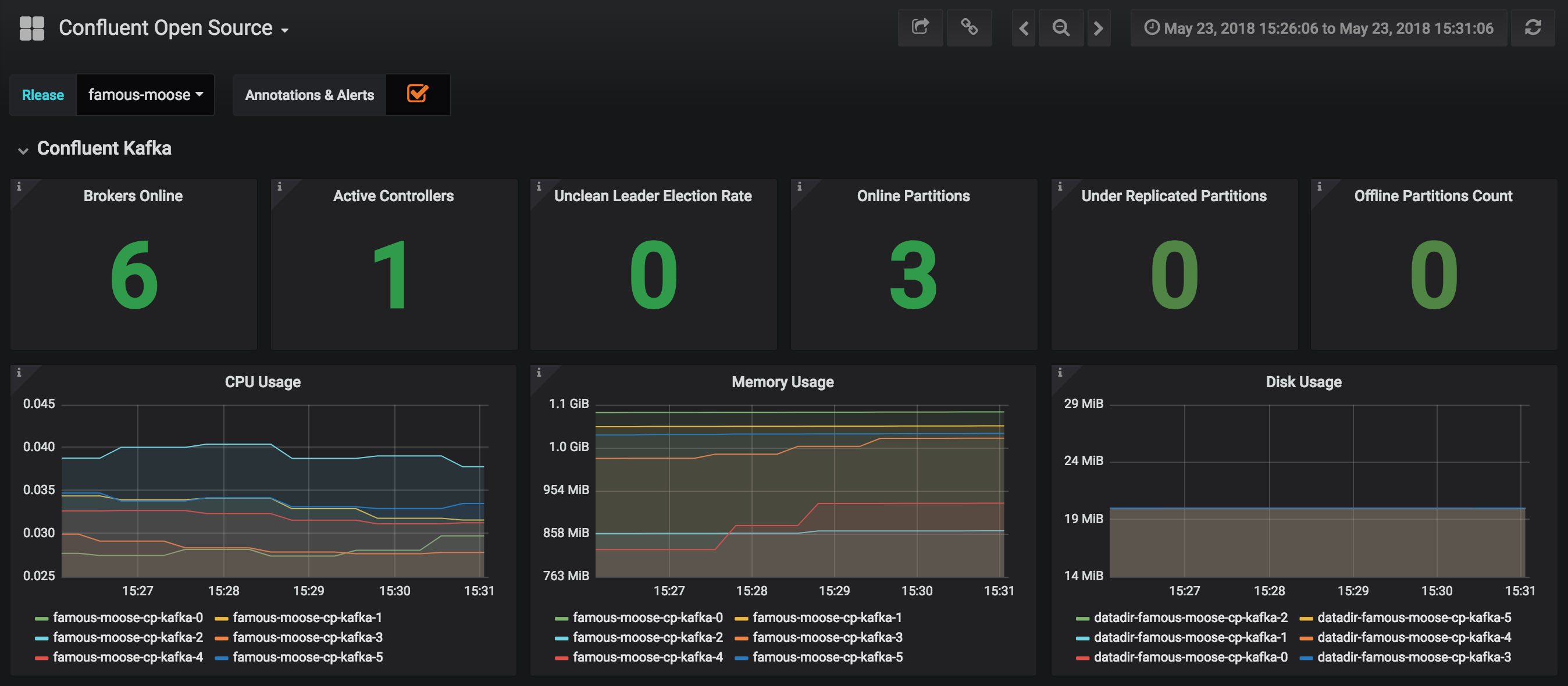

JMX metrics are enabled by default for all components. The Prometheus JMX Exporter is installed as a sidecar container in every Pod.

- Install Prometheus and Grafana in the same Kubernetes cluster using helm

helm install stable/prometheus

helm install stable/grafana

- Add Prometheus as Data Source in Grafana, url should be something like:

http://illmannered-marmot-prometheus-server:80

- Import dashboard under grafana-dashboard into Grafana

Huge thanks to: