This function calling kit is an example tool-calling implementation and a generic function calling module that you can use inside your application workflows.

- Function Calling kit

- Before you begin

- Use the Function Calling kit

- Third-party tools and data sources

This example application for function calling automates multi-step analysis by enabling language models to use information and operations from user-defined functions. While you can use any functions or databases with the application, an example use case is implemented here: uncovering trends in music sales using the provided sample database and tools. By combining natural language understanding, database interaction, code generation, and data visualization, the application provides a real-world example of how models can use function calling to automate multi-step analysis tasks accurately.

In addition to the sample DB of music sales, the application includes four tools that are available for the model to call as functions:

- query_db: Allows users to interact with the sample music sales database via natural language queries. You can ask questions about the data, such as "What are the top-selling albums of all time?" or "What is the total revenue from sales in a specific region?" The function then retrieves the relevant data from the database and displays the results.

- python_repl: Provides a Python Read-Eval-Print Loop (REPL) interface, allowing the model to execute Python code and interact with the results in real-time. The model can use this function to perform data analysis, create data visualizations, or execute custom code to generate and verify the answer to any arbitrary question where code is helpful.

- calculator: Provides a simple calculator interface that allows the model to perform mathematical calculations using natural language inputs. The user can ask questions like "What is 10% of 100?" or "What is the sum of 2+2?" and the function will return the result.

- get_time: Returns the current date and time for use in queries or calculations.
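To make the idea of tools as callable functions concrete, here is a minimal sketch. It is not the kit's implementation (the kit's tools are LangChain tools in src/tools.py). The `TOOLS` registry, the `percent_of` helper, the `dispatch` function, and the JSON call format with `tool` and `tool_input` keys are all hypothetical names chosen for illustration.

```python
import json
from datetime import datetime
from typing import Any, Callable, Dict


def get_time() -> str:
    """Return the current date and time as a formatted string."""
    return datetime.now().strftime("%Y-%m-%d %H:%M:%S")


def percent_of(percent: float, value: float) -> float:
    """Hypothetical stand-in for a calculator tool: percent % of value."""
    return value * percent / 100.0


# Hypothetical registry: tool name -> function the model is allowed to call.
TOOLS: Dict[str, Callable[..., Any]] = {
    "get_time": get_time,
    "percent_of": percent_of,
}


def dispatch(tool_call_json: str) -> Any:
    """Parse a JSON tool call and run the matching registered function."""
    call = json.loads(tool_call_json)
    name = call["tool"]
    if name not in TOOLS:
        raise KeyError(f"Unknown tool: {name}")
    # Keyword arguments come from the (assumed) "tool_input" object.
    return TOOLS[name](**call.get("tool_input", {}))


if __name__ == "__main__":
    print(dispatch('{"tool": "percent_of", "tool_input": {"percent": 10, "value": 100}}'))
    print(dispatch('{"tool": "get_time", "tool_input": {}}'))
```

Keeping the registry explicit means the model can only trigger functions that were deliberately exposed, which mirrors selecting the available tools in the GUI.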

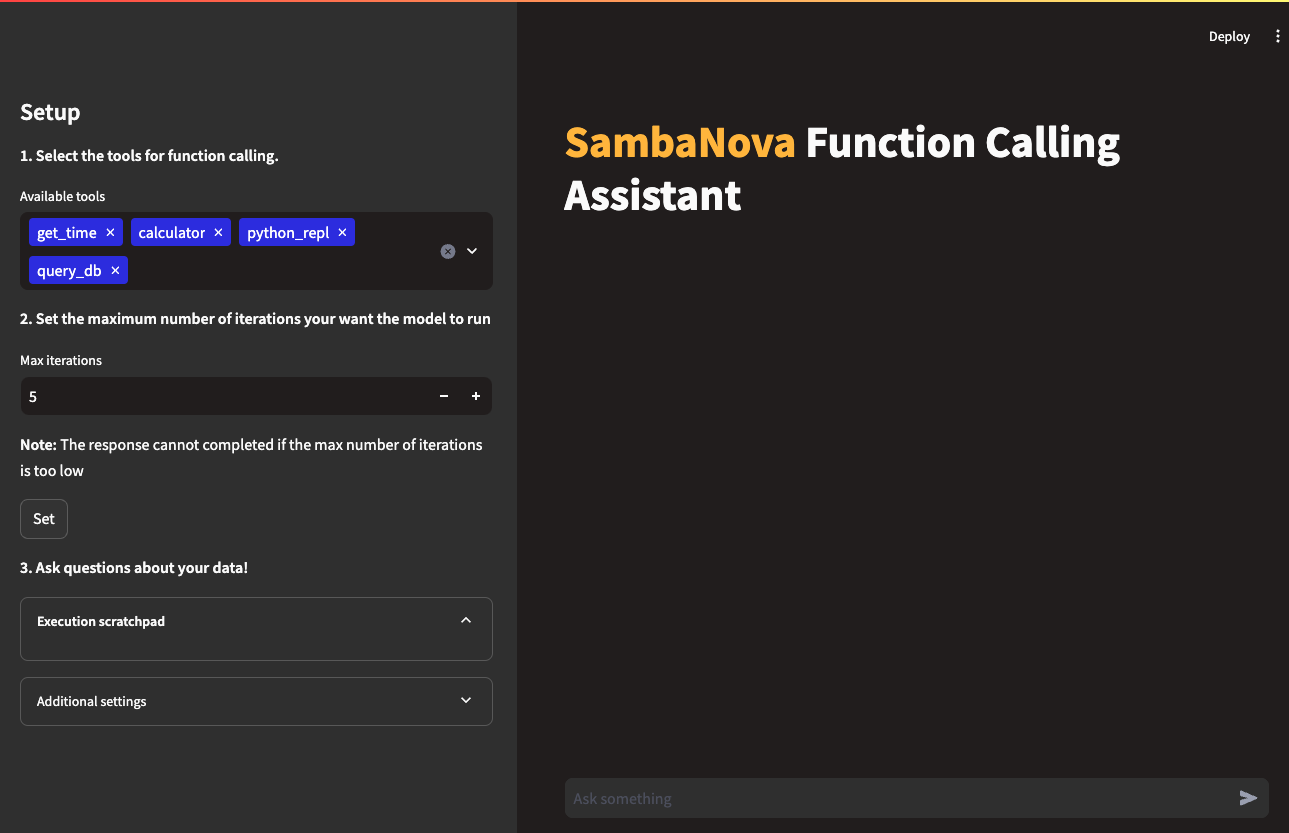

Once the API credentials are set in the Streamlit GUI, a user can select which tools are available to the model for function calling and set the maximum number of iterations (reasoning steps) in which the model can call a tool before stopping. Once these parameters are set, users can submit natural language queries about the dataset or select one of the examples. While the application runs, the user can follow how the model is trying to solve the query in the execution scratchpad and view the output from execution in the chat window.
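The iteration limit and scratchpad described above can be sketched as a bounded loop. This is an illustrative sketch under stated assumptions, not the kit's code: `run_with_tools`, `scripted_model`, and `toy_calculator` are hypothetical names, and a scripted function stands in for the LLM. Only the `max_it` parameter name is taken from the kit's `function_call_llm` call.

```python
from typing import Callable, Dict, List, Tuple

# A model step is ("call", tool_name, argument) or ("final", answer, "").
Step = Tuple[str, str, str]


def run_with_tools(
    query: str,
    model_step: Callable[[str, List[str]], Step],
    tools: Dict[str, Callable[[str], str]],
    max_it: int = 5,
) -> Tuple[str, List[str]]:
    """Let the model call tools for at most max_it iterations."""
    scratchpad: List[str] = []  # record of tool calls and their results
    for _ in range(max_it):
        kind, name_or_answer, argument = model_step(query, scratchpad)
        if kind == "final":
            return name_or_answer, scratchpad
        result = tools[name_or_answer](argument)
        scratchpad.append(f"{name_or_answer}({argument!r}) -> {result}")
    return "Stopped: iteration limit reached.", scratchpad


def scripted_model(query: str, scratchpad: List[str]) -> Step:
    """Stand-in for an LLM: call the calculator once, then answer."""
    if not scratchpad:
        return ("call", "calculator", "2+2")
    return ("final", f"The result is {scratchpad[-1].split(' -> ')[1]}", "")


def toy_calculator(expression: str) -> str:
    """Toy calculator that only handles 'a+b' with integers."""
    left, right = expression.split("+")
    return str(int(left) + int(right))


if __name__ == "__main__":
    answer, steps = run_with_tools(
        "What is the sum of 2+2?", scripted_model, {"calculator": toy_calculator}
    )
    print(steps)
    print(answer)
```

Bounding the loop guarantees termination even if the model keeps requesting tools, at the cost of sometimes stopping before a final answer is produced.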

Here is a five-minute video walking through use of the kit: https://github.com/user-attachments/assets/71e1a4d1-fbc6-4022-a997-d31bfa0b5f14

To use this kit in your application, you need an instruction-tuned model. We recommend Meta Llama3 70B or Llama3 8B.

Clone the starter kit repo.

git clone https://github.com/sambanova/ai-starter-kit.git

The next step is to set up your environment variables to use one of the inference models available from SambaNova. You can obtain a free API key through SambaNova Cloud. Alternatively, if you are a current SambaNova customer, you can deploy your models using SambaStudio.

- SambaNova Cloud (Option 1): Follow the instructions here to set up your environment variables. Then, in the config file, set the llm `api` variable to `"sncloud"` and set the `select_expert` config depending on the model you want to use.

- SambaStudio (Option 2): Follow the instructions here to set up your endpoint and environment variables. Then, in the config file, set the llm `api` variable to `"sambastudio"`, and set the `bundle` and `select_expert` configs if you are using a bundle endpoint.
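As a rough illustration of the two options, the sketch below checks an `llm` config section that has already been loaded into a Python dictionary. The `check_llm_config` helper is hypothetical and not part of the kit. It also assumes that `bundle` is a boolean flag, which the text above does not state.

```python
from typing import Any, Dict, List

# Values for the llm "api" variable named in the two options.
VALID_APIS = ("sncloud", "sambastudio")


def check_llm_config(llm: Dict[str, Any]) -> List[str]:
    """Return a list of problems found in the llm section (empty if none)."""
    problems: List[str] = []
    api = llm.get("api")
    if api not in VALID_APIS:
        problems.append(f"api must be one of {VALID_APIS}, got {api!r}")
        return problems
    if api == "sncloud" and not llm.get("select_expert"):
        problems.append("select_expert must name the model to use")
    # Assumption: "bundle" is a boolean marking a SambaStudio bundle endpoint.
    if api == "sambastudio" and llm.get("bundle") and not llm.get("select_expert"):
        problems.append("select_expert is required for a bundle endpoint")
    return problems


if __name__ == "__main__":
    # "<model name>" is a placeholder, not a real model identifier.
    print(check_llm_config({"api": "sncloud", "select_expert": "<model name>"}))
    print(check_llm_config({"api": "sambastudio", "bundle": True}))
```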

You have the following options to set up your embedding model:

- CPU embedding model (Option 1): In the config file, set the variable `type` in `embedding_model` to `"cpu"`.

- SambaStudio embedding model (Option 2): To increase inference speed, you can use a SambaStudio embedding model endpoint instead of the default (CPU) Hugging Face embedding. Follow the instructions here to set up your endpoint and environment variables. Then, in the config file, set the variable `type` in `embedding_model` to `"sambastudio"`, and set the configs `batch_size`, `bundle`, and `select_expert` according to your SambaStudio endpoint.

We recommend that you run the starter kit in a virtual environment. We also recommend using Python >= 3.10 and < 3.12.
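If you want to confirm that your interpreter falls in the recommended range before creating the virtual environment, a small check like the following can help. The `is_supported_python` helper is a hypothetical name and not part of the kit.

```python
import sys
from typing import Tuple


def is_supported_python(version: Tuple[int, int]) -> bool:
    """True for Python >= 3.10 and < 3.12, the range recommended above."""
    return (3, 10) <= version < (3, 12)


if __name__ == "__main__":
    current = (sys.version_info.major, sys.version_info.minor)
    status = "within" if is_supported_python(current) else "outside"
    print(f"Python {current[0]}.{current[1]} is {status} the recommended range")
```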

Install the Python dependencies in your project environment:

cd ai-starter-kit/function_calling

python3 -m venv function_calling_env

source function_calling_env/bin/activate

pip install -r requirements.txt

We provide a simple GUI that allows you to interact with your function calling model.

To run it, execute the following command:

streamlit run streamlit/app.py --browser.gatherUsageStats false

After deploying the starter kit GUI app, you see the user interface. On that page, you can select your function calling tools and the maximum number of iterations available for the model to answer your query.

We provide a simple module for using the function calling LLM. To use it:

- Create your set of tools:

  You can create a set of tools that you want the model to be able to use. These tools should be LangChain tools.

  We provide examples of LangChain integrated tools and of custom tool implementations in src/tools.py and in the step-by-step notebook.

  See more in LangChain tools.

- Instantiate your function calling LLM, passing the model to use (SambaStudio) and the required tools as arguments. You can then invoke the function calling pipeline using the `function_call_llm` method, passing the user query:

      from function_calling.src.function_calling import FunctionCallingLlm

      # Define your tools
      from function_calling.src.tools import get_time, calculator, python_repl, query_db

      tools = [get_time, calculator, python_repl, query_db]

      fc = FunctionCallingLlm(tools)

      fc.function_call_llm("<user query>", max_it=5, debug=True)

We provide a usage notebook that you can use as a guide for using the function calling module.

The example module can be further customized based on the use case.

The complete tool generation, methods, prompting, and parsing used to implement function calling can be found in the Guide notebook, where you can further customize them for your specific use case.

All the packages/tools are listed in the requirements.txt file in the project directory.