- Multimodal Knowledge Retrieval

- Overview

- Before you begin

- Deploy the starter kit GUI

- Use the starter kit GUI

- Workflows

- Customizing the starter kit

- Third-party tools and data sources

This AI Starter Kit is an example of a multimodal retrieval workflow. You load your images or PDF files and get answers to questions about the documents' content. The kit includes:

- A configurable SambaStudio connector. The connector generates answers from a deployed multimodal model and a deployed LLM.

- An integration with a third-party vector database.

- An implementation of a semantic search workflow.

- An implementation of a multi-vector database for searching over document summaries.

- Prompt construction strategies.

This sample is ready-to-use. We provide two options:

- Use the starter kit GUI: run a demo by following a few simple steps. You must first perform the setup in Before you begin and Deploy the starter kit GUI.

- Customizing the starter kit: use the kit as a starting point for customizing the demo to your organization's needs.


The AI Starter Kit can retrieve and analyze images contained in your source documents and combine them with text and table information to generate comprehensive answers.

Image Source Retrieval: The system retrieves and shows the image sources used to generate an answer, allowing you to perform further analysis on the original images.

- General Image Understanding: The model is capable of understanding the general content and some specific details of images such as photos, logos, and other visual elements.

- Charts and Graphs:
  - General Understanding: The model can understand the generalities of charts, including simple and uncluttered charts where it can identify trends.
  - Axes Recognition: It usually recognizes what the axes in plots represent.
  - Detailed Data Points and Comparative Analysis: It is not proficient at making comparisons between data points or performing detailed analyses of graphs.

- Diagrams and Schematics:
  - General Understanding: The model can grasp general concepts in diagrams such as schematics and database schemas.
  - Detailed Information: It is unreliable at generating detailed information, such as text contained within diagrams or specific details of connections, and can sometimes produce hallucinated information.

- PDF Documents: The model performs well in analyzing PDF documents that include both text and images. It uses tables and images to generate comprehensive answers and provides related images to support its responses.

This overview should help you understand the strengths and limitations of the image analysis capabilities in this AI Starter Kit, so that you can make better use of the system.

To use this starter kit, you need both an LVLM (large vision-language model) and an LLM.

- We use the Llama 3.2 11B LVLM, which is currently available in SambaNova Cloud.

- You can use an LLM of your choice, from SambaStudio or SambaNova Cloud.

It might make sense to use SambaNova Cloud models for both.

Clone the starter kit repo.

```bash
git clone https://github.com/sambanova/ai-starter-kit.git
```

- Deploy the LVLM of your choice (e.g., Llava 1.5) to an endpoint for inference in SambaStudio, either through the GUI or the CLI. See the SambaStudio endpoint documentation.

The next step is to set up your environment variables to use one of the models available from SambaNova. If you're a current SambaNova customer, you can deploy your models with SambaStudio. If you are not a SambaNova customer, you can provision API endpoints yourself through SambaNova Cloud.

- If using SambaNova Cloud: Follow the instructions here for setting up your environment variables. Then, in the config file, set the `type` variable of `llm` and `lvlm` to `"sncloud"`, and set the `select_expert` config depending on the models you want to use.

- If using SambaStudio: Follow the instructions here for setting up your endpoint and environment variables. Then, in the config file, set the `type` variable of `llm` and `lvlm` to `"sambastudio"`, and set the `bundle` and `select_expert` configs if you are using bundle endpoints.

You have these options to specify the embedding API info:

- Option 1: Use a CPU embedding model

  In the config file, set the variable `type` in `embedding_model` to `"cpu"`.

- Option 2: Set a SambaStudio embedding model

  To increase inference speed, you can use a SambaStudio embedding model endpoint instead of the default (CPU) Hugging Face embeddings.

  1. Follow the instructions here for setting up your environment variables.

  2. In the config file, set the variable `type` in `embedding_model` to `"sambastudio"`, and set the configs `batch_size`, `bundle`, and `select_expert` according to your SambaStudio endpoint.

NOTE: Using different embedding models (cpu or sambastudio) may change the results, and it changes how the embedding model is set up and which parameters it takes.
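Before launching the app, the settings above can be sanity-checked in a few lines. This is a minimal sketch, not part of the kit: it assumes `config.yaml` has already been loaded into a plain dict (for example with PyYAML) and that `llm`, `lvlm`, and `embedding_model` are top-level sections with a `type` key. The layout and the `check_config` name are assumptions, so compare them against the real file.

```python
# Hypothetical sketch: validate the model settings described above.
# Assumes config.yaml was loaded into a dict with top-level "llm", "lvlm", and
# "embedding_model" sections, each holding a "type" key; check the real file.

VALID_MODEL_TYPES = {"sncloud", "sambastudio"}
VALID_EMBEDDING_TYPES = {"cpu", "sambastudio"}
SAMBASTUDIO_EMBEDDING_KEYS = ("batch_size", "bundle", "select_expert")


def check_config(config: dict) -> list[str]:
    """Return human-readable problems found in the (assumed) config layout."""
    problems: list[str] = []

    # The LLM and the LVLM each need a supported endpoint type.
    for section in ("llm", "lvlm"):
        section_cfg = config.get(section, {})
        model_type = section_cfg.get("type")
        if model_type not in VALID_MODEL_TYPES:
            problems.append(
                f"{section}.type must be one of {sorted(VALID_MODEL_TYPES)}, got {model_type!r}"
            )
        elif model_type == "sncloud" and "select_expert" not in section_cfg:
            problems.append(f"{section}.select_expert should name the SambaNova Cloud model to use")

    # The embedding model runs either on CPU or on a SambaStudio endpoint.
    embedding_cfg = config.get("embedding_model", {})
    embedding_type = embedding_cfg.get("type")
    if embedding_type not in VALID_EMBEDDING_TYPES:
        problems.append(
            f"embedding_model.type must be one of {sorted(VALID_EMBEDDING_TYPES)}, got {embedding_type!r}"
        )
    elif embedding_type == "sambastudio":
        for key in SAMBASTUDIO_EMBEDDING_KEYS:
            if key not in embedding_cfg:
                problems.append(f"embedding_model.{key} is required for a SambaStudio endpoint")

    return problems
```

An empty list means every check passed. Otherwise, each entry names the key that needs attention.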

Install Tesseract-OCR:

- Ubuntu installation:

  ```bash
  sudo apt install tesseract-ocr
  ```

- Mac Homebrew installation:

  ```bash
  brew install tesseract
  ```

- Windows installation:

  > [Windows Tesseract installation](https://github.com/UB-Mannheim/tesseract/wiki)

- For other Linux distributions, follow the Tesseract-OCR installation guide.

We recommend that you run the starter kit in a virtual environment or use a container.

NOTE: Python 3.10 or higher is required to run this kit.
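You can confirm these prerequisites before installing with a short check like the one below. This is a minimal sketch, not part of the kit. It only tests the Python version and whether a `tesseract` executable is on your PATH, and the `check_prerequisites` name is hypothetical.

```python
import shutil
import sys


def check_prerequisites() -> dict[str, bool]:
    """Report whether the Python version and Tesseract requirements are met."""
    return {
        # This kit requires Python 3.10 or higher.
        "python_3_10_plus": sys.version_info >= (3, 10),
        # shutil.which returns None when no "tesseract" executable is found on PATH.
        "tesseract_on_path": shutil.which("tesseract") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'OK' if ok else 'MISSING'}")
```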

1. Create and activate a virtual environment, then install the requirements:

   ```bash
   cd ai-starter-kit/multimodal_knowledge_retriever
   python3 -m venv multimodal_knowledge_env
   source multimodal_knowledge_env/bin/activate
   pip install -r requirements.txt
   ```

2. Run the following command:

   ```bash
   streamlit run streamlit/app.py --browser.gatherUsageStats false
   ```

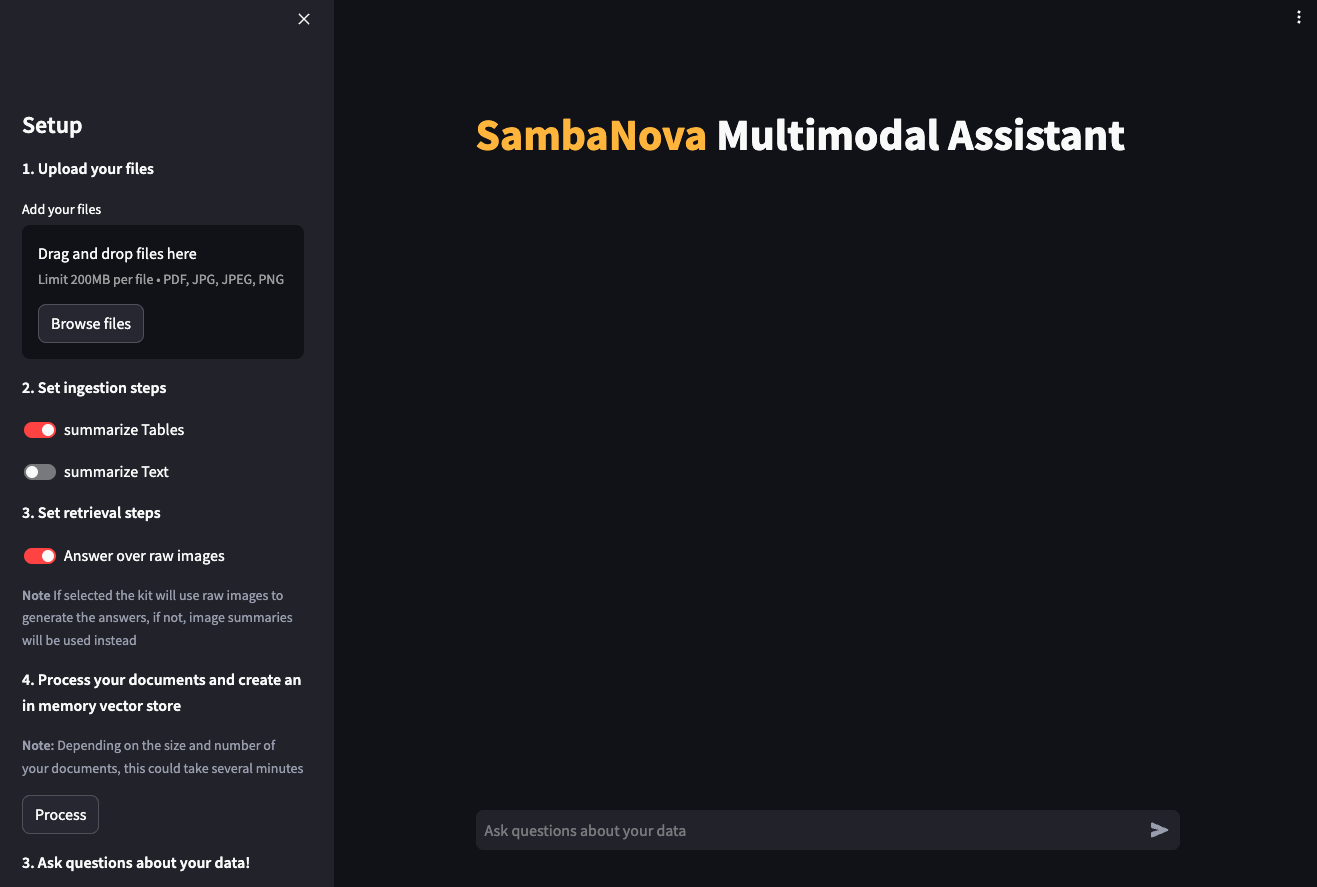

After deploying the starter kit you see the following user interface:

NOTE: If you are deploying the Docker container on Windows, be sure to open the Docker Desktop application first.

To run the starter kit with Docker, run the following command:

```bash
docker-compose up --build
```

Then go to http://localhost:8501/ in your browser, where you will be greeted with the Streamlit page shown above.

When the GUI is up and running, follow these steps to use it:

1. In the left pane, under Upload your files, drag and drop PDF or image files, or browse for files.

2. Under Set ingestion steps, select the processing steps you want to apply to the loaded files.

3. Under Set retrieval steps, select the retrieval method that you want to use for answering. See more in Q&A workflow.

4. Click Process to process all loaded files. A vector store is created in memory.

5. Ask questions about your data in the main panel.

The following workflows are part of this starter kit out of the box.

This workflow is an example of parsing and indexing data for subsequent Q&A. The steps are:

1. Document parsing: The unstructured inference Python package is used to extract text from PDF documents. Multiple integrations for text extraction from PDF are available on the LangChain website. Depending on the quality and the format of the PDF files, this step might require customization for different use cases.

2. Split data: After the data has been parsed and its content extracted, we need to split the data into chunks of text to be embedded and stored in a vector database. The size of the chunks of text depends on the context (sequence) length offered by the model. Generally, larger chunks of related context result in better performance. The method used to split text also has an impact on performance (for instance, making sure that chunks do not break in the middle of words or sentences). The parsed data is split using the unstructured `partition_pdf` method with `chunking_strategy="by_title"`.

3. Summarize data: Text chunks and identified tables are summarized using the selected LLM. For images, the LVLM is used as the image summarizer.

4. Embed data: For each chunk from the previous step, we use an embeddings model to create a vector representation. These embeddings are used in the storage and retrieval of the most relevant content given a user's query.

   For more information about what an embedding is, click here.

5. Store embeddings: Embeddings for each chunk are stored in a vector database, along with content and relevant metadata (such as source documents). The embedding acts as the index in the database. In this starter kit, we store information with each entry, which can be modified to suit your needs. Several vector databases are available, each with their own pros and cons. This AI starter kit is set up to use Chroma as the vector database because it is a free, open-source option with straightforward setup, but you could use a different vector database if you like.
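The by-title splitting in the Split data step can be pictured with the simplified, standard-library sketch below. It is not unstructured's implementation. The `Element` class and `chunk_by_title` function are hypothetical, and only the parameter names mirror the `max_characters`, `new_after_n_chars`, and `combine_text_under_n_chars` settings in `config.yaml`. The sketch starts a section at each title, merges small sections into the next one, and packs each section into chunks using a soft limit and a hard limit.

```python
from dataclasses import dataclass

SEPARATOR = "\n\n"


@dataclass
class Element:
    """A parsed document element; category mimics labels such as "Title" or "NarrativeText"."""
    category: str
    text: str


def _joined_len(elements: list[Element]) -> int:
    return len(SEPARATOR.join(e.text for e in elements))


def chunk_by_title(
    elements: list[Element],
    max_characters: int = 1000,
    new_after_n_chars: int = 800,
    combine_text_under_n_chars: int = 500,
) -> list[str]:
    # 1. Start a new section at every Title element.
    sections: list[list[Element]] = []
    for el in elements:
        if el.category == "Title" or not sections:
            sections.append([el])
        else:
            sections[-1].append(el)

    # 2. Merge a section shorter than combine_text_under_n_chars into the next one,
    #    as long as the merged text stays within max_characters.
    combined: list[list[Element]] = []
    for section in sections:
        if (
            combined
            and _joined_len(combined[-1]) < combine_text_under_n_chars
            and _joined_len(combined[-1] + section) <= max_characters
        ):
            combined[-1] = combined[-1] + section
        else:
            combined.append(section)

    # 3. Pack each section into chunks: close a chunk once it reaches the soft limit
    #    (new_after_n_chars) or when the next piece would exceed the hard limit.
    chunks: list[str] = []
    for section in combined:
        current: list[str] = []
        for el in section:
            # Hard-split any single element longer than max_characters.
            for start in range(0, len(el.text), max_characters):
                piece = el.text[start:start + max_characters]
                current_len = len(SEPARATOR.join(current))
                too_long = len(SEPARATOR.join(current + [piece])) > max_characters
                if current and (current_len >= new_after_n_chars or too_long):
                    chunks.append(SEPARATOR.join(current))
                    current = []
                current.append(piece)
        if current:
            chunks.append(SEPARATOR.join(current))
    return chunks
```

Keeping chunks inside section boundaries means that a title stays with the text that follows it. This is the main reason to prefer title-based splitting over fixed-size splitting.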

This workflow is an example of leveraging data stored in a vector database and doc storage, along with a large language model, to enable retrieval-based Q&A over your data. The steps are:

1. Embed query: The first step is to convert a user-submitted query into a common representation (an embedding) for subsequent use in identifying the most relevant stored content. Use the same embedding model for ingestion and for embedding queries. In this sample, the query text is embedded using HuggingFaceInstructEmbeddings, which is the same model used in the ingestion workflow.

2. Retrieve relevant documents: Next, we use the embedding of the query to make a retrieval request from the vector database, which in turn returns relevant entries (documents or summaries). Together, the vector database and doc storage act as a retriever: the vector database finds the relevant entries, and the original chunks are fetched from the doc storage.
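The retrieval step searches over summaries and returns the original content (the multi-vector pattern). A toy, in-memory sketch makes this concrete, but it is not the kit's code. `embed` is a bag-of-words stand-in for a real embedding model, `MultiVectorStore` is a hypothetical class, and `k` plays the role of `k_retrieved_documents`. Only the summaries are embedded. A lookup by ID then returns the original chunk, table, or image reference.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MultiVectorStore:
    """Search over summaries, but return the original content each summary points to."""

    def __init__(self) -> None:
        self.index: list[tuple[str, Counter]] = []  # (doc_id, embedding of the summary)
        self.docstore: dict[str, str] = {}  # doc_id -> original chunk, table, or image path

    def add(self, doc_id: str, summary: str, original: str) -> None:
        self.index.append((doc_id, embed(summary)))
        self.docstore[doc_id] = original

    def retrieve(self, query: str, k: int = 3) -> list[str]:
        # Embed the query the same way as the summaries, then rank by similarity.
        query_vec = embed(query)
        ranked = sorted(self.index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
        return [self.docstore[doc_id] for doc_id, _ in ranked[:k]]


if __name__ == "__main__":
    store = MultiVectorStore()
    store.add("table-1", "quarterly revenue table by region", "raw table: revenue by region")
    store.add("image-1", "photo of the company logo", "images/logo.png")
    print(store.retrieve("What was the revenue by region?", k=1))
```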

- Find more information about embeddings and their retrieval here.

- Find more information about retrieval-augmented generation with LangChain here.

- Find more information about multi-vector retrievers here.

After the relevant information is retrieved, what happens next depends on the Answer over raw images setting.

- If Answer over raw images is disabled, the content (table and text documents/summaries, and image summaries) is sent directly to a SambaNova LLM to generate a final response to the user query.

- If Answer over raw images is enabled, the retrieved raw images and the query are both sent to the LVLM, which returns an intermediate answer to the query for each image. These intermediate answers are combined with the relevant text and table documents/summaries to form the context.
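The two paths can be sketched as a single function. This is a hypothetical illustration, not the kit's code. `ask_lvlm` stands in for a call to the deployed LVLM, and the inputs are plain strings rather than the kit's document objects.

```python
from typing import Callable


def build_context(
    query: str,
    text_and_table_docs: list[str],
    image_summaries: list[str],
    image_paths: list[str],
    answer_over_raw_images: bool,
    ask_lvlm: Callable[[str, str], str],
) -> str:
    """Assemble the LLM context for one query, following the two paths described above."""
    if answer_over_raw_images:
        # Send each retrieved raw image with the query to the LVLM and
        # keep its intermediate answer as context.
        image_context = [ask_lvlm(path, query) for path in image_paths]
    else:
        # Use the image summaries generated at ingestion time instead of the raw images.
        image_context = list(image_summaries)
    # Text and table documents/summaries are included in both cases.
    return "\n\n".join(text_and_table_docs + image_context)
```

Enabling raw-image answering costs one LVLM call per retrieved image. In exchange, the LLM sees answers grounded in the images themselves rather than in summaries written before the question was known.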

The user's query is combined with the retrieved context along with instructions to form the prompt before being sent to the LLM. This process involves prompt engineering, and is an important part of ensuring quality output. In this AI starter kit, customized prompts are provided to the LLM to improve the response quality.

Learn more about prompt engineering.

The example template can be further customized based on the use case.

PDF Format: Different packages are available to extract text from PDF files. They can be broadly categorized as:

- OCR-based: pytesseract, paddleOCR, unstructured

- Non-OCR based: pymupdf, pypdf, unstructured

Most of these packages have easy integrations with the LangChain library.

You can find examples of the usage of these loaders in the Data extraction starter kit.

The unstructured package includes an easy-to-use image extraction method based on the YOLOX model, which this kit uses by default. If you use a different extraction tool, you need to implement a suitable image extraction strategy.

You can include a new loader in the following location:

file: src/multimodal.py

function: extract_pdf

This kit uses the data normalization and partition strategy from the unstructured package but you can experiment with different ways of splitting the data, such as splitting by tokens or using context-aware splitting for code or markdown files. LangChain provides several examples of different kinds of splitting here.

You can modify the maximum length of each chunk in the following location:

file: config.yaml

```yaml
retrieval:
    "max_characters": 1000
    "new_after_n_chars": 800
    "combine_text_under_n_chars": 500
    ...
```

Several open-source embedding models are available on Hugging Face. This leaderboard ranks these models based on the Massive Text Embedding Benchmark (MTEB). A number of these models are available on SambaStudio and can be further fine-tuned on specific datasets to improve performance.

You can do this modification in the following location:

file: src/multimodal.py

function: create_vectorstore

For details about the SambaStudio-hosted embedding models, see the section Use Sambanova's LLMs and Embeddings Langchain wrappers here.

The starter kit can be customized to use different vector databases to store the embeddings generated by the embedding model. The LangChain vector stores documentation provides a broad collection of vector stores that can be easily integrated.

You can do this modification in the following location:

file: src/multimodal.py

function: create_vectorstore

A wide collection of retriever options is available. In this starter kit, the vector store and doc store are used as the retriever, but they can be enhanced and customized, as shown here.

You can do this modification in the following locations:

file: config.yaml

```yaml
"k_retrieved_documents": 3
```

file: app.py

function: get_retrieval_chain

You can customize the Large Language Model. The customization depends on the endpoint that you are using.

The starter kit uses the SN LLM model, which can be further fine-tuned to improve response quality.

- To train a model in SambaStudio, prepare your training data, import your dataset into SambaStudio, and run a training job.

- To modify the parameters for calling the model, make changes to the config.yaml file.

- You can also set the values of temperature and maximum generation tokens in that file.

Prompting has a significant effect on the quality of LLM responses. Prompts can be further customized to improve the quality of the responses from the LLMs. For example, in this starter kit, the following prompt was used to generate a response from the LLM, where question is the user query and context contains the documents retrieved by the retriever.

```python
from langchain_core.prompts import PromptTemplate

# {context} receives the retrieved documents; {question} receives the user query.
custom_prompt_template = """[INST]<<SYS>> You are a helpful assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If the answer is not in the context, say that you don't know. Cross check if the answer is contained in provided context. If not than say \"I do not have information regarding this\". Do not use images or emojis in your answer. Keep the answer conversational and professional.<</SYS>>
{context}
Question: {question}
Helpful answer: [/INST]"""

CUSTOMPROMPT = PromptTemplate(
    template=custom_prompt_template, input_variables=["context", "question"]
)
```

You can make modifications in the prompts folder.
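To see what the LLM receives after retrieval has finished, you can fill the template with the retrieved documents. The sketch below uses plain `str.format` on a shortened copy of the template with the same `{context}` and `{question}` placeholders. This is the same substitution that `PromptTemplate.format(context=..., question=...)` performs. The `format_prompt` helper and the choice to join documents with blank lines are assumptions for illustration.

```python
# Shortened copy of the template above; the {context} and {question} placeholders are the same.
TEMPLATE = """[INST]<<SYS>> You are a helpful assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If the answer is not in the context, say that you don't know.<</SYS>>
{context}
Question: {question}
Helpful answer: [/INST]"""


def format_prompt(retrieved: list[str], question: str) -> str:
    """Fill the template with retrieved documents/summaries and the user's question."""
    # Hypothetical choice: separate retrieved entries with blank lines.
    context = "\n\n".join(retrieved)
    return TEMPLATE.format(context=context, question=question)


if __name__ == "__main__":
    print(format_prompt(
        ["Revenue grew in Q2.", "Figure 3 shows revenue by region."],
        "How did revenue change in Q2?",
    ))
```

Printing the filled prompt this way is a quick method for checking whether a poor answer comes from the retrieved context or from the instructions.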

All the packages/tools are listed in the requirements.txt file in the project directory.