- Web Crawled Data Retrieval

- Overview

- Before you begin

- Deploy the starter kit GUI

- Use the starter kit GUI

- Workflows

- Customizing the AI starter kit

- Third-party tools and data sources

This AI starter kit is an example of a semantic search workflow that you can build with the SambaNova platform to answer questions using website-crawled information as the source. It includes:

- A configurable SambaStudio connector for running inference off a model already available in SambaStudio.

- A configurable integration with a third-party vector database.

- An implementation of a semantic search workflow and prompt construction strategies.

This sample is ready to use. We provide:

- Instructions for setup.

- Instructions for running the model as is.

- Instructions for customization.

You can view a demo video of deploying and using the starter kit:

webbcrawling_medium.mp4

You have to set up your environment before you can run or customize the starter kit.

Clone the starter kit repo.

git clone https://github.com/sambanova/ai-starter-kit.git

The next step is to set up your environment variables to use one of the models available from SambaNova. If you're a current SambaNova customer, you can deploy your models with SambaStudio. If you are not a SambaNova customer, you can self-service provision API endpoints using SambaNova Cloud.

- If using SambaNova Cloud: Follow the instructions here for setting up your environment variables. Then, in the config file, set the llm api variable to "sncloud" and set the select_expert config depending on the model you want to use.

- If using SambaStudio: Follow the instructions here for setting up your endpoint and environment variables. Then, in the config file, set the llm api variable to "sambastudio", and set the bundle and select_expert configs if using a bundle endpoint.

You have these options to specify the embedding API info:

- Option 1: Use a CPU embedding model

  In the config file, set the variable type in embedding_model to "cpu".

- Option 2: Set a SambaStudio embedding model

  To increase inference speed, you can use a SambaStudio embedding model endpoint instead of the default (CPU) Hugging Face embeddings.

  - Follow the instructions here for setting up your environment variables.

  - In the config file, set the variable type in embedding_model to "sambastudio", and set the configs batch_size, bundle, and select_expert according to your SambaStudio endpoint.

NOTE: Using different embedding models (cpu or sambastudio) may change the results, and may change how the embedding model is set and what the parameters are.

We recommend that you run the starter kit in a virtual environment or use a container.

If you want to use a virtualenv or conda environment:

- Create and activate a virtual environment, then install the requirements with pip.

  cd ai-starter-kit/web_crawled_data_retriever
  python3 -m venv web_crawled_data_retriever_env
  source web_crawled_data_retriever_env/bin/activate
  pip install -r requirements.txt

- Run the following command:

  streamlit run streamlit/app.py --browser.gatherUsageStats false

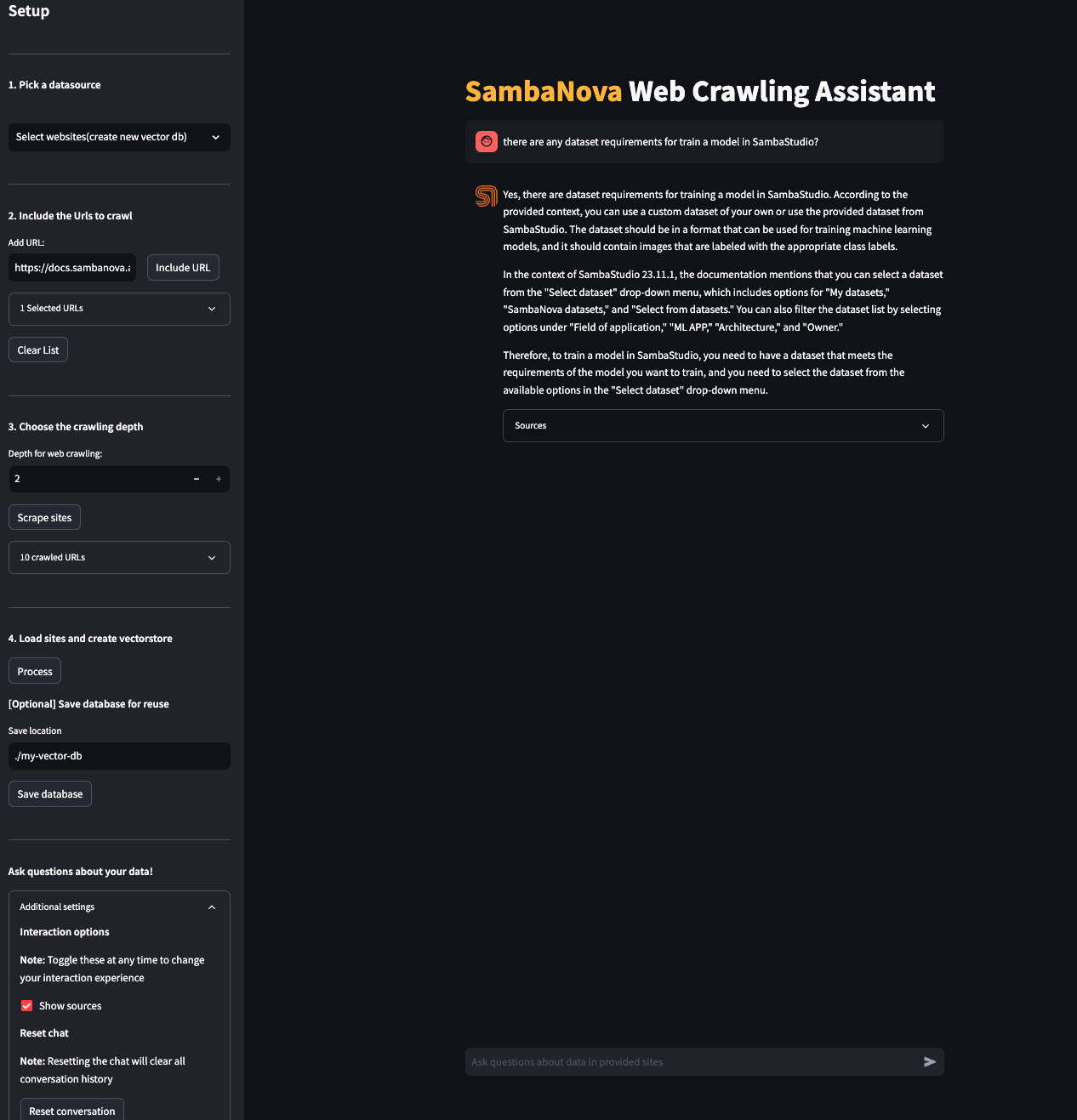

You should see the following user interface:

If you want to use Docker, run the following command:

docker-compose up --build

You will be prompted to open the link (http://localhost:8501/) in your browser, where you will see the Streamlit GUI shown above.

After you've deployed the GUI, you can use the starter kit. Follow these steps:

- Under Pick a datasource, select a previously stored FAISS vectorstore or a list of website URLs.

- Under Include the Urls to crawl, specify each site you want to crawl in the text area and click Include URL. You can clear the list if needed.

- Under Choose the crawling depth, specify how many layers of internal links to explore (limited to 2).

  NOTE: An increase in crawling depth leads to exponential growth in the number of processed sites. Consider resource implications for efficient workflow performance.

- Click Scrape sites to crawl and process the sites. The app creates a vectorstore in memory, which you can store on disk if you want.

- Use the question area on the right to ask questions about website data!

This AI starter kit implements these distinct workflows. Each consists of a sequence of operations.

This workflow is an example of crawling, parsing and indexing data for subsequent Q&A. The steps are:

- Website crawling: The LangChain AsyncHtmlLoader, which is built on top of the requests and aiohttp Python packages, is used to scrape the HTML from the websites.

  This starter kit uses an iterative approach to delve deeper into the website's references.

  - First we load the initial HTML content and extract links using the BeautifulSoup package.

  - For each extracted link, we repeat the crawling process, loading the linked page's HTML content, and again identifying additional links within that content.

  This iterative cycle continues for 'n' iterations, where 'n' represents the specified depth. With each iteration, the workflow explores deeper levels of the website hierarchy, progressively expanding the scope of data retrieval.

- Document parsing: Document transformers are tools used to transform and manipulate documents. They take structured documents as input and apply transformations to extract specific information or modify the document's content. Document transformers can extract properties, generate summaries, translate text, filter redundant documents, and more. These transformers can process a large number of documents efficiently and can be used to preprocess data before further analysis or to generate new versions of the documents with desired modifications.

  We use the LangChain html2text document transformer to extract plain, clean text from the HTML documents. Other document transformers included in the LangChain package, like the BeautifulSoup transformer, are also available for plain text extraction from HTML. Depending on the information you need to extract from websites, this step might require some customization.

  If you want to retrieve remote files, this starter kit includes extra file type loading functionality. You can activate or deactivate these loaders by listing the file types in the extra_loaders parameter of the config file. Currently, remote PDF loading is available.

- Data splitting: Due to token limits in LLMs, you need to split the data into chunks of text to be embedded and stored in a vector database after the data has been parsed and its content extracted. The size of the chunk of text depends on the context (sequence) length offered by the model. Generally, larger context lengths result in better performance. The method used to split text also has an impact on performance (for instance, making sure there are no word breaks, sentence breaks, etc.). The downloaded data is split using RecursiveCharacterTextSplitter.

- Data embedding: For each chunk of text from the previous step, we use an embeddings model to create a vector representation of it. These embeddings are used in the storage and retrieval of the most relevant content given a user's query. The split text is embedded using HuggingFaceInstructEmbeddings.

  For more information about what an embedding is, click here.

- Store embeddings: Embeddings for each chunk, along with content and relevant metadata (such as source website), are stored in a vector database. The embedding acts as the index in the database. In this starter kit, we store information with each entry, which can be modified to suit your needs. Several vector database options are available, each with its own pros and cons. This starter kit uses FAISS as the vector database because it's a free, open-source option with straightforward setup, but it can easily be updated to use another database if desired. In terms of metadata, the website source is also attached to the embeddings, which are stored during web scraping.

This workflow is an example of leveraging data stored in a vector database along with a large language model to enable retrieval-based Q&A of your data. This method is called Retrieval Augmented Generation (RAG). The steps are:

- Embed query: The first step is to convert a user-submitted query into a common representation (an embedding) for subsequent use in identifying the most relevant stored content. Because the query and the stored content must share that representation, we recommend that you use the same embedding model for queries as for ingestion. In this sample, the query text is embedded using HuggingFaceInstructEmbeddings, which is the same model used in the ingestion workflow.

- Retrieve relevant content: Next, we use the embedding of the query to make a retrieval request from the vector database, which returns relevant entries (content). The vector database acts as a retriever for fetching relevant information from the database.

Find more information about embeddings and their retrieval here

Find more information about Retrieval augmented generation with LangChain here
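The two retrieval steps above can be sketched in plain Python. This is a minimal illustration, not the kit's implementation: the `embed` function is a toy bag-of-words stand-in for a real embedding model, the in-memory `store` list stands in for the vector database, and the sample texts and URLs are made up. The point it shows is that the query goes through the same embedding function as the stored chunks and is then ranked by similarity.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: a bag-of-words count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Ingestion side: each entry keeps its embedding, content, and source metadata.
chunks = [
    ("FAISS is an open-source library for vector similarity search.", "https://example.com/faiss"),
    ("Streamlit turns Python scripts into web apps.", "https://example.com/streamlit"),
    ("Crawling depth controls how many layers of links are followed.", "https://example.com/crawl"),
]
store = [{"embedding": embed(text), "content": text, "source": url} for text, url in chunks]


def retrieve(query, k=2):
    """Embed the query with the same function used at ingestion, then rank entries."""
    query_embedding = embed(query)
    ranked = sorted(store, key=lambda entry: cosine(query_embedding, entry["embedding"]), reverse=True)
    return ranked[:k]


question = "What does crawling depth control?"
top = retrieve(question, k=1)
print(top[0]["source"], "->", top[0]["content"])
```

If the query were embedded with a different function than the stored chunks, the similarity scores would no longer be meaningful, which is why the same model is recommended for both workflows.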

When the relevant information has been retrieved, the content is sent to a SambaNova LLM to generate the final response to the user query. The user's query is combined with the retrieved content along with instructions to form the prompt before being sent to the LLM. This process involves prompt engineering, and is an important part in ensuring quality output. In this starter kit, customized prompts are provided to the LLM to improve the quality of response for this use case.

Learn more about prompt engineering here.

You can further customize the code in this starter kit.

Different packages are available to crawl and extract from websites. The demo app uses the AsyncHtmlLoader. LangChain also includes several other HTML loaders that you can use. This modification can be done in the following location:

file: src/web_crawling_retriever.py

function: load_htmls

After scraping, all the referenced links are saved and filtered for each site using the BeautifulSoup package. The web crawling method then iterates 'n' times, scraping the sites and finding referenced links. The maximum depth is currently 2 and the maximum number of crawled sites is 20, but you can modify these limits and the behavior of the web crawling in the following location:

file: config.yaml

web_crawling:
  "max_depth": 2
  "max_scraped_websites": 20

file: src/web_crawling_retriever.py

function: web_crawl

WARNING: An increase in crawling depth leads to exponential growth in the number of processed sites. Consider resource implications for efficient workflow performance.
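To make the effect of the two limits concrete, here is a minimal sketch of a depth-limited, breadth-first crawl. It is not the kit's `web_crawl` function: it uses only the standard library instead of AsyncHtmlLoader and BeautifulSoup, the `fetch` callable and the in-memory `SITE` dictionary are hypothetical stand-ins so the example runs without network access, and the exact way the kit counts depth is an assumption. As a rough upper bound, if every page links to 10 new pages, depth 2 can reach 1 + 10 + 100 = 111 pages, which is why a cap on scraped sites matters.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_urls, fetch, max_depth=2, max_scraped_websites=20):
    """Breadth-first crawl; returns {url: html} for every page visited.

    `fetch` is a hypothetical callable mapping a URL to its HTML (or None on failure).
    """
    pages = {}
    seen = set(start_urls)
    queue = deque((url, 0) for url in start_urls)
    while queue and len(pages) < max_scraped_websites:
        url, depth = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        pages[url] = html
        if depth >= max_depth:
            continue  # do not follow links past the depth limit
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            link = urljoin(url, href)  # resolve relative links against the page URL
            if link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return pages


# In-memory stand-in for a website, so the sketch runs without network access.
SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/c">C</a>',
    "https://example.com/b": "no links here",
    "https://example.com/c": '<a href="/d">D</a>',
    "https://example.com/d": "deepest page",
}

pages = crawl(["https://example.com/"], SITE.get, max_depth=2)
print(sorted(pages))
```

In this sketch the page `/d` is never fetched, because it sits three links away from the start URL and the depth limit is 2.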

Depending on the loader used for scraping the sites, you may want to use a transformation method to clean up the downloaded documents. You can do the cleanup in the following location:

file: src/web_crawling_retriever.py

function: clean_docs

LangChain provides several document transformers that you can use with your data.
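As an illustration of what a custom clean-up step might do, the sketch below strips markup from an HTML string and keeps only the visible text. It is a standard-library stand-in, not the html2text transformer the kit uses, and the names `TextExtractor` and `html_to_text` are hypothetical.

```python
import re
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Keeps visible text and drops the contents of <script> and <style>."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self._parts = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self._parts.append(data)

    def text(self):
        # Collapse the runs of whitespace left behind by the removed markup.
        return re.sub(r"\s+", " ", " ".join(self._parts)).strip()


def html_to_text(html):
    """Return the visible text of an HTML document as a single line."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()


sample = (
    "<html><head><style>p {color: red}</style></head>"
    "<body><h1>Title</h1><script>var x = 1;</script>"
    "<p>Hello   world.</p></body></html>"
)
print(html_to_text(sample))
```

A real transformer usually preserves more structure (headings, lists, links), so treat this only as a starting point for experimenting with what the downstream chunks should contain.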

You can experiment with different ways of splitting the data, such as splitting by tokens or using context-aware splitting for code or markdown files. LangChain provides several examples of different kinds of splitting here.

You can customize the RecursiveCharacterTextSplitter used by this starter kit in the get_text_chunks method of the vectordb class by changing the chunk_size and chunk_overlap parameters.

- For LLMs with a long sequence length, try using a larger value of chunk_size to provide the LLM with broader context and improve performance.

- The chunk_overlap parameter is used to maintain continuity between different chunks.

This modification can be done in the following location:

file: config.yaml

retrieval:
  "chunk_size": 1200
  "chunk_overlap": 240
  "db_type": "faiss"
  "k_retrieved_documents": 4
  "score_treshold": 0.5
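To see how chunk_size and chunk_overlap interact, here is a simplified fixed-window splitter. It is a sketch only: RecursiveCharacterTextSplitter additionally tries to break on separators such as paragraphs, lines, and words, whereas the hypothetical `split_with_overlap` function below cuts purely by character position.

```python
def split_with_overlap(text, chunk_size=1200, chunk_overlap=240):
    """Split text into windows of chunk_size characters that share chunk_overlap characters."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


text = "".join(str(i % 10) for i in range(3000))  # 3000 characters of sample data
chunks = split_with_overlap(text)
print([len(chunk) for chunk in chunks])  # [1200, 1200, 1080]
print(chunks[0][-240:] == chunks[1][:240])  # True: neighbors share the overlap
```

With the values from the config above, each window advances by 960 characters, so a sentence that falls on a chunk boundary still appears whole in at least one of the two neighboring chunks as long as it is shorter than the overlap.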

Several open source embedding models are available on HuggingFace. This leaderboard ranks these models based on the Massive Text Embedding Benchmark (MTEB). Several of these models are available on SambaStudio and can be used or further fine-tuned on specific datasets to improve performance.

This modification can be done in the following location:

file: ../vectordb/vector_db.py

function: load_embedding_model

Find more information about the usage of SambaStudio hosted embedding models in the section Use Sambanova's LLMs and Embeddings Langchain wrappers here.

You can customize the search assistant to use a different vector database to store the embeddings generated by the embedding model. The LangChain vector stores documentation provides a broad collection of vector stores that are easy to integrate.

This modification can be done in the following location:

file: ../vectordb/vector_db.py

function: create_vector_store

Similar to the vector stores, a wide collection of retriever options is also available. This starter kit uses the vector store as a retriever, but it can be enhanced and customized, as shown in some of the examples here.

This modification can be done in the following location:

file: config.yaml

"db_type": "chroma"
"k_retrieved_documents": 3
"score_treshold": 0.6

and

file: src/search_assistant.py

function: retrieval_qa_chain
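The sketch below shows one plausible reading of how the two retrieval settings combine. It assumes that scores are similarity-like values where higher means more relevant, which may not match every vector store or search type. The `select_documents` function and its parameter names are hypothetical (the config key itself is spelled score_treshold), and the scores are made-up sample data.

```python
def select_documents(scored_docs, k_retrieved_documents=3, score_threshold=0.6):
    """Keep documents scoring at least the threshold, best first, at most k of them."""
    kept = [(doc, score) for doc, score in scored_docs if score >= score_threshold]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return kept[:k_retrieved_documents]


# Made-up (document, similarity score) pairs, as a retriever might return them.
scored = [
    ("doc_a", 0.91),
    ("doc_b", 0.42),
    ("doc_c", 0.77),
    ("doc_d", 0.65),
    ("doc_e", 0.60),
]

selected = select_documents(scored)
print(selected)
```

Raising the threshold trades recall for precision: fewer, more relevant chunks reach the prompt, and a query with no sufficiently similar content may retrieve nothing at all.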

You can further customize the model itself.

The starter kit uses the SN LLM model, which can be further fine-tuned to improve response quality.

To train a model in SambaStudio, learn how to:

You can modify the parameters for calling the model, such as the temperature and the maximum number of generated tokens, in the config.yaml file.

Prompting has a significant effect on the quality of LLM responses. Prompts can be further customized to improve the overall quality of the responses from the LLMs. For example, in this starter kit, the following prompt was used to generate a response from the LLM, where question is the user query and context is the set of documents retrieved by the search engine.

template: |

<s>[INST] <<SYS>>\nUse the following pieces of context to answer the question at the end.

If the answer is not in context for answering, say that you don't know, don't try to make up an answer or provide an answer not extracted from provided context.

Cross check if the answer is contained in provided context. If not than say \"I do not have information regarding this.\"\n

context

{context}

end of context

<</SYS>>/n

Question: {question}

Helpful Answer: [/INST]

This modification can be done in the file prompts/llama7b-web_crwling_data_retriever.yaml
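For experimenting with prompt wording outside the app, the placeholder substitution can be reproduced with plain string formatting. This is a sketch under assumptions: `PROMPT_TEMPLATE` is an abbreviated version of the template above (the model-specific instruction tags are omitted), `build_prompt` is a hypothetical helper, and the kit itself loads the template from the YAML file rather than from a Python string.

```python
# Abbreviated template with the same two placeholders as the kit's prompt.
PROMPT_TEMPLATE = (
    "Use the following pieces of context to answer the question at the end.\n"
    "If the answer is not in the context, say that you don't know.\n"
    "context\n"
    "{context}\n"
    "end of context\n"
    "Question: {question}\n"
    "Helpful Answer:"
)


def build_prompt(question, documents):
    """Join the retrieved documents and fill both placeholders of the template."""
    context = "\n\n".join(documents)
    return PROMPT_TEMPLATE.format(context=context, question=question)


retrieved = [
    "The maximum crawling depth is currently 2.",
    "The maximum number of crawled sites is 20.",
]
prompt = build_prompt("What is the maximum crawling depth?", retrieved)
print(prompt)
```

Printing the filled prompt before sending it to the model is a quick way to check that the retrieved context is what you expect and that it fits within the model's sequence length.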

Learn more about prompt engineering here.

All the packages/tools are listed in the requirements.txt file in the project directory.