Benchmarking the performance of automated software engineering against DevBench.

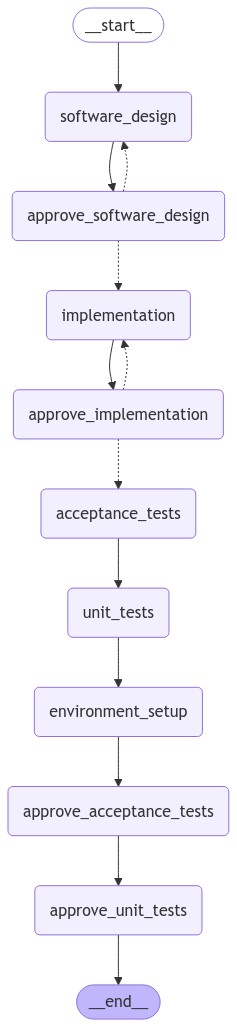

The five DevBench evaluation tasks serve as the primary nodes in the system. Progression to the next node is determined either by hardcoded logic or by an LLM, in nodes prefixed with "approve_".

Tasks/Nodes:

- Software Design

- Environment Setup

- Implementation

- Acceptance Testing

- Unit Testing

We use langgraph to manage the control flow of the system. Decision nodes are prefixed with "approve_" and decide either by hard-coded rules or by calling an LLM. They use conditional edges to route the flow of the system.
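
The routing idea can be sketched in plain Python without langgraph itself. This is a minimal illustration, not the project's implementation: the function names `software_design`, `approve_software_design`, and `environment_setup`, the `attempts` counter, and the "approve on the second attempt" rule are all assumptions made for the example. The `approve_` function plays the role of a conditional edge by returning the name of the next node.

```python
from typing import Callable, Dict

State = Dict[str, object]


def software_design(state: State) -> State:
    # Hypothetical task node: produce (or revise) a design draft.
    attempts = int(state.get("attempts", 0)) + 1
    return {**state, "attempts": attempts, "design": f"design draft v{attempts}"}


def approve_software_design(state: State) -> str:
    # Hypothetical decision node. A hardcoded rule stands in for an LLM call:
    # approve once the design has been attempted at least twice.
    if int(state["attempts"]) >= 2:
        return "environment_setup"
    return "software_design"


def environment_setup(state: State) -> State:
    # Hypothetical task node with no outgoing edge in this sketch.
    return {**state, "environment": "ready"}


# Task nodes, and the decision function attached to each node's outgoing edge.
NODES: Dict[str, Callable[[State], State]] = {
    "software_design": software_design,
    "environment_setup": environment_setup,
}
ROUTERS: Dict[str, Callable[[State], str]] = {
    "software_design": approve_software_design,
}


def run(state: State, entry: str = "software_design", max_steps: int = 10) -> State:
    """Run nodes until one has no router, following each router's decision."""
    current = entry
    for _ in range(max_steps):
        state = NODES[current](state)
        router = ROUTERS.get(current)
        if router is None:
            return state
        current = router(state)
    raise RuntimeError("step limit reached without finishing")
```

Calling `run({})` here loops back to `software_design` once before the rule approves the design and the flow moves on to `environment_setup`. The step limit guards against a decision node that never approves.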

The graph state accumulates the "documents" produced by each node in the graph; these are the final artifacts/outputs.
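
As a rough illustration of this accumulation, the sketch below merges each node's partial update into a shared state by appending to a `documents` list. It is plain Python that mimics an append-style state update rather than the project's actual state schema. The `GraphState` shape, the `merge` helper, the `make_node` factory, and the document fields are assumptions for the example; only the five task names come from the list above.

```python
from typing import Callable, Dict, List, TypedDict


class GraphState(TypedDict):
    # Hypothetical state shape: one growing list of produced documents.
    documents: List[Dict[str, str]]


def merge(state: GraphState, update: Dict[str, List[Dict[str, str]]]) -> GraphState:
    # Append the node's new documents instead of overwriting earlier ones.
    return {"documents": state["documents"] + update.get("documents", [])}


def make_node(task: str) -> Callable[[GraphState], Dict[str, List[Dict[str, str]]]]:
    # Each node returns only the documents it produced (placeholder content).
    def node(state: GraphState) -> Dict[str, List[Dict[str, str]]]:
        return {"documents": [{"task": task, "content": f"output of {task}"}]}

    return node


TASKS = [
    "software_design",
    "environment_setup",
    "implementation",
    "acceptance_testing",
    "unit_testing",
]


def run_all() -> GraphState:
    state: GraphState = {"documents": []}
    for task in TASKS:
        state = merge(state, make_node(task)(state))
    return state
```

After `run_all()`, the state holds one document per task in execution order, which is the collection that would be written out as the final output.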

A .env file is required (see .env.example). It should contain the following:

OPENAI_API_KEY="your key"

LANGCHAIN_API_KEY="your key"

LANGCHAIN_TRACING_V2="true"

LANGCHAIN_PROJECT="autoSWE-1"

LANGCHAIN_ENDPOINT="https://api.smith.langchain.com"

You will need to create a LangChain account to obtain the LangChain API key.
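
Before running, it can help to confirm that every variable listed above is present. The following is a small standard-library sketch for that check, not part of the repository: `parse_env` and `missing_keys` are hypothetical helpers, and the parser handles only simple `KEY="value"` lines like the ones shown here.

```python
from typing import Dict, List

REQUIRED_KEYS = (
    "OPENAI_API_KEY",
    "LANGCHAIN_API_KEY",
    "LANGCHAIN_TRACING_V2",
    "LANGCHAIN_PROJECT",
    "LANGCHAIN_ENDPOINT",
)


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY="value" lines, skipping blanks and # comments."""
    env: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        env[key.strip()] = value.strip().strip('"').strip("'")
    return env


def missing_keys(env: Dict[str, str]) -> List[str]:
    """Return the required keys that are absent or empty, in listed order."""
    return [key for key in REQUIRED_KEYS if not env.get(key)]
```

For example, `missing_keys(parse_env(open(".env").read()))` returns an empty list when the file is complete.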

To point at a specific PRD.md file and run the full program:

python main.py --prd_path path/to/PRD.md --out_path outputs/name_of_repo.json

Alternatively, you can run the example PRD.md file in the repo:

python main.py --out_path outputs/sample.json
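
For orientation, the two flags used above could be parsed along the following lines. This is a hedged sketch of one possible `argparse` setup, not the contents of main.py: the `build_parser` name, the help strings, and the default of `PRD.md` for `--prd_path` are assumptions, since the location of the example PRD file is not stated here.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(
        description="Run the full pipeline on a PRD file."
    )
    # Assumed default: the real location of the example PRD.md may differ.
    parser.add_argument(
        "--prd_path",
        default="PRD.md",
        help="Path to the PRD.md file to process.",
    )
    parser.add_argument(
        "--out_path",
        required=True,
        help="Path of the JSON file to write the outputs to.",
    )
    return parser
```

With this setup, `build_parser().parse_args(["--out_path", "outputs/sample.json"])` falls back to the assumed default PRD path, mirroring the second command above.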