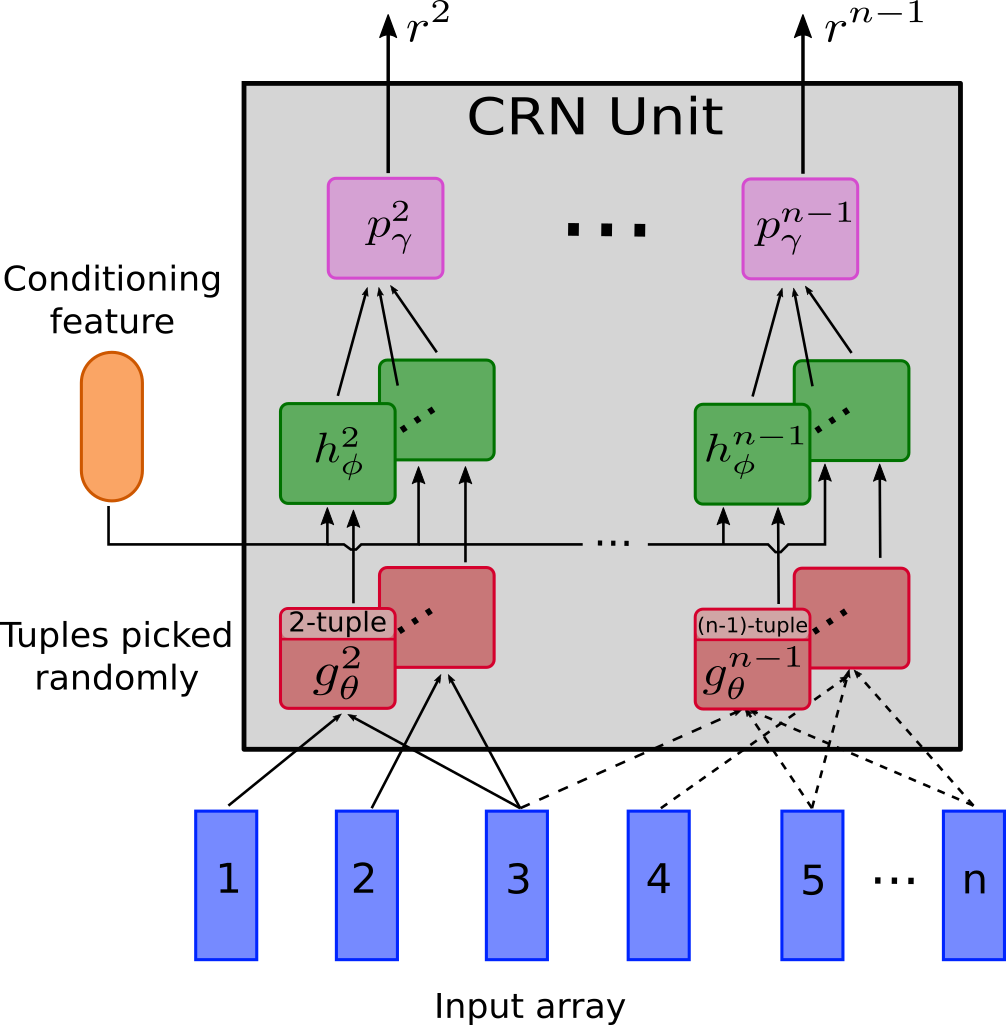

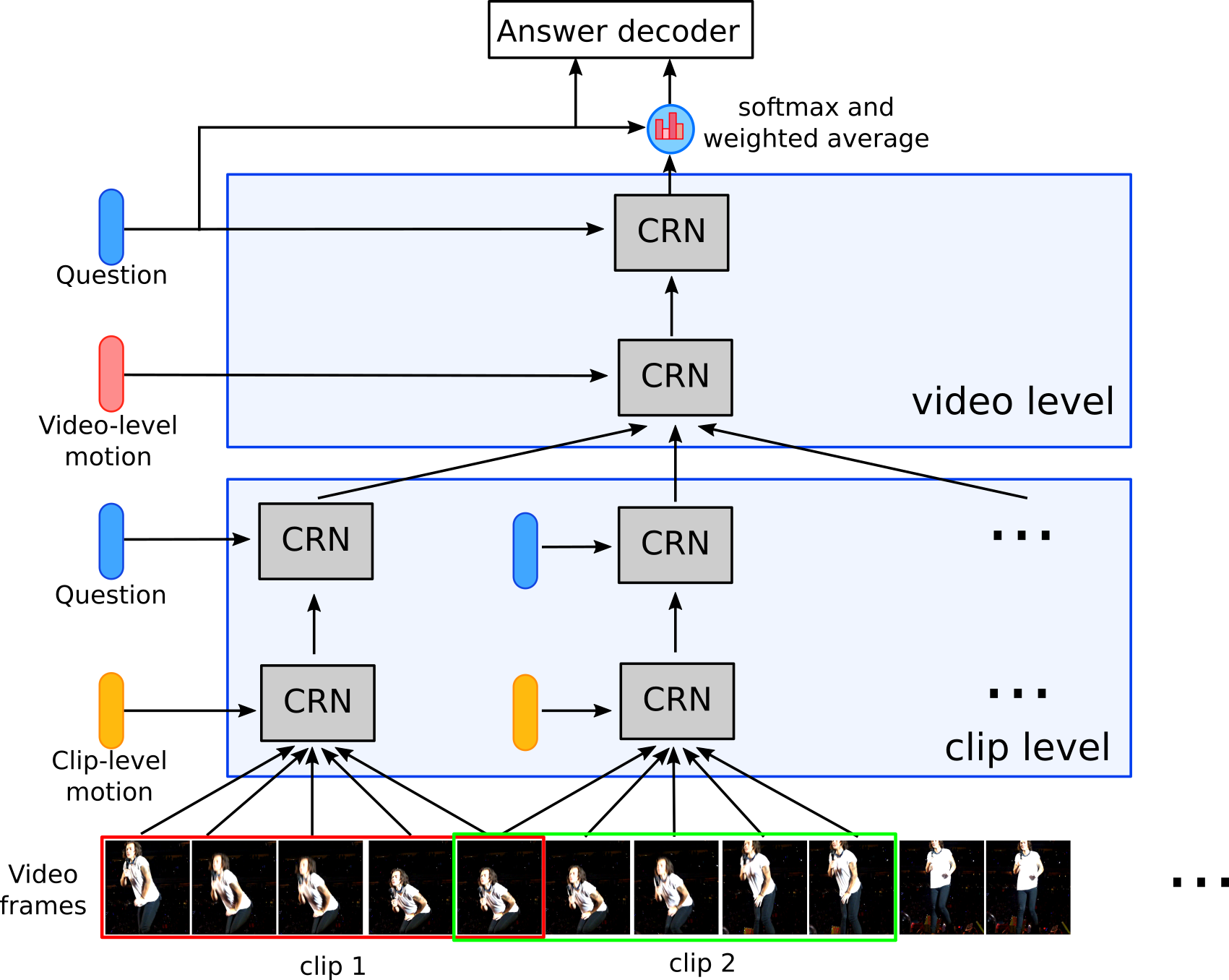

We introduce a general-purpose, reusable neural unit called the Conditional Relation Network (CRN), which encapsulates and transforms an array of tensorial objects into a new array of the same kind, conditioned on a contextual feature. We then examine the flexibility of CRN units on Video Question Answering (VideoQA), a challenging problem that requires joint comprehension of video content and natural language.
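To make the input/output contract described above concrete, here is a toy, pure-Python sketch. It is not the authors' implementation: the function name `toy_crn`, the subset enumeration, the averaging step, and the `tanh` conditioning are all stand-ins chosen for illustration, and a real unit would use learned sub-networks. See the paper for the actual definition.

```python
from itertools import combinations
import math


def toy_crn(objects, context, k_max):
    """Map a list of equal-length vectors to a new list of vectors,
    conditioned on a context vector of the same length (illustrative only)."""
    if not 2 <= k_max <= len(objects):
        raise ValueError("k_max must be between 2 and the number of objects")
    dim = len(context)
    outputs = []
    for k in range(2, k_max + 1):
        fused = []
        for subset in combinations(objects, k):
            # Stand-in for a learned relation function: average the k objects.
            pooled = [sum(vec[i] for vec in subset) / k for i in range(dim)]
            # Stand-in for learned conditioning: add the context, then squash.
            fused.append([math.tanh(p + c) for p, c in zip(pooled, context)])
        # Aggregate all size-k subsets into a single output object.
        outputs.append([sum(f[i] for f in fused) / len(fused) for i in range(dim)])
    return outputs


video_clips = [[0.0, 0.0], [2.0, 2.0], [4.0, 4.0]]
question = [0.0, 0.0]
print(len(toy_crn(video_clips, question, k_max=3)))
```

The output is again a list of vectors with the same dimensionality as the inputs, which is what would allow such units to be stacked.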

Illustrations of the CRN unit and the resulting HCRN model built for VideoQA:

Check out our paper for details.

- Clone the repository:

  `git clone https://github.com/thaolmk54/hcrn-videoqa.git`

- Download the TGIF-QA, MSRVTT-QA, and MSVD-QA datasets and edit the corresponding paths in the repo to match where you store the data.

- Install dependencies:

  `conda create -n hcrn_videoqa python=3.6`

  `conda activate hcrn_videoqa`

  `conda install -c conda-forge ffmpeg`

  `pip install -r requirements.txt`

Depending on the task, choose `question_type` from one of 4 options: action, transition, count, frameqa.

- To extract appearance features:

  `python preprocess/preprocess_features.py --gpu_id 2 --dataset tgif-qa --model resnet101 --question_type {question_type}`

- To extract motion features:

  Download the ResNeXt-101 pretrained model (resnext-101-kinetics.pth) and place it in `data/preprocess/pretrained/`.

  `python preprocess/preprocess_features.py --dataset tgif-qa --model resnext101 --image_height 112 --image_width 112 --question_type {question_type}`

Note: Extracting visual features takes a long time. You can download our pre-extracted features (action task) from [here](not available) for appearance and here for motion.
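If you need features for several question types, the two extraction commands above can be generated in a loop. The following is a small sketch that only builds and prints the command lines; the helper name `feature_commands` is hypothetical, and the flags are copied from the commands above.

```python
QUESTION_TYPES = ("action", "transition", "count", "frameqa")


def feature_commands(question_type, gpu_id=2):
    """Return the appearance and motion extraction commands for one TGIF-QA task."""
    if question_type not in QUESTION_TYPES:
        raise ValueError(f"unknown question_type: {question_type!r}")
    script = "preprocess/preprocess_features.py"
    appearance = [
        "python", script, "--gpu_id", str(gpu_id), "--dataset", "tgif-qa",
        "--model", "resnet101", "--question_type", question_type,
    ]
    motion = [
        "python", script, "--dataset", "tgif-qa", "--model", "resnext101",
        "--image_height", "112", "--image_width", "112",
        "--question_type", question_type,
    ]
    return [appearance, motion]


for question_type in QUESTION_TYPES:
    for command in feature_commands(question_type):
        # To execute instead of print: subprocess.run(command, check=True)
        print(" ".join(command))
```

Printing first makes it easy to check the commands before committing to a long extraction run.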

- Download the GloVe pretrained 300d word vectors to `data/glove/` and process them into a pickle file:

  `python txt2pickle.py`

- Preprocess train/val/test questions:

  `python preprocess/preprocess_questions.py --dataset tgif-qa --question_type {question_type} --glove_pt data/glove/glove.840.300d.pkl --mode train`

  `python preprocess/preprocess_questions.py --dataset tgif-qa --question_type {question_type} --mode test`
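For readers curious what the GloVe-to-pickle step above involves, here is a minimal sketch of one way to do it. It is not the repository's `txt2pickle.py`: the function names are hypothetical, and storing a plain `dict` of word to list of floats is an assumption about the format, so use the provided script for real runs.

```python
import pickle


def parse_glove(lines, dim=300):
    """Parse GloVe text lines ("token v1 ... v_dim") into {token: [floats]}."""
    vectors = {}
    for line in lines:
        parts = line.rstrip().split(" ")
        if len(parts) <= dim:
            continue  # skip blank or malformed lines
        # Take the last `dim` fields as the vector, so tokens that
        # themselves contain spaces are still parsed correctly.
        token = " ".join(parts[:-dim])
        vectors[token] = [float(value) for value in parts[-dim:]]
    return vectors


def glove_txt_to_pickle(txt_path, pkl_path, dim=300):
    """Read a GloVe text file and write the parsed vectors as a pickle."""
    with open(txt_path, encoding="utf-8") as source:
        vectors = parse_glove(source, dim)
    with open(pkl_path, "wb") as target:
        pickle.dump(vectors, target)
    return len(vectors)
```

Loading a pickle is typically much faster than re-parsing the text file every time questions are preprocessed, which is presumably why the conversion is a separate step.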

Choose a suitable config file in `configs/{task}.yml` for one of the 4 tasks (action, transition, count, frameqa) to train the model. For example, to train on the action task, run the following command:

`python train.py --cfg configs/tgif_qa_action.yml`

To evaluate the trained model, run the following:

`python validate.py --cfg configs/tgif_qa_action.yml`

Note: A pretrained model for the action task is available here. Save the file in `results/expTGIF-QAAction/ckpt/` for evaluation.
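The train and evaluate commands above can be derived from the task name. The sketch below assumes the other three config files follow the same naming pattern as `configs/tgif_qa_action.yml`; that pattern is extrapolated from the single example shown here, so check the `configs/` directory before relying on it. The helper names are hypothetical.

```python
TASKS = ("action", "transition", "count", "frameqa")


def tgif_config(task):
    """Return the assumed config path for a TGIF-QA task."""
    if task not in TASKS:
        raise ValueError(f"unknown task: {task!r}")
    # Assumption: every task uses the configs/tgif_qa_<task>.yml pattern.
    return f"configs/tgif_qa_{task}.yml"


def train_and_validate_commands(task):
    """Return the training command followed by the evaluation command."""
    config = tgif_config(task)
    return [
        ["python", "train.py", "--cfg", config],
        ["python", "validate.py", "--cfg", config],
    ]


for command in train_and_validate_commands("action"):
    print(" ".join(command))
```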

The following steps run experiments with the MSRVTT-QA dataset; replace msrvtt-qa with msvd-qa to run with the MSVD-QA dataset.

- To extract appearance features:

  `python preprocess/preprocess_features.py --gpu_id 2 --dataset msrvtt-qa --model resnet101`

- To extract motion features:

  `python preprocess/preprocess_features.py --dataset msrvtt-qa --model resnext101 --image_height 112 --image_width 112`

Preprocess train/val/test questions:

`python preprocess/preprocess_questions.py --dataset msrvtt-qa --glove_pt data/glove/glove.840.300d.pkl --mode train`

`python preprocess/preprocess_questions.py --dataset msrvtt-qa --question_type {question_type} --mode val`

`python preprocess/preprocess_questions.py --dataset msrvtt-qa --question_type {question_type} --mode test`

`python train.py --cfg configs/msrvtt_qa.yml`

To evaluate the trained model, run the following:

`python validate.py --cfg configs/msrvtt_qa.yml`
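Switching the steps above to MSVD-QA is a plain text substitution, which can be sketched as follows. The config name `configs/msvd_qa.yml` is an assumption that mirrors `configs/msrvtt_qa.yml`, and `for_dataset` is a hypothetical helper. Only a subset of the commands is listed to keep the example short.

```python
MSRVTT_STEPS = [
    "python preprocess/preprocess_features.py --gpu_id 2 --dataset msrvtt-qa --model resnet101",
    "python preprocess/preprocess_features.py --dataset msrvtt-qa --model resnext101 --image_height 112 --image_width 112",
    "python preprocess/preprocess_questions.py --dataset msrvtt-qa --glove_pt data/glove/glove.840.300d.pkl --mode train",
    "python train.py --cfg configs/msrvtt_qa.yml",
    "python validate.py --cfg configs/msrvtt_qa.yml",
]


def for_dataset(steps, dataset):
    """Rewrite the MSRVTT-QA command lines for the given dataset."""
    if dataset not in ("msrvtt-qa", "msvd-qa"):
        raise ValueError(f"unsupported dataset: {dataset!r}")
    # Assumption: the config file is named after the dataset, with "_" for "-".
    config_stem = dataset.replace("-", "_")
    return [
        step.replace("msrvtt-qa", dataset).replace("msrvtt_qa", config_stem)
        for step in steps
    ]


for step in for_dataset(MSRVTT_STEPS, "msvd-qa"):
    print(step)
```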