STG4Traffic: A Benchmark Study of Using Spatial-Temporal Graph Neural Networks for Traffic Prediction

Motivation: Traffic prediction lacks a standard, unified benchmark. Experimental settings are not standardized, environment configurations are complex, and existing benchmark projects scale poorly. Furthermore, the results reported in the original papers often differ significantly from those obtained with existing benchmarks, which makes it difficult to compare models against fair baselines.

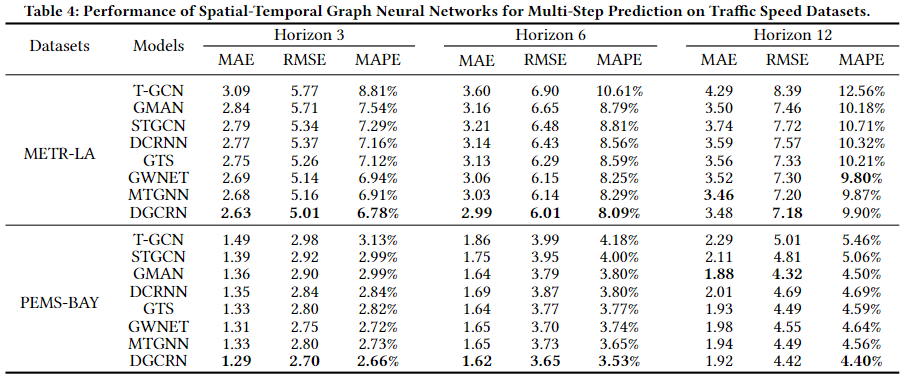

Traffic data are heterogeneous, so model performance can vary significantly across datasets. We therefore selected 15 to 20 high-impact and representative models for traffic speed (METR-LA, PEMS-BAY) and traffic flow (PEMSD4, PEMSD8) prediction, and built a benchmark project that evaluates them uniformly. The selected methods are shown below: blue squares mark methods applied to traffic flow, and orange squares mark methods applied to traffic speed.

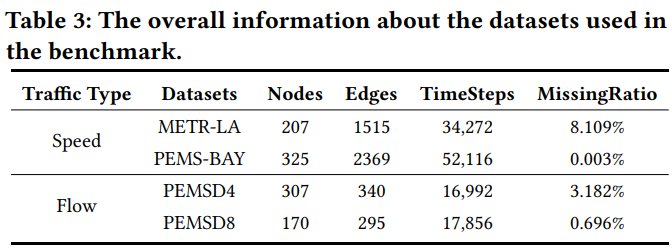

Datasets: We validate the methods in the benchmark on the following datasets.

- METR-LA: Los Angeles Metropolitan Traffic Conditions Data, which records traffic speed data collected at 5-minute intervals by 207 freeway loop detectors from March 2012 to June 2012.

- PEMS-BAY: Traffic speed data from a network of 325 sensors in the Bay Area, collected by the California Department of Transportation (Caltrans) Performance Measurement System (PeMS) at 5-minute intervals from January 2017 to May 2017.

- PEMSD4/8: Traffic flow datasets collected in real time every 30 seconds by the Caltrans Performance Measurement System (PeMS) (Chen et al., 2001). The raw data are aggregated into 5-minute intervals.

[METR-LA & PEMS-BAY]:https://github.com/liyaguang/DCRNN

[PEMSD4 & PEMSD8]:https://github.com/Davidham3/ASTGCN

Requirements: The code is built on Python 3.8.5 and PyTorch 1.8.0. You can install the other dependencies via:

    pip install -r requirements.txt

To ensure consistency with previous research, we split the speed data into training, validation, and test sets at a ratio of 7:1:2, and the flow data at a ratio of 6:2:2. Training stops early if the validation error fails to improve for 15-20 epochs, and otherwise ends after 100 epochs; the model that performs best on the validation data is saved. For model-specific settings, including the optimizer, learning rate, loss function, and model parameters, we remained faithful to the original papers while also tuning repeatedly and keeping the best experimental results. We evaluate model performance with masked root mean square error (RMSE), mean absolute error (MAE), and mean absolute percentage error (MAPE), where zero values are ignored.
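The split ratios and masked metrics described above can be sketched as follows. This is a minimal, dependency-free illustration and not the repository's own code: the function names `chronological_split` and `masked_metrics` are hypothetical, the split is assumed to be chronological (no shuffling), and plain Python lists stand in for the tensors a PyTorch implementation would use.

```python
import math

def chronological_split(samples, ratios):
    """Split a time-ordered sequence into train/val/test parts by ratio.

    Assumption: the data are split in time order without shuffling.
    """
    total = sum(ratios)
    n = len(samples)
    n_train = n * ratios[0] // total
    n_val = n * ratios[1] // total
    train = samples[:n_train]
    val = samples[n_train:n_train + n_val]
    test = samples[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test

def masked_metrics(y_true, y_pred, null_val=0.0):
    """Return (MAE, RMSE, MAPE in %) over entries whose ground truth != null_val."""
    pairs = [(t, p) for t, p in zip(y_true, y_pred) if t != null_val]
    if not pairs:
        nan = float("nan")
        return nan, nan, nan
    n = len(pairs)
    mae = sum(abs(p - t) for t, p in pairs) / n
    rmse = math.sqrt(sum((p - t) ** 2 for t, p in pairs) / n)
    mape = 100.0 * sum(abs((p - t) / t) for t, p in pairs) / n
    return mae, rmse, mape

# 7:1:2 for speed data, 6:2:2 for flow data (ratios from the text above).
speed_split = chronological_split(list(range(100)), (7, 1, 2))
flow_split = chronological_split(list(range(100)), (6, 2, 2))

# The second ground-truth value is zero, so that entry is masked out.
mae, rmse, mape = masked_metrics([60.0, 0.0, 50.0], [57.0, 12.0, 54.0])
```

Masking matters most for MAPE, which is undefined when the ground truth is zero; missing sensor readings are commonly stored as zeros in these datasets, which is why they are excluded rather than scored.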

    cd STG4Traffic/TrafficFlow

This directory level lets you become familiar with the project's public data interface. The data interface, model design, and model training and evaluation modules are decoupled, which gives the benchmarking framework a clear structural separation.

| Directory | Explanation |
|---|---|
| data | Data files (historical traffic volume records and node connection information) |
| lib | Utility classes (data loaders, evaluation metrics, graph construction methods, etc.) |
| model | Model design files |
| log | Directory where project logs and models are stored |
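To illustrate what this separation can look like in practice, here is a minimal sketch of a training loop that receives the training and validation steps as independent callables and applies the stopping rule from the experimental settings (stop once the validation error has stopped improving, with a cap of 100 epochs). The function `train_with_early_stopping` and its parameters are hypothetical and are not taken from the repository; a patience of 15 is one value from the 15-20 range mentioned above.

```python
def train_with_early_stopping(train_one_epoch, validate, max_epochs=100, patience=15):
    """Train until the validation error stops improving.

    train_one_epoch and validate are hypothetical callables supplied by the
    caller; validate() must return the current validation error.
    Returns (best_epoch, best_val_error).
    """
    best_error = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch in range(1, max_epochs + 1):
        train_one_epoch()
        val_error = validate()
        if val_error < best_error:
            best_error = val_error
            best_epoch = epoch
            epochs_without_improvement = 0
            # A real trainer would save the model checkpoint here.
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break
    return best_epoch, best_error

# Toy usage: the validation error improves for 5 epochs, then plateaus.
errors = iter([5.0, 4.0, 3.5, 3.2, 3.1] + [3.3] * 95)
epochs_run = []
best_epoch, best_error = train_with_early_stopping(
    train_one_epoch=lambda: epochs_run.append(1),
    validate=lambda: next(errors),
    max_epochs=100,
    patience=15,
)
```

Because the loop only depends on two callables, the same trainer logic can be reused with any model or data loader, which is the point of keeping those modules separate.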

How can we extend the use of this benchmark?

Self-Defined Model Design: Create a ModelName directory under model and write the modelname.py file.

Model Setup, Run and Test: Under the path GNN4Traffic/TrafficFlow/ModelName, create the following files:

[1] ModelName_Config.py: Retrieves the model's parameter configuration entries;

[2] ModelName_Utils.py: Additional utility classes for model setup [optional];

[3] ModelName_Trainer.py: Model trainer, which handles training, validation, and testing of the model;

[4] ModelName_Main.py: Project entry point, which initializes the model parameters, optimizer, loss function, learning rate decay strategy, and other settings;

[5] Dataset_ModelName.conf: Per-dataset parameter settings for the model [can be multiple].
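As a rough illustration of how a dataset-specific .conf file and a config module could fit together, the sketch below parses an INI-style configuration with Python's standard `configparser`. Everything in it is an assumption made for illustration: the section names, the keys, the values (apart from the 207 sensors, the 7:1:2 split, and the 100-epoch cap quoted earlier), and the helper `load_config` are hypothetical and may not match the files in the repository.

```python
import configparser

# Hypothetical contents of a dataset-specific file such as METR-LA_ModelName.conf.
EXAMPLE_CONF = """
[data]
dataset = METR-LA
num_nodes = 207
val_ratio = 0.1
test_ratio = 0.2

[train]
epochs = 100
early_stop_patience = 15
lr_init = 0.001
"""

def load_config(text):
    """Parse INI-style text into a flat dict with typed values."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return {
        "dataset": parser.get("data", "dataset"),
        "num_nodes": parser.getint("data", "num_nodes"),
        "val_ratio": parser.getfloat("data", "val_ratio"),
        "test_ratio": parser.getfloat("data", "test_ratio"),
        "epochs": parser.getint("train", "epochs"),
        "early_stop_patience": parser.getint("train", "early_stop_patience"),
        "lr_init": parser.getfloat("train", "lr_init"),
    }

config = load_config(EXAMPLE_CONF)
```

When loading from disk, `parser.read(path)` would replace `parser.read_string(text)`. Keeping one file per dataset lets the same model code run on different datasets by switching only the configuration file.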

Talk is cheap. Show me the code. You can get a feel for how the benchmark runs by experimenting with a simple model, such as DCRNN (DCRNN → ModelName), which will help you understand the directory layout and file list described above.

If you find this repository useful for your work, please consider citing it as follows:

STG4Traffic: A Survey and Benchmark of Spatial-Temporal Graph Neural Networks for Traffic Prediction

We hope this research can make positive and beneficial contributions to the field of spatial-temporal prediction.