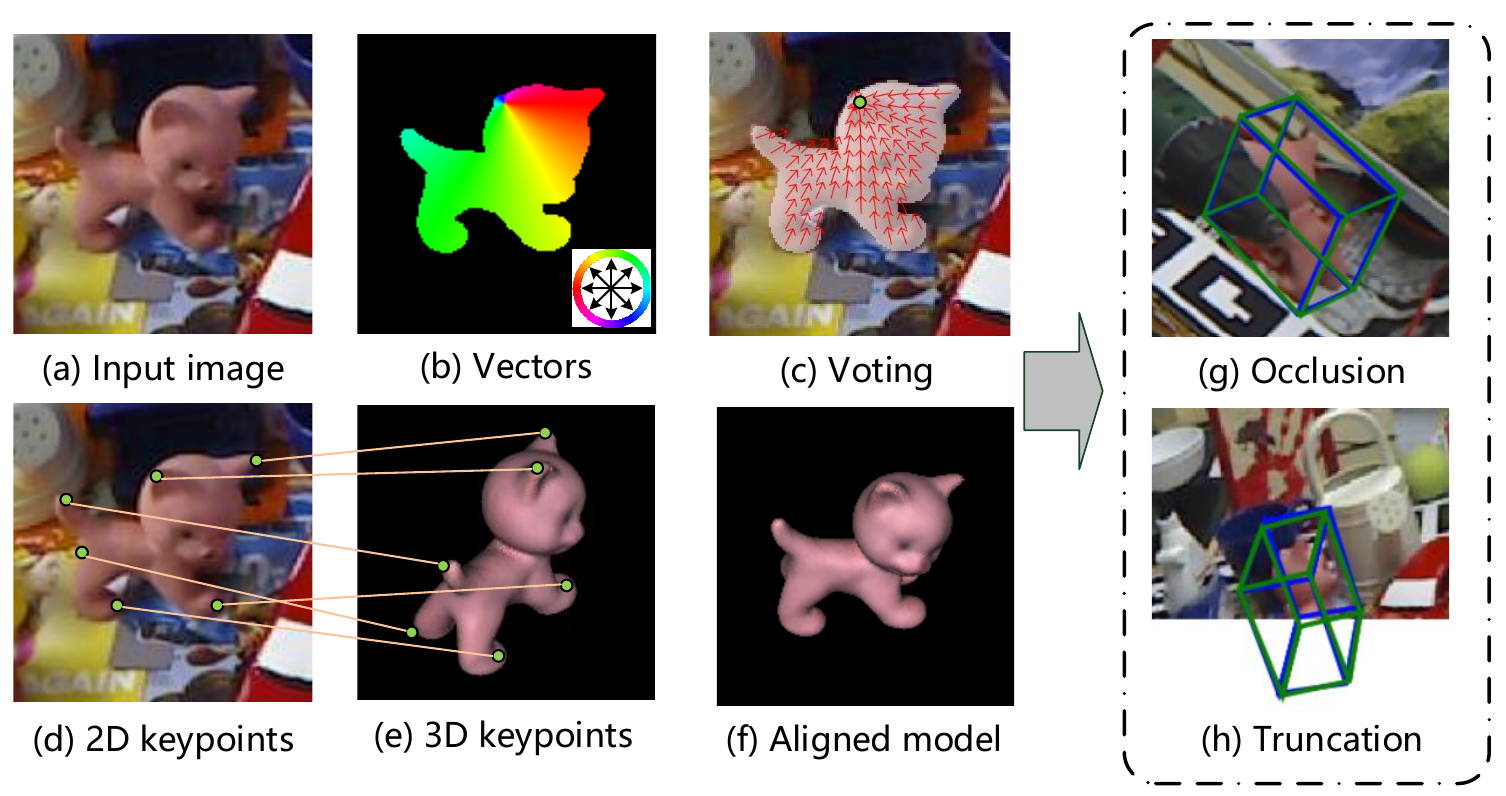

PVNet: Pixel-wise Voting Network for 6DoF Pose Estimation

Sida Peng, Yuan Liu, Qixing Huang, Xiaowei Zhou, Hujun Bao

CVPR 2019 oral

Project Page

Any questions or discussions are welcome!

Thanks to Haotong Lin for providing the clean version of PVNet and reproducing the results.

The structure of this project is described in project_structure.md.

- Set up the Python environment:

conda create -n pvnet python=3.7

conda activate pvnet

Install torch 1.1 built from CUDA 9.0:

pip install torch==1.1.0 -f https://download.pytorch.org/whl/cu90/stable

pip install Cython==0.28.2

pip install -r requirements.txt

- Compile the CUDA extensions under lib/csrc:

ROOT=/path/to/clean-pvnet

cd $ROOT/lib/csrc

export CUDA_HOME="/usr/local/cuda-9.0"

cd dcn_v2

python setup.py build_ext --inplace

cd ../ransac_voting

python setup.py build_ext --inplace

cd ../fps

python setup.py build_ext --inplace

- Set up datasets:

ROOT=/path/to/clean-pvnet

cd $ROOT/data

ln -s /path/to/linemod linemod

ln -s /path/to/linemod_orig linemod_orig

ln -s /path/to/occlusion_linemod occlusion_linemod
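The dataset symlinks created above can be sanity-checked before running anything. This is a minimal sketch, not part of this project: the helper name `missing_datasets` and the script usage are hypothetical, and the expected entry names are taken from the `ln -s` commands.

```python
import sys
from pathlib import Path

# Entry names created by the ln -s commands above.
EXPECTED_DATASETS = ("linemod", "linemod_orig", "occlusion_linemod")


def missing_datasets(data_dir):
    """Return the expected entries under data_dir that are absent.

    Path.exists() follows symlinks, so a dangling link (one whose target
    was moved or mistyped) is reported as missing as well.
    """
    data_dir = Path(data_dir)
    return [name for name in EXPECTED_DATASETS if not (data_dir / name).exists()]


if __name__ == "__main__":
    # Hypothetical usage: python check_data.py /path/to/clean-pvnet
    root = Path(sys.argv[1]) if len(sys.argv) > 1 else Path(".")
    missing = missing_datasets(root / "data")
    print("missing:", missing if missing else "none")
```

Checking through the link rather than with `is_symlink()` also catches links whose targets were never downloaded.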

Download the datasets formatted for this project:

- linemod

- linemod_orig: The dataset includes the depth for each image.

- occlusion linemod

- truncation linemod: Check TRUNCATION_LINEMOD.md for the information about the Truncation LINEMOD dataset.

We provide pretrained models of the objects on Linemod, which can be found here.

The following testing steps use cat as an example.

- Prepare the data related to cat:

python run.py --type linemod cls_type cat

- Download the pretrained model of cat and save it as $ROOT/data/model/pvnet/cat/199.pth.

- Test:

python run.py --type evaluate --cfg_file configs/linemod.yaml model cat

python run.py --type evaluate --cfg_file configs/linemod.yaml test.dataset LinemodOccTest model cat

- Test with icp:

python run.py --type evaluate --cfg_file configs/linemod.yaml model cat test.icp True

python run.py --type evaluate --cfg_file configs/linemod.yaml test.dataset LinemodOccTest model cat test.icp True
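The trailing arguments in these commands (model cat, test.dataset LinemodOccTest, test.icp True) read as KEY VALUE pairs layered on top of configs/linemod.yaml, so each key is expected to be followed by its value. The sketch below illustrates that convention in plain Python. It is an assumption about the behavior, not the project's config code (which may rely on a library such as yacs), and the values in `DEFAULTS` and the helper `merge_overrides` are hypothetical.

```python
import ast
import copy

# Hypothetical defaults standing in for configs/linemod.yaml.
DEFAULTS = {
    "model": "",
    "cls_type": "cat",
    "test": {"dataset": "LinemodTest", "icp": False},
    "train": {"dataset": "LinemodTrain", "batch_size": 32},
}


def merge_overrides(cfg, opts):
    """Return a copy of cfg with dotted KEY VALUE overrides from opts applied."""
    if len(opts) % 2 != 0:
        raise ValueError(f"overrides must come in KEY VALUE pairs: {opts}")
    merged = copy.deepcopy(cfg)  # leave the defaults untouched
    for key, raw in zip(opts[0::2], opts[1::2]):
        *parents, leaf = key.split(".")
        node = merged
        for part in parents:
            node = node[part]  # unknown sections raise KeyError
        if leaf not in node:
            raise KeyError(f"unknown config key: {key}")
        try:
            value = ast.literal_eval(raw)  # "True" -> True, "4" -> 4
        except (ValueError, SyntaxError):
            value = raw  # bare words such as "cat" stay strings
        node[leaf] = value
    return merged


opts = "test.dataset LinemodOccTest model cat test.icp True".split()
cfg = merge_overrides(DEFAULTS, opts)
print(cfg["model"], cfg["test"])
```

In this sketch, a bare trailing key such as test.icp with no value leaves an odd number of tokens and is rejected rather than silently ignored.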

The visualization steps also use cat as an example.

- Prepare the data related to cat:

python run.py --type linemod cls_type cat

- Download the pretrained model of cat and save it as $ROOT/data/model/pvnet/cat/199.pth.

- Visualize:

python run.py --type visualize --cfg_file configs/linemod.yaml model cat

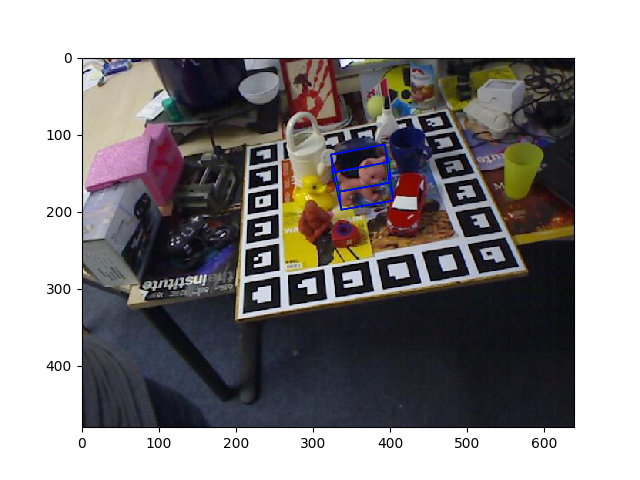

If setup correctly, the output will look like

Training on Linemod also uses cat as an example:

- Prepare the data related to cat:

python run.py --type linemod cls_type cat

- Train:

python train_net.py --cfg_file configs/linemod.yaml model mycat cls_type cat

The training parameters can be found in project_structure.md.

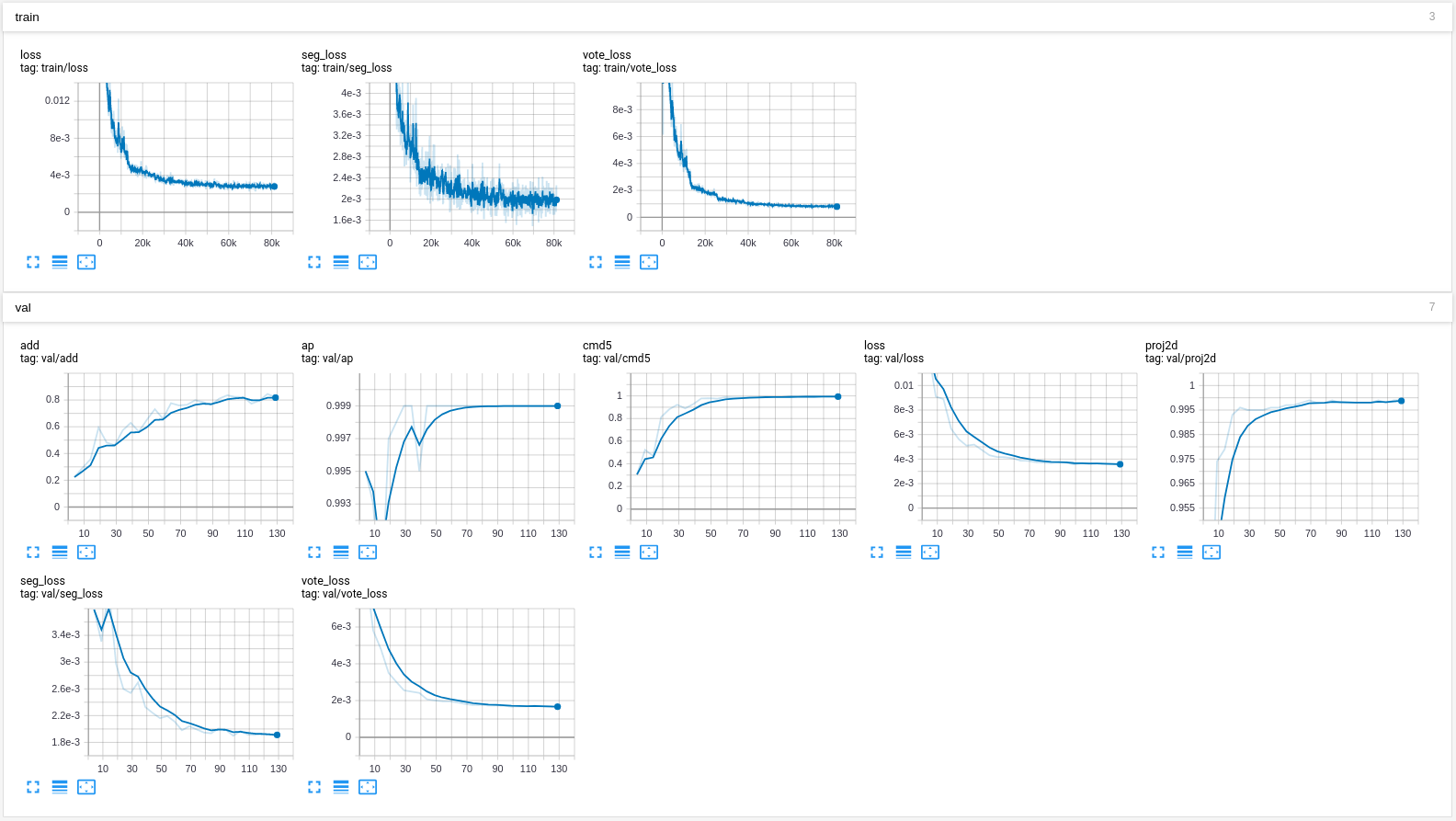

tensorboard --logdir data/record/pvnet

If setup correctly, the output will look like

To train and test on a custom dataset:

- Create a dataset using https://github.com/F2Wang/ObjectDatasetTools.

- Organize the dataset in the following structure:

  - /path/to/dataset/
    - model.ply
    - camera.txt
    - rgb/: 0.jpg, ..., 1234.jpg, ...
    - mask/: 0.png, ..., 1234.png, ...
    - pose/: 0.npy, ..., 1234.npy, ...

- Create a soft link pointing to the dataset:

ln -s /path/to/custom_dataset data/custom

- Process the dataset:

python run.py --type custom

- Train:

python train_net.py --cfg_file configs/linemod.yaml train.dataset CustomTrain test.dataset CustomTrain model mycat train.batch_size 4

- Watch the training curve:

tensorboard --logdir data/record/pvnet

- Visualize:

python run.py --type visualize --cfg_file configs/linemod.yaml train.dataset CustomTrain test.dataset CustomTrain model mycat

- Test:

python run.py --type evaluate --cfg_file configs/linemod.yaml train.dataset CustomTrain test.dataset CustomTrain model mycat
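Before processing a custom dataset, it can help to confirm that the folders match the structure above. This is a minimal sketch with a hypothetical helper `check_custom_dataset`; it assumes, as the tree suggests, that frames are matched by their file stem (rgb/0.jpg, mask/0.png, pose/0.npy). It only checks that files exist, not their contents.

```python
from pathlib import Path


def _frame_key(stem):
    # Sort numeric ids numerically ("2" before "10"), anything else afterwards.
    return (0, int(stem), "") if stem.isdigit() else (1, 0, stem)


def check_custom_dataset(root):
    """Return a list of human-readable problems found in a custom dataset folder."""
    root = Path(root)
    problems = []
    for name in ("model.ply", "camera.txt"):
        if not (root / name).is_file():
            problems.append(f"missing {name}")

    def stems(folder, suffix):
        # Collect frame ids such as "0" or "1234" from files like rgb/0.jpg.
        directory = root / folder
        if not directory.is_dir():
            problems.append(f"missing {folder}/")
            return set()
        return {p.stem for p in directory.glob(f"*{suffix}")}

    rgb = stems("rgb", ".jpg")
    for folder, suffix in (("mask", ".png"), ("pose", ".npy")):
        present = stems(folder, suffix)
        for frame in sorted(rgb - present, key=_frame_key):
            problems.append(f"{folder}/{frame}{suffix} missing for rgb/{frame}.jpg")
    return problems
```

An empty list means every RGB frame has a matching mask and pose file.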

An example dataset can be downloaded here.
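One way to sanity-check the poses of the example dataset or your own is to project the model points into an image and see whether they land on the object mask. This sketch assumes, without confirmation here, that camera.txt stores the 3x3 intrinsic matrix K in a form `np.loadtxt` can read and that each pose/N.npy stores a 3x4 object-to-camera matrix [R|t]. Each model point X then maps to pixel coordinates via x ~ K(RX + t). The function `project_points` is hypothetical, and the project's own visualization code may do this differently.

```python
import numpy as np


def project_points(points, K, pose):
    """Project Nx3 object-frame points to Nx2 pixel coordinates.

    points: (N, 3) model points, e.g. vertices read from model.ply.
    K:      (3, 3) camera intrinsics (assumed content of camera.txt).
    pose:   (3, 4) [R|t] object-to-camera transform (assumed pose/N.npy format).
    """
    points = np.asarray(points, dtype=np.float64)
    R, t = pose[:, :3], pose[:, 3]
    cam = points @ R.T + t            # object frame -> camera frame
    uvw = cam @ K.T                   # camera frame -> homogeneous pixels
    return uvw[:, :2] / uvw[:, 2:3]   # perspective divide by depth


if __name__ == "__main__":
    # Hypothetical usage on one frame of a custom dataset:
    #   K = np.loadtxt("/path/to/dataset/camera.txt")
    #   pose = np.load("/path/to/dataset/pose/0.npy")
    K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
    pose = np.hstack([np.eye(3), [[0.0], [0.0], [1.0]]])  # object 1 unit in front of the camera
    print(project_points([[0.0, 0.0, 0.0], [0.1, 0.0, 0.0]], K, pose))
```

If the projected points fall outside the mask in mask/N.png, the pose file, the intrinsics, or their assumed conventions are likely off.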

If you find this code useful for your research, please use the following BibTeX entry.

@inproceedings{peng2019pvnet,

title={PVNet: Pixel-wise Voting Network for 6DoF Pose Estimation},

author={Peng, Sida and Liu, Yuan and Huang, Qixing and Zhou, Xiaowei and Bao, Hujun},

booktitle={CVPR},

year={2019}

}