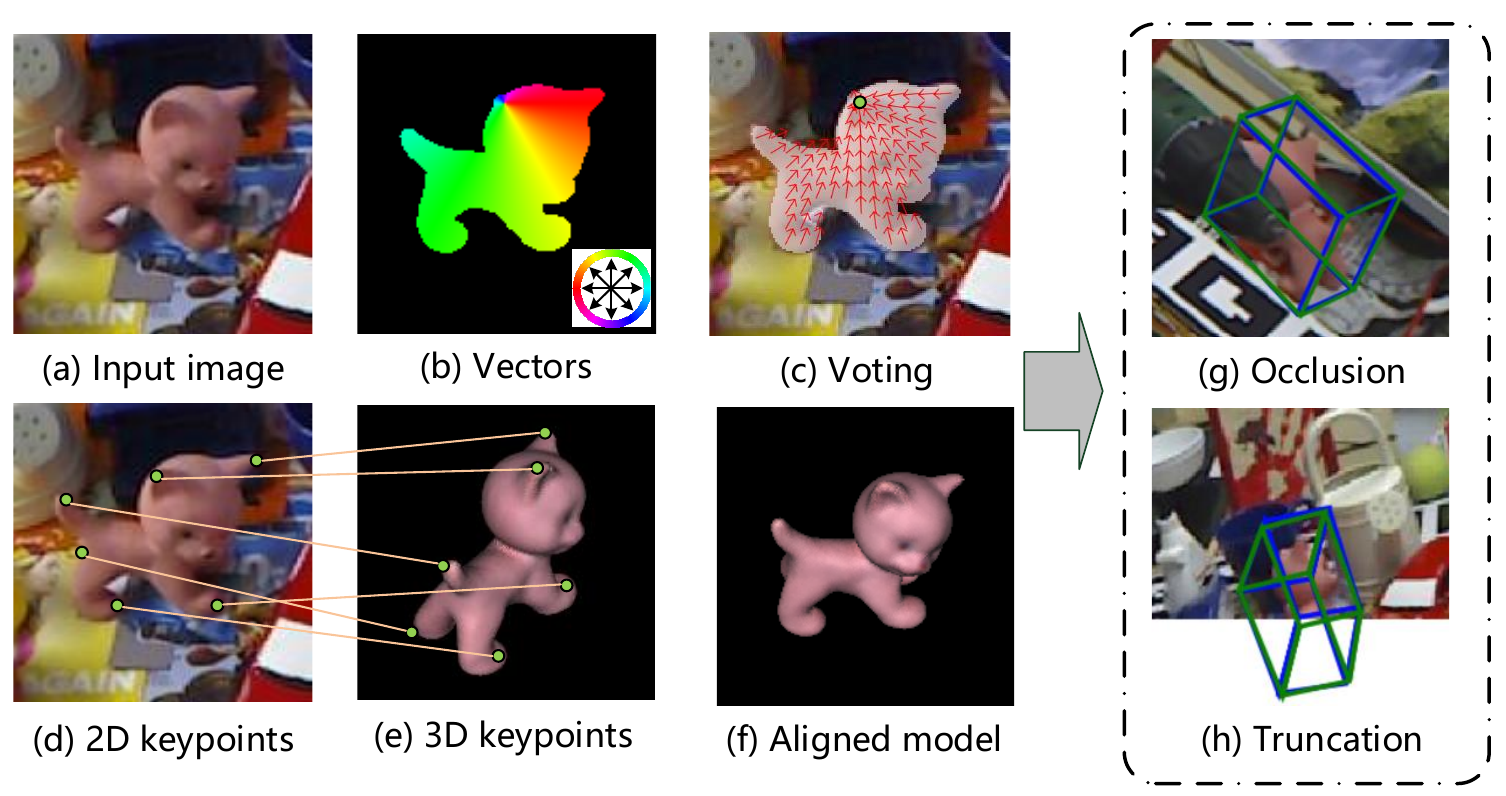

PVNet: Pixel-wise Voting Network for 6DoF Pose Estimation

Sida Peng, Yuan Liu, Qixing Huang, Xiaowei Zhou, Hujun Bao

CVPR 2019 oral

Project Page

This is a custom implementation of PVNet for the master's thesis project of Arturo Guridi at the Technical University of Munich, chair of biomedical computing (CAMPAIR).

This work adapts PVNet to take a custom input consisting of both RGB and polarized images. For that purpose, images and annotations were first generated using the Mitsuba Renderer with Blenderproc (please see MitsubaRenderer).

The core functionality of PVNet stays the same, and some libraries still need to be compiled before it can be used.

Thanks to Haotong Lin for providing a clean version of PVNet to use.

The structure of this project is described in project_structure.md.

One way is to set up the environment with docker. See this.

Note that, in contrast to the original PVNet, this work was tested with more recent versions of the packages and worked fine with CUDA 11.4 and PyTorch 1.4.

For the C++ CUDA packages, CUDA 11.4 and g++ 9.0 were used and worked fine. No additional changes to those files are needed.
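Before compiling, it can help to confirm which toolchain versions are actually installed. The following is a minimal sketch and not part of this repository: the helper names `parse_nvcc_release`, `parse_gxx_version`, `tool_output`, and `report_toolchain` are hypothetical, and the parsing assumes the usual `release X.Y` text printed by `nvcc --version` and a trailing version number on the first line of `g++ --version`.

```python
import re
import shutil
import subprocess


def parse_nvcc_release(text):
    """Return (major, minor) from `nvcc --version` output, or None."""
    match = re.search(r"release\s+(\d+)\.(\d+)", text)
    return (int(match.group(1)), int(match.group(2))) if match else None


def parse_gxx_version(text):
    """Return the version tuple found at the end of the first line, or None."""
    lines = text.splitlines()
    if not lines:
        return None
    match = re.search(r"(\d+(?:\.\d+)+)\s*$", lines[0])
    return tuple(int(p) for p in match.group(1).split(".")) if match else None


def tool_output(command):
    """Run a tool and return its stdout, or an empty string if it is missing."""
    if shutil.which(command[0]) is None:
        return ""
    result = subprocess.run(command, capture_output=True, text=True, check=False)
    return result.stdout


def report_toolchain():
    """Collect the detected CUDA compiler and g++ versions."""
    return {
        "nvcc": parse_nvcc_release(tool_output(["nvcc", "--version"])),
        "g++": parse_gxx_version(tool_output(["g++", "--version"])),
    }


if __name__ == "__main__":
    for tool, version in report_toolchain().items():
        print(tool, version if version is not None else "not found")
```

The parsing is kept separate from the subprocess calls so that it can be checked against saved output on a machine without CUDA installed.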

- Set up the Python environment:

      conda create -n pvnet python=3.7
      conda activate pvnet

      # install torch 1.4 built from cuda 11

      sudo apt-get install libglfw3-dev libglfw3
      pip install -r requirements.txt

- Compile the CUDA extensions under `lib/csrc`:

      ROOT=/path/to/clean-pvnet
      cd $ROOT/lib/csrc
      export CUDA_HOME="/usr/local/cuda"
      cd ransac_voting
      python setup.py build_ext --inplace
      cd ../nn
      python setup.py build_ext --inplace
      cd ../fps
      python setup.py build_ext --inplace

      # If you want to run PVNet with a detector
      cd ../dcn_v2
      python setup.py build_ext --inplace

      # If you want to use the uncertainty-driven PnP
      cd ../uncertainty_pnp
      sudo apt-get install libgoogle-glog-dev
      sudo apt-get install libsuitesparse-dev
      sudo apt-get install libatlas-base-dev
      python setup.py build_ext --inplace

- Set up datasets:

The Linemod datasets were not used, since this implementation was tested with the custom dataset rendered with Mitsuba. Note that it won't work with the standard datasets, because it was programmed to also take polarized images as input.
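The extension build steps listed above can also be scripted. This is a rough sketch rather than a tool shipped with the project: `build_commands` and `build_all` are hypothetical names, the directory list mirrors the compile step, and the optional `dcn_v2` and `uncertainty_pnp` builds are off by default. The `apt-get` prerequisites for `uncertainty_pnp` still have to be installed separately.

```python
import os
import subprocess
import sys

# Extension directories under lib/csrc, as listed in the compile step.
REQUIRED = ["ransac_voting", "nn", "fps"]
OPTIONAL = {"detector": "dcn_v2", "un_pnp": "uncertainty_pnp"}


def build_commands(root, detector=False, un_pnp=False):
    """Return (working_directory, command) pairs, one per extension build."""
    names = list(REQUIRED)
    if detector:
        names.append(OPTIONAL["detector"])
    if un_pnp:
        names.append(OPTIONAL["un_pnp"])
    command = [sys.executable, "setup.py", "build_ext", "--inplace"]
    return [(os.path.join(root, "lib", "csrc", name), list(command)) for name in names]


def build_all(root, cuda_home="/usr/local/cuda", **options):
    """Run every build in order, stopping at the first failure."""
    env = dict(os.environ, CUDA_HOME=cuda_home)
    for workdir, command in build_commands(root, **options):
        subprocess.run(command, cwd=workdir, env=env, check=True)


if __name__ == "__main__":
    # Dry run: print what would be executed for a placeholder checkout path.
    for workdir, command in build_commands("/path/to/clean-pvnet", detector=True):
        print(workdir, " ".join(command))
```

Running the file only prints the planned commands; calling `build_all` with the real checkout path performs the builds.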

Download datasets which are formatted for this project:

We provide the pretrained models of objects on Linemod, which can be found here.

Take the testing on `cat` as an example.

- Prepare the data related to `cat`:

      python run.py --type linemod cls_type cat

- Download the pretrained model of `cat` and put it to `$ROOT/data/model/pvnet/cat/199.pth`.
- Test:

      python run.py --type evaluate --cfg_file configs/linemod.yaml model cat cls_type cat
      python run.py --type evaluate --cfg_file configs/linemod.yaml test.dataset LinemodOccTest model cat cls_type cat

- Test with icp:

      python run.py --type evaluate --cfg_file configs/linemod.yaml model cat cls_type cat test.icp True
      python run.py --type evaluate --cfg_file configs/linemod.yaml test.dataset LinemodOccTest model cat cls_type cat test.icp True

- Test with the uncertainty-driven PnP:

      export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:./lib/csrc/uncertainty_pnp/lib
      python run.py --type evaluate --cfg_file configs/linemod.yaml model cat cls_type cat test.un_pnp True
      python run.py --type evaluate --cfg_file configs/linemod.yaml test.dataset LinemodOccTest model cat cls_type cat test.un_pnp True
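The trailing `key value` pairs in these commands (for example `model cat cls_type cat test.icp True`) act as overrides on top of the YAML file given by `--cfg_file`. The sketch below illustrates that idea with the standard library only. It is an assumption about the mechanism, not the project's actual parser: `parse_overrides` and `parse_args` are hypothetical helpers, and dotted keys are assumed to address nested config entries.

```python
import argparse
import ast


def parse_overrides(pairs):
    """Turn a flat list of key/value tokens into a nested dict."""
    if len(pairs) % 2 != 0:
        raise ValueError("overrides must come in key/value pairs")
    config = {}
    for key, raw in zip(pairs[0::2], pairs[1::2]):
        try:
            value = ast.literal_eval(raw)  # e.g. 'True' becomes a bool
        except (ValueError, SyntaxError):
            value = raw  # plain strings such as 'cat' are kept as-is
        node = config
        *parents, leaf = key.split(".")
        for part in parents:
            node = node.setdefault(part, {})
        node[leaf] = value
    return config


def parse_args(argv):
    """Parse --type and --cfg_file, then treat the remaining tokens as overrides."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--type", default="")
    parser.add_argument("--cfg_file", default="")
    parser.add_argument("opts", nargs="*")
    args = parser.parse_args(argv)
    return args, parse_overrides(args.opts)


if __name__ == "__main__":
    args, overrides = parse_args(
        "--type evaluate --cfg_file configs/linemod.yaml "
        "model cat cls_type cat test.icp True".split()
    )
    print(args.type, args.cfg_file, overrides)
```

Values are converted with `ast.literal_eval` so that `True` and `4` arrive as a boolean and an integer, while names such as `cat` or `LinemodOccTest` stay strings.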

We provide the pretrained models of objects on Tless, which can be found here.

- Download the pretrained models and put them to `$ROOT/data/model/pvnet/`.
- Test:

      python run.py --type evaluate --cfg_file configs/tless/tless_01.yaml
      # or
      python run.py --type evaluate --cfg_file configs/tless/tless_01.yaml test.vsd True

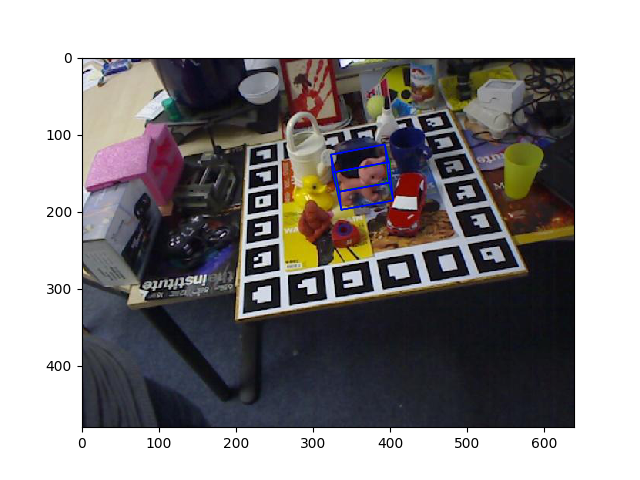

python run.py --type demo --cfg_file configs/linemod.yaml demo_path demo_images/cat

Take the `cat` as an example.

- Prepare the data related to `cat`:

      python run.py --type linemod cls_type cat

- Download the pretrained model of `cat` and put it to `$ROOT/data/model/pvnet/cat/199.pth`.
- Visualize:

      python run.py --type visualize --cfg_file configs/linemod.yaml model cat cls_type cat

If set up correctly, the output will look like

- Visualize with a detector:

  Download the pretrained models here and put them to `$ROOT/data/model/pvnet/pvnet_cat/59.pth` and `$ROOT/data/model/ct/ct_cat/9.pth`.

      python run.py --type detector_pvnet --cfg_file configs/ct_linemod.yaml
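The checkpoint paths above (`199.pth`, `59.pth`, `9.pth`) suggest files named after a training epoch. Under that assumption, a small helper can confirm that a model directory contains a checkpoint before a run is launched. `latest_checkpoint` is a hypothetical name and is not part of this repository.

```python
import os
import re


def latest_checkpoint(model_dir):
    """Return the path of the highest-numbered '<epoch>.pth' file, or None."""
    if not os.path.isdir(model_dir):
        return None
    best = None
    for name in os.listdir(model_dir):
        match = re.fullmatch(r"(\d+)\.pth", name)
        if match:
            candidate = (int(match.group(1)), name)
            if best is None or candidate > best:
                best = candidate
    return os.path.join(model_dir, best[1]) if best else None


if __name__ == "__main__":
    # ROOT is read from the environment, falling back to the current directory.
    root = os.environ.get("ROOT", ".")
    for sub in ("pvnet/pvnet_cat", "ct/ct_cat"):
        path = latest_checkpoint(os.path.join(root, "data", "model", sub))
        print(sub, "->", path if path else "no checkpoint found")
```

Files whose names are not purely numeric are ignored, so notes or logs stored next to the checkpoints do not affect the result.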

Visualize:

python run.py --type visualize --cfg_file configs/tless/tless_01.yaml

# or

python run.py --type visualize --cfg_file configs/tless/tless_01.yaml test.det_gt True

- Prepare the data related to `cat`:

      python run.py --type linemod cls_type cat

- Train:

      python train_net.py --cfg_file configs/linemod.yaml model mycat cls_type cat

The training parameters can be found in project_structure.md.

Train:

python train_net.py --cfg_file configs/tless/tless_01.yaml

tensorboard --logdir data/record/pvnet

If set up correctly, the output will look like

An example dataset can be downloaded here.

- Create a dataset using https://github.com/F2Wang/ObjectDatasetTools

- Organize the dataset as the following structure:

      ├── /path/to/dataset
      │   ├── model.ply
      │   ├── camera.txt
      │   ├── diameter.txt  // the object diameter, whose unit is meter
      │   ├── rgb/
      │   │   ├── 0.jpg
      │   │   ├── ...
      │   │   ├── 1234.jpg
      │   │   ├── ...
      │   ├── mask/
      │   │   ├── 0.png
      │   │   ├── ...
      │   │   ├── 1234.png
      │   │   ├── ...
      │   ├── pose/
      │   │   ├── pose0.npy
      │   │   ├── ...
      │   │   ├── pose1234.npy
      │   │   ├── ...
      │   │   └──

- Create a soft link pointing to the dataset:

      ln -s /path/to/custom_dataset data/custom

- Process the dataset:

      python run.py --type custom

- Train:

      python train_net.py --cfg_file configs/custom.yaml train.batch_size 4

- Watch the training curve:

      tensorboard --logdir data/record/pvnet

- Visualize:

      python run.py --type visualize --cfg_file configs/custom.yaml

- Test:

      python run.py --type evaluate --cfg_file configs/custom.yaml
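Before running the processing step, the dataset layout described above can be checked with a short script. This is a sketch under stated assumptions: `indices` and `check_custom_dataset` are hypothetical helpers, and the check assumes that image `N.jpg`, mask `N.png`, and `poseN.npy` share the same index `N`, as the listing suggests. It does not inspect file contents or any additional polarized inputs.

```python
import os
import re


def indices(folder, pattern):
    """Collect the integer indices of files in `folder` whose names match `pattern`."""
    found = set()
    if os.path.isdir(folder):
        for name in os.listdir(folder):
            match = re.fullmatch(pattern, name)
            if match:
                found.add(int(match.group(1)))
    return found


def check_custom_dataset(root):
    """Return a list of human-readable problems; an empty list means none were found."""
    problems = []
    for name in ("model.ply", "camera.txt", "diameter.txt"):
        if not os.path.isfile(os.path.join(root, name)):
            problems.append("missing file: " + name)
    rgb = indices(os.path.join(root, "rgb"), r"(\d+)\.jpg")
    mask = indices(os.path.join(root, "mask"), r"(\d+)\.png")
    pose = indices(os.path.join(root, "pose"), r"pose(\d+)\.npy")
    if not rgb:
        problems.append("no images found in rgb/")
    for label, found in (("mask", mask), ("pose", pose)):
        missing = sorted(rgb - found)
        if missing:
            # Only the first ten missing indices are reported to keep output short.
            problems.append("{} missing for indices: {}".format(label, missing[:10]))
    return problems


if __name__ == "__main__":
    for problem in check_custom_dataset("data/custom") or ["layout looks complete"]:
        print(problem)
```

The script reads `data/custom`, the soft link created in the steps above, and reports every image index that lacks a matching mask or pose file.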


If you find this code useful for your research, please use the following BibTeX entry.

@inproceedings{peng2019pvnet,

title={PVNet: Pixel-wise Voting Network for 6DoF Pose Estimation},

author={Peng, Sida and Liu, Yuan and Huang, Qixing and Zhou, Xiaowei and Bao, Hujun},

booktitle={CVPR},

year={2019}

}

This work is affiliated with ZJU-SenseTime Joint Lab of 3D Vision, and its intellectual property belongs to SenseTime Group Ltd.

Copyright (c) ZJU-SenseTime Joint Lab of 3D Vision. All Rights Reserved.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.