Official code of COOPER and F-COOPER

Please follow SECOND for KITTI object detection. Dependencies: Python 3.6, PyTorch 1.0.0. Tested on Ubuntu 16.04.

It is recommended to use the Anaconda package manager.

conda install shapely fire pybind11 pyqtgraph tensorboardX protobuf

Follow the instructions in https://github.com/facebookresearch/SparseConvNet to install SparseConvNet.

Install Boost geometry:

sudo apt-get install libboost-all-dev

Add the following environment variables for numba.cuda; you can add them to ~/.bashrc:

export NUMBAPRO_CUDA_DRIVER=/usr/lib/x86_64-linux-gnu/libcuda.so

export NUMBAPRO_NVVM=/usr/local/cuda/nvvm/lib64/libnvvm.so

export NUMBAPRO_LIBDEVICE=/usr/local/cuda/nvvm/libdevice

Add ~/F-COOPER/ to PYTHONPATH.
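The setup above can be sanity-checked before running anything else. The following is a minimal sketch, not part of the repository: the helper name `check_environment` is hypothetical, and it only assumes the three variable names and the `~/F-COOPER` location mentioned above.

```python
import os
from pathlib import Path

# Variable names come from the setup steps above.
REQUIRED_VARS = ["NUMBAPRO_CUDA_DRIVER", "NUMBAPRO_NVVM", "NUMBAPRO_LIBDEVICE"]


def check_environment(env=None, repo_dir="~/F-COOPER"):
    """Return a list of problems; an empty list means every check passed."""
    env = os.environ if env is None else env
    problems = []
    for name in REQUIRED_VARS:
        value = env.get(name)
        if not value:
            problems.append(f"{name} is not set")
        elif not Path(value).exists():
            problems.append(f"{name} points to a missing path: {value}")
    # Compare resolved paths so "~/F-COOPER/" and "/home/user/F-COOPER" match.
    repo = Path(repo_dir).expanduser().resolve()
    entries = [
        Path(p).expanduser().resolve()
        for p in env.get("PYTHONPATH", "").split(os.pathsep)
        if p
    ]
    if repo not in entries:
        problems.append(f"{repo} is not on PYTHONPATH")
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print("WARNING:", problem)
```

If the script prints nothing, the variables are set, the files they point to exist, and the repository directory is on PYTHONPATH.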

- Dataset preparation

Download KITTI dataset and create some directories first:

    └── KITTI_DATASET_ROOT
           ├── training    <-- 7481 train data
           |   ├── image_2 <-- for visualization
           |   ├── calib
           |   ├── label_2
           |   ├── velodyne
           |   └── velodyne_reduced <-- empty directory
           └── testing     <-- 7518 test data
               ├── image_2 <-- for visualization
               ├── calib
               ├── velodyne
               └── velodyne_reduced <-- empty directory
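The directory layout above can also be created with a short script. This is a sketch under the assumption that the KITTI archives are extracted into the same root; `prepare_kitti_dirs` is a hypothetical helper, not something shipped with this repository.

```python
from pathlib import Path

# Sub-directories taken from the layout above; label_2 exists only for training.
LAYOUT = {
    "training": ["image_2", "calib", "label_2", "velodyne", "velodyne_reduced"],
    "testing": ["image_2", "calib", "velodyne", "velodyne_reduced"],
}


def prepare_kitti_dirs(root):
    """Create any missing directories and return those that are still empty."""
    root = Path(root)
    empty = []
    for split, subdirs in LAYOUT.items():
        for name in subdirs:
            path = root / split / name
            path.mkdir(parents=True, exist_ok=True)
            if not any(path.iterdir()):
                empty.append(path)
    return empty
```

Calling `prepare_kitti_dirs("KITTI_DATASET_ROOT")` after extracting the dataset should report only the two `velodyne_reduced` directories as empty; any other entry in the returned list points to data that still needs to be downloaded.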

- Create kitti infos:

python create_data.py create_kitti_info_file --data_path=KITTI_DATASET_ROOT

- Create reduced point cloud:

python create_data.py create_reduced_point_cloud --data_path=KITTI_DATASET_ROOT

- Create groundtruth-database infos:

python create_data.py create_groundtruth_database --data_path=KITTI_DATASET_ROOT

- Download T&J dataset

Use the Tom and Jerry (T&J) dataset from our COOPER and F-COOPER papers to overwrite the LiDAR frames in the velodyne and velodyne_reduced folders.

Modify the data information in ~/F-COOPER/COOPER/data/ImageSets/
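After overwriting the LiDAR frames, the split files in ImageSets need to list the frames that are actually present. The sketch below shows one way this might be done. It assumes that frames are stored as `.bin` files and that each ImageSets file holds one frame ID per line (the SECOND-style convention); both helper names are hypothetical.

```python
from pathlib import Path


def list_frame_ids(velodyne_dir):
    """Return the sorted file stems of all .bin LiDAR frames in a directory."""
    return sorted(p.stem for p in Path(velodyne_dir).glob("*.bin"))


def write_image_set(frame_ids, out_file):
    """Write one frame ID per line (the format assumed here for ImageSets)."""
    out_file = Path(out_file)
    out_file.parent.mkdir(parents=True, exist_ok=True)
    out_file.write_text("".join(f"{frame_id}\n" for frame_id in frame_ids))
    return len(frame_ids)
```

For example, `write_image_set(list_frame_ids("KITTI_DATASET_ROOT/training/velodyne"), "val.txt")` would write every available training frame to a file named `val.txt`; which frames belong in which split is a choice left to the user.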

- Modify config file

    train_input_reader: {
      ...
      database_sampler {
        database_info_path: "/path/to/kitti_dbinfos_train.pkl"
        ...
      }
      kitti_info_path: "/path/to/kitti_infos_train.pkl"
      kitti_root_path: "KITTI_DATASET_ROOT"
    }
    ...
    eval_input_reader: {
      ...
      kitti_info_path: "/path/to/kitti_infos_val.pkl"
      kitti_root_path: "KITTI_DATASET_ROOT"
    }

- Train:

python ./pytorch/train.py train --config_path=./configs/car.config --model_dir=/path/to/model_dir

You can download pretrained models in Car_detection. The car model corresponds to car.config.
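Before training, it may help to confirm that every path set in the config file exists. The following is a minimal sketch that scans the config text with a regular expression instead of parsing it properly; it assumes path fields are written as `name_path: "value"` as in the snippet above, and `find_missing_paths` is a hypothetical helper.

```python
import re
from pathlib import Path

# Matches lines such as:  kitti_info_path: "/path/to/kitti_infos_train.pkl"
PATH_FIELD = re.compile(r'^\s*(\w+_path)\s*:\s*"([^"]*)"', re.MULTILINE)


def find_missing_paths(config_text):
    """Return (field, path) pairs from the config whose path does not exist."""
    return [
        (field, value)
        for field, value in PATH_FIELD.findall(config_text)
        if not Path(value).exists()
    ]
```

Running `find_missing_paths(Path("./configs/car.config").read_text())` from the directory that contains `configs/` returns an empty list when all configured paths resolve. Relative paths are checked against the current working directory.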

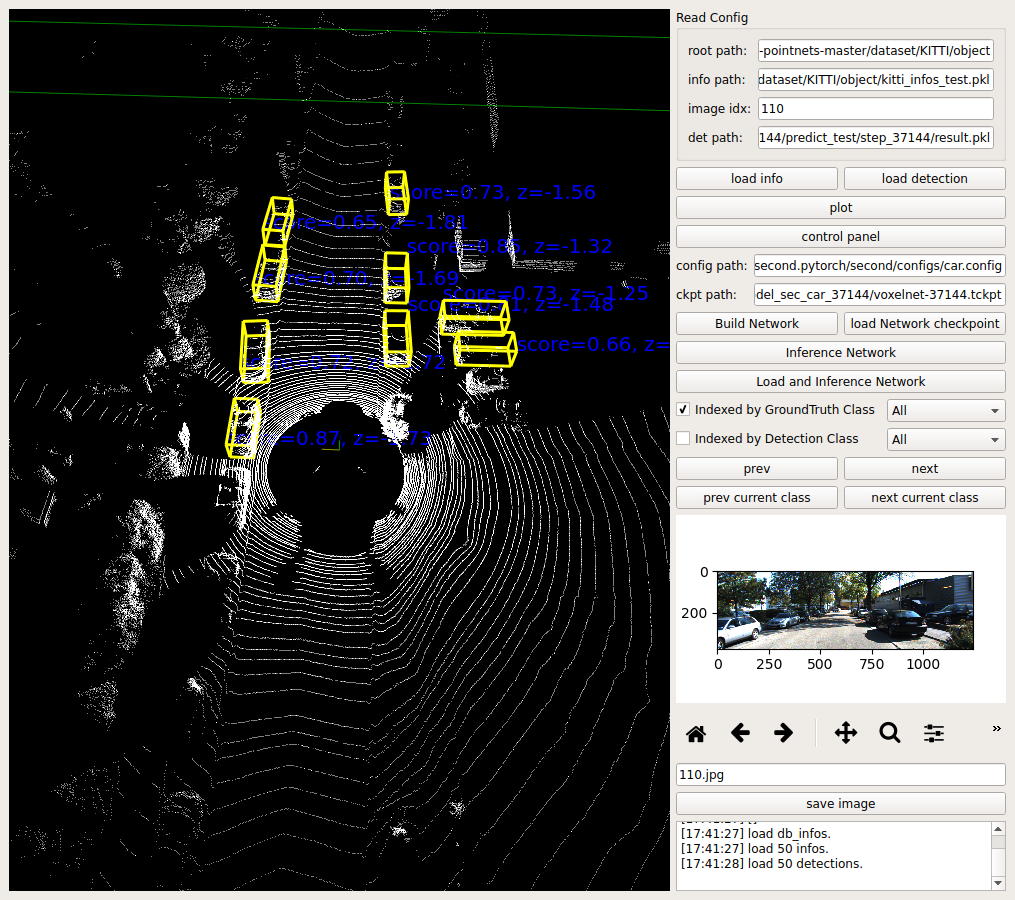

- Evaluate:

python ./pytorch/train.py evaluate --config_path=./configs/car.config --model_dir=/path/to/model_dir

Run python ./kittiviewer/viewer.py and check the accompanying picture to view the results.

If you use related work, please cite our papers:

@misc{1905.05265,
  Author = {Qi Chen and Sihai Tang and Qing Yang and Song Fu},
  Title = {Cooper: Cooperative Perception for Connected Autonomous Vehicles based on 3D Point Clouds},
  Year = {2019},
  Eprint = {arXiv:1905.05265},
}