POS4ASC - Exploiting Position Bias for Robust Aspect Sentiment Classification

- Code and data for the ACL 2021 paper "Exploiting Position Bias for Robust Aspect Sentiment Classification".

- Fang Ma, Chen Zhang, and Dawei Song.


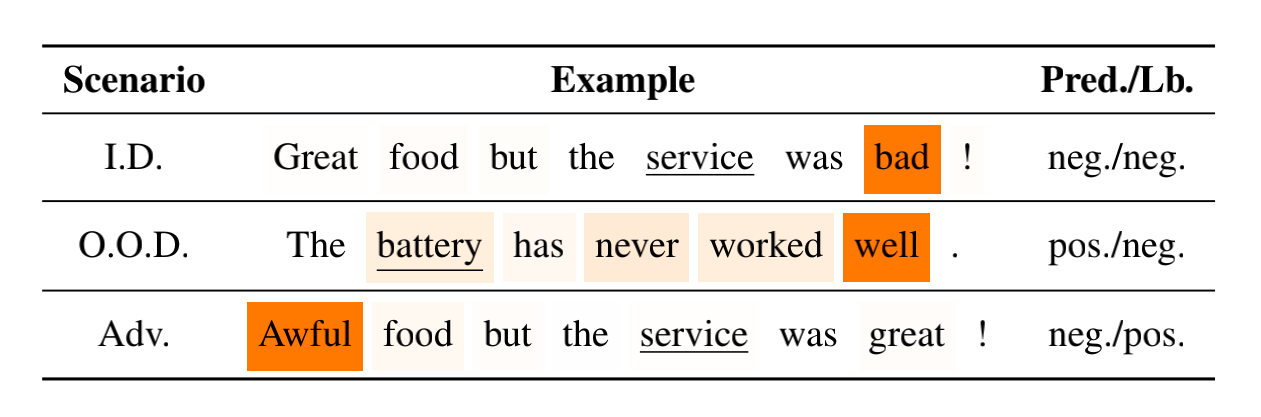

State-of-the-art aspect sentiment classification (ASC) models have recently been shown to lack robustness, particularly in two common scenarios: 1) the out-of-domain (O.O.D.) scenario and 2) the adversarial (Adv.) scenario. A case is given below:


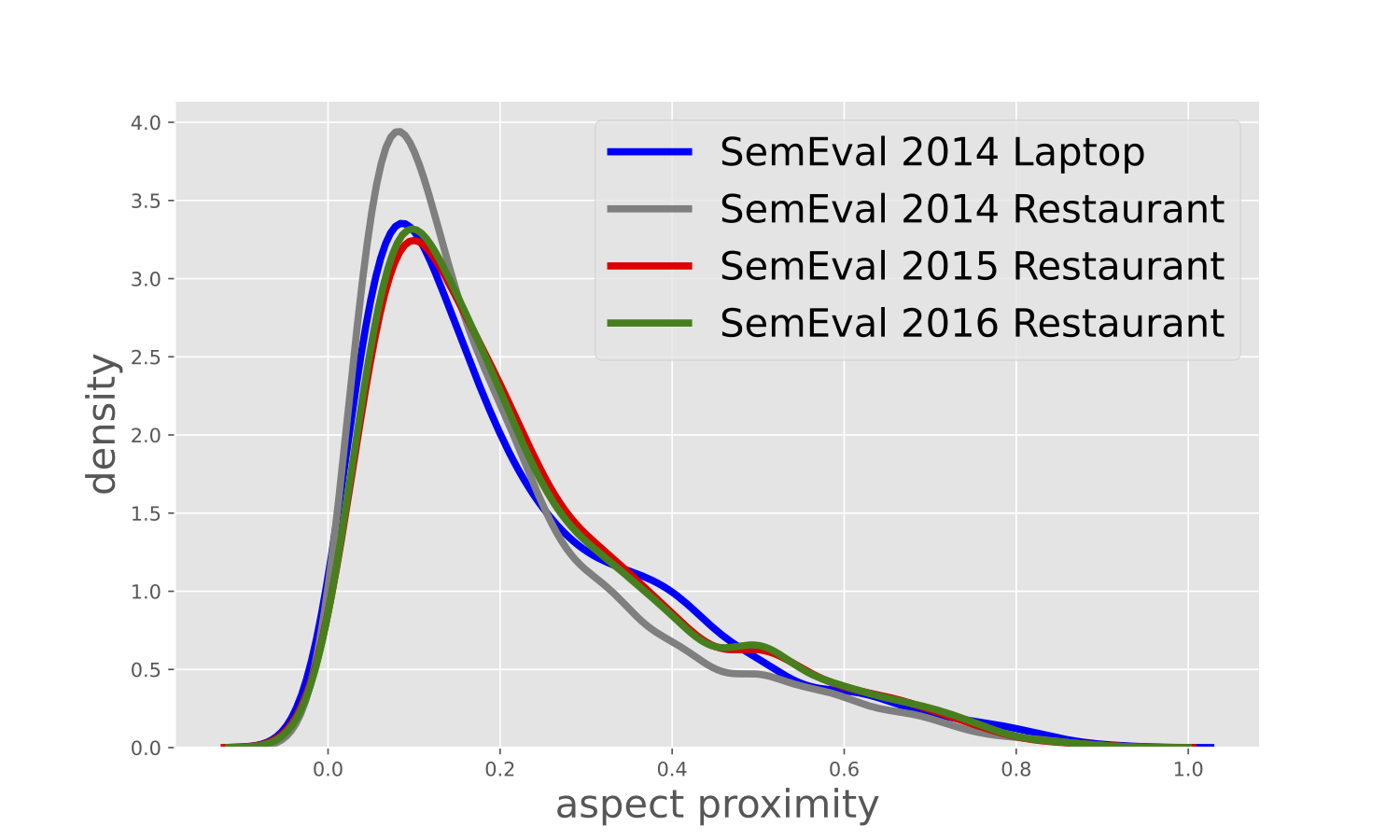

We hypothesize that position bias (i.e., words closer to the aspect of interest carry a higher degree of importance) is crucial for building more robust ASC models, because it reduces the probability of attending to the wrong words. The hypothesis is statistically supported by existing benchmarks, as shown below, where aspect proximity describes how close opinion words are positioned to their corresponding aspects:


Based on this observation, we propose two mechanisms for capturing position bias, namely position-biased weight and position-biased dropout, which can be flexibly injected into existing models to enhance word representations and thereby improve model robustness.
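To make the two mechanisms more concrete, here is a minimal sketch in plain Python. It is not the code in this repo: the linear decay formula, the function names (`position_weights`, `position_biased_weight`, `position_biased_dropout`), and the end-exclusive aspect span are all assumptions made for illustration, and the paper's exact formulation may differ. The idea shown is that words near the aspect get weights close to 1, and that the same weights can serve either as scaling factors or as keep probabilities.

```python
import random


def position_weights(seq_len, aspect_start, aspect_end):
    """Assumed linear decay: 1.0 on aspect tokens, smaller with distance.

    The aspect occupies token indices [aspect_start, aspect_end).
    """
    if not 0 <= aspect_start < aspect_end <= seq_len:
        raise ValueError("aspect span must lie inside the sentence")
    weights = []
    for i in range(seq_len):
        if i < aspect_start:
            dist = aspect_start - i          # tokens to the left of the aspect
        elif i >= aspect_end:
            dist = i - aspect_end + 1        # tokens to the right of the aspect
        else:
            dist = 0                         # aspect tokens themselves
        weights.append(1.0 - dist / seq_len)
    return weights


def position_biased_weight(embeddings, aspect_start, aspect_end):
    """Scale each word vector by its position weight."""
    w = position_weights(len(embeddings), aspect_start, aspect_end)
    return [[w_i * x for x in vec] for w_i, vec in zip(w, embeddings)]


def position_biased_dropout(embeddings, aspect_start, aspect_end, rng=None):
    """Keep each word vector with probability equal to its position weight.

    Dropped words are replaced by zero vectors (hypothetical choice).
    """
    rng = rng or random.Random()
    w = position_weights(len(embeddings), aspect_start, aspect_end)
    out = []
    for w_i, vec in zip(w, embeddings):
        keep = rng.random() < w_i            # always true when w_i == 1.0
        out.append(list(vec) if keep else [0.0] * len(vec))
    return out
```

In this sketch the aspect tokens always have weight 1.0, so they are never scaled down or dropped; only context words far from the aspect are attenuated or removed. Dropout of this kind would normally be applied during training only.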

- python 3.8

- pytorch 1.7.0

- nltk

- transformers

- Download pretrained GloVe embeddings with this link and extract `glove.840B.300d.txt` into the root directory.

- Train a model with a command similar to those in `run.sh` or `run_bert.sh`; optional arguments can be found in `run.py`.

  - An example of training with LSTM w/ position-biased weight:

    `python run.py --model_name lstm --train_data_name laptop --mode train --weight`

  - An example of training with RoBERTa w/ position-biased weight:

    `python run.py --model_name roberta --train_data_name laptop --mode train --learning_rate 1e-5 --weight_decay 0.0 --weight`

- Evaluate the model with a command similar to those in `run.sh` or `run_bert.sh`; optional arguments can be found in `run.py`.

  - An example of evaluation with LSTM w/ position-biased weight on the adversarial test set:

    `python run.py --model_name lstm --train_data_name laptop --test_data_name arts_laptop --mode evaluate --weight`

  - An example of evaluation with RoBERTa w/ position-biased weight on the adversarial test set:

    `python run.py --model_name roberta --train_data_name laptop --test_data_name arts_laptop --mode evaluate --weight`
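The training and evaluation commands above can also be assembled programmatically, for example to train a model and then evaluate it on the adversarial test set in one go. The following is only a sketch: `build_command` and `train_then_evaluate` are hypothetical helpers that are not part of this repo, and they use only the flags that appear in the examples above.

```python
import subprocess


def build_command(model_name, train_data, mode, test_data=None,
                  weight=True, extra_args=()):
    """Assemble a run.py invocation as an argument list."""
    cmd = ["python", "run.py",
           "--model_name", model_name,
           "--train_data_name", train_data]
    if test_data is not None:
        cmd += ["--test_data_name", test_data]
    cmd += ["--mode", mode]
    cmd += list(extra_args)                  # e.g. learning rate overrides
    if weight:
        cmd.append("--weight")               # position-biased weight
    return cmd


def train_then_evaluate(model_name, train_data, test_data, extra_train_args=()):
    """Run training, then evaluation; stop if either command fails."""
    commands = [
        build_command(model_name, train_data, "train",
                      extra_args=extra_train_args),
        build_command(model_name, train_data, "evaluate",
                      test_data=test_data),
    ]
    for cmd in commands:
        print(" ".join(cmd))
        subprocess.run(cmd, check=True)      # raises CalledProcessError on failure
```

Calling `train_then_evaluate("lstm", "laptop", "arts_laptop")` from the root directory would run the two LSTM commands shown above in sequence. For RoBERTa, the extra training flags can be passed as `extra_train_args=["--learning_rate", "1e-5", "--weight_decay", "0.0"]`.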

If you use the code in your paper, please kindly star this repo and cite our paper

    @inproceedings{ma-etal-2021-exploiting,
        title = "Exploiting Position Bias for Robust Aspect Sentiment Classification",
        author = "Ma, Fang and Zhang, Chen and Song, Dawei",
        booktitle = "Findings of ACL",
        year = "2021",
        address = "Online",
        publisher = "Association for Computational Linguistics",
    }

- For any issues or suggestions about this work, don't hesitate to create an issue or contact me directly via [email protected]!