Check out our Large Driving Model Series!

Xin Fei*, Wenzhao Zheng$\dagger$, Yueqi Duan, Wei Zhan, Masayoshi Tomizuka, Kurt Keutzer, Jiwen Lu

Tsinghua University, UC Berkeley

*Work done during an internship at UC Berkeley,

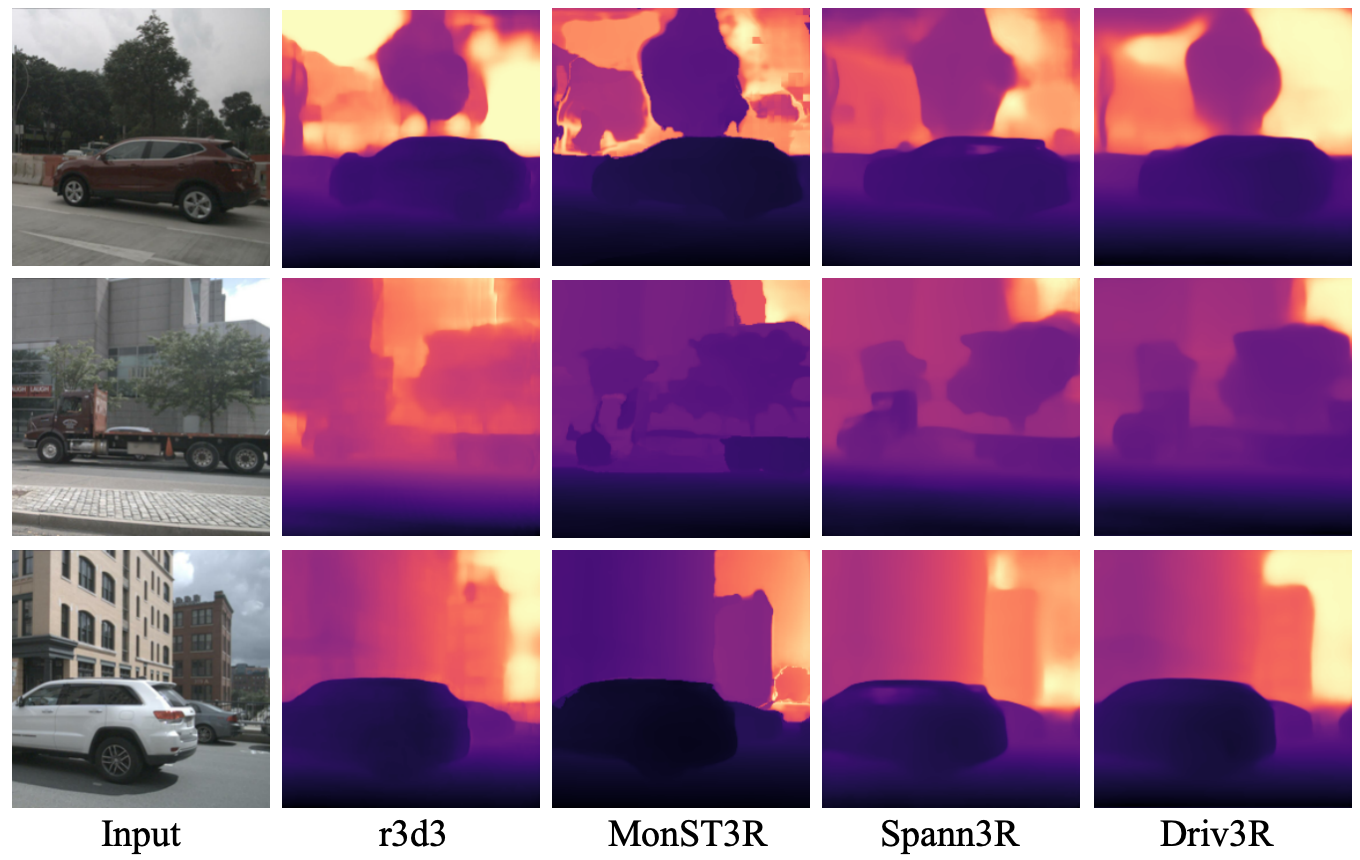

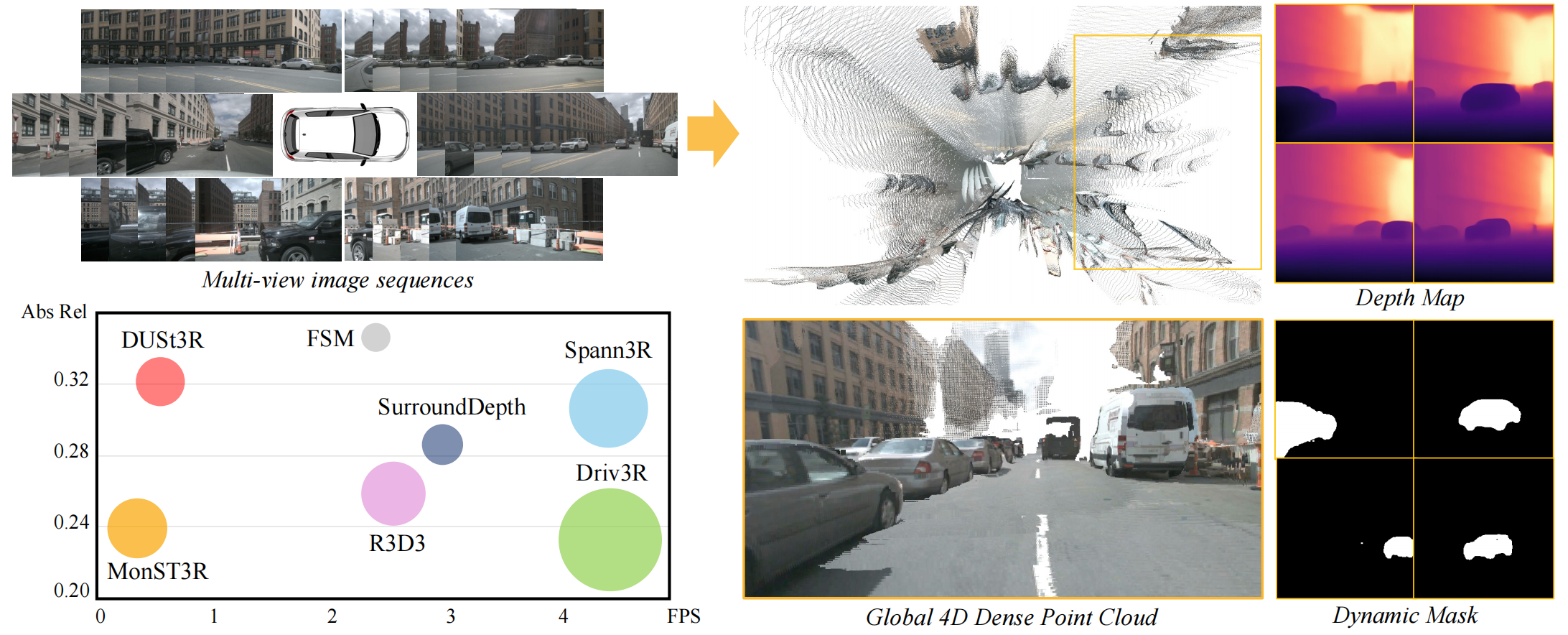

For real-time 4D reconstruction in autonomous driving, existing DUSt3R-based methods fall short because they model fast-moving dynamic objects inaccurately and rely on computationally expensive global alignment. In comparison, our Driv3R predicts per-frame pointmaps in a globally consistent coordinate system in an optimization-free manner. It can accurately reconstruct fast-moving objects in large-scale scenes with 15x faster inference than methods requiring global alignment.


- [2024/12/9] Code release.

- [2024/12/9] Paper released on arXiv.

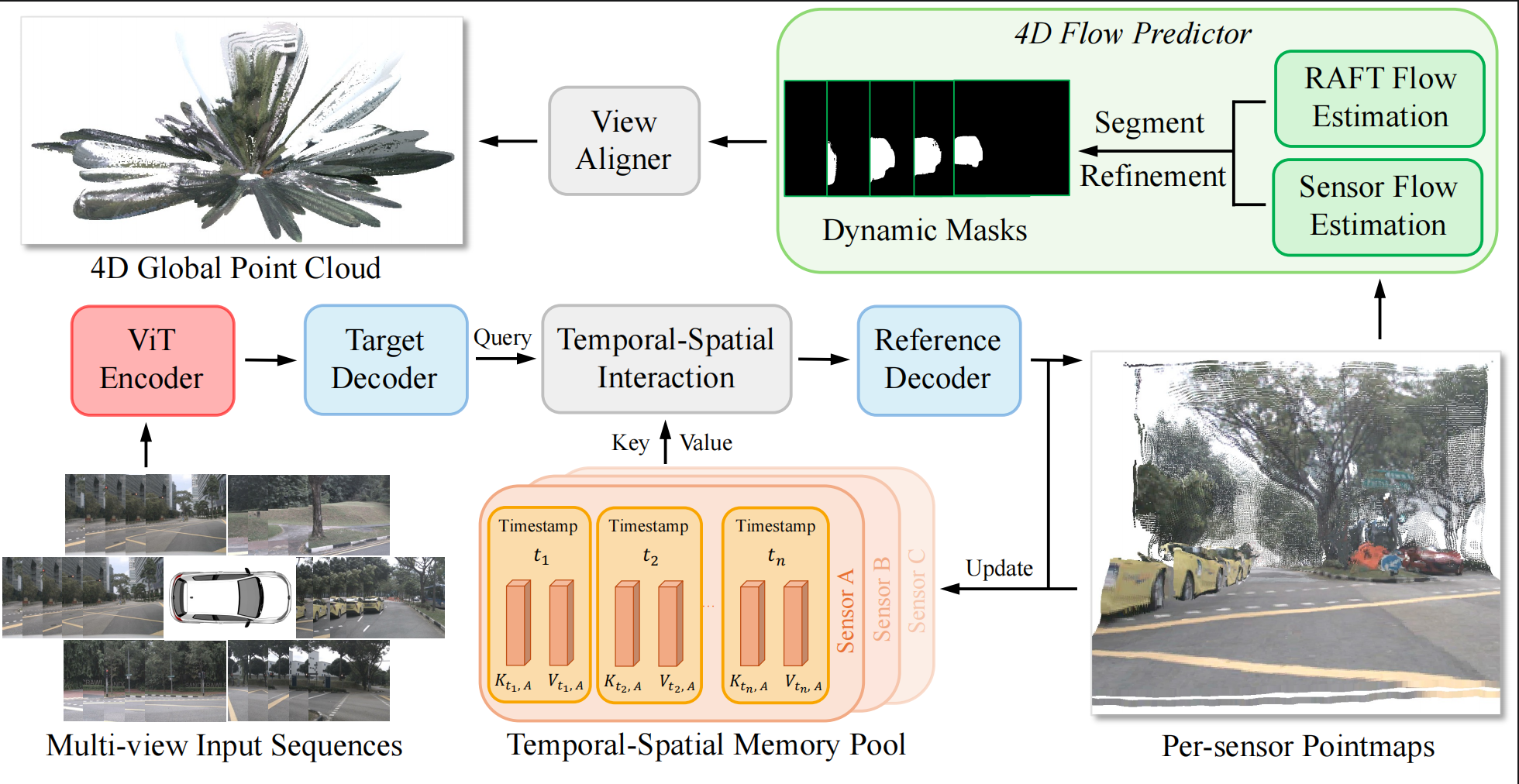

Pipeline of Driv3R: Given multi-view input sequences, we construct a sensor-wise memory pool for temporal and spatial information interactions. After obtaining per-frame point maps, the 4D flow predictor identifies the dynamic objects within the scene. Finally, we adopt an optimization-free multi-view alignment strategy to formulate the 4D global point cloud in the world coordinate system.
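To make the phrase "global point cloud in the world coordinate system" concrete, here is a minimal, standard-library-only sketch of what it means to place per-frame points in one shared frame. It assumes a known 4x4 camera-to-world pose for every frame. This is a simplification for illustration only, not the alignment strategy used inside Driv3R, and the names `to_world` and `accumulate` are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
# Assumed input: a 4x4 row-major camera-to-world matrix for each frame.
Pose = Sequence[Sequence[float]]


def to_world(points_cam: Sequence[Point], cam_to_world: Pose) -> List[Point]:
    """Apply a rigid 4x4 transform to every 3D point of one frame."""
    out = []
    for x, y, z in points_cam:
        out.append(tuple(
            cam_to_world[r][0] * x
            + cam_to_world[r][1] * y
            + cam_to_world[r][2] * z
            + cam_to_world[r][3]
            for r in range(3)
        ))
    return out


def accumulate(frames) -> List[Tuple[float, float, float, float]]:
    """Merge (timestamp, points, pose) frames into one list of (t, x, y, z)."""
    cloud = []
    for t, points_cam, pose in frames:
        for x, y, z in to_world(points_cam, pose):
            cloud.append((t, x, y, z))
    return cloud
```

Keeping the timestamp next to each point is what makes the result a 4D cloud: static structure overlaps across frames, while moving objects appear at different positions for different values of `t`.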

conda create -n driv3r python=3.11 cmake=3.14.0

conda activate driv3r

conda install pytorch torchvision pytorch-cuda=12.1 -c pytorch -c nvidia

Then, install the packages as shown in requirement.txt. Also, please compile the external sam2 package in ./third_party/sam2.
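After installation, a quick sanity check can confirm that the core packages are importable in the active environment. The sketch below uses only the standard library. The helper name `missing_modules` is hypothetical, and the list of modules to check is an assumption based on the install command above.

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Module names assumed from the conda install command above.
    missing = missing_modules(["torch", "torchvision"])
    print("missing:", missing if missing else "none")
```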

- Please download the nuScenes full dataset with the following structure:

├── datasets/nuscenes

│ ├── maps

│ ├── samples

│ ├── v1.0-test

│ ├── v1.0-trainval
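Before preprocessing, it can help to verify that the layout above is in place. The following is a small sketch using `pathlib`. The helper name `missing_subdirs` is hypothetical, and the expected folder names are copied from the tree above.

```python
from pathlib import Path

# Sub-directories listed in the dataset layout above.
EXPECTED = ("maps", "samples", "v1.0-test", "v1.0-trainval")


def missing_subdirs(root, expected=EXPECTED):
    """Return the expected sub-directories that do not exist under root."""
    root = Path(root)
    return [name for name in expected if not (root / name).is_dir()]


if __name__ == "__main__":
    missing = missing_subdirs("datasets/nuscenes")
    print("missing:", missing if missing else "none")
```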

Then, run the following command to generate train_metas.pkl and val_metas.pkl.

python preprocess/nuscenes.py

Note: If you wish to apply our model to other AD datasets, please add them under ./driv3r/datasets. We are currently in the process of integrating the Waymo, KITTI, and DDAD datasets into our codebase and will update it as soon as the integration is complete.

- For training only: To train the Driv3R model, you will need the inference results from the R3D3 model as supervision. Since the virtual environment of R3D3 is not compatible with CUDA 12.1, we strongly recommend completing this step in advance. After obtaining the depth predictions, please modify the depth_root parameter in script/train.sh.

To download all checkpoints required for Driv3R, please run the following command:

sh script/download.sh

- To train the Driv3R model on the nuScenes dataset, please run:

sh script/train.sh

Please modify the nproc_per_node and batch_size according to your GPUs.
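When changing these two values, it is useful to keep track of the resulting global batch size. The sketch below assumes that batch_size is a per-process (per-GPU) value, which is common in distributed PyTorch training but is not confirmed here for script/train.sh. The helper name `effective_batch_size` and the `nnodes` parameter are hypothetical.

```python
def effective_batch_size(nproc_per_node: int, batch_size: int, nnodes: int = 1) -> int:
    """Global batch size, ASSUMING batch_size is counted per process (per GPU)."""
    if min(nproc_per_node, batch_size, nnodes) < 1:
        raise ValueError("all arguments must be positive integers")
    return nproc_per_node * batch_size * nnodes


if __name__ == "__main__":
    # Example: 8 GPUs on one node, 4 samples per GPU.
    print(effective_batch_size(nproc_per_node=8, batch_size=4))
```

If fewer GPUs are available, raising batch_size in proportion keeps this product constant, memory permitting.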

- To generate the complete 4D point cloud in the global coordinate system, please run:

sh script/vis.sh

Note that you can modify sequence_length to control the length of the input sequences.

- To evaluate the performance of your pretrained model, please run:

sh script/eval.sh

If you find this project helpful, please consider citing the following paper:

@article{driv3r,

title={Driv3R: Learning Dense 4D Reconstruction for Autonomous Driving},

author={Fei, Xin and Zheng, Wenzhao and Duan, Yueqi and Zhan, Wei and Tomizuka, Masayoshi and Keutzer, Kurt and Lu, Jiwen},

journal={arXiv preprint arXiv:2412.06777},

year={2024}

}