FitDiT is designed for high-fidelity virtual try-on using Diffusion Transformers (DiT).

2024/12/20: The FitDiT model weight is available.2024/12/17: Inference code is released.2024/12/4: Our Online Demo is released.2024/11/25: Our Complex Virtual Dressing Dataset (CVDD) is released.2024/11/15: Our FitDiT paper is available.

Our algorithm is divided into two steps. The first step is to generate the mask of the try-on area, and the second step is to try-on in the mask area.

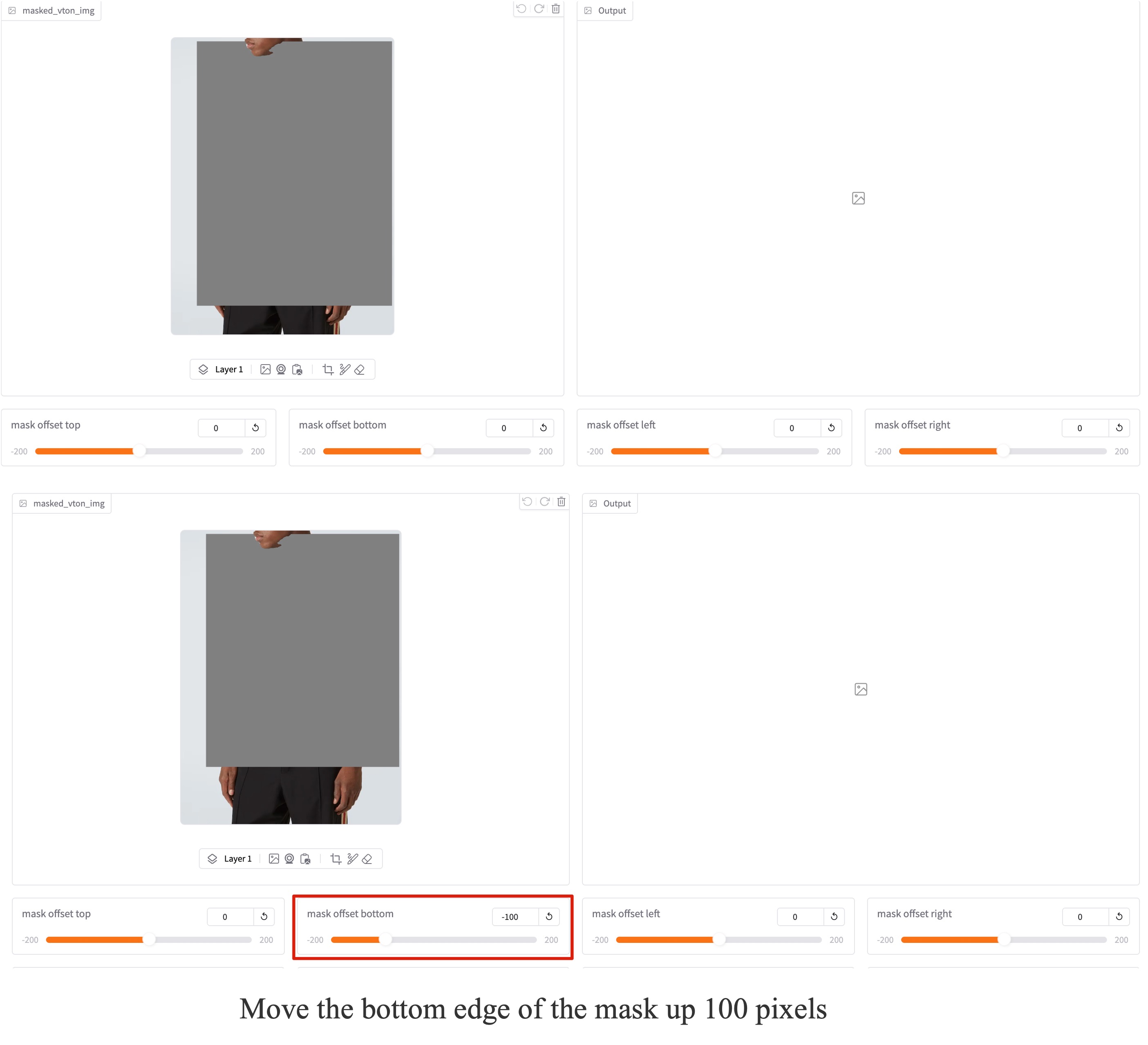

You can simpley get try-on mask by click Step1: Run Mask at the right side of gradio demo. If the automatically generated mask are not well covered the area where you want to try-on, you can either adjust the mask by:

-

Drag the slider of mask offset top, mask offset bottom, mask offset left or mask offset right and then click Step1: Run Mask button, this will re-generate mask.

-

Using the brush or eraser tool to edit the automatically generated mask

After generating a suitable mask, you can get the try-on results by click Step2: Run Try-on. In the Try-on resolution drop-down box, you can select a suitable processing resolution. In our online demo, the default resolution is 1152x1536, which means that the input model image and garment image will be pad and resized to this resolution before being fed into the model.

First apply access of FitDiT model weight, then clone model to local_model_dir

We test our model with following enviroment

torch==2.3.0

torchvision==0.18.0

diffusers==0.31.0

transformers==4.39.3

gradio==5.8.0

onnxruntime-gpu==1.20.1

# Run model with bf16 without any offload, fastest inference and most memory

python gradio_sd3.py --model_path local_model_dir

# Run model with fp16

python gradio_sd3.py --model_path local_model_dir --fp16

# Run model with fp16 and cpu offload, moderate inference and moderate memory

python gradio_sd3.py --model_path local_model_dir --fp16 --offload

# Run model with fp16 and aggressive cpu offload, slowest inference and less memory

python gradio_sd3.py --model_path local_model_dir --fp16 --aggressive_offload

This model can only be used for non-commercial use. If you want to use it for commercial use or expect better results, please contact me at [email protected]

If you find our work helpful for your research, please consider citing our work.

@misc{jiang2024fitditadvancingauthenticgarment,

title={FitDiT: Advancing the Authentic Garment Details for High-fidelity Virtual Try-on},

author={Boyuan Jiang and Xiaobin Hu and Donghao Luo and Qingdong He and Chengming Xu and Jinlong Peng and Jiangning Zhang and Chengjie Wang and Yunsheng Wu and Yanwei Fu},

year={2024},

eprint={2411.10499},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2411.10499},

}