[2025/02/18] Training/evaluation code and data preparation released!

[2025/01/18] Model released on 🤗HuggingFace!

[2024/11/29] Inference code and weights released. Try running inference on your own images!

- Provide inference code, support image folder input

- Provide code and data for training or evaluation

We tested with Python 3.11, PyTorch 2.4.1, and CUDA 12.1.

- Clone the repo and create a conda environment.

git clone https://github.com/ChiSu001/sat-hmr.git

cd sat-hmr

conda create -n sathmr python=3.11 -y

conda activate sathmr

# Install PyTorch. We recommend following the [official instructions](https://pytorch.org/) and adapting the CUDA version to yours.

conda install pytorch==2.4.1 torchvision==0.19.1 torchaudio==2.4.1 pytorch-cuda=12.1 -c pytorch -c nvidia

# Install xFormers. We recommend following the [official instructions](https://github.com/facebookresearch/xformers) and adapting the CUDA version to yours.

pip install -U xformers==0.0.28.post1 --index-url https://download.pytorch.org/whl/cu121

- Install other dependencies.

pip install -r requirements.txt

- You may need to modify the chumpy package to avoid errors. For detailed instructions, please check this guidance.
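
After installation, it can help to confirm which versions actually ended up in the environment. The following is a minimal sketch using only the Python standard library. The helper name `installed_versions` is hypothetical and not part of the repository, and the sketch assumes the packages are registered under the distribution names torch, torchvision, and xformers.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(names):
    """Map each distribution name to its installed version, or None if absent."""
    found = {}
    for name in names:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    # This README was tested with PyTorch 2.4.1 and xFormers 0.0.28.post1.
    for name, ver in installed_versions(["torch", "torchvision", "xformers"]).items():
        print(f"{name}: {ver or 'not installed'}")
```

If a package is reported as not installed, or its version differs from the tested one, revisit the corresponding install command above before continuing.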

- Download SMPL-related weights.

  - Download basicModel_f_lbs_10_207_0_v1.0.0.pkl, basicModel_m_lbs_10_207_0_v1.0.0.pkl, and basicModel_neutral_lbs_10_207_0_v1.0.0.pkl from here (female & male) and here (neutral) to ${Project}/weights/smpl_data/smpl. Please rename them as SMPL_FEMALE.pkl, SMPL_MALE.pkl, and SMPL_NEUTRAL.pkl, respectively.

  - Download others from Google drive and put them to ${Project}/weights/smpl_data/smpl.

- Download DINOv2 pretrained weights from their official repository. We use ViT-B/14 distilled (without registers). Please put dinov2_vitb14_pretrain.pth to ${Project}/weights/dinov2. These weights will be used to initialize our encoder. You can skip this step if you are not going to train SAT-HMR.

- Download pretrained weights for inference and evaluation from Google drive or 🤗HuggingFace. Please put them to ${Project}/weights/sat_hmr.
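
The SMPL renaming step can be scripted. The snippet below is a small sketch, not part of the repository: the helper name `rename_smpl_files` is hypothetical, and it assumes the three original SMPL files have already been placed in the target folder.

```python
from pathlib import Path

# Original SMPL file names mapped to the names this README expects.
SMPL_RENAMES = {
    "basicModel_f_lbs_10_207_0_v1.0.0.pkl": "SMPL_FEMALE.pkl",
    "basicModel_m_lbs_10_207_0_v1.0.0.pkl": "SMPL_MALE.pkl",
    "basicModel_neutral_lbs_10_207_0_v1.0.0.pkl": "SMPL_NEUTRAL.pkl",
}


def rename_smpl_files(smpl_dir):
    """Rename downloaded SMPL models in place and return the new names created."""
    smpl_dir = Path(smpl_dir)
    renamed = []
    for old_name, new_name in SMPL_RENAMES.items():
        src = smpl_dir / old_name
        dst = smpl_dir / new_name
        # Skip files that are missing or already renamed, so re-running is safe.
        if src.is_file() and not dst.exists():
            src.rename(dst)
            renamed.append(new_name)
    return renamed


if __name__ == "__main__":
    # Assumes the script is run from the project root (${Project}).
    print(rename_smpl_files("weights/smpl_data/smpl"))
```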

Now the weights directory structure should be like this.

${Project}
|-- weights
    |-- dinov2
    |   `-- dinov2_vitb14_pretrain.pth
    |-- sat_hmr
    |   |-- sat_644_3dpw.pth
    |   |-- sat_644_agora.pth
    |   `-- sat_644.pth
    `-- smpl_data
        `-- smpl
            |-- body_verts_smpl.npy
            |-- J_regressor_h36m_correct.npy
            |-- SMPL_FEMALE.pkl
            |-- SMPL_MALE.pkl
            |-- smpl_mean_params.npz
            `-- SMPL_NEUTRAL.pkl
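
To check the layout before running anything, a short script can compare the weights folder against the tree above. This is a minimal sketch with a hypothetical helper `missing_weights`; it is not shipped with the repository. Note that the DINOv2 file will be reported as missing if you skipped that download because you do not plan to train.

```python
from pathlib import Path

# Files listed in the directory tree above, relative to ${Project}/weights.
EXPECTED_WEIGHTS = [
    "dinov2/dinov2_vitb14_pretrain.pth",
    "sat_hmr/sat_644_3dpw.pth",
    "sat_hmr/sat_644_agora.pth",
    "sat_hmr/sat_644.pth",
    "smpl_data/smpl/body_verts_smpl.npy",
    "smpl_data/smpl/J_regressor_h36m_correct.npy",
    "smpl_data/smpl/SMPL_FEMALE.pkl",
    "smpl_data/smpl/SMPL_MALE.pkl",
    "smpl_data/smpl/smpl_mean_params.npz",
    "smpl_data/smpl/SMPL_NEUTRAL.pkl",
]


def missing_weights(weights_dir):
    """Return the expected files that are not present under weights_dir."""
    weights_dir = Path(weights_dir)
    return [rel for rel in EXPECTED_WEIGHTS if not (weights_dir / rel).is_file()]


if __name__ == "__main__":
    # Assumes the script is run from the project root (${Project}).
    missing = missing_weights("weights")
    if missing:
        print("Missing files:")
        for rel in missing:
            print("  weights/" + rel)
    else:
        print("All expected weight files are present.")
```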

Please follow this guidance to prepare datasets and annotations. You can skip this step if you are not going to train or evaluate SAT-HMR.

We provide the script ${Project}/debug_data.py to verify that the data has been correctly prepared and visualize the GTs:

python debug_data.py

Visualization results will be saved in ${Project}/datasets_visualization.

We provide some demo images in ${Project}/demo. You can run SAT-HMR on all images on a single GPU via:

python main.py --mode infer --cfg demo

Results with overlaid meshes will be saved in ${Project}/demo_results.

You can specify your own inference configuration by modifying ${Project}/configs/run/demo.yaml:

- input_dir specifies the input image folder.

- output_dir specifies the output folder.

- conf_thresh specifies a list of confidence thresholds used for detection. SAT-HMR will run inference with each threshold in the list in turn.

- infer_batch_size specifies the batch size used for inference (on a single GPU).
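
To illustrate how these four options relate, here is a rough Python sketch. Only the option names come from the description above; the values, the accepted image extensions, the helper names, and the loop structure (one pass over all batches per threshold) are assumptions made for illustration and may differ from what main.py actually does.

```python
from pathlib import Path

# Hypothetical values; only the four option names come from demo.yaml.
config = {
    "input_dir": "demo",
    "output_dir": "demo_results",
    "conf_thresh": [0.3, 0.5],
    "infer_batch_size": 4,
}

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}  # assumed set of accepted extensions


def list_images(input_dir):
    """Return image paths in input_dir, sorted for a reproducible order."""
    return sorted(
        p for p in Path(input_dir).iterdir() if p.suffix.lower() in IMAGE_SUFFIXES
    )


def make_batches(items, batch_size):
    """Split items into consecutive chunks of at most batch_size elements."""
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]


def plan_inference(cfg):
    """Yield (threshold, batch) pairs, visiting every batch once per threshold."""
    batches = make_batches(list_images(cfg["input_dir"]), cfg["infer_batch_size"])
    for thresh in cfg["conf_thresh"]:
        for batch in batches:
            yield thresh, batch
```

Under these assumptions, a folder of 5 images with a batch size of 2 and two thresholds yields 3 batches per threshold, so 6 (threshold, batch) pairs in total. Lowering infer_batch_size reduces GPU memory use at the cost of more batches.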

You can also try distributed inference on multiple GPUs if your input folder contains a large number of images. Since we use 🤗 Accelerate to launch our distributed configuration, you may first need to configure 🤗 Accelerate for your system's distributed setup. To do so, run the following command and answer the prompts:

accelerate config

Then run:

accelerate launch main.py --mode infer --cfg demo
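
Conceptually, distributed inference splits the image list across processes so that each GPU handles a disjoint subset. The snippet below is a simplified illustration of that idea using a hypothetical helper `shard_for_process`; it is not how 🤗 Accelerate or SAT-HMR implement sharding internally.

```python
def shard_for_process(items, process_index, num_processes):
    """Return the items assigned to one process using a strided split."""
    if not 0 <= process_index < num_processes:
        raise ValueError("process_index must be in [0, num_processes)")
    return items[process_index::num_processes]


if __name__ == "__main__":
    images = [f"img_{i:03d}.jpg" for i in range(10)]  # hypothetical file names
    for rank in range(4):
        print(rank, shard_for_process(images, rank, 4))
```

With 10 images and 4 processes, two processes receive 3 images and two receive 2, so the benefit of adding GPUs grows with the size of the input folder.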

We use 🤗 Accelerate to launch our distributed configuration, so you may first need to configure 🤗 Accelerate for your system's distributed setup. To do so, run the following command and answer the prompts:

accelerate config

To train on all datasets, run:

accelerate launch main.py --mode train --cfg train_all

Note: Training on AGORA and BEDLAM datasets is sufficient to reproduce our results on the AGORA Leaderboard. If you wish to save time and not train on all datasets, you can modify L39-40 in the ${Project}/run/train_all.yaml config file.

Training logs and checkpoints will be saved in the ${Project}/outputs/logs and ${Project}/outputs/ckpts directories, respectively.

You can monitor the training progress using TensorBoard. To start TensorBoard, run:

tensorboard --logdir=${Project}/outputs/logs

We provide code for evaluating on AGORA, BEDLAM and 3DPW. Evaluation results will be saved in ${Project}/results/${cfg_name}/evaluation.

# Evaluate on AGORA-val and BEDLAM-val

# AGORA-val: F1: 0.95 MPJPE: 63.0 MVE: 59.0

# BEDLAM-val: F1: 0.98 MPJPE: 48.7 MVE: 46.2

python main.py --mode eval --cfg eval_ab

# Evaluate on 3DPW-test

# 3DPW-test: MPJPE: 63.6 PA-MPJPE: 41.6 MVE: 73.7

python main.py --mode eval --cfg eval_3dpw

# Evaluate on AGORA-test

# AGORA-test: F1: 0.95 MPJPE: 67.9 MVE: 63.3

# This will generate a zip file in `${Project}/results/test_agora/evaluation/agora_test/thresh_0.5`

# which can be submitted to [AGORA Leaderboard](https://agora-evaluation.is.tuebingen.mpg.de/)

python main.py --mode eval --cfg test_agora
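
For readers unfamiliar with the metrics quoted in the comments above: MPJPE (mean per-joint position error) is the average Euclidean distance between predicted and ground-truth 3D joints, conventionally reported in millimetres. PA-MPJPE applies a Procrustes alignment before measuring the same error, and MVE applies the same distance to mesh vertices instead of joints. The sketch below is a plain-Python illustration of MPJPE only, not the repository's evaluation code; the function name and the optional root-alignment step are assumptions.

```python
import math


def mpjpe(pred, gt, root_index=None):
    """Mean Euclidean distance between corresponding 3D joints.

    pred, gt: sequences of (x, y, z) tuples of equal length.
    root_index: if given, both skeletons are translated so that this joint
    sits at the origin before the error is measured (an assumed convention).
    """
    if len(pred) != len(gt) or not pred:
        raise ValueError("pred and gt must be non-empty and of equal length")
    if root_index is not None:
        pred_root, gt_root = pred[root_index], gt[root_index]
        pred = [tuple(a - b for a, b in zip(joint, pred_root)) for joint in pred]
        gt = [tuple(a - b for a, b in zip(joint, gt_root)) for joint in gt]
    return sum(math.dist(p, g) for p, g in zip(pred, gt)) / len(pred)


if __name__ == "__main__":
    pred = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    gt = [(0.0, 0.0, 0.0), (1.0, 3.0, 4.0)]
    print(mpjpe(pred, gt))  # per-joint errors are 0 and 5, so the mean is 2.5
```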

We recommend using a single GPU for evaluation as it provides more accurate results. However, we also provide code for distributed evaluation to obtain results faster.

# Multi-GPU configuration

accelerate config

# Evaluation

accelerate launch main.py --mode eval --cfg ${cfg_name}

If you find this code useful for your research, please consider citing our paper:

@article{su2024sathmr,

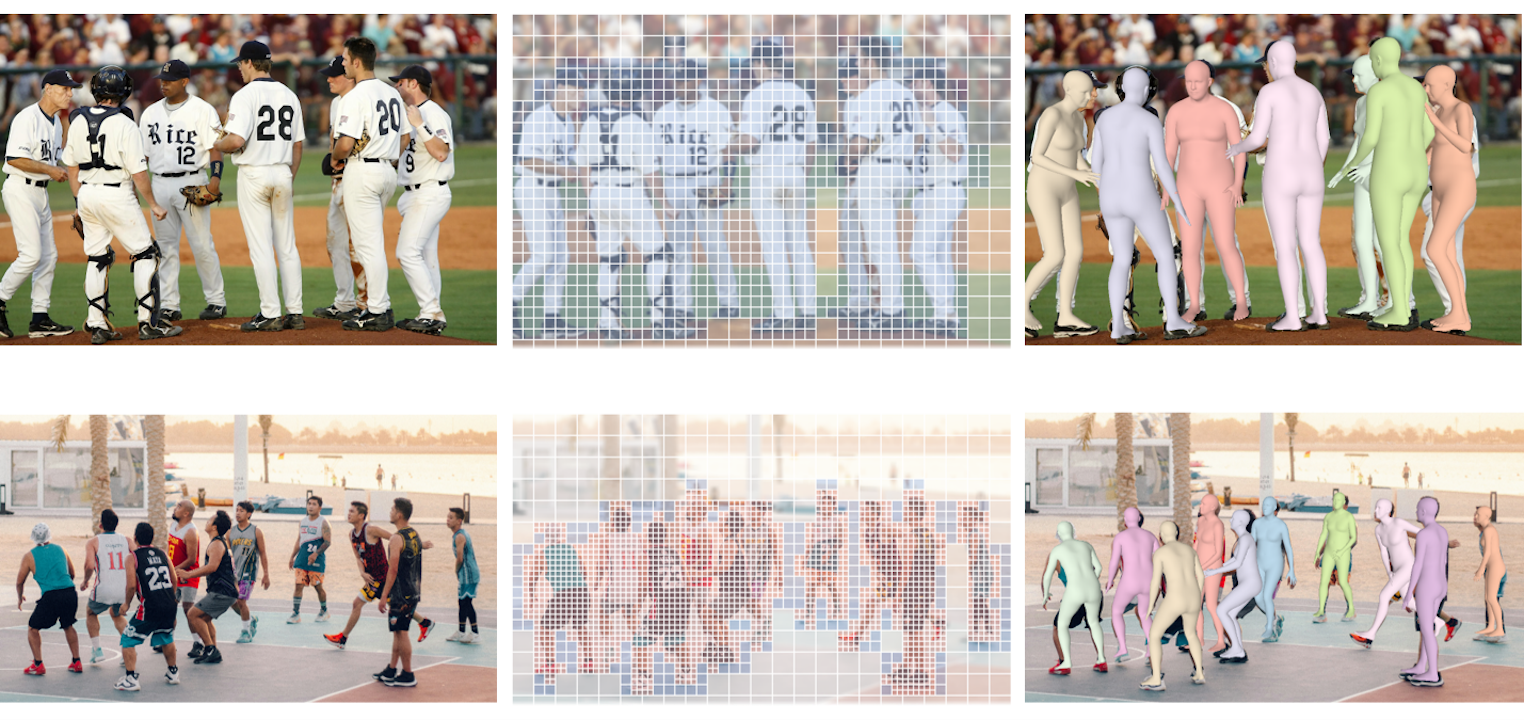

title={SAT-HMR: Real-Time Multi-Person 3D Mesh Estimation via Scale-Adaptive Tokens},

author={Su, Chi and Ma, Xiaoxuan and Su, Jiajun and Wang, Yizhou},

journal={arXiv preprint arXiv:2411.19824},

year={2024}

}

This repo is built on the excellent work of DINOv2, DAB-DETR, DINO, and 🤗 Accelerate. Thanks to these great projects.