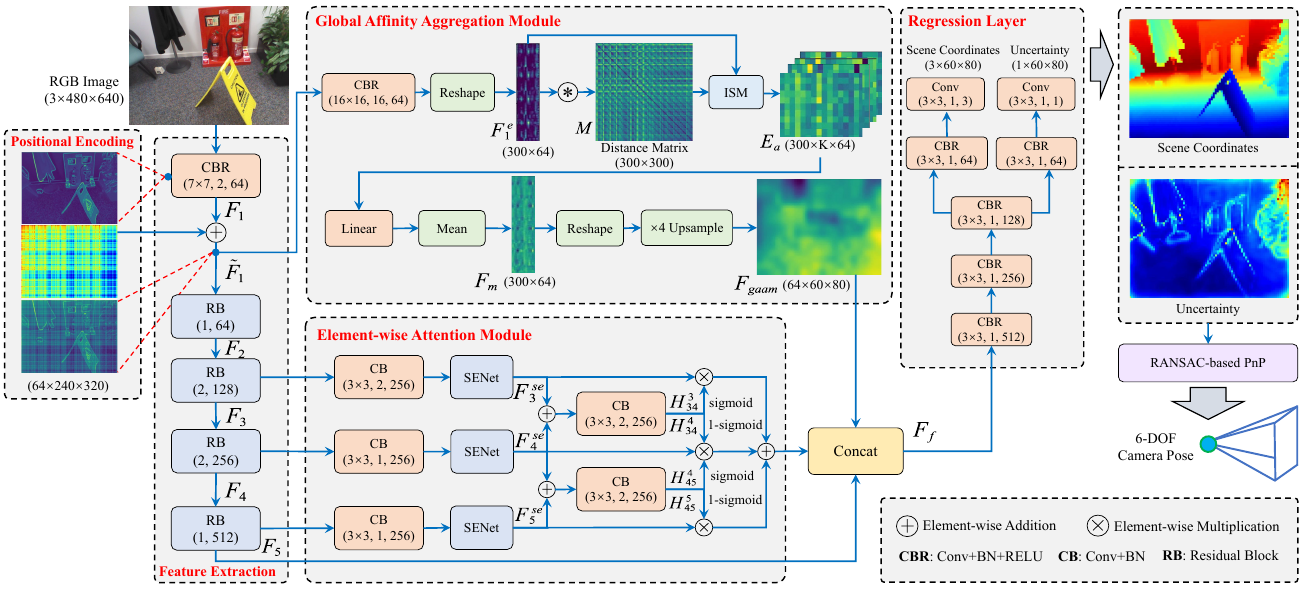

This is the PyTorch implementation of our paper "EAAINet: An Element-wise Attention Network with Global Affinity Information for Accurate Indoor Visual Localization".

First, set up a Python 3 environment from requirement.txt:

pip3 install -r requirement.txt

Next, build the Cython module to install the PnP solver:

cd ./pnpransac

rm -rf build

python setup.py build_ext --inplace

We use two standard datasets (i.e., 7-Scenes and 12-Scenes) to evaluate our method.

- 7-Scenes: The 7-Scenes dataset can be downloaded from 7-Scenes.

- 12-Scenes: The 12-Scenes dataset can be downloaded from 12-Scenes.

The pre-trained models can be downloaded from 7-Scenes and 12-Scenes. Then, run tran_7S.sh or train_12S.sh to evaluate EAAINet on the 7-Scenes or 12-Scenes dataset, respectively.

bash tran_7S.sh

Note that the path of the downloaded models must be set in tran_7S.sh or train_12S.sh before running. The meaning of each part of tran_7S.sh or train_12S.sh is as follows:

python main.py --model multi_scale_trans -dataset [7S/12S] --scene [scene name, such as chess] --data_path ./data/ --flag test --resume [model_path]

To train EAAINet, run tran_7S.sh or train_12S.sh with the training flags. The meaning of each part of tran_7S.sh or train_12S.sh is as follows:

python main.py --model multi_scale_trans -dataset [7S/12S] --scene [scene name, such as chess] --data_path ./data/ --flag train --n_epoch 500 --savemodel_path [save_path]

The PnP-RANSAC pose solver is taken from HSCNet. The sensor calibration file and the normalization translation files for the 7-Scenes dataset are from DSAC.
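The evaluation and training commands described above take one scene at a time. As a minimal sketch, the following helper assembles the same argument list for every 7-Scenes scene so the commands can be printed or pasted into a shell script. The flags are copied verbatim from this README (including the single-dash `-dataset`). The helper names (`build_command`, `evaluation_commands`, `training_commands`) and the directory layouts `<model_dir>/<scene>.pth` and `<save_dir>/<scene>` are hypothetical and should be adapted to wherever the models are actually stored.

```python
import shlex

# Scene names of the public 7-Scenes dataset.
SCENES_7S = ["chess", "fire", "heads", "office", "pumpkin", "redkitchen", "stairs"]


def build_command(dataset, scene, flag, data_path="./data/",
                  resume=None, n_epoch=None, savemodel_path=None):
    """Return the main.py argument list for one scene.

    Flag names are copied from the README; nothing is executed here.
    """
    cmd = ["python", "main.py", "--model", "multi_scale_trans",
           "-dataset", dataset, "--scene", scene,
           "--data_path", data_path, "--flag", flag]
    if resume is not None:
        cmd += ["--resume", resume]
    if n_epoch is not None:
        cmd += ["--n_epoch", str(n_epoch)]
    if savemodel_path is not None:
        cmd += ["--savemodel_path", savemodel_path]
    return cmd


def evaluation_commands(model_dir):
    # Assumption: one checkpoint per scene at <model_dir>/<scene>.pth
    return [build_command("7S", scene, "test", resume=f"{model_dir}/{scene}.pth")
            for scene in SCENES_7S]


def training_commands(save_dir, n_epoch=500):
    # Assumption: one output directory per scene at <save_dir>/<scene>
    return [build_command("7S", scene, "train", n_epoch=n_epoch,
                          savemodel_path=f"{save_dir}/{scene}")
            for scene in SCENES_7S]


if __name__ == "__main__":
    # Print shell-ready evaluation commands, one per scene.
    for cmd in evaluation_commands("./models/7S"):
        print(shlex.join(cmd))
```

Returning a list of arguments rather than a single string keeps each value intact if a path contains spaces, and the list can be handed directly to `subprocess.run` once the paths have been checked.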